October 21 news: Tiantai Technology recently launched a new audio-visual robot that moves freely around the home and responds instantly when called.

Core Mobility: Autonomous Navigation & Flexible Maneuvering for Home Scenarios

Equipped with an autonomous navigation system, the audio-visual robot identifies obstacles in the home and maneuvers through living rooms, bedrooms, studies, and other spaces without manual assistance. It uses Tiantai’s in-house “dual-laser SLAM + servo-driven wheels” technology, which lets it adapt its navigation to the environment and recognize people so that it can follow them.

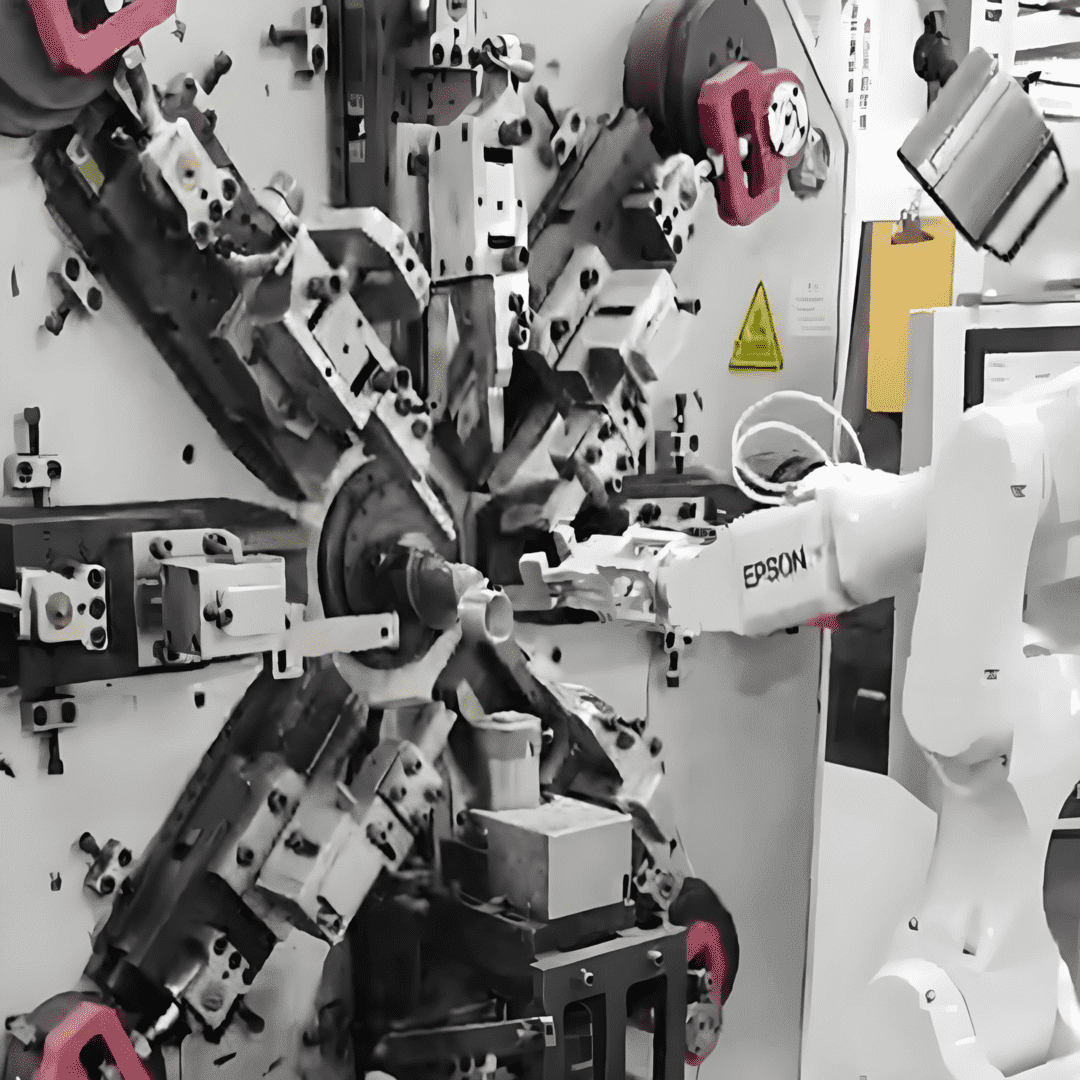

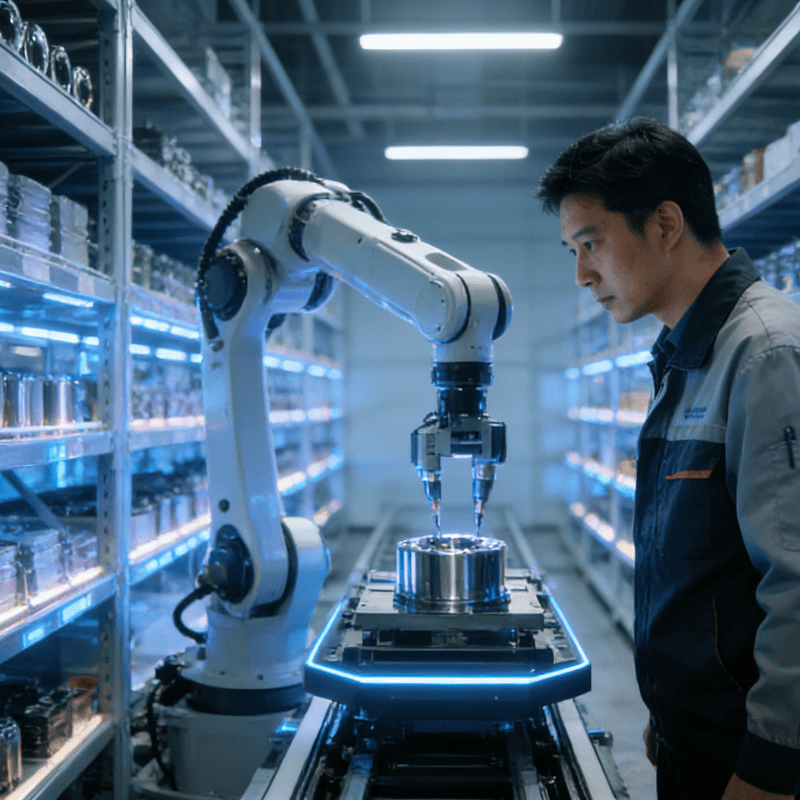

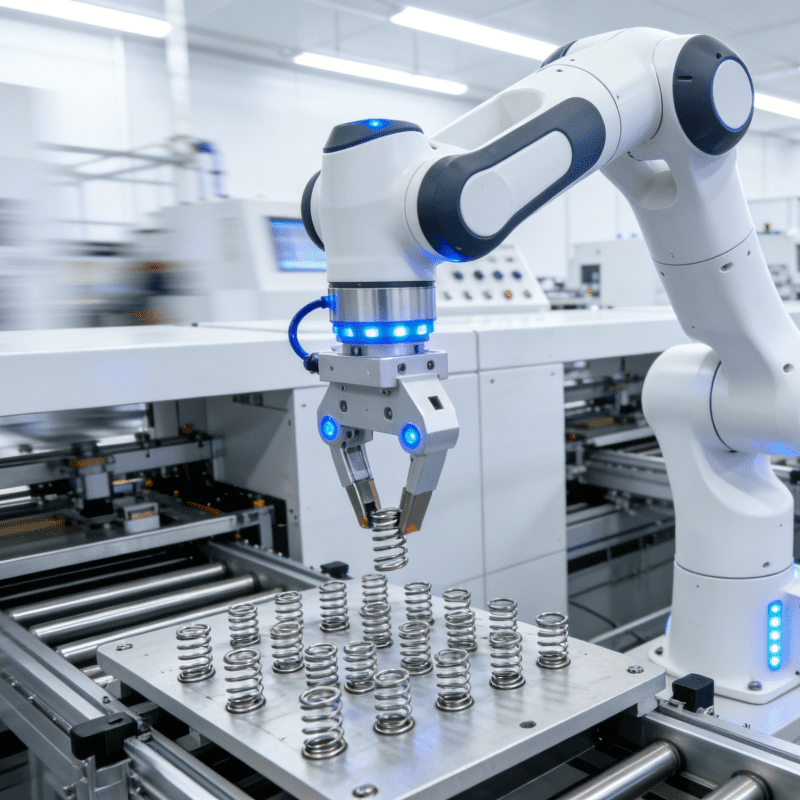

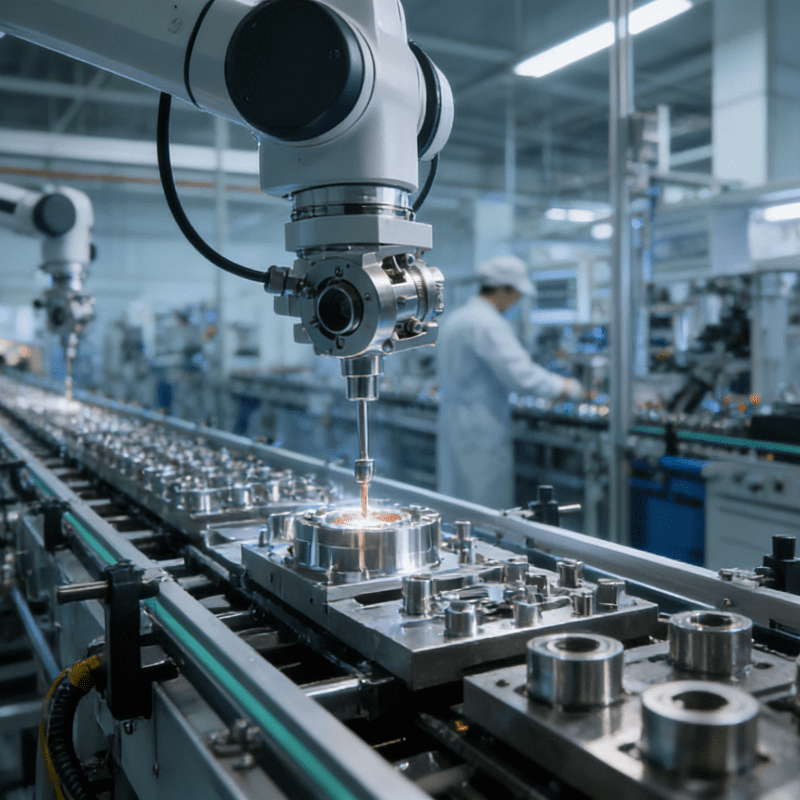

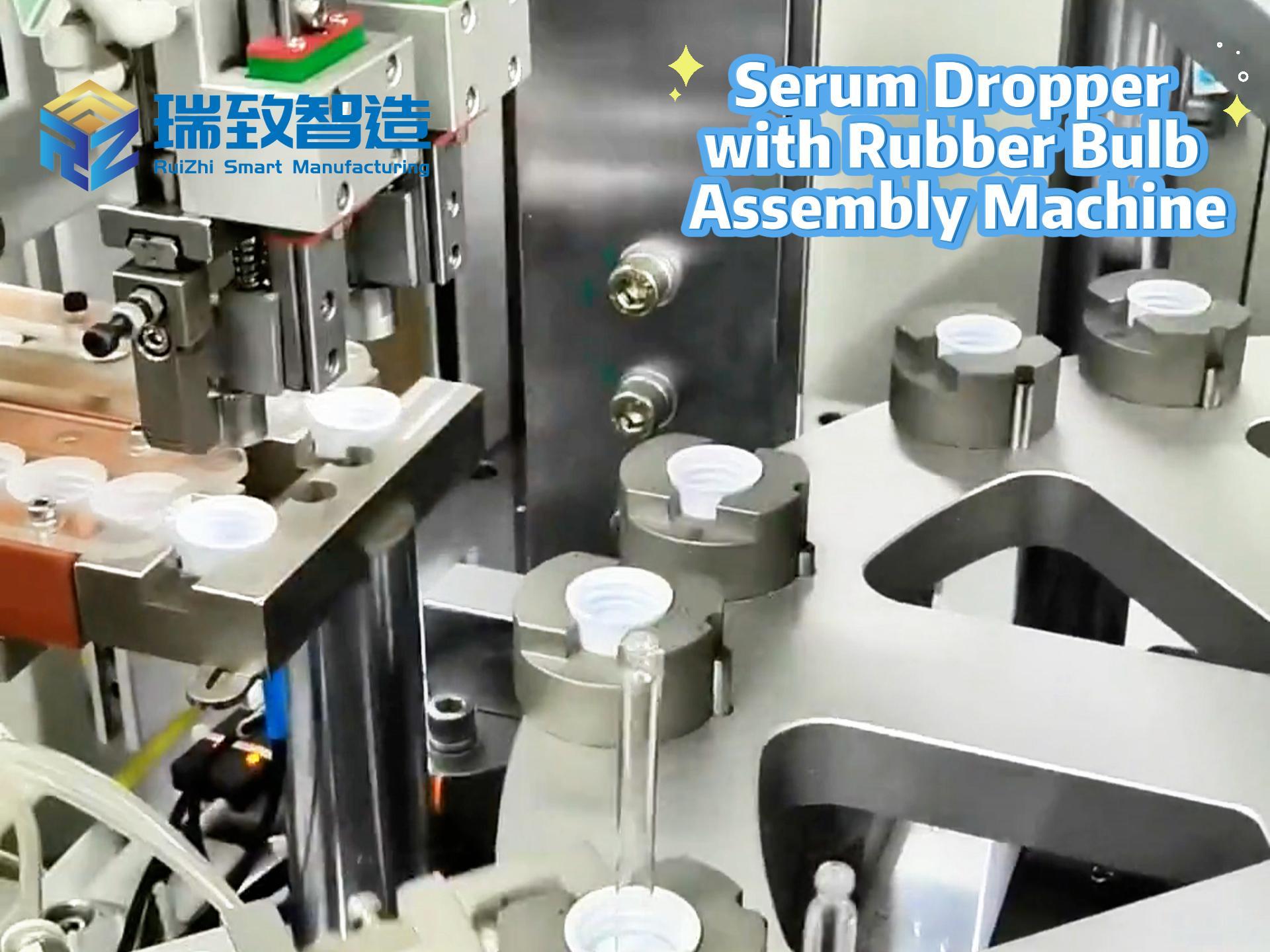

Core Component Production: Relying on Precision Manufacturing, Sharing Technical Logic with Medical Assembly Equipment

Notably, stable mass production of the robot’s core components, such as its high-precision servo-driven wheels and environmental perception sensor modules, relies on upstream precision manufacturing equipment. The underlying logic closely resembles that of automatic syringe assembly equipment in the medical field. Such equipment uses visual guidance systems to achieve micron-level positioning accuracy and keep the syringe barrel and plunger coaxial, uses real-time pressure monitoring modules to control assembly force and avoid damaging components, and performs airtightness testing on 100% of units to guarantee medical safety. Tiantai applies comparable precision assembly techniques to the robot’s servo-driven wheels: high-precision torque control ensures smooth wheel operation, while visual calibration keeps the dual-laser SLAM system and the drive system accurately aligned with each other. Together these lay the foundation for flexible, stable movement in complex home environments.

Immersive Experience & Intelligent Interaction: Audio-Visual Hardware + Multimodal AI

The robot integrates a 21.5-inch high-definition touchscreen, HiFi speakers, and a 4K projector (up to 1200 lumens) on a stable mobile chassis, so users can enjoy an immersive audio-visual experience anywhere in the home. It can also build a geometric and state model of its 3D environment: it not only recognizes objects but also understands their spatial relationships (e.g., warning that a water cup on the edge of a table may tip over). Supported by the HALI multimodal large model SOLEMATE, it offers voice, touch, and visual interaction modes and actively remembers user preferences, enabling emotional, personalized interactions that feel as natural as talking with family.
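To make the water-cup example concrete, the following is a minimal sketch of how a spatial-relationship check of this kind could work in principle. It is not Tiantai’s implementation: the `Cup` type, the `tipping_risk` function, the one-dimensional geometry, and the 1 cm safety margin are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Cup:
    """Hypothetical detected object, simplified to one horizontal axis."""
    center_x: float  # metres, position of the centre of the cup's base
    radius: float    # metres, radius of the cup's base


def tipping_risk(cup: Cup, table_edge_x: float, margin: float = 0.01) -> str:
    """Classify a cup's stability relative to a table edge.

    Assumes the table surface covers every x <= table_edge_x and that the
    cup's weight acts through the centre of its base.
    """
    distance_to_edge = table_edge_x - cup.center_x
    if distance_to_edge <= 0:
        # The centre of the base is at or beyond the edge, so the cup is unsupported.
        return "unstable"
    if distance_to_edge < cup.radius + margin:
        # Part of the base overhangs the edge, or sits within the safety margin.
        return "warning"
    return "safe"


# Table edge at x = 0.60 m, cup base radius 4 cm.
print(tipping_risk(Cup(center_x=0.50, radius=0.04), table_edge_x=0.60))
print(tipping_risk(Cup(center_x=0.58, radius=0.04), table_edge_x=0.60))
print(tipping_risk(Cup(center_x=0.61, radius=0.04), table_edge_x=0.60))
```

A real system would work from a full 3D scene model and would also have to account for the cup’s contents, motion, and sensor noise; the sketch only shows why knowing where an object sits relative to a surface edge matters beyond recognizing the object itself.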