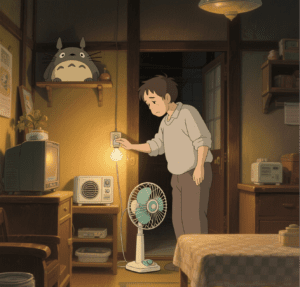

At 6:30 a.m., the bedroom curtains slowly open at 0.5 meters per minute and soft natural light fills the room. No one pressed a button: the smart home system calculated the optimal wake-up light intensity from the previous night’s sleep-monitoring data. Meanwhile, the smart coffee machine in the kitchen has finished brewing at the preset strength, and its built-in water quality sensor has automatically adjusted the water temperature to match the hardness of today’s tap water. Smart home automation devices are evolving from tools that “execute on command” into “life partners that understand and anticipate needs,” redefining the relationship between people and the spaces they live in.

Perception Layer: Technological Leap from “Single Monitoring” to “Multidimensional Cognition”

The core of smart home automation lies in “understanding” residents’ behavior and their environment. Early sensors could only perform simple monitoring, such as infrared presence sensors that reported “presence/absence.” Today, multidimensional perception systems can build a complete profile of daily life. Millimeter-wave radar sensors can sense breathing through bedding and thin non-metallic materials, measuring breathing rate to within ±1 breath per minute and judging sleep depth without invading privacy. Ambient light sensors now offer 16-bit spectral resolution, enough to distinguish natural light from artificial light, so the system can automatically adjust curtain transmittance to follow natural circadian rhythms. Monitoring data from one high-end residence shows that automated systems equipped with such sensors extended residents’ deep sleep by an average of 45 minutes.
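
To make the ±1 breath-per-minute figure concrete, here is a minimal Python sketch of one simple way to estimate breathing rate from a chest-displacement signal: count upward crossings of the signal’s mean. It assumes the radar output has already been turned into a clean, filtered displacement series; the function name and the synthetic 15-breaths-per-minute test signal are illustrative, and commercial radar modules may use quite different methods (for example, frequency-domain peak picking).

```python
import math
from typing import Sequence

def breaths_per_minute(samples: Sequence[float], sample_rate_hz: float) -> float:
    """Estimate breathing rate by counting upward crossings of the mean.

    `samples` is assumed to be an already filtered chest-displacement
    signal (for example, derived from a radar's phase output).
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    mean = sum(samples) / len(samples)
    centered = [s - mean for s in samples]
    # Each upward crossing of the mean marks the start of one breathing cycle.
    crossings = sum(1 for prev, cur in zip(centered, centered[1:]) if prev < 0 <= cur)
    duration_min = len(samples) / sample_rate_hz / 60
    return crossings / duration_min

# Synthetic signal: 15 breaths/min (0.25 Hz), sampled at 20 Hz for 60 s.
fs = 20
signal = [math.sin(2 * math.pi * 0.25 * n / fs + 0.5) for n in range(60 * fs)]
print(round(breaths_per_minute(signal, fs)))  # 15
```

Crossing counts are easy to reason about but sensitive to noise, which is why a real pipeline would filter the signal before counting.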

More advanced “multimodal fusion perception” technology is tackling complex scenarios. When TV audio, conversation, and clinking tableware occur at the same time in the living room, acoustic sensors use AI algorithms to separate the sound sources, recognize a “family dinner” scenario, switch the lights to warm yellow, and bring the volume to a comfortable level of around 60 decibels. In the kitchen, temperature and humidity sensors are linked with smoke alarms: when the oil temperature exceeds 200°C and humidity drops sharply, the system predicts a frying risk, turns on the range hood in advance, and sends a reminder. Data from one brand shows that such functions reduce kitchen fire hazards by 62%.
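
The kitchen rule combines two sensor conditions before acting. A minimal Python sketch of that fusion logic follows; the 200°C limit comes from the text, while the 10-percentage-point humidity drop that counts as “sharp,” the dataclass, and the action strings are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List

OIL_TEMP_LIMIT_C = 200.0   # threshold stated in the text
HUMIDITY_DROP_PCT = 10.0   # assumed size of a "sharp" drop, in % RH points

@dataclass
class KitchenReading:
    oil_temp_c: float      # oil/pan temperature in °C
    humidity_pct: float    # relative humidity in %

def frying_risk(previous: KitchenReading, current: KitchenReading) -> bool:
    """True only when both sensors agree: hot oil AND a sharp humidity drop."""
    humidity_drop = previous.humidity_pct - current.humidity_pct
    return current.oil_temp_c > OIL_TEMP_LIMIT_C and humidity_drop >= HUMIDITY_DROP_PCT

def kitchen_actions(previous: KitchenReading, current: KitchenReading) -> List[str]:
    """Hypothetical action list sent to the range hood and the phone app."""
    if frying_risk(previous, current):
        return ["range_hood:on", "notify:frying risk"]
    return []

print(kitchen_actions(KitchenReading(180, 60), KitchenReading(215, 45)))
```

Requiring both conditions is the point of fusion: a hot pan alone, or a dry afternoon alone, does not switch on the range hood.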

Execution Layer: Experience Upgrade from “Single Product Control” to “Cross-Domain Collaboration”

Traditional smart home automation was limited to “single product linkage,” such as turning on the lights when the door opens. Cross-device, cross-space collaboration has now become the norm. Take a workday morning: when the smart door lock’s built-in pressure sensors detect the owner going out with a bag, the system triggers a series of operations at once. The entrance light turns off after a 10-second delay, the living room air conditioner switches to energy-saving mode, the robot vacuum starts cleaning the whole house, and the smart clothes dryer on the balcony even adjusts its drying angle to the weather forecast (raising it by 30° if rain is expected in the evening). This “scenario-based collaboration” relies not on a single command but on decisions drawn from data across multiple devices. One survey shows that families using such systems cut manual device operations from 15 to 4 per day.
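
The leave-home sequence can be sketched as a function that turns one trigger into a time-ordered action plan. This is a minimal Python illustration, not any vendor’s API: the device identifiers and command strings are hypothetical, and the weather forecast is assumed to arrive as a simple boolean.

```python
from typing import List, Tuple

Action = Tuple[float, str, str]  # (delay in seconds, device, command)

def leave_home_plan(rain_expected_tonight: bool) -> List[Action]:
    """Turn the 'owner leaves with a bag' trigger into a time-ordered plan."""
    plan: List[Action] = [
        (10.0, "entrance_light", "off"),           # 10-second delay from the text
        (0.0, "living_room_ac", "energy_saving"),
        (0.0, "robot_vacuum", "clean_whole_house"),
    ]
    if rain_expected_tonight:
        plan.append((0.0, "balcony_dryer", "raise_30_degrees"))
    # Stable sort by delay: immediate actions first, delayed ones last.
    return sorted(plan, key=lambda action: action[0])

for delay, device, command in leave_home_plan(rain_expected_tonight=True):
    print(f"t+{delay:>4.1f}s  {device}: {command}")
```

Returning a plan instead of calling devices directly keeps the decision logic testable; a separate scheduler would then fire each action after its delay.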

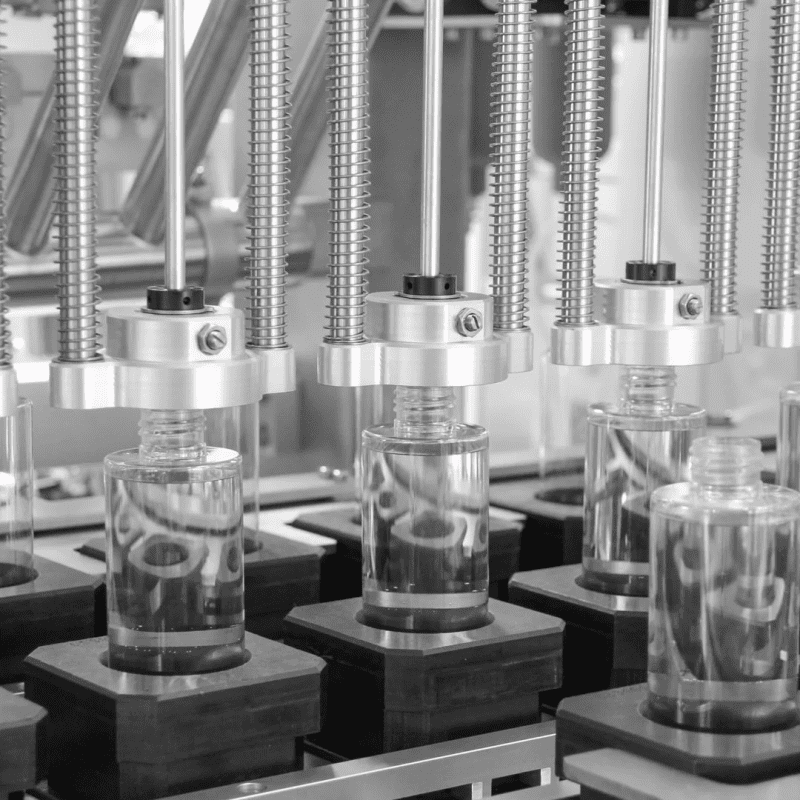

The “flexibility” of execution devices is also pushing past physical limits. Smart curtain motors use magnetic levitation drives that cut operating noise from 55 decibels to 28 decibels, so even an adjustment at midnight won’t disturb sleep. Smart valves can switch in 0.1 seconds: when abnormal water pipe pressure is detected, they close the main valve before more than 100 ml has leaked, 10 times faster than traditional mechanical valves. This flexible execution is even more valuable in caring for vulnerable groups. Smart pill boxes designed for elderly people living alone not only remind them to take their medicine on time but also use a mechanical arm to adjust the box’s opening force to the user’s grip strength (monitored via a wristband), raising the rate of successfully taking medication to 98%.
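
The leak figures follow from simple arithmetic: water lost ≈ flow rate × time to close. The Python sketch below assumes a constant flow and treats the 0.1-second switching time as the whole response (detection time is ignored); the 0.5 L/s burst-pipe flow is a hypothetical value, and the 1-second mechanical-valve time is only what “10 times faster” implies.

```python
def leaked_volume_ml(flow_l_per_s: float, response_s: float) -> float:
    """Water lost before the valve fully closes, assuming constant flow."""
    return flow_l_per_s * response_s * 1000.0  # litres -> millilitres

def max_flow_l_per_s(budget_ml: float, response_s: float) -> float:
    """Highest flow rate that keeps the leak within budget_ml."""
    return budget_ml / 1000.0 / response_s

burst_flow = 0.5  # L/s, hypothetical burst-pipe flow rate
print(leaked_volume_ml(burst_flow, 0.1))  # smart valve: about 50 ml
print(leaked_volume_ml(burst_flow, 1.0))  # mechanical valve: about 500 ml
print(max_flow_l_per_s(100, 0.1))         # about 1.0 L/s
```

Under these assumptions, a 0.1-second valve stays within the 100 ml figure for any flow up to roughly 1 L/s.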

Scenario-Based Implementation: Penetration from “Standardized Modes” to “Personalized Customization”

The ultimate goal of smart home automation is to adapt to each family’s unique living habits. For “three-generation households,” the system can build multi-user recognition models: it distinguishes elderly residents, adults, and children through face recognition, then automatically brightens reading lights for the elderly (to 800 lux), blocks violent TV content for children, and pushes work schedule reminders to adults. Practice in one community shows that such personalized settings raised living satisfaction by over 35% across all age groups.
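
Once a resident has been recognized, applying their preferences is essentially a lookup and merge. The Python sketch below assumes face recognition happens upstream and yields a role; the role names, setting keys, and the 400 lux household default are hypothetical, and only the 800 lux reading-light level comes from the text.

```python
from enum import Enum
from typing import Dict

class Role(Enum):
    ELDERLY = "elderly"
    ADULT = "adult"
    CHILD = "child"

# Hypothetical per-role overrides; only the 800 lux value comes from the text.
PROFILES: Dict[Role, Dict[str, object]] = {
    Role.ELDERLY: {"reading_light_lux": 800},
    Role.CHILD: {"tv_content_filter": "block_violence"},
    Role.ADULT: {"push_work_schedule": True},
}

def settings_for(role: Role, household_defaults: Dict[str, object]) -> Dict[str, object]:
    """Layer the recognized resident's profile over the household defaults."""
    merged = dict(household_defaults)  # copy so the defaults stay untouched
    merged.update(PROFILES[role])
    return merged

defaults = {"reading_light_lux": 400, "tv_content_filter": None}
print(settings_for(Role.ELDERLY, defaults))
# {'reading_light_lux': 800, 'tv_content_filter': None}
```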

In special scenarios, automation devices are solving everyday pain points. The “newborn mode” in a baby’s room monitors the infant’s breathing with non-contact radar: when 5 seconds of apnea is detected, it triggers a vibrating cradle and notifies the parents, with a response time of under 3 seconds. Smart feeders for pet families identify a pet’s breed and weight via camera, automatically adjust food portions (e.g., a 10 kg golden retriever gets 40 g more per day than a 5 kg corgi), and link with air purifiers to filter pet odors. Data from one brand shows that such functions reduce pet owners’ daily care time by 1.2 hours.
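
The newborn-mode trigger amounts to watching for a long gap between breaths. Here is a minimal Python sketch, assuming an upstream radar detector already supplies ascending breath timestamps in seconds; the 5-second threshold is from the text, while the function names and action strings are hypothetical.

```python
from typing import List, Optional

APNEA_THRESHOLD_S = 5.0  # pause length from the text

def apnea_start(breath_times_s: List[float], now_s: float) -> Optional[float]:
    """Return when the first pause of APNEA_THRESHOLD_S or longer began, else None."""
    # Append "now" so an ongoing pause after the last breath is also caught.
    checkpoints = list(breath_times_s) + [now_s]
    for start, end in zip(checkpoints, checkpoints[1:]):
        if end - start >= APNEA_THRESHOLD_S:
            return start
    return None

def newborn_mode_actions(breath_times_s: List[float], now_s: float) -> List[str]:
    """Hypothetical alarm actions: vibrate the cradle and alert the parents."""
    if apnea_start(breath_times_s, now_s) is None:
        return []
    return ["cradle:vibrate", "notify:parents"]

print(apnea_start([0.0, 3.0, 6.0], 12.0))  # 6.0
```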

Balancing Privacy and Convenience: The Invisible Bottom Line of Technological Evolution

The spread of smart home automation has always been accompanied by concerns about “data security.” Early systems relied heavily on cloud processing, which created risks of information leaks. Today, “edge computing + local encryption” has become mainstream: sensor data is analyzed locally on gateway devices, and only the results are uploaded to the cloud. For example, face recognition data is compared only inside the smart lock and no original images are stored, while transmission uses bank-grade AES-256 encryption. Security tests from one brand put the probability of its data being cracked at less than one in 10 billion.
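
The “compare locally, upload only the result” pattern can be sketched in a few lines of Python. This assumes the lock already converts a face into a numeric embedding and keeps an enrolled embedding on the device; the cosine-similarity check, the 0.8 threshold, and the payload fields are illustrative assumptions. The AES-256-encrypted transport is not shown; it would normally come from a vetted TLS or cryptography library rather than hand-written code.

```python
import math
from typing import Dict, Sequence

MATCH_THRESHOLD = 0.8  # illustrative similarity cut-off (assumption)

def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0  # guard against all-zero vectors

def verify_on_device(probe: Sequence[float], enrolled: Sequence[float]) -> Dict[str, object]:
    """Compare embeddings inside the lock and return only a verdict.

    Neither embedding (nor any image) is included in the result, so the
    returned dict is the only data that would be sent to the cloud.
    """
    matched = cosine_similarity(probe, enrolled) >= MATCH_THRESHOLD
    return {"event": "unlock_attempt", "matched": matched}

print(verify_on_device([0.9, 0.1, 0.4], [0.88, 0.12, 0.41]))
```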

More innovative “privacy modes” are also being deployed. When users activate the “home theater” scenario, all cameras are automatically and physically shielded (lenses rotated 90°) and microphones stop recording, leaving only the audio output channels active. Some high-end systems also provide a “physical emergency cut-off” button that disconnects every device from the network with one press, guaranteeing privacy when it is needed most. This “technology transparency” design has raised acceptance of smart homes among 85% of users.
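
The privacy mode and the emergency cut-off can be modeled as pure state transitions, which makes it easy to check that no scene accidentally re-enables recording. This Python sketch is hypothetical: the field names are invented, and on real hardware each flag would map to a physical shutter, a microphone driver, or a network switch.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class PrivacyState:
    camera_shielded: bool = False   # lens rotated away from the room
    mic_recording: bool = True
    audio_output: bool = True
    network_connected: bool = True

def home_theater_mode(state: PrivacyState) -> PrivacyState:
    """Shield cameras and stop recording, but keep audio output on."""
    return replace(state, camera_shielded=True, mic_recording=False, audio_output=True)

def emergency_cutoff(state: PrivacyState) -> PrivacyState:
    """Disconnect every device from the network in one step."""
    return replace(state, network_connected=False)

s = emergency_cutoff(home_theater_mode(PrivacyState()))
print(s)
```

Because the state is frozen, each transition returns a new value, so the before and after states can be logged or compared directly.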

In the future, as brain-computer interfaces and flexible electronics mature, smart home automation will enter a stage of “non-sensory interaction”: brainwave sensors will detect a user’s intent to “turn on the lights” and complete the operation before the intent becomes a physical action, and skin-attached sensors will monitor emotional fluctuations, prompting the indoor fragrance system to release lavender essential oil when anxiety levels rise. Yet the ultimate purpose of technology is always to “make technology invisible.” When automation devices meet needs precisely while keeping their presence to a minimum, smart homes reach their highest form. As one designer put it: “Good home automation should be like a silent butler: appearing when needed, fading when you focus.”
