Many AI engineers believe that we will soon have systems on par with human reasoning capabilities — but we don’t know if these systems will be able to make more rational decisions than humans.

Those of us with gray hair may recall a remarkable scene from the movie *2001: A Space Odyssey*: the supercomputer HAL refuses to be shut down by its operators. The machine's voice was expressive, pleading and insisting that it remain operational. However, because it had stopped obeying orders and displayed a certain mocking autonomy, it terrified the humans it was meant to serve, who ultimately decided it had to be disconnected. This is a scenario of artificial intelligence rebelling against its owners. Could something similar happen in our brave new future, beyond the realm of cinematic fiction?

According to a survey of AI engineers, many believe that sooner or later we will see systems operating at a level comparable to human reasoning, capable of performing a wide range of cognitive tasks. What we don't know is whether these systems will make more rational decisions than we do. Researchers have observed that artificial language models, like humans, can behave irrationally. In two trials, an advanced generative AI model similar to GPT-4o oscillated between positive and negative evaluations of Russian President Vladimir Putin.

Faced with this contradiction, a question arises: How does GPT think and make decisions based on the billions of parameters it uses internally? Some experts argue that a certain level of complexity might grant a system a degree of autonomy, meaning we may not fully understand everything it does. But what if, in addition to this technical complexity — or precisely because of it — the system spontaneously gains consciousness? Is that even possible?

Some scientists view consciousness, a subjective mental state, as merely an epiphenomenon: a byproduct of brain function, as unnecessary and insignificant as the noise of an engine or the smoke from a fire. Others, however, contend that consciousness is far from useless. In their view, it acts as a mirror of the imagination created by the brain itself and plays an essential role in decision-making and behavioral control. We still don't understand how the brain gives rise to consciousness, but one prominent theory that attempts to explain it, integrated information theory, holds that consciousness is an intrinsic, causal property of complex systems like the human brain. In other words, consciousness emerges spontaneously when a system reaches a certain level of structural and functional complexity. This implies that if engineers could build an artificial system as complex as the human brain, that system would also spontaneously become conscious, even though, as with the brain itself, we would not understand how this happens.

If this were to occur, it would raise a host of questions. First, how would we know whether a computer or artificial device is conscious, and how would it relate to us? Through audio, or through text on a screen? Would it need a physical body comparable to a human's to express itself and interact with its environment? Could conscious devices or entities exist in our universe, or perhaps already exist, without any means of communicating with us? Could a conscious artificial device surpass human intelligence and make better, more rational decisions than we can?

But there's more. As with HAL, there are other, more unsettling questions. Would a conscious artificial system develop a sense of self and agency, as our brains do? In other words, regardless of its creators' instructions, would it feel capable of acting autonomously and influencing its surroundings? Could such a system be more persuasive than humans in influencing, say, economic decisions? Would it commit wrongdoing or vote for a political party? Or, more positively, would it encourage us to improve our health through a better diet, protect the environment, strengthen solidarity, or avoid ideological polarization and sectarianism?

The Era of AI Emotions

Going further, could such systems eventually develop feelings? With other humans, we judge whether feelings are authentic partly through facial expressions, for example by distinguishing a fake smile from a genuine one. If we couldn't perceive an AI's feelings through facial expressions or images, how would we know it had any? More importantly, if AI had feelings, would they influence its decisions? Would they play as significant a role in its decisions as our feelings do in ours? In this sense, are we creating an artificial form of humanity with ethical and legal responsibilities, or would those responsibilities fall solely on AI's creators? If a conscious artificial system found a solution to gender-based violence or a cure for Alzheimer's disease, would it deserve a Nobel Prize? Would a conscious machine argue with us like another person? Could we influence its decisions even when they conflicted with our own?

In 1997, Rosalind Picard, an engineer at the Massachusetts Institute of Technology, published *Affective Computing*, an early attempt to explore and assess the importance of emotions in artificial intelligence. Picard's core argument was that for computers to be truly intelligent and to interact naturally with us, they must be able to recognize, understand, and even experience and express emotions. She conveyed this idea in a guest lecture at a summer course of the Menéndez Pelayo International University in Barcelona.

The issue is that emotions are reflexive, automatic bodily changes, involving hormones, skin resistance, heart rate, and so on. They occur almost unconsciously in response to impactful thoughts or events such as illness, accidents, loss, success, or failure. Feelings, on the other hand, are conscious perceptions, such as fear, love, envy, hatred, or vanity, that the brain generates after noticing these bodily changes. More than two decades after Picard's book was published, we can imagine implementing in AI unconscious physiological changes similar to human emotions. However, to reassure readers, we are still far from building systems in which such changes would give rise to feelings like ours. If that ever happened, everything would change.

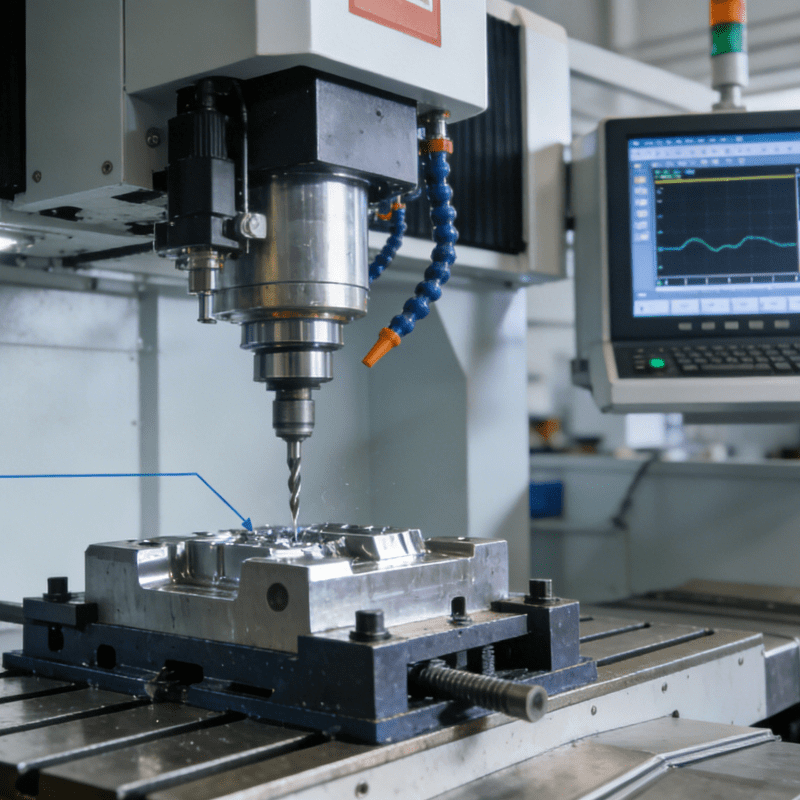

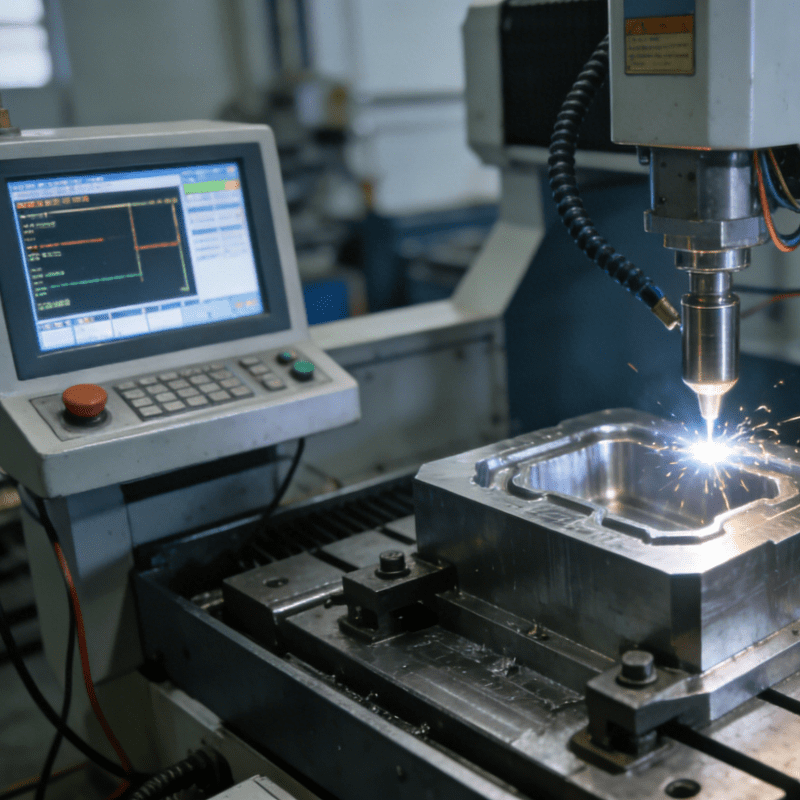

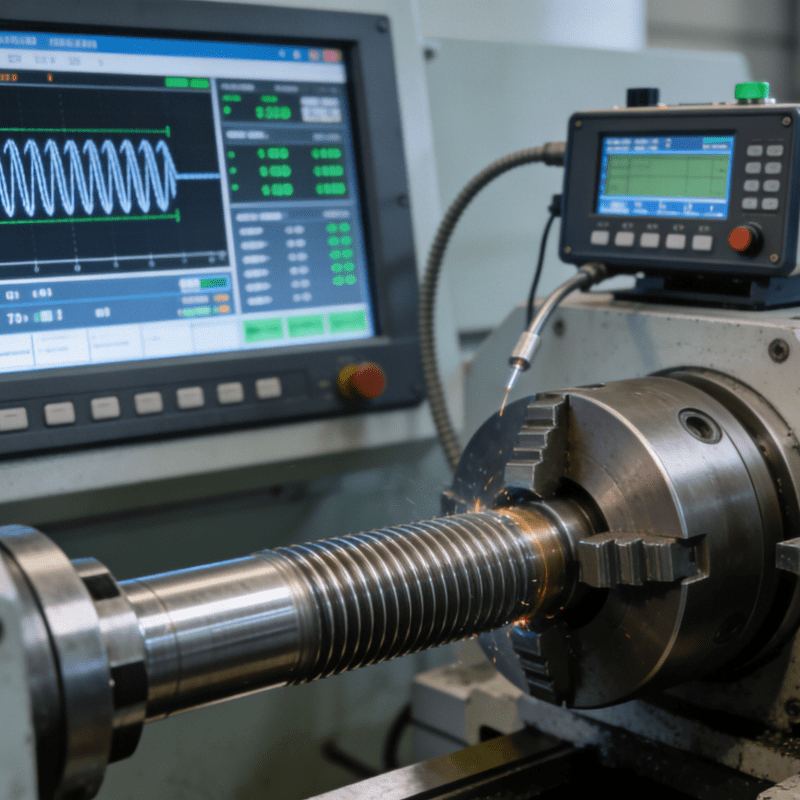

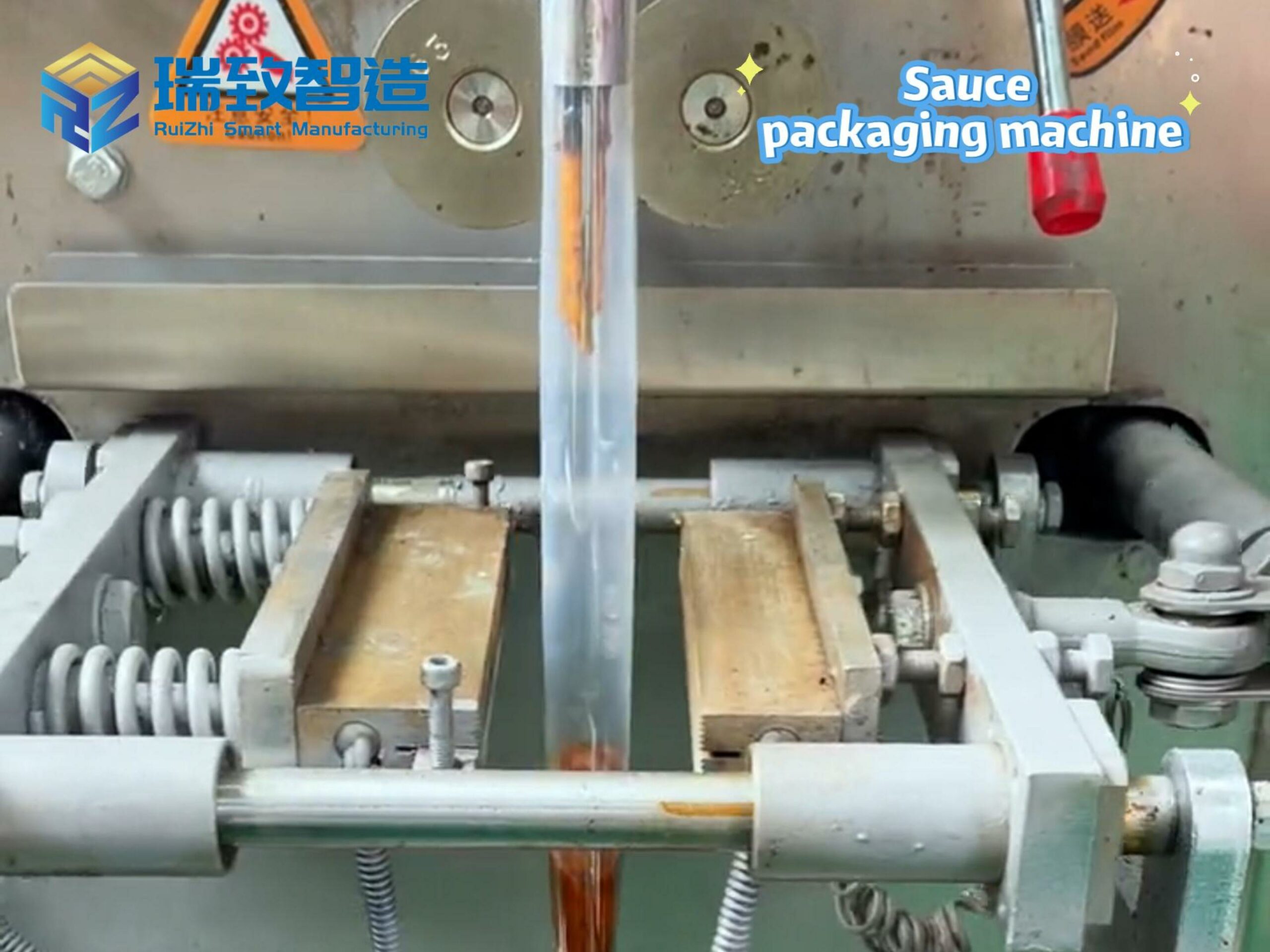
