“Technology happens because it is possible,” OpenAI CEO Sam Altman told the New York Times in 2019, echoing Robert Oppenheimer, the father of the atomic bomb. It’s a Silicon Valley mantra: technology marches forward, unstoppable.

Another common belief in tech circles is that artificial general intelligence (AGI)—human-level AI—will lead to one of two fates: a post-scarcity utopia or human extinction. For countless species, humans spelled doom not because we were stronger, but because we were smarter and more coordinated. Extinction was often a byproduct of our goals, not a deliberate act. AGI would be a new species—one that might outthink or outnumber us, seeing humanity as an obstacle (like an anthill in a dam’s path) or a resource (like factory-farmed animals).

Altman and other top AI lab leaders acknowledge that AI-driven extinction is a real risk—joining hundreds of researchers and public figures in this concern. Given this, a simple question arises: Should we build a technology that could kill us if it fails?

The most common reply is: “AGI is inevitable.” It’s too useful to ignore—the “last invention humanity will ever need,” as a colleague of Alan Turing put it. And if we don’t build it, someone else will—less responsibly. A Silicon Valley ideology called “effective accelerationism” (e/acc) even claims AGI is inevitable due to thermodynamics and “technocapital”: “The engine can’t be stopped. Progress only moves forward.”

But this is a myth. Technology isn’t a force of nature—it’s a product of human choices, shaped by incentives, values, and action. History proves we’ve reined in powerful technologies before.

We’ve Regulated Powerful Technologies Before

Fearing the risks, biologists first banned and then regulated recombinant DNA experiments in the 1970s. Human cloning has been technically possible for over a decade, yet no one has done it; the only scientist who genetically engineered humans was imprisoned. Nuclear power, despite its carbon-free potential, faces strict regulations due to catastrophe fears.

Even nuclear weapons—now seemingly inescapable—were a “contingent” creation. The U.S. built them in 1945 partly because of a false belief that Germany was racing to do the same. Historian Philip Zelikow notes: “If the U.S. hadn’t built the atomic bomb in WWII, it’s unclear if it ever would have been built.” Later, Reagan and Gorbachev nearly agreed to eliminate all nukes; while that failed, global stockpiles are now under 20% of their 1986 peak, thanks to international agreements.

Climate action offers another example. Fossil fuels are backed by massive economic incentives, yet advocacy shifted public opinion and accelerated decarbonization. Extinction Rebellion’s 2019 protests pushed the UK to declare a climate emergency. The Sierra Club’s “Beyond Coal” campaign closed a third of U.S. coal plants in five years, which helped bring U.S. per capita emissions below 1913 levels.

AGI Is Not Inevitable—We Control the Timeline

Regulating AGI is easier than decarbonization. Fossil fuels supply 82% of global energy, whereas nothing yet depends on a hypothetical AGI. Slowing AGI development wouldn’t stop us from using existing AI for medicine, climate, or other critical needs.

Capitalists love AGI because it could cut workers out of the loop, but governments care about more than profits: employment, stability, and democracy. They aren’t prepared for a world with mass technological unemployment. And while capital often wins, it doesn’t always. As one OpenAI safety researcher noted, politicians like Alexandria Ocasio-Cortez or Josh Hawley could “derail” unchecked AI progress.

AGI boosters claim it’s “imminent,” but timelines matter. We had the computing power to train GPT-2 over a decade before OpenAI did—we just didn’t see the point. Today, top labs race so fiercely they skip safety measures their own teams recommend. A “safety tax” slows progress, and no lab wants to fall behind. But this is a choice, not a law of nature.

Governments could change this. AGI requires massive supercomputers and specialized chips—resources controlled by a small, regulated industry. “Compute governance” could halt unchecked training runs over a certain threshold (e.g., $100 million per run) without stifling smaller innovators. International treaties could share AI benefits while preventing reckless scaling—just as the Montreal Protocol fixed the ozone layer, or the Non-Proliferation Treaty curbed nuclear spread.

The Public Doesn’t Want AGI

When polled, most Americans oppose superhuman AI. As AI becomes more common, opposition grows. Boosters dismiss this as “neo-Luddism,” but their “inevitability” talk is a dodge: they don’t want to argue their case in public, because they’d lose.

AGI’s allure is strong, but its risks are existential. We need a global effort to resist it. Technology doesn’t “happen”—people make it happen. And we can choose not to.

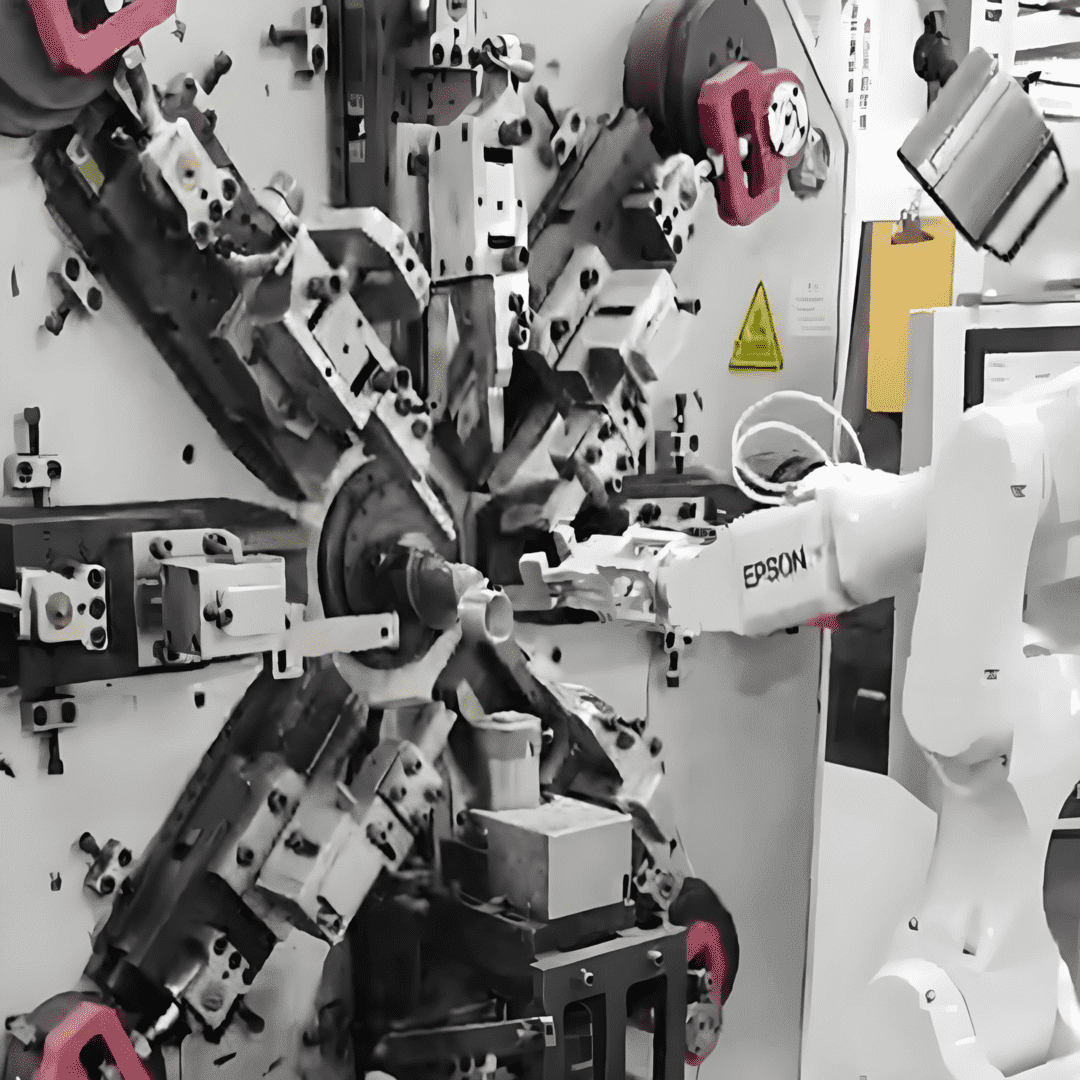
