Bots and automated tools have emerged as the biggest security risks in cloud environments, with cybercriminals taking the lead in applying automated decision-making to steal credentials, transfer funds, and carry out other malicious activities.

Sergej Epp, Chief Information Security Officer (CISO) at Sysdig, stated that while few threats are fully automated at this stage—most attacks exploit misconfigurations—they are gradually evolving to execute more advanced actions, such as installing crypto-mining programs or moving laterally across systems.

This automation has drastically reduced attackers’ dwell time. In traditional attacks, dwell time was typically measured in days. In contrast, automated attacks have been known to exfiltrate data within 5 minutes, Epp noted.

He predicts that attacks will become increasingly automated, with existing “attack bots” being enhanced using newer large language models (LLMs). This poses a challenge: cybersecurity experts know what needs to be done to protect their organizations, but the question is whether they can take necessary actions quickly enough.

Epp also believes there will be a surge in attacks targeting “companies below the cyber poverty line”—those lacking resources to implement adequate defenses.

He recommends taking steps parallel to the evolution of endpoint security measures: first, create an inventory of all cloud assets and identify any misconfigurations, of which there may be many.

The next step is to prioritize and remediate these issues. However, because daily scans are insufficient to address real-time threats, this asset inventory and vulnerability identification process must be repeated continuously. Finally, organizations should adopt cloud detection and response systems to identify and address abnormal activities.
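The inventory, identify, and prioritize steps described above can be sketched roughly as follows. This is only an illustrative outline, not Sysdig's method: the `Asset` type, the setting names (`public`, `api_permissions`, `encrypted`), and the severity weights are all hypothetical and stand in for whatever a real cloud inventory and risk model would provide.

```python
from dataclasses import dataclass, field

# Hypothetical severity weights; a real program would use its own risk model.
SEVERITY = {"public_storage": 9, "overly_permissive_api": 8, "no_encryption": 5}


@dataclass
class Asset:
    """One entry in the cloud asset inventory (illustrative shape only)."""
    name: str
    settings: dict = field(default_factory=dict)


def find_misconfigurations(asset):
    """Return the illustrative misconfiguration labels that apply to one asset."""
    findings = []
    if asset.settings.get("public", False):
        findings.append("public_storage")
    if "*" in asset.settings.get("api_permissions", []):
        findings.append("overly_permissive_api")
    if not asset.settings.get("encrypted", True):
        findings.append("no_encryption")
    return findings


def prioritize(inventory):
    """Scan every asset and return (severity, asset name, finding), worst first."""
    results = [
        (SEVERITY[finding], asset.name, finding)
        for asset in inventory
        for finding in find_misconfigurations(asset)
    ]
    return sorted(results, reverse=True)


# Made-up inventory used only to exercise the sketch.
inventory = [
    Asset("billing-bucket", {"public": True, "encrypted": False}),
    Asset("orders-api", {"api_permissions": ["*"]}),
    Asset("internal-db", {"encrypted": True}),
]
queue = prioritize(inventory)
for severity, name, finding in queue:
    print(severity, name, finding)
```

Because the article stresses that daily scans are not enough, a function like `prioritize` would in practice be rerun continuously as the inventory changes rather than on a fixed schedule.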

Pressure to Adopt AI

Business pressures to rapidly adopt AI are complicating the issue. But Epp says most people misunderstand AI security—they focus on models, when the real priority should be infrastructure.

He points out that there are over 1.8 million models in the Hugging Face library, and organizations cannot simply trust them, as traditional scans are ineffective for opaque models. Additionally, he says, there are currently no technical solutions for prompt injection attacks, and firewalls are useless because AI-related network traffic is probabilistic rather than deterministic.

Epp warns that these attacks are not limited to scenarios where attackers directly access chatbots. Malicious prompts can be hidden in shared documents or uploaded PDF invoices being processed.

The solution, he says, is to treat AI workloads like any other cloud workload by applying runtime security best practices.

Fundamental principles such as assuming breach, zero trust, and defense-in-depth still apply, but runtime security agents must be added to each container to detect improper settings or activities—such as application programming interfaces (APIs) with excessive permissions or attempts to escape the container.

While security operations centers (SOCs) have the necessary data, the challenge lies in delivering the right subset of data to the right agents to trigger the correct actions. Barriers include talent shortages and the time required to develop needed software.

The ephemeral nature of the cloud—60% of containers run for less than a minute—makes automation critical. Collecting security data from these containers is no easy task, and Epp says storing more than 20% of available data may be impractical.

The key question is which 20% to collect. According to Epp, Sysdig’s approach is to adopt a top-down perspective. He acknowledges this is not easy but notes that the company’s extensive Kubernetes background makes it possible, pointing out that founder and CTO Loris Degioanni created Falco, an open-source container security tool, on which the Sysdig platform is built.

While most security operations still rely on humans to analyze data and take action, Epp says the Sysdig Sage AI analyst transforms data into real-time recommendations. He positions the company as a leader in the journey toward autonomous cloud security, though he admits fully autonomous systems require a high level of trust that nothing will be disrupted.

This reveals a critical asymmetry. For cybercriminals, the cost of AI-triggered errors is low: a mistake might expose their presence or simply force them to retry. For defenders, however, errors can be costly both financially and reputationally. For example, accidentally taking a major online bank or retailer offline would make headlines and drive away business.

“We need to accelerate the adoption of security controls,” Epp says. “To move fast in business, we need to move fast in security too.”

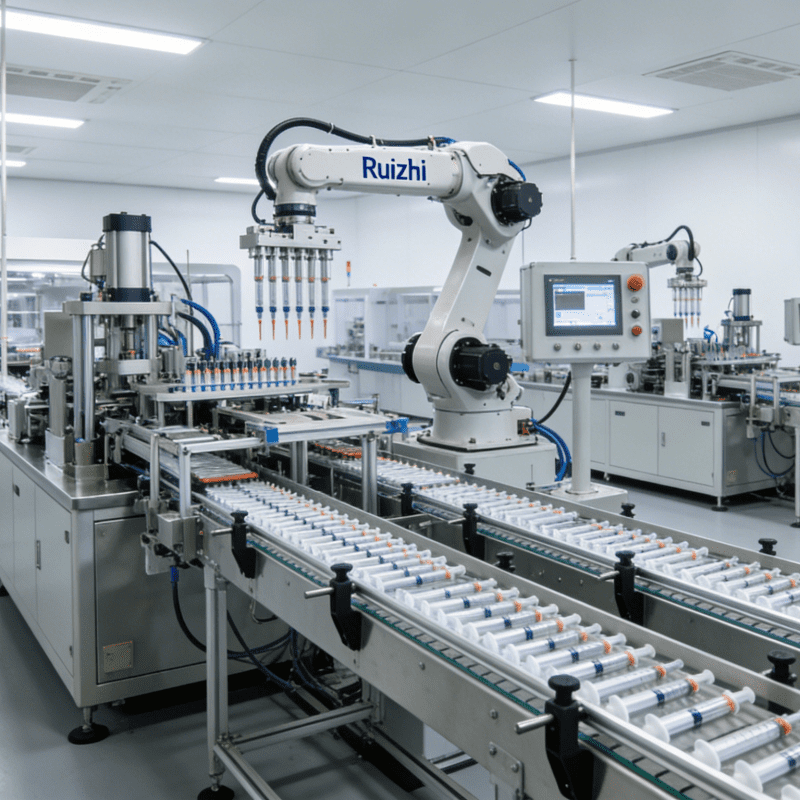
