As attacks and defenses escalate, traditional cybersecurity methods such as fixed-rule firewalls and manual alert screening struggle with three pressures: more varied attacks, better-concealed threats, and explosive traffic growth. Artificial intelligence (AI), which can predict, adapt, and automate, is opening a new track in cybersecurity. From predicting attacks in advance to actively confusing attackers, six innovative applications are redefining the landscape of offense and defense.

- Predicting Attack Occurrence: Moving Defense “Forward” Before Threats Emerge

“Traditional security is ‘fighting fires after the incident,’ while predictive AI allows us to build a firewall before the spark ignites.” This is how Andre Piazza, security strategist at predictive technology developer BforeAI, puts it. The technology rests on two pillars, data and algorithms. On the data side, it collects massive amounts of data and metadata from the internet to build a “ground truth” database (recording the characteristics of good and bad infrastructure) and a database of malicious behavior. On the algorithm side, it uses random forest machine learning to analyze the data and identify early signs of an attack. The model is also updated in real time: changes in IP/DNS records and new attack methods developed by criminals are incorporated into the analysis so that accuracy holds up over time. The system can then respond to threats automatically, which greatly reduces manual intervention and relieves security teams that are “drowned in alerts.”
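To make the random forest idea concrete, here is a minimal standard-library sketch. It is not BforeAI's model: each "tree" is reduced to a single-split stump, and the two features (`domain_age_days`, `dns_changes_last_week`) and the training rows are invented for illustration. What it keeps are the two ingredients that define a random forest: every tree is trained on a bootstrap sample with a randomly chosen feature, and the trees vote.

```python
import random
from collections import Counter

def majority(labels):
    """Most common label in a list."""
    return Counter(labels).most_common(1)[0][0]

def fit_stump(rows, labels, feature):
    """Pick the threshold on one feature that misclassifies the fewest rows."""
    best = None
    for t in sorted({r[feature] for r in rows}):
        left = [y for r, y in zip(rows, labels) if r[feature] <= t]
        right = [y for r, y in zip(rows, labels) if r[feature] > t]
        if not right:  # a threshold at the maximum value splits nothing
            continue
        l_lab, r_lab = majority(left), majority(right)
        errors = sum(y != l_lab for y in left) + sum(y != r_lab for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, l_lab, r_lab)
    if best is None:  # feature is constant in this bootstrap sample
        m = majority(labels)
        return (feature, float("inf"), m, m)
    return (feature, best[1], best[2], best[3])

def fit_forest(rows, labels, n_trees=25, seed=0):
    """Train stumps on bootstrap samples, each using one random feature."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]  # sample with replacement
        feature = rng.randrange(len(rows[0]))
        forest.append(fit_stump([rows[i] for i in idx],
                                [labels[i] for i in idx], feature))
    return forest

def predict(forest, row):
    """Majority vote across all stumps."""
    votes = Counter(l if row[f] <= t else r for f, t, l, r in forest)
    return votes.most_common(1)[0][0]

# Hypothetical features: (domain_age_days, dns_changes_last_week)
rows = [(900, 0), (1500, 1), (2000, 0), (700, 1), (1200, 0),
        (2, 6), (5, 9), (1, 7), (10, 5), (3, 8)]
labels = [0] * 5 + [1] * 5  # 0 = benign, 1 = malicious

forest = fit_forest(rows, labels)
print(predict(forest, (1, 9)))     # newly registered domain, heavy DNS churn
print(predict(forest, (2000, 0)))  # long-lived, stable domain
```

A production system would use full decision trees from an established library and retrain as new infrastructure data arrives. The stump version only shows how bagging and voting fit together.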

- Generative Adversarial Networks (GAN): Training Defense Capabilities by “Simulating Attacks” with AI

How can a defense system “train in advance” for complex attacks it has never seen? Michel Sahyoun, Chief Solutions Architect at cybersecurity company NopalCyber, offers an answer: generative adversarial networks (GANs). A GAN consists of two “opponents.” The generator mimics the strategies of real attackers to produce scenarios such as new malware variants, phishing email templates, and network intrusion patterns. The discriminator is responsible for “finding faults”: it learns to distinguish these simulated threats from legitimate behavior. The two form a feedback loop in which the generator keeps refining its attack simulations while the discriminator keeps improving its detection, so the system learns to handle millions of new threats through “real combat exercises.” By actively simulating unknown threats, this approach narrows the gap between offense and defense instead of leaving defense to lag behind attacks.

- AI Analysis Assistant: Enabling Entry-Level Analysts to Handle Threats Efficiently

Hughes Network Systems is using AI to solve an industry pain point: the shortage of Security Operations Center (SOC) analysts and the slow onboarding of newcomers. The AI analysis assistant is not meant to replace humans, but to “do the dirty work”: trained on the playbooks and runbooks of senior analysts, it can automatically monitor alerts, correlate multi-source data (such as logs and threat intelligence), and generate contextual reports—transforming what used to take an hour of manual work into a process that takes just minutes. “Analysts no longer need to spend time checking logs or doing cross-validation; they can focus solely on threat verification and response,” says Ajith Edakandi, head of Hughes’ enterprise cybersecurity products. This not only improves efficiency but also enables entry-level analysts to become competent quickly.
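The correlation step can be sketched in a few lines. This is an illustrative outline rather than Hughes' implementation: the field names (`host`, `ts`, `src_ip`, `rule`), the five-minute window, and the sample data are all assumptions.

```python
def build_context(alert, logs, threat_intel, window_s=300):
    """Gather log events near the alert and flag source IPs found in threat intel."""
    related = [
        e for e in logs
        if e["host"] == alert["host"] and abs(e["ts"] - alert["ts"]) <= window_s
    ]
    flagged = sorted({e["src_ip"] for e in related if e["src_ip"] in threat_intel})
    return {
        "alert_id": alert["id"],
        "related_events": len(related),
        "flagged_ips": flagged,
        "summary": f"{alert['rule']} on {alert['host']}: "
                   f"{len(related)} related events, {len(flagged)} known-bad IPs",
    }

# Hypothetical inputs; the IPs come from documentation-reserved ranges.
alert = {"id": "A-1", "host": "web01", "ts": 1000, "rule": "brute-force login"}
logs = [
    {"host": "web01", "ts": 900, "src_ip": "203.0.113.7"},
    {"host": "web01", "ts": 1100, "src_ip": "198.51.100.4"},
    {"host": "web01", "ts": 5000, "src_ip": "203.0.113.7"},  # outside the window
    {"host": "db01", "ts": 1000, "src_ip": "203.0.113.7"},   # different host
]
threat_intel = {"203.0.113.7"}

report = build_context(alert, logs, threat_intel)
print(report["summary"])
```

The analyst receives one report per alert instead of searching each source by hand, which is the time saving the section describes.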

- Micro-Deviation Detection Model: Capturing “Inconspicuous” Abnormal Signals

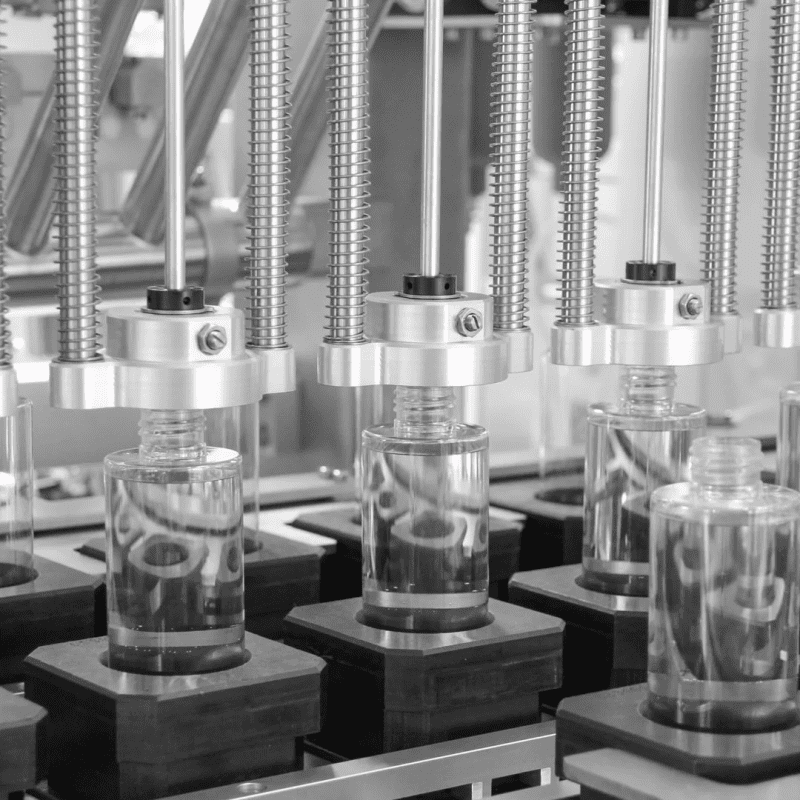

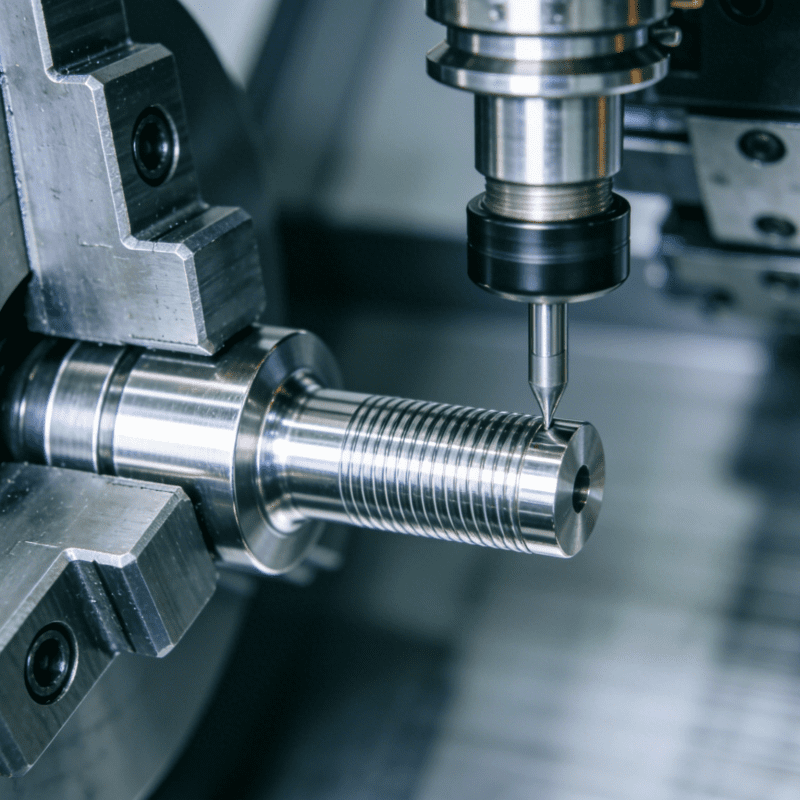

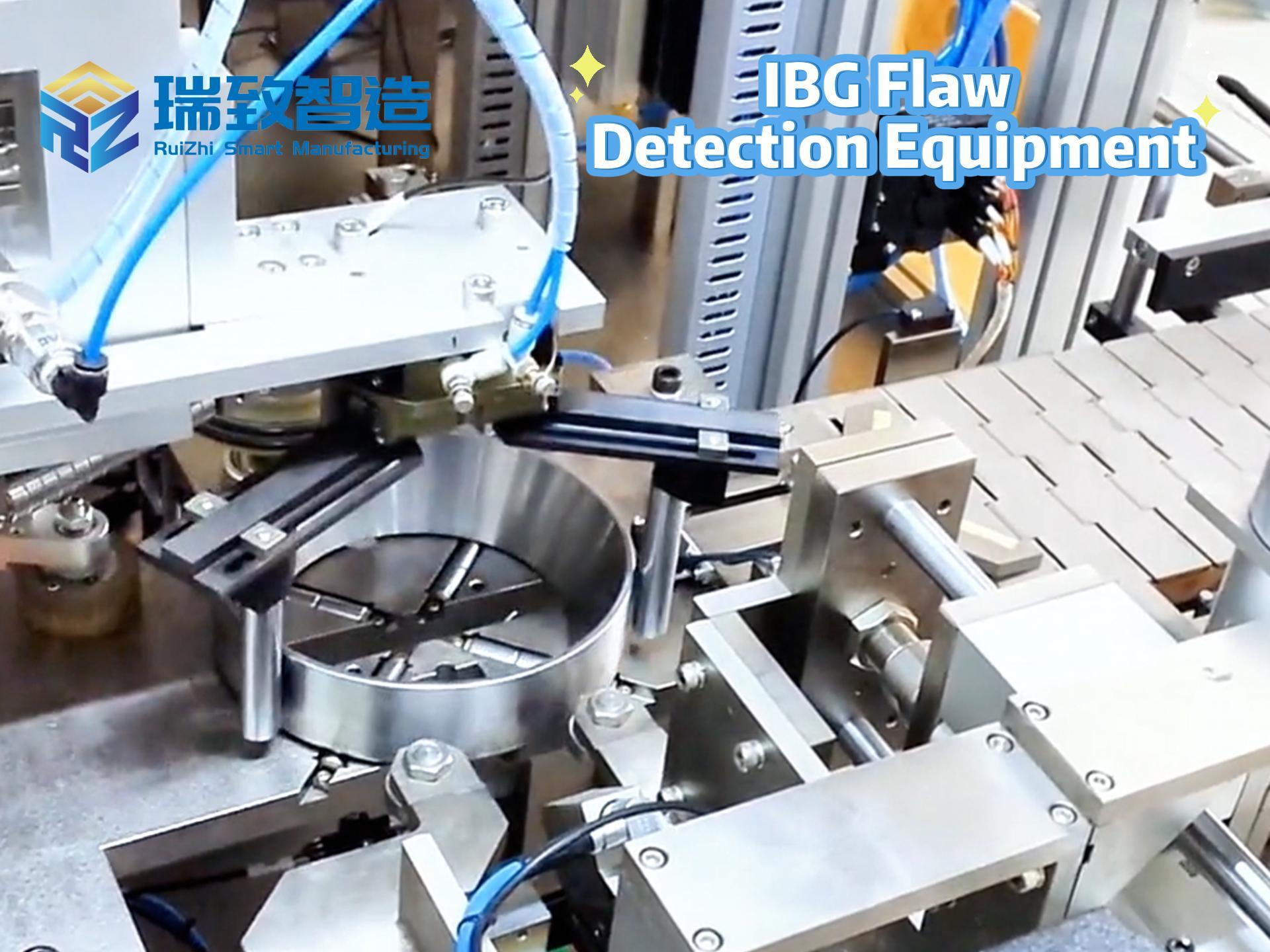

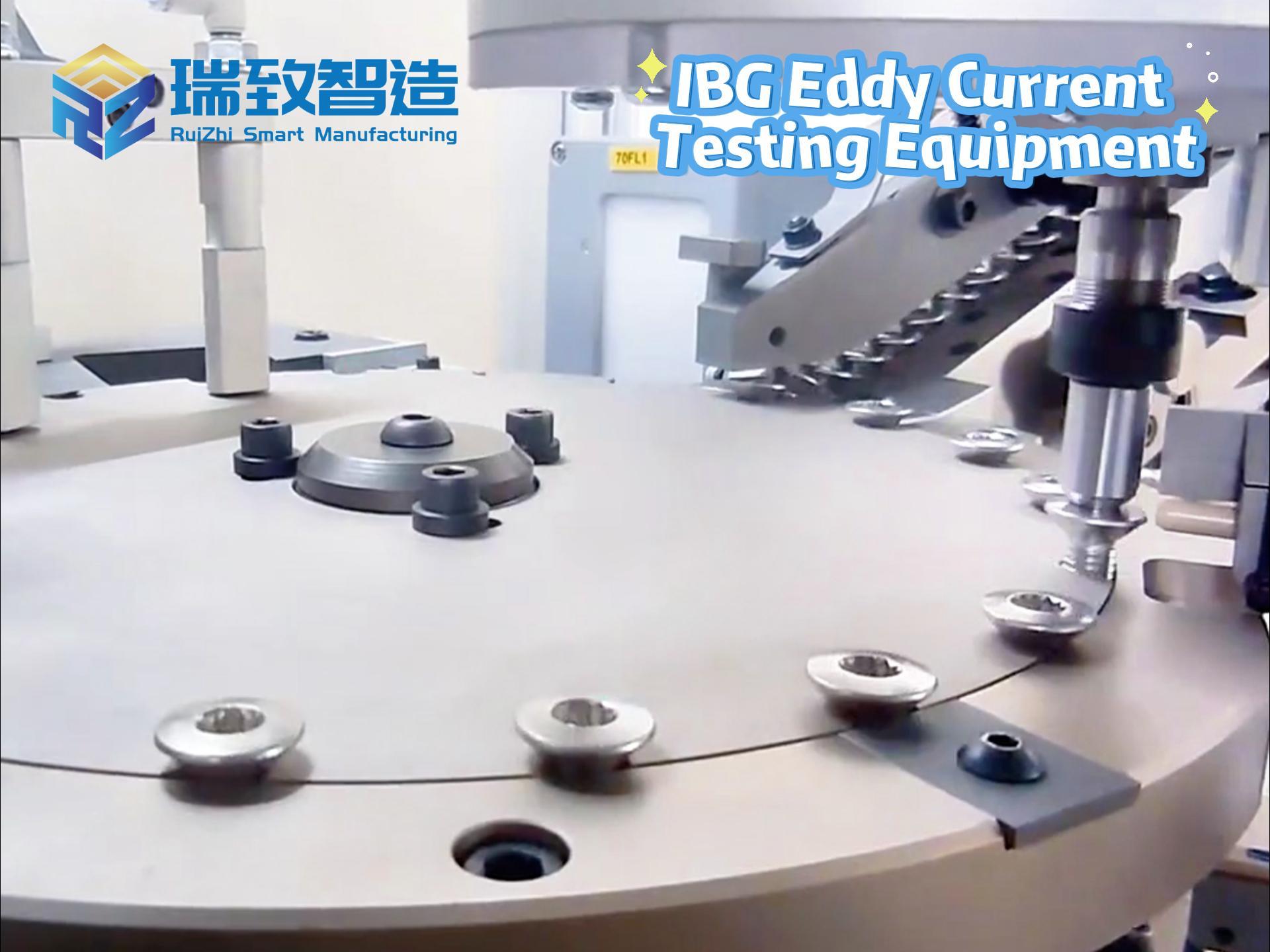

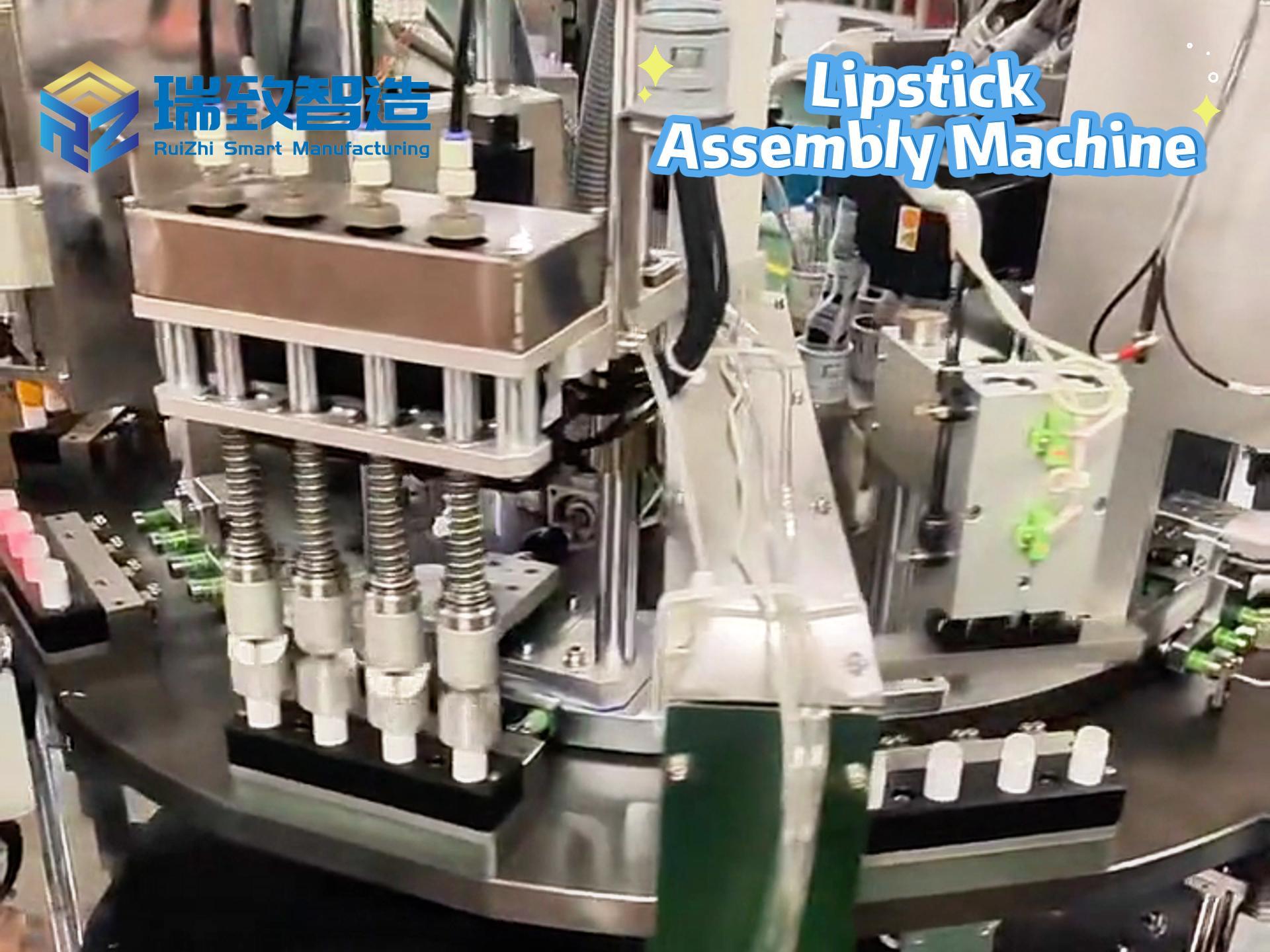

“Traditional systems only stare at ‘known bad,’ while AI can learn ‘normal good’ and identify the slightest traces of deviation from normal.” This is how Steve Tcherchian, CEO of security company XYPRO Technology, explains it. The AI model continuously learns the normal behavior baseline of systems, users, networks, and processes, such as employees’ regular login times, frequently used devices, and data access habits.

In medical device manufacturing, for example, such a model can help safeguard Biological Indicator Assembly Machines, the core equipment for producing biological indicators (key tools for verifying sterilization effectiveness in hospitals, pharmaceutical factories, and food processing facilities). These machines require very high precision in steps such as filling microbial culture medium and seal welding. The AI model learns their normal operating baselines, including assembly speed, pressure parameters for component bonding, and the frequency of real-time data transmission to the manufacturing execution system (MES). When an anomaly occurs, such as sudden jitter in the robotic arm during needle installation or unauthorized access to the control system to modify sterilization indicator parameters, the system immediately raises a risk alert. This helps prevent production defects caused by equipment malfunctions and also blocks cyberattacks that try to tamper with device programs and compromise the accuracy of sterilization detection, protecting the downstream medical and food industries.

As data accumulates, the model’s recognition accuracy continues to improve. It is especially good at capturing “micro-deviations” that humans or fixed-rule systems might overlook, which makes it a “detector” of hidden threats.
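One simple way to express "learn the baseline, then flag deviations" is a z-score test: measure how many standard deviations a new observation sits from the historical mean. The sketch below is an assumption-laden stand-in for the learned models described above; the login-hour data and the threshold of 3 are illustrative only.

```python
from statistics import mean, stdev

def build_baseline(samples):
    """Summarize normal behavior as (mean, sample standard deviation)."""
    return mean(samples), stdev(samples)

def deviation_score(value, baseline):
    """Distance from the baseline mean, in standard deviations."""
    mu, sigma = baseline
    if sigma == 0:
        return 0.0 if value == mu else float("inf")
    return abs(value - mu) / sigma

def is_anomalous(value, baseline, threshold=3.0):
    return deviation_score(value, baseline) > threshold

# Hypothetical history: the hour of day at which one employee logs in.
login_hours = [9, 9, 10, 8, 9, 10, 9, 8]
baseline = build_baseline(login_hours)

print(is_anomalous(10, baseline))  # within the usual range
print(is_anomalous(3, baseline))   # a 3 a.m. login
```

The same pattern applies to machine telemetry such as assembly speed or bonding pressure. Real deployments typically track many signals at once and update the baseline as new data arrives.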

- Automatic Alert Classification and Response: Solving the “Alert Flood” Problem

“A company with 1,000 employees may receive 200 security alerts in a single day, and each alert requires 20 minutes of manual investigation, meaning at least nine analysts are needed to handle them all.” This is the dilemma facing SMEs, as pointed out by Kumar Saurabh, CEO of managed detection company AirMDR. AI automates alert processing: the system first determines the nature of each alert, works with enterprise security tools to collect the necessary data, and separates alerts that require urgent handling from those that can be safely ignored. If an alert is malicious, the AI can also generate a remediation plan and notify the team in real time. This changes the situation in which most alerts are ignored and lets limited security staff focus on real threats.
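The staffing figure in the quote can be checked with simple arithmetic, assuming each analyst works an eight-hour shift (the shift length is an assumption, not stated in the quote). The same sketch adds a toy triage rule; the `risk_score` field, the allowlist flag, the 0.8 cut-off, and the intermediate "review" bucket are hypothetical placeholders for whatever a real classifier would output.

```python
import math
from dataclasses import dataclass

def analysts_needed(alerts_per_day, minutes_per_alert, shift_hours=8):
    """Analysts required to investigate every alert by hand."""
    total_minutes = alerts_per_day * minutes_per_alert
    return math.ceil(total_minutes / (shift_hours * 60))

@dataclass
class Alert:
    name: str
    risk_score: float        # 0.0-1.0, hypothetical classifier output
    matches_allowlist: bool  # e.g. a known, approved scanner

def triage(alert, urgent_at=0.8):
    """Sort an alert into ignore / urgent / review."""
    if alert.matches_allowlist:
        return "ignore"
    return "urgent" if alert.risk_score >= urgent_at else "review"

# 200 alerts x 20 minutes = 4000 minutes; one shift is 480 minutes.
print(analysts_needed(200, 20))
print(triage(Alert("credential stuffing", 0.93, False)))
print(triage(Alert("scheduled vulnerability scan", 0.40, True)))
```

Once the "ignore" bucket is removed automatically, the manual workload is set by the remaining alerts rather than by the full 200.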

- Proactive Generative Deception: Setting “Digital Traps” for Attackers

“Rather than defending passively, it’s better to actively dig pits for attackers.” This is the idea behind AI proactive generative deception, proposed by Gyan Chawdhary, CEO of cybersecurity training company Kontra. The technique goes beyond traditional honeypots, which are single baits: AI continuously creates realistic fake network segments, fake data, and fake user behaviors, in effect building a “digital maze” for attackers. Attackers waste time and resources exploring decoy systems, stealing fake data, and analyzing forged traffic, while defenders use the opportunity to collect intelligence on their tactics, techniques, and procedures (TTPs) and to gain valuable remediation time. The barrier to entry is high, however: the approach requires powerful cloud infrastructure, GPU resources, a professional team of AI/ML engineers and security architects, and diverse network traffic datasets to train the AI so that the decoys are sufficiently realistic.
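The bookkeeping around decoys can be sketched without any AI at all. In the outline below, a seeded random generator stands in for the generative model that would produce realistic assets, and the subnet, hostname pattern, and event fields are all hypothetical. The point it illustrates is that any connection to a decoy is high-signal, because legitimate users have no reason to touch one.

```python
import ipaddress
import random

def make_decoys(subnet, count, seed=0):
    """Pick `count` addresses in `subnet` and give each a plausible fake hostname."""
    rng = random.Random(seed)
    hosts = list(ipaddress.ip_network(subnet).hosts())
    roles = ["db", "files", "hr", "build"]
    return {
        str(ip): f"{rng.choice(roles)}-{rng.randrange(10, 100)}"
        for ip in rng.sample(hosts, count)
    }

def observe(decoys, event, intel_log):
    """Record any connection to a decoy and report whether one was touched."""
    if event["dst_ip"] not in decoys:
        return False
    intel_log.append({
        "src_ip": event["src_ip"],
        "decoy": decoys[event["dst_ip"]],
        "action": event["action"],
    })
    return True

decoys = make_decoys("10.0.50.0/28", 3)
intel_log = []
decoy_ip = next(iter(decoys))

# An attacker probing the fake segment leaves a trace of what was tried.
observe(decoys, {"src_ip": "192.0.2.9", "dst_ip": decoy_ip, "action": "ssh login"}, intel_log)
print(intel_log)
```

The log entries are the raw material for the TTP intelligence mentioned above. The expensive part described in the text is making the decoys convincing, which this sketch does not attempt.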

Conclusion: AI Transforms Cybersecurity from “Passive” to “Active”

From predicting attacks to actively deceiving attackers, AI is moving cybersecurity from after-the-fact remediation toward prevention before the event and active control. AI is not omnipotent, however: it depends on high-quality data and can itself be exploited by attackers, for example in AI-generated deepfake attacks. In the future, competition in cybersecurity will essentially be competition in AI defense capabilities, and whoever better empowers defense with AI will hold the initiative.
