One-shot learning-driven autonomous robotic assembly via human-robot symbiotic interaction

Abstract

In multi-procedure robotic assembly, where robots must assemble components in sequence, traditional programming is labor-intensive and end-to-end learning struggles with the vast task space. This paper introduces a one-shot learning-from-demonstration (LfD) framework that uses third-person visual observations to minimize human intervention and improve adaptability. We propose three components: an object-centric representation that preprocesses human assembly demonstrations captured by RGB-D cameras; a kinetic energy-based changepoint detection algorithm that segments the demonstration into procedures and decodes human intent; and a demo-trajectory adaptation-enhanced dynamical movement primitive (DA-DMP) that improves the generalization of motion skills. Validated on a robotic assembly platform, the system learns the assembly sequence accurately from a single demonstration, plans motions efficiently, and achieves a 93.3% success rate, advancing trustworthy human–machine symbiotic manufacturing aligned with human-centered automation.
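To make the segmentation idea concrete, the following is a minimal sketch of how a kinetic-energy signal could split a tracked object's motion into procedures. It is not the paper's changepoint detection algorithm: it assumes a simple fixed threshold, unit mass, and a hypothetical 3-D position track sampled at a fixed interval, and the function names `kinetic_energy` and `segment_by_energy` are illustrative only.

```python
from math import dist

def kinetic_energy(positions, dt, mass=1.0):
    """Per-step kinetic energy 0.5*m*|v|^2 from finite-difference velocities."""
    return [0.5 * mass * (dist(p, q) / dt) ** 2
            for p, q in zip(positions, positions[1:])]

def segment_by_energy(energy, threshold):
    """Return (start, end) index pairs of contiguous runs where energy > threshold."""
    segments, start = [], None
    for i, e in enumerate(energy):
        if e > threshold and start is None:
            start = i                      # object starts moving
        elif e <= threshold and start is not None:
            segments.append((start, i))    # object comes to rest
            start = None
    if start is not None:                  # track ended while still moving
        segments.append((start, len(energy)))
    return segments

# Hypothetical track: rest, move along x, rest, move along y, rest.
track = (
    [(0.0, 0.0, 0.0)] * 3
    + [(0.1 * k, 0.0, 0.0) for k in range(1, 4)]
    + [(0.3, 0.0, 0.0)] * 3
    + [(0.3, 0.1 * k, 0.0) for k in range(1, 3)]
    + [(0.3, 0.2, 0.0)] * 2
)
energy = kinetic_energy(track, dt=0.1)
segments = segment_by_energy(energy, threshold=0.01)
print(segments)
```

Each returned pair marks one candidate procedure, that is, one interval in which the object is being moved. A real demonstration would need smoothing of the noisy RGB-D track and a more robust changepoint criterion than a single threshold.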

Introduction

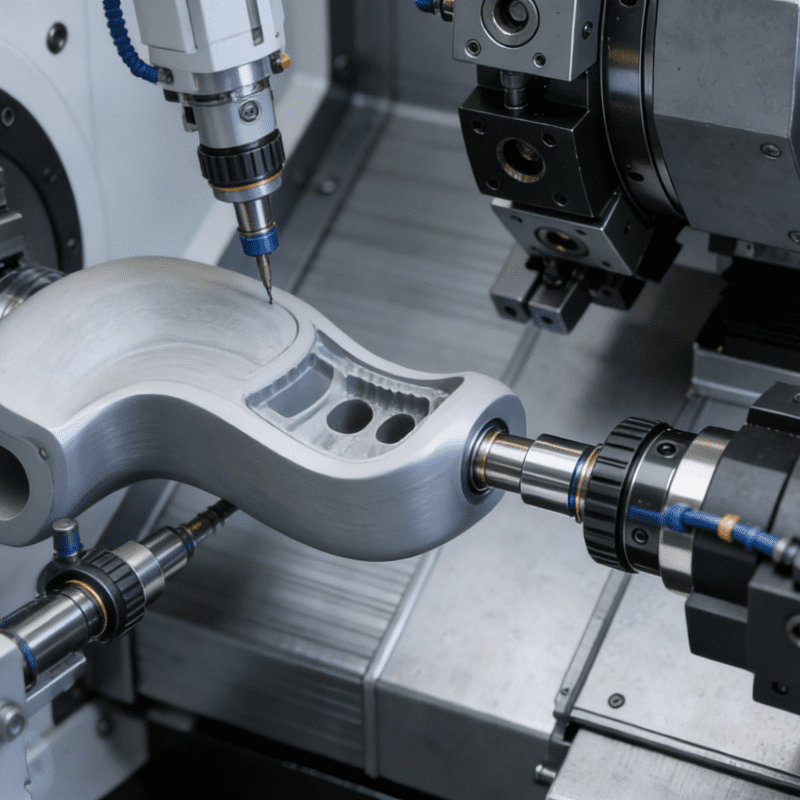

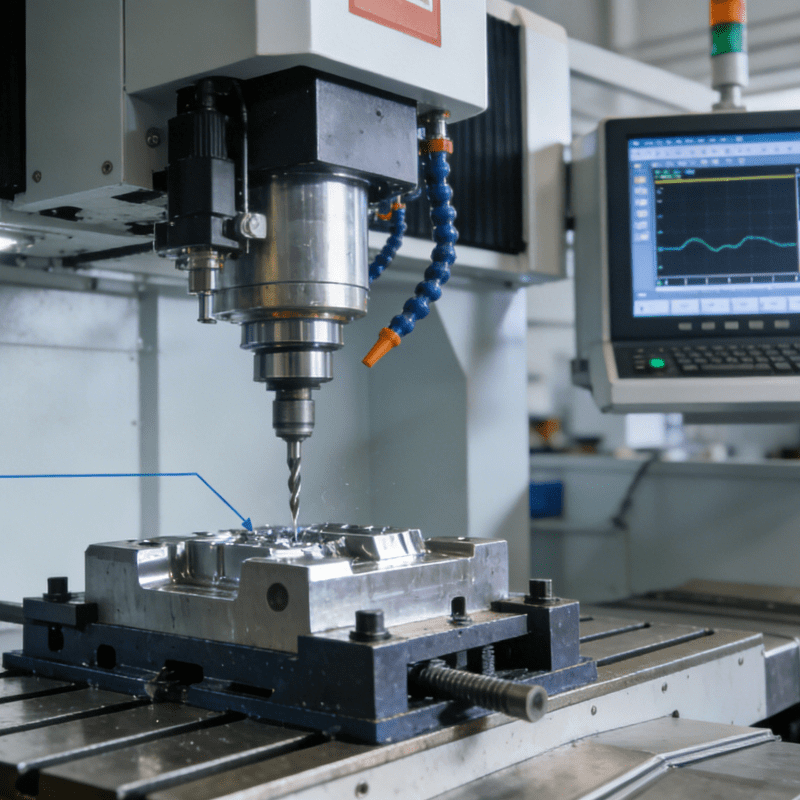

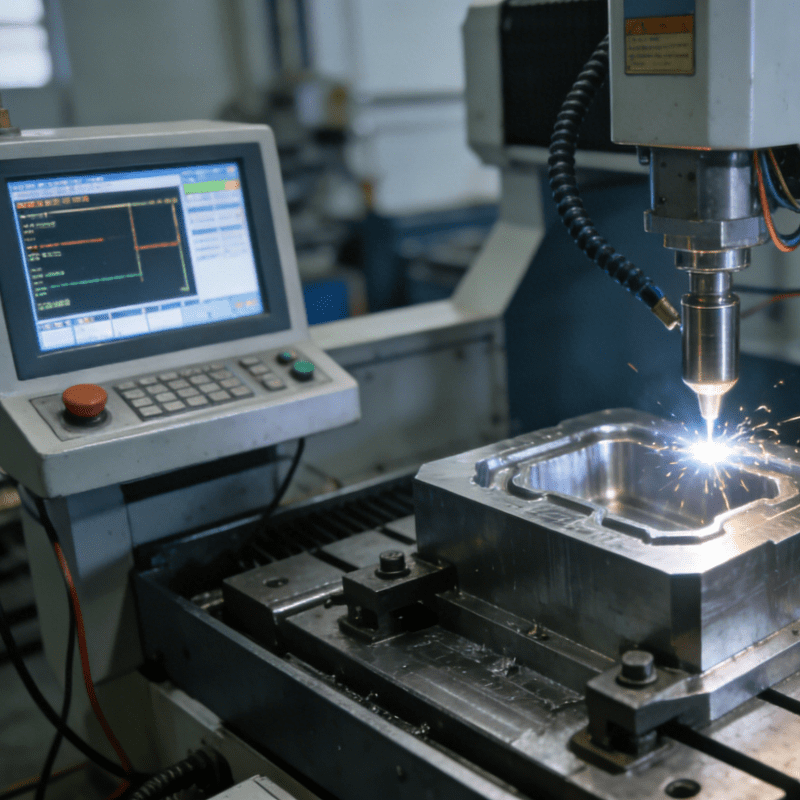

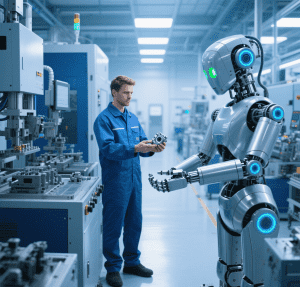

Under Industry 5.0’s human-centric manufacturing paradigm, industrial robots must adapt intuitively to dynamic environments, particularly in assembly tasks, which account for 50% of manufacturing time and 30% of costs. Manual programming is reliable but depends on expert knowledge, while end-to-end learning demands extensive data and training. Learning from Demonstration (LfD) bridges this gap by transferring skills through imitation. Among LfD paradigms, passive visual observation stands out for multi-procedure tasks because it requires no physical guidance or teleoperation, which makes it well suited to natural human–robot symbiosis.

This paper presents a one-shot LfD approach for long-horizon assembly tasks using third-person visual data. By extracting object-centric representations, automating procedure segmentation, and enhancing motion primitives, the framework empowers robots to learn complex assembly sequences from a single demonstration. Unlike prior work requiring multiple trials or hybrid teaching methods, our approach minimizes human effort and facilitates rapid deployment in real-world manufacturing, as validated in a seven-part shaft-gear assembly case study.
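For readers unfamiliar with motion primitives, the sketch below shows a plain one-dimensional dynamic movement primitive learned from a single demonstrated trajectory and replayed toward a new goal. It illustrates the standard DMP formulation only, not the DA-DMP variant proposed in the paper; the gains, the number of basis functions, the basis-width heuristic, and the minimum-jerk demonstration are all assumptions chosen for illustration.

```python
from math import exp

ALPHA_Z, BETA_Z, ALPHA_X = 25.0, 6.25, 4.0  # commonly used gains (assumed here)

def basis(x, n):
    """Gaussian basis activations with centers spaced along the decaying phase x."""
    centers = [exp(-ALPHA_X * i / (n - 1)) for i in range(n)]
    widths = [n ** 1.5 / c / ALPHA_X for c in centers]  # heuristic widths
    return [exp(-h * (x - c) ** 2) for c, h in zip(centers, widths)]

def gradient(seq, dt):
    """Central differences in the interior, one-sided at both ends."""
    last = len(seq) - 1
    return [(seq[min(k + 1, last)] - seq[max(k - 1, 0)])
            / (dt * (min(k + 1, last) - max(k - 1, 0))) for k in range(len(seq))]

def learn_weights(demo, dt, n=10):
    """Fit forcing-term weights to one demonstration by locally weighted regression."""
    tau = dt * (len(demo) - 1)
    y0, g = demo[0], demo[-1]
    vel = gradient(demo, dt)
    acc = gradient(vel, dt)
    num, den = [0.0] * n, [0.0] * n
    for k, (y, yd, ydd) in enumerate(zip(demo, vel, acc)):
        x = exp(-ALPHA_X * k * dt / tau)            # phase variable
        s = x * (g - y0)                            # forcing-term scaling
        f_target = tau ** 2 * ydd - ALPHA_Z * (BETA_Z * (g - y) - tau * yd)
        for i, psi in enumerate(basis(x, n)):
            num[i] += s * psi * f_target
            den[i] += s * s * psi
    return [a / (b + 1e-10) for a, b in zip(num, den)], tau

def rollout(weights, tau, y0, g, dt, duration):
    """Integrate the DMP toward goal g with explicit Euler steps."""
    y, z, path = y0, 0.0, [y0]
    for k in range(round(duration / dt)):
        x = exp(-ALPHA_X * k * dt / tau)
        psi = basis(x, len(weights))
        f = sum(p * w for p, w in zip(psi, weights)) / (sum(psi) + 1e-10) * x * (g - y0)
        z += dt * (ALPHA_Z * (BETA_Z * (g - y) - z) + f) / tau
        y += dt * z / tau
        path.append(y)
    return path

# Hypothetical demonstration: minimum-jerk motion from 0 to 1 in one second.
dt = 0.01
demo = [10 * t**3 - 15 * t**4 + 6 * t**5 for t in (k * dt for k in range(101))]
weights, tau = learn_weights(demo, dt)
path = rollout(weights, tau, y0=0.0, g=2.0, dt=dt, duration=2 * tau)
print(round(path[-1], 3))
```

The rollout runs for twice the demonstrated duration so that the phase variable decays and the spring-damper term settles on the new goal. Because the forcing term is scaled by the distance between start and goal, the same weights reproduce the demonstrated shape for a different target, which is the generalization property that motion-primitive methods build on.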

Conclusion

The proposed one-shot LfD framework redefines multi-procedure robotic assembly by integrating visual perception, automatic task segmentation, and adaptive motion planning. By enabling robots to learn from a single human demonstration, the system reduces programming overhead, enhances adaptability to new scenarios, and achieves a 93.3% success rate in real-world testing. This advances human–machine symbiosis in manufacturing, aligning with Industry 5.0’s vision of intuitive, efficient production.

For broader industrial applicability, the framework’s object-centric design and adaptive motion primitives could extend to bathroom-component assembly machines, where multi-procedure tasks (e.g., installing faucets, aligning pipes, and securing fixtures) require precise, sequential operations. With the one-shot LfD approach, such machines could rapidly learn to assemble diverse bathroom components, from sinks to shower systems, with minimal human intervention, adding flexibility to customized manufacturing while maintaining high precision and throughput. This suggests the methodology could benefit not only automotive or mechanical assembly but also specialized industries that require intricate, multi-step robotic manipulation.