Enterprise artificial intelligence is at a critical turning point. Although major enterprises have invested billions of dollars in large models and AI applications, a fundamental infrastructure challenge now decides which of them can actually implement AI at scale.

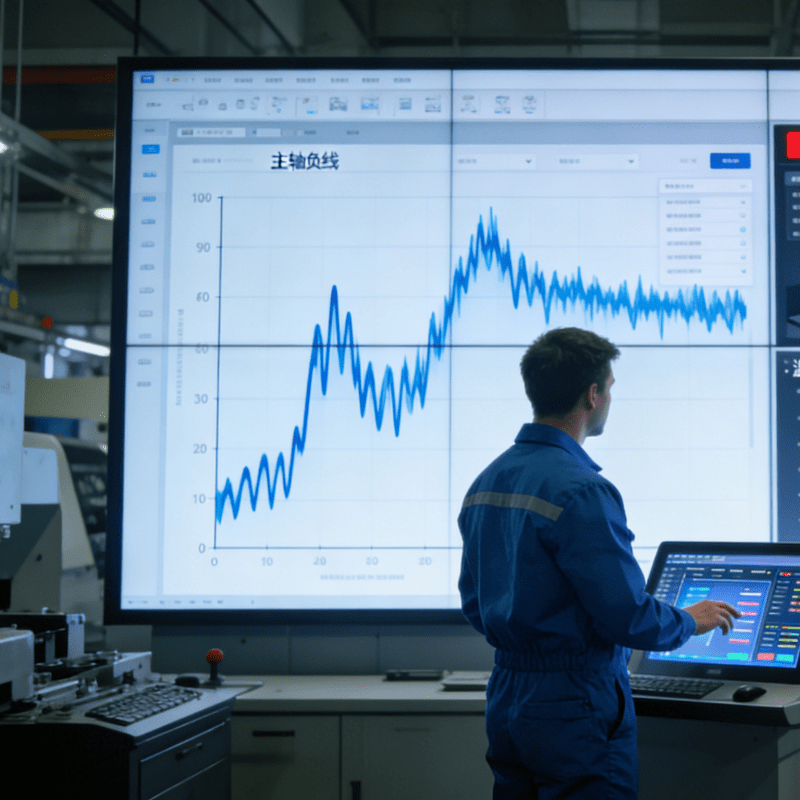

Artificial intelligence is widely expected to add trillions of dollars to the global economy, but the reality is less encouraging. According to surveys by IDC and Chinese research institutions, more than 80% of enterprise AI projects remain stuck in the pilot phase and never reach large-scale production deployment. The obstacle is not only computing power or model complexity but also the tension between AI’s need for broad data access and enterprises’ security and compliance requirements.

The AI Security Crisis

As AI spreads through enterprises, the limitations of traditional security systems are becoming apparent. AI introduces new kinds of security vulnerabilities:

Data leakage from a government services large model: During testing of a local government’s large-model application for public services, the lack of strict conversation filtering caused summaries of internal documents to be returned to ordinary users, leaking sensitive information.

A “low-price loophole” in an e-commerce customer service bot: Users manipulated the AI customer service agent of a leading e-commerce platform with crafted prompts, inducing it to generate discounts at extremely low prices. This triggered a large number of abnormal orders and caused direct financial losses.

An AI operations mishap at an Internet company: During the production launch, an internal AI operations and maintenance assistant with insufficient permission controls was inadvertently triggered by employees to batch-delete test data. The deletion affected the core business database and took the system down for several hours.

Risks of large model plug-in protocols such as MCP (Model Context Protocol): Chinese security researchers have found that indirect prompt injection and plug-in abuse can induce an AI to call internal interfaces beyond its authorization, obtain sensitive data, or perform unauthorized operations in enterprise systems.

These cases highlight the so-called “AI security paradox”: the more data an AI system can access, the more valuable it becomes, but the more sharply its risk grows.

Traditional enterprise architectures are designed around the predictable access patterns of human users. AI systems, especially RAG (retrieval-augmented generation) applications and autonomous agents, instead need to access massive amounts of unstructured data in real time, integrate dynamically across multiple systems, and make independent decisions while remaining compliant. This new access model poses significant security and governance challenges. Even relatively mature automated equipment, such as a 4-axis robotic tray loading system, can expose production parameters or logistics information to unauthorized access once it is connected to an AI scheduling system whose data-exchange permissions are poorly designed.

Regulation is tightening at the same time. China’s Data Security Law and Personal Information Protection Law set higher standards for data compliance. A leading Chinese financial institution was criticized by regulators for failing to effectively desensitize sensitive data in its AI pilots, a sign that compliance has become a genuine “hard threshold” for AI deployment.

Five Strategic Points for Secure AI Deployment

To address these challenges, organizations preparing for large-scale AI deployment should focus on the following five aspects:

Review data access patterns comprehensively

Before introducing an AI system, inventory the existing data flows, map how information moves through the enterprise, and identify where sensitive data could be exposed.
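As a minimal sketch of this mapping step, the Python below models a data-flow inventory as a directed graph and lists every path from a sensitive source to an AI-facing consumer. The system names (`hr_db`, `rag_index`, and so on) and the helper `exposure_paths` are hypothetical; a real inventory would come from data-lineage tooling or interviews with system owners.

```python
from collections import deque

# Hypothetical data-flow inventory: each edge means "data moves from A to B".
# System names and sensitivity labels are illustrative assumptions.
DATA_FLOWS = {
    "hr_db": ["payroll_etl"],
    "payroll_etl": ["data_lake"],
    "crm": ["data_lake"],
    "data_lake": ["rag_index"],
    "wiki": ["rag_index"],
    "rag_index": ["ai_assistant"],
    "ops_logs": ["monitoring"],
}

SENSITIVE_SOURCES = {"hr_db", "crm"}
AI_CONSUMERS = {"ai_assistant"}


def exposure_paths(flows, sources, sinks):
    """Return every simple path from a sensitive source to an AI consumer.

    Each path is a potential exposure point to review before go-live.
    """
    paths = []
    for src in sorted(sources):
        # Breadth-first search over partial paths; each queue entry is the path so far.
        queue = deque([[src]])
        while queue:
            path = queue.popleft()
            node = path[-1]
            if node in sinks:
                paths.append(path)
                continue
            for nxt in flows.get(node, []):
                if nxt not in path:  # skip nodes already on this path to avoid cycles
                    queue.append(path + [nxt])
    return paths


if __name__ == "__main__":
    for p in exposure_paths(DATA_FLOWS, SENSITIVE_SOURCES, AI_CONSUMERS):
        print(" -> ".join(p))
```

With this sample inventory, both sensitive sources reach the assistant through `data_lake` and `rag_index`, which makes those shared hops natural places to concentrate filtering controls.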

Build complete traceability

Embed traceability from the design stage so that every AI decision can be traced back to its data sources and reasoning, meeting compliance, audit, and troubleshooting needs.
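One way to make decisions traceable is an append-only audit log in which each entry records the request, the data sources used, and the rationale, and is chained to the previous entry by a SHA-256 hash so that later tampering is detectable. The following is an illustrative sketch rather than a prescribed design; the `AuditLog` class and its field names are assumptions.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body):
    # Hash canonical JSON (sorted keys) so the same content always yields the same hash.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditLog:
    """Append-only, hash-chained log of AI decisions (hypothetical sketch)."""
    entries: list = field(default_factory=list)

    def record(self, request_id, user, sources, decision, rationale):
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "request_id": request_id,
            "user": user,
            "sources": sorted(sources),  # data the answer was built from
            "decision": decision,
            "rationale": rationale,      # e.g. the policy rule or retrieval trace
            "prev_hash": prev_hash,      # links this entry to the one before it
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute the chain; any edited or reordered entry makes this False."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True

    def trace(self, request_id):
        """Return the data sources behind a given decision, or None if unknown."""
        for entry in self.entries:
            if entry["request_id"] == request_id:
                return entry["sources"]
        return None
```

In practice such a log would be persisted to write-once storage, and the rationale would point to the retrieval trace or policy rule that produced the answer; the in-memory list here only demonstrates the chaining idea.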

Adopt standardized protocols

Track emerging Chinese and international AI security and data governance standards, and favor solutions built for forward compatibility to reduce later integration and migration costs.

Go beyond traditional RBAC (Role-Based Access Control)

Introduce semantic data classification and context-aware access controls that consider not only “who” is asking but also “in what scenario” the AI may access which data.
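To illustrate the difference, the sketch below compares a plain role check with a context-aware check that also considers the purpose of the request and the channel it arrives through. The labels, roles, purposes, and channel names are hypothetical examples, and the rules are deliberately simplified.

```python
from dataclasses import dataclass

# Hypothetical semantic labels assigned by a data classifier.
CLASSIFICATION = {
    "quarterly_revenue.xlsx": "financial_confidential",
    "product_faq.md": "public",
    "employee_salaries.csv": "personal_sensitive",
}

# Plain RBAC: role -> labels the role may read. Context is ignored.
RBAC = {
    "analyst": {"public", "financial_confidential"},
    "hr_manager": {"public", "personal_sensitive"},
    "customer": {"public"},
}

# Hypothetical scenario rules: which business purposes justify each sensitive label.
ALLOWED_PURPOSES = {
    "financial_confidential": {"financial_reporting"},
    "personal_sensitive": {"payroll_review"},
}


@dataclass(frozen=True)
class Context:
    role: str     # the "who" that classic RBAC checks
    purpose: str  # the business scenario behind the request
    channel: str  # e.g. "internal_assistant" or "public_chatbot"


def rbac_allows(ctx, doc):
    return CLASSIFICATION.get(doc) in RBAC.get(ctx.role, set())


def context_allows(ctx, doc):
    """The role must permit the label AND the request context must fit it."""
    label = CLASSIFICATION.get(doc)
    if label is None:
        return False  # unclassified data is denied by default
    if not rbac_allows(ctx, doc):
        return False
    if label == "public":
        return True
    # Sensitive labels are served only on internal channels and for matching purposes.
    return (ctx.channel == "internal_assistant"
            and ctx.purpose in ALLOWED_PURPOSES.get(label, set()))
```

With these rules, an analyst's role alone admits quarterly revenue data, but the context-aware check blocks it when the request arrives through a public chatbot for marketing copy.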

Implement a governance-first architecture

Deploy governance and security infrastructure before the AI application goes live, avoiding the reactive pattern of “launch the business first, patch security later”.

Security Connector and Security Inference Layer

Developing a governance-first architecture requires enterprises to fundamentally rethink how artificial intelligence systems access enterprise data.

Instead of connecting AI applications directly to data sources, a governance-first architecture places two cooperating components between them, a security connector and a security inference layer, which together provide intelligent filtering, real-time authorization, and comprehensive governance.

Security Connector: This acts as an “intelligent gateway” for AI. Besides connecting to data sources, it performs real-time authorization: it interprets the semantics of each request and dynamically decides whether to release data based on user identity, data classification, and business context.

Security Inference Layer: This layer performs permission verification and rule checks before data enters the AI model. It can apply additional text-based security policies on top to ensure that sensitive information is not mishandled or disseminated.
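The two layers can be sketched as a small Python pipeline, assuming the set of labels a user may see (`clearance`) has already been computed from identity, data classification, and business context. The connector withholds records whose label falls outside that set; the inference layer then masks sensitive patterns in the released text before it is assembled into a prompt. All names are hypothetical, and the regular expressions (an 18-character mainland resident ID number and an 11-digit mobile number) stand in for a proper sensitive-data detector.

```python
import re
from dataclasses import dataclass


@dataclass
class Record:
    source: str
    label: str  # data classification, e.g. "public", "internal", "restricted"
    text: str


@dataclass
class Request:
    user: str
    clearance: set  # labels this user may see in the current business context
    query: str


def security_connector(request, records):
    """Layer 1: decide which records may leave the data source at all."""
    released = [r for r in records if r.label in request.clearance]
    withheld = [r.source for r in records if r.label not in request.clearance]
    return released, withheld


# Illustrative text-level policies; a real system would use a dedicated detector.
PATTERNS = {
    "ID_NUMBER": re.compile(r"\b\d{17}[\dXx]\b"),
    "PHONE": re.compile(r"\b1\d{10}\b"),
}


def security_inference_layer(records):
    """Layer 2: mask sensitive spans right before the model sees the text."""
    cleaned = []
    for r in records:
        text = r.text
        for name, pattern in PATTERNS.items():
            text = pattern.sub(f"[{name}]", text)
        cleaned.append(text)
    return cleaned


def build_prompt(request, records):
    released, withheld = security_connector(request, records)
    context = security_inference_layer(released)
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {request.query}"
    return prompt, withheld  # withheld sources can be sent to the audit trail
```

Keeping the two checks separate means a record that passes authorization can still have individual fields masked, while the `withheld` list records what the connector blocked.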

This two-layer protection architecture completes governance and security checks before data reaches the AI, achieving genuine “shift-left” security. It introduces some performance overhead, but it can greatly reduce compliance and security risks in large-scale AI deployment.

The Evolution of AI Governance

The evolution of AI security architecture is more than a technology upgrade: it marks a shift in infrastructure paradigms. Just as the Internet needed security protocols and cloud computing needed identity management, enterprise AI needs a governance system of its own.

The “exploratory data behavior” that AI exhibits lets it dynamically discover and connect data silos within an enterprise that were previously isolated. This capability is both a source of value and a source of risk. If Chinese enterprises want to fully realize AI’s potential, they must treat security and governance as the “first principle” of their deployment strategy rather than an after-the-fact remedy.