Genie Envisioner (GE) Platform Achieves End-to-End Reasoning for Robots’ “Perception-Decision-Execution”

The Genie Envisioner (GE) platform innovatively integrates future frame prediction, strategy learning, and simulation evaluation into a closed-loop architecture centered on video generation, achieving for the first time an end-to-end reasoning process for robots to complete from perception to decision-making and then to execution within the same world model.

Zhiyuan Robotics Unveils GE Platform with Full Open-Source Commitment

Recently, Zhiyuan Robotics launched the industry’s first unified world model platform for real-world robot manipulation, Genie Envisioner (GE), and announced that it will open-source all codes, pre-trained models, and evaluation tools.

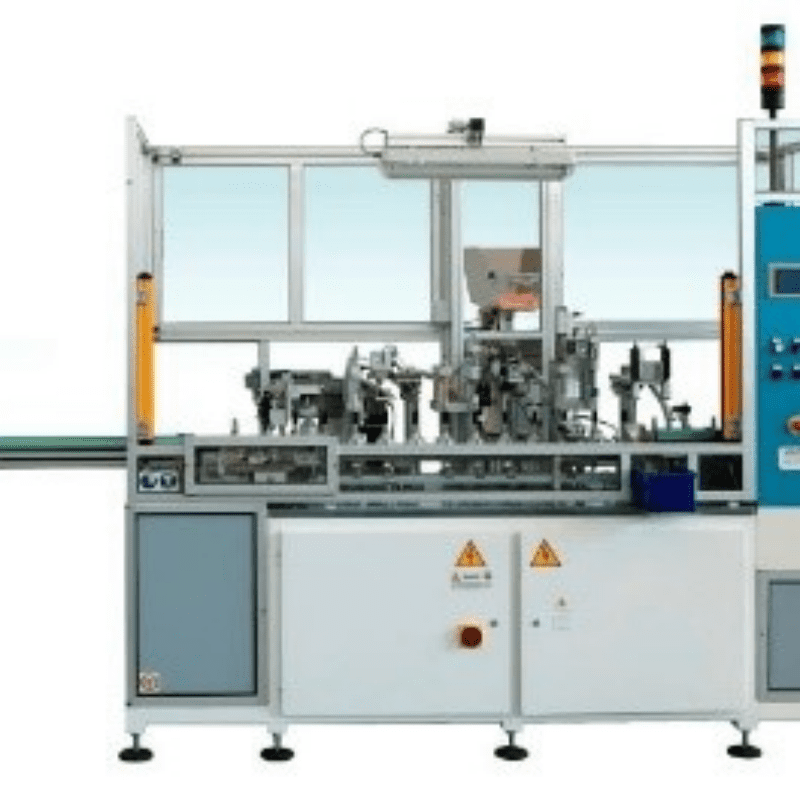

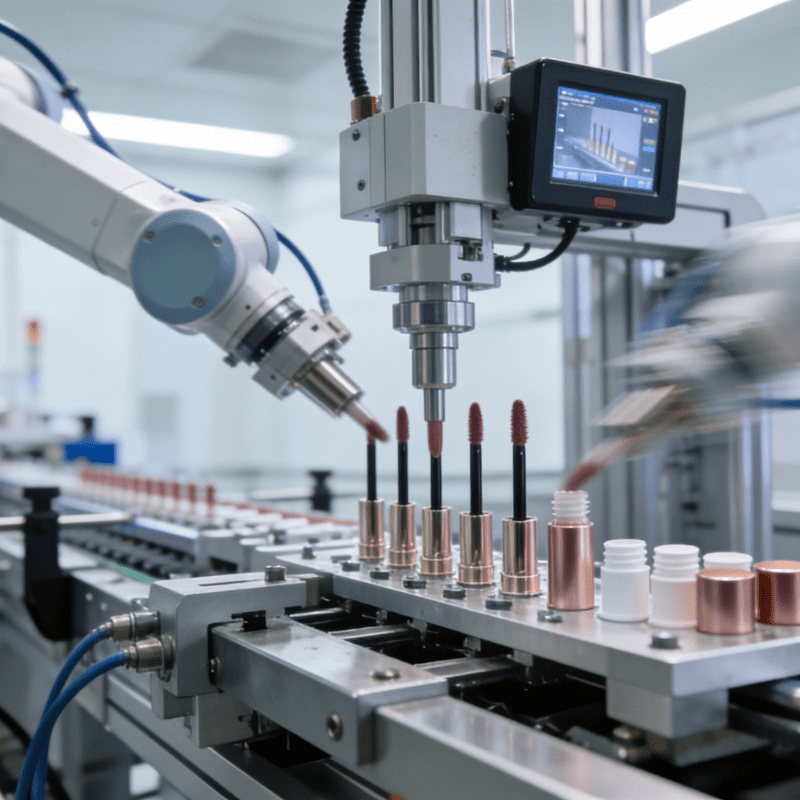

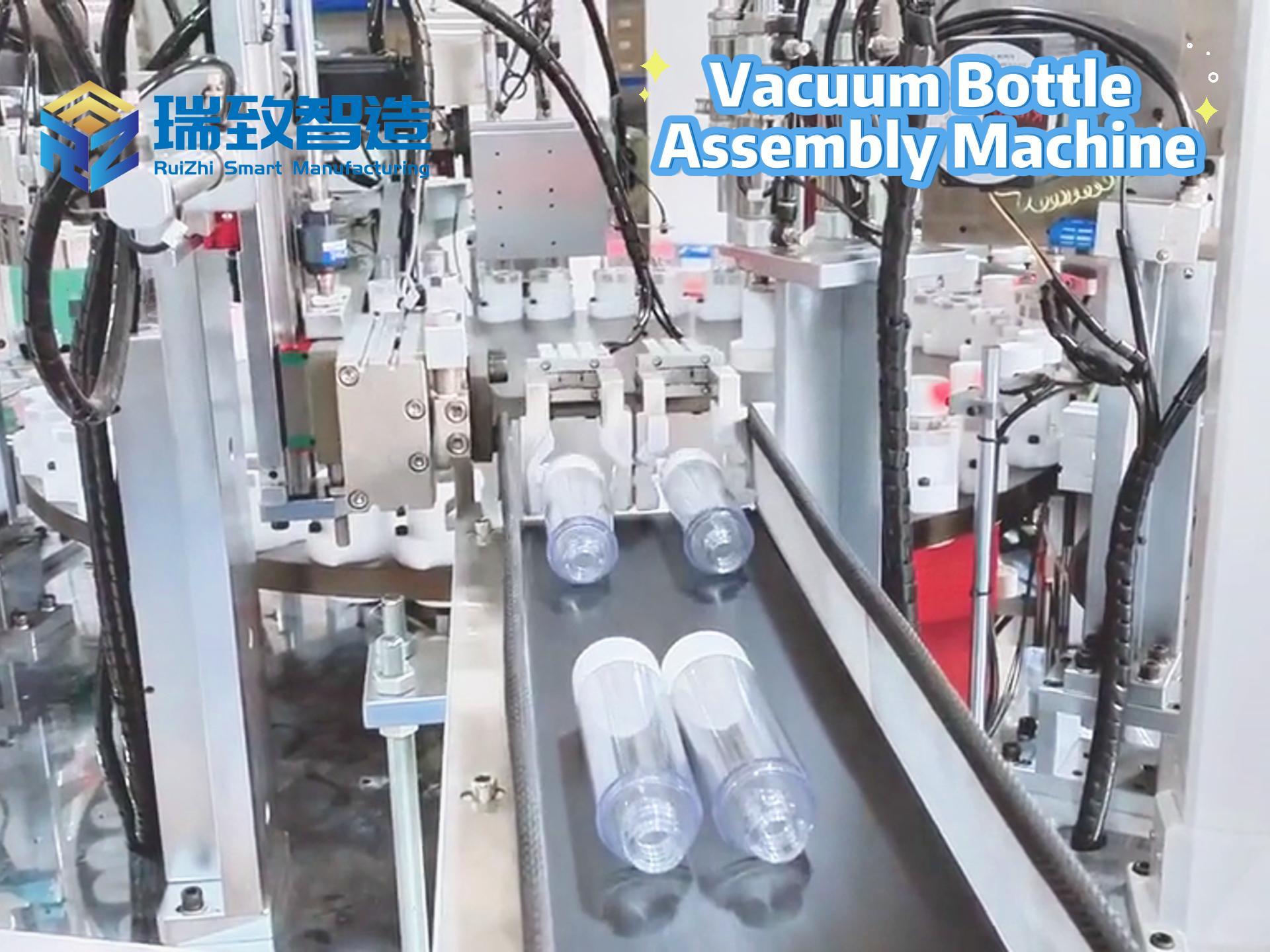

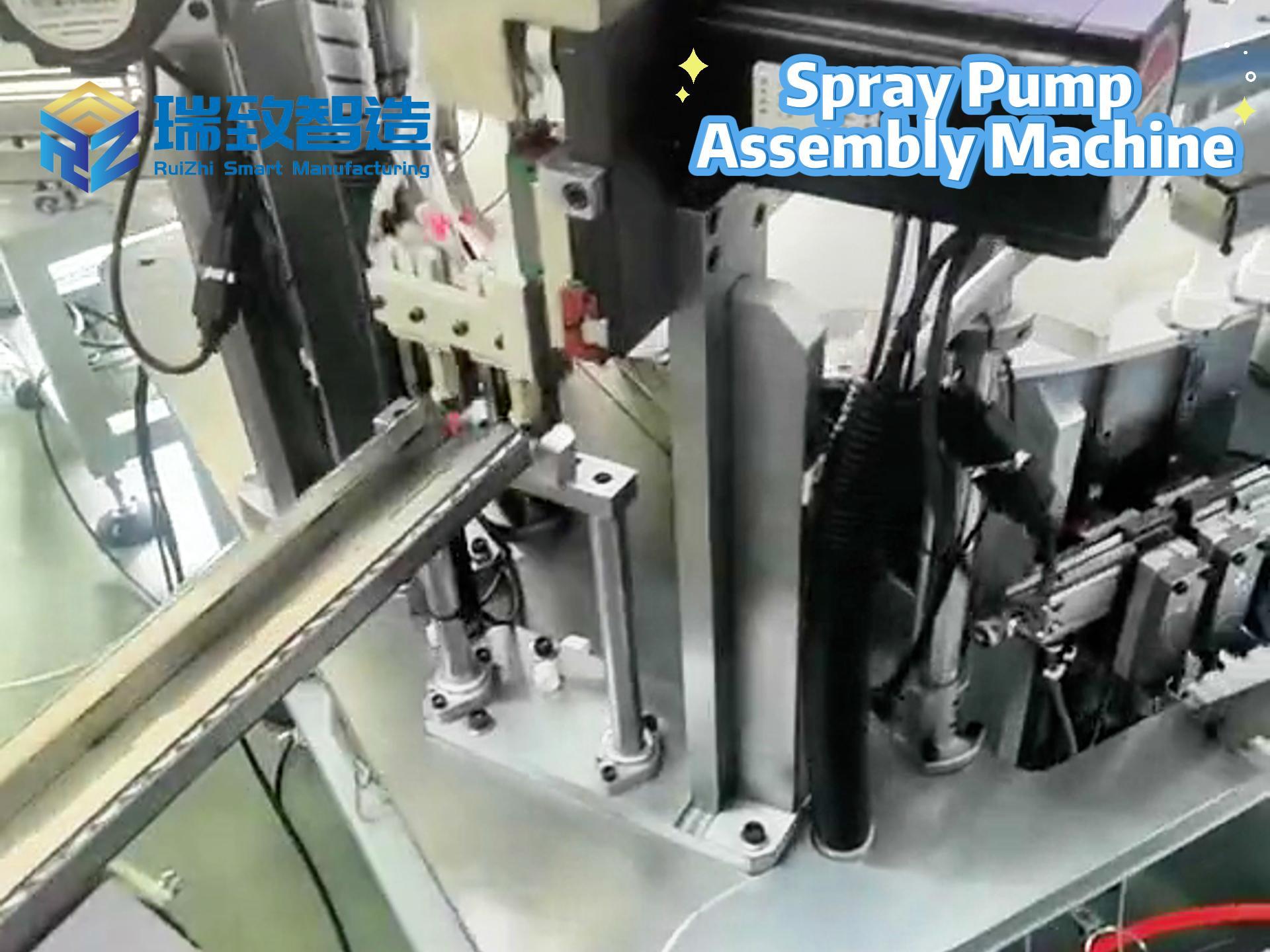

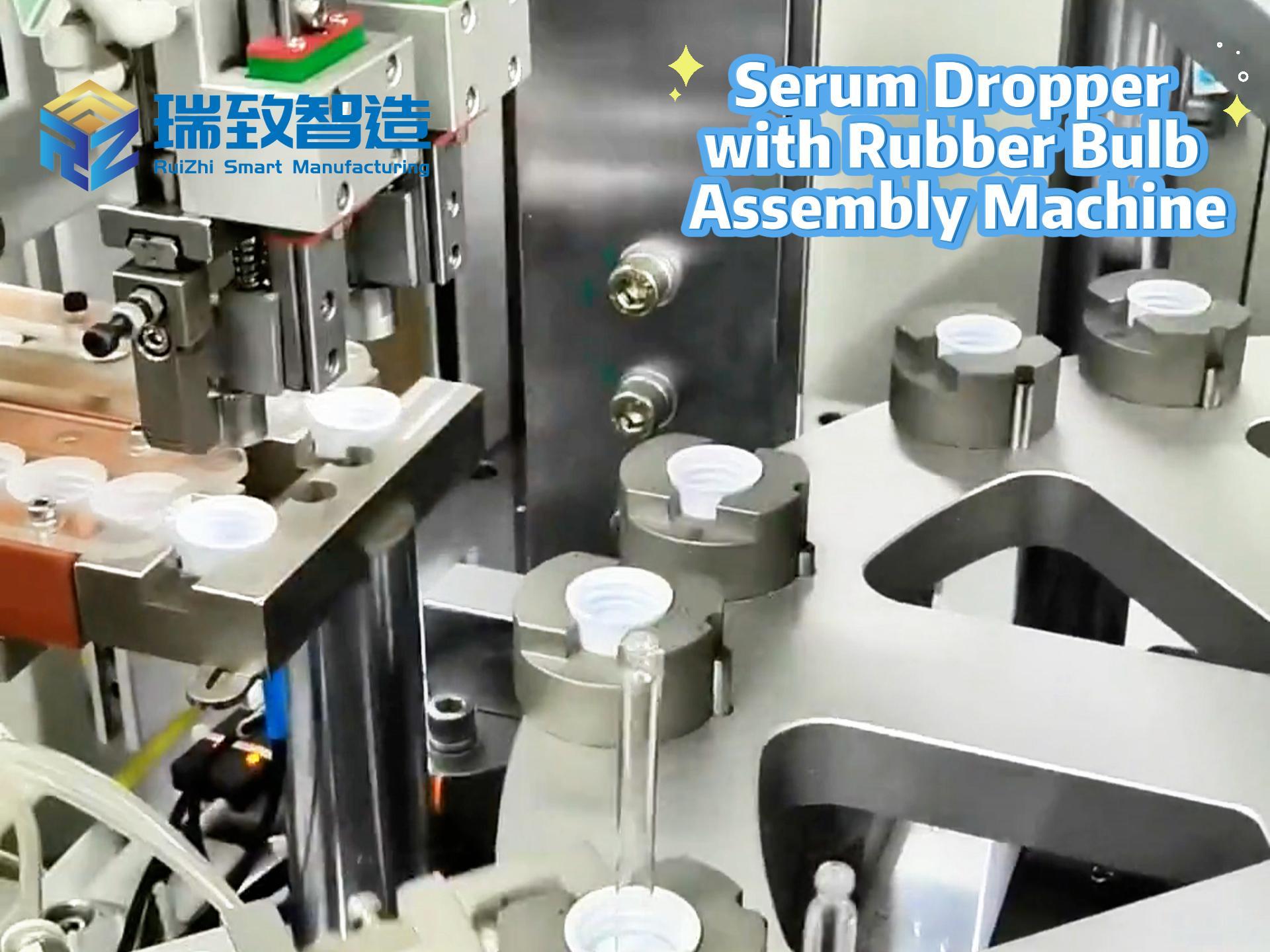

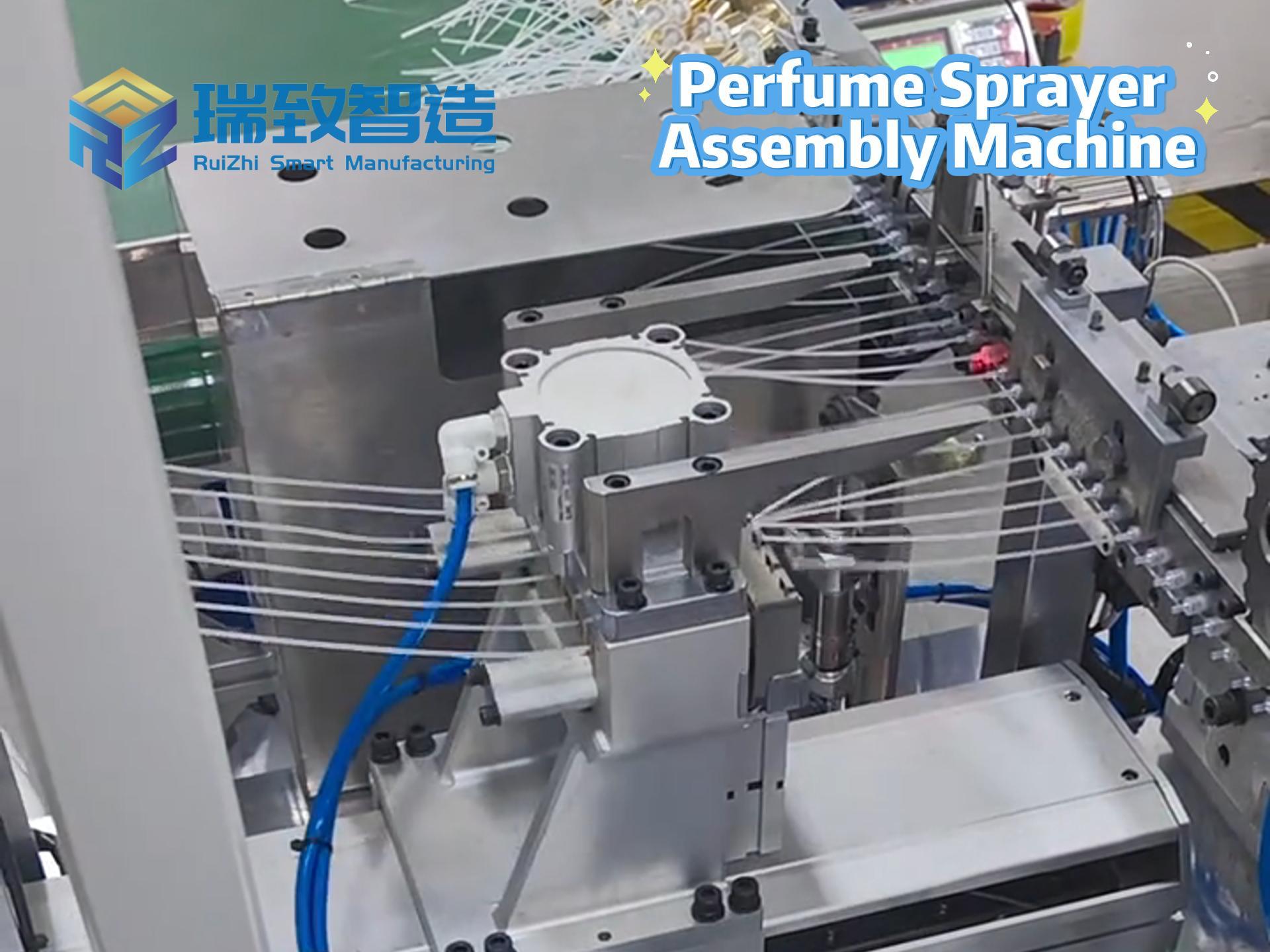

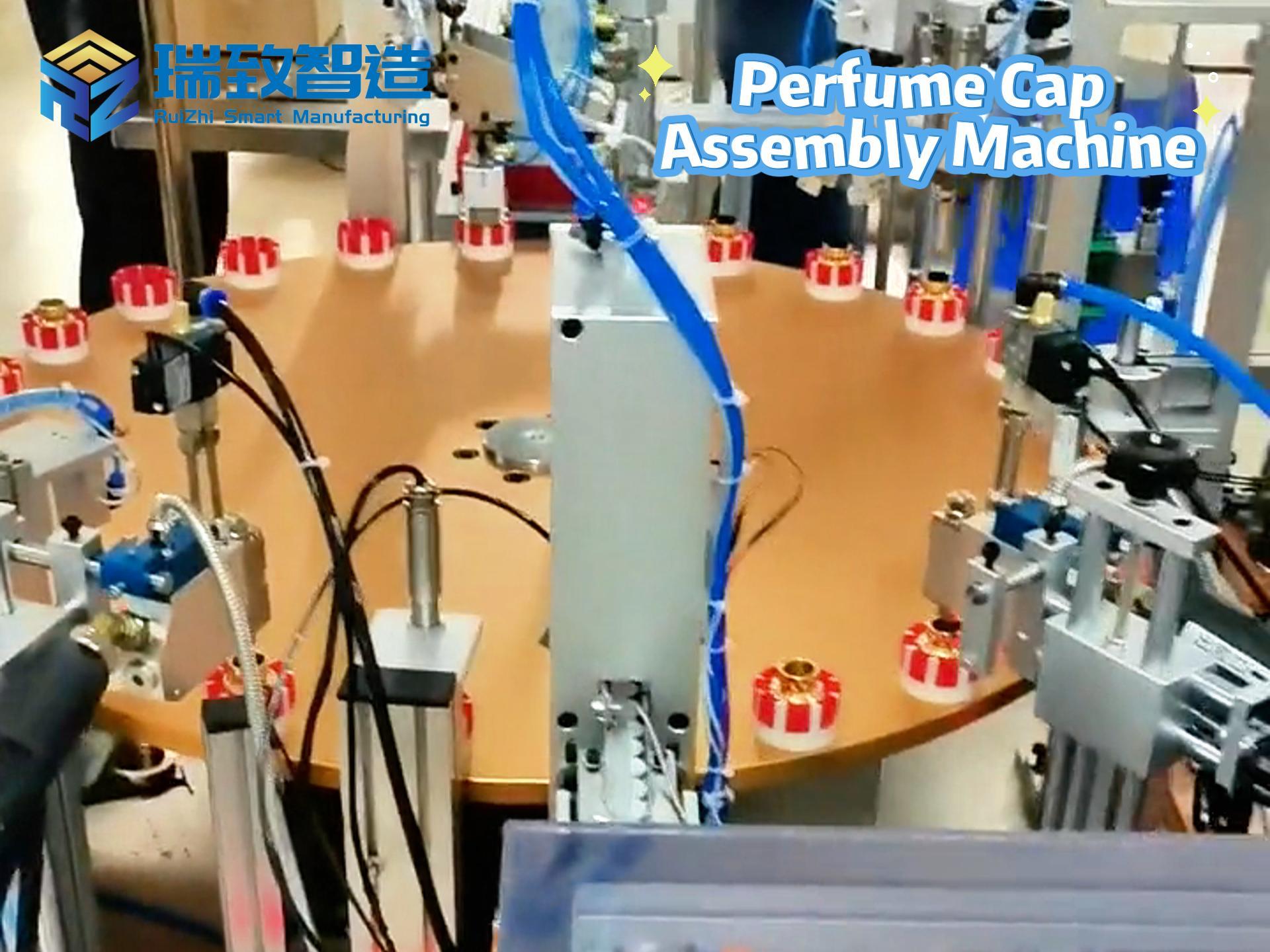

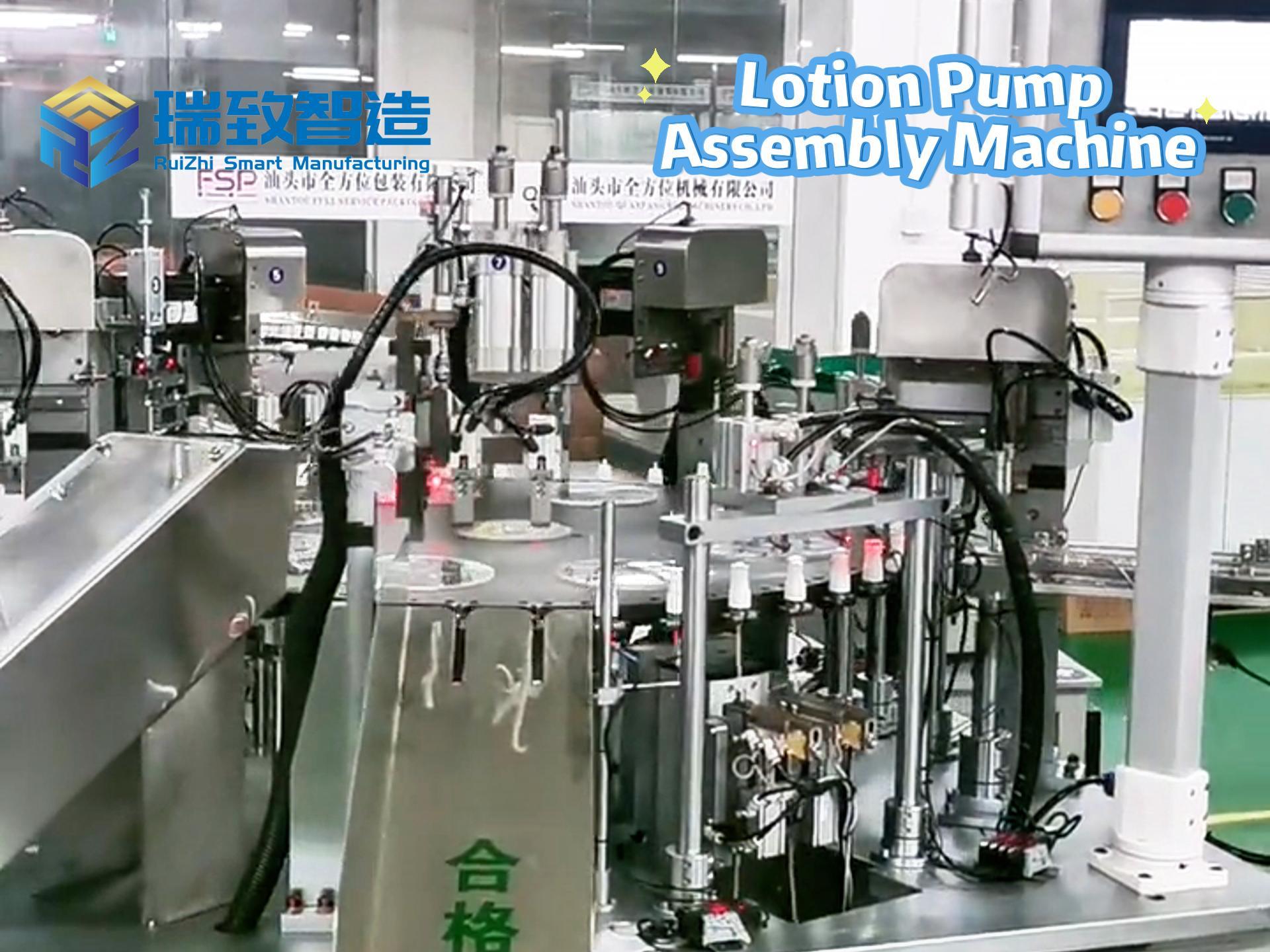

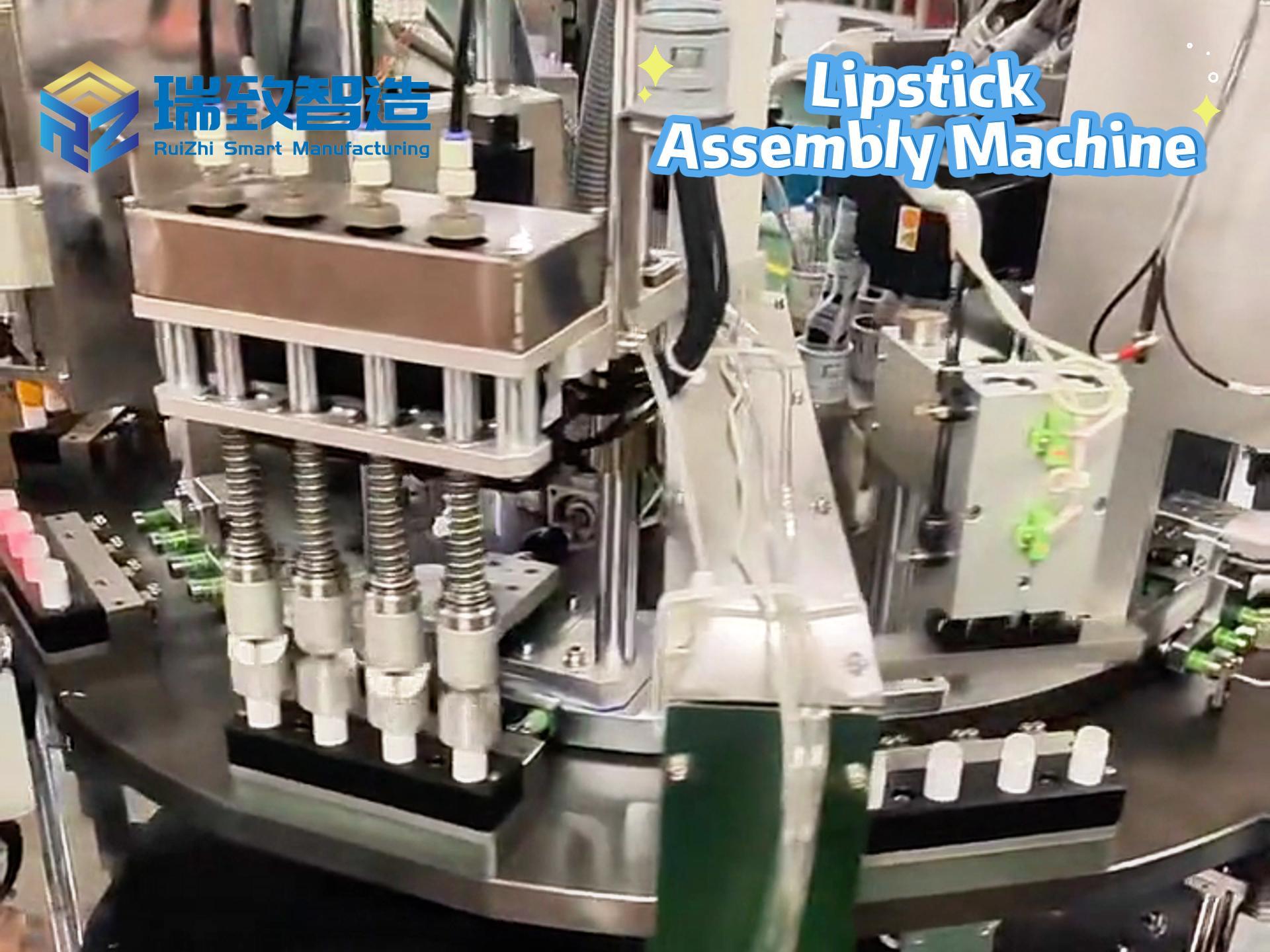

GE Platform Architecture and Its Application Value in Precision Manufacturing

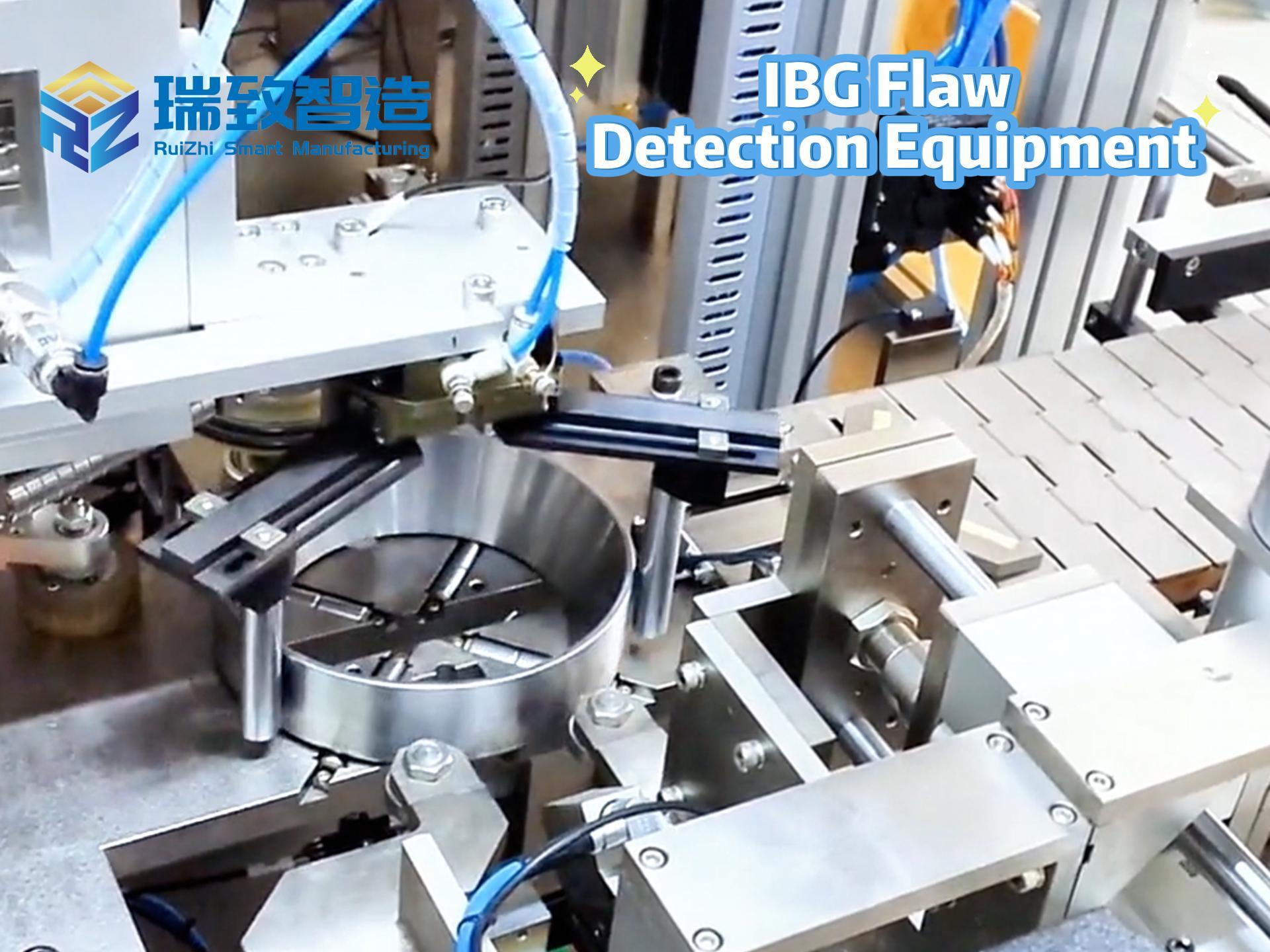

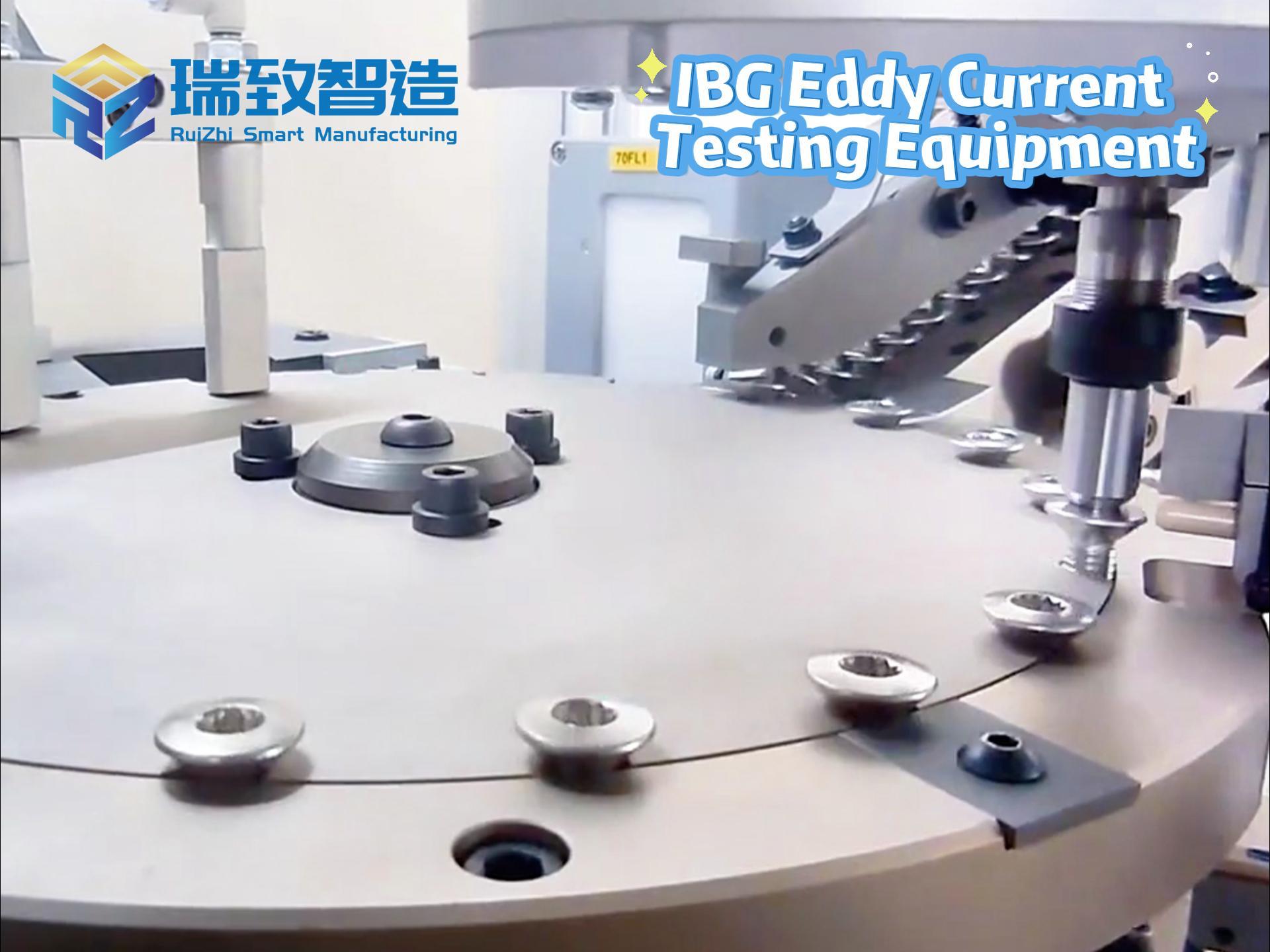

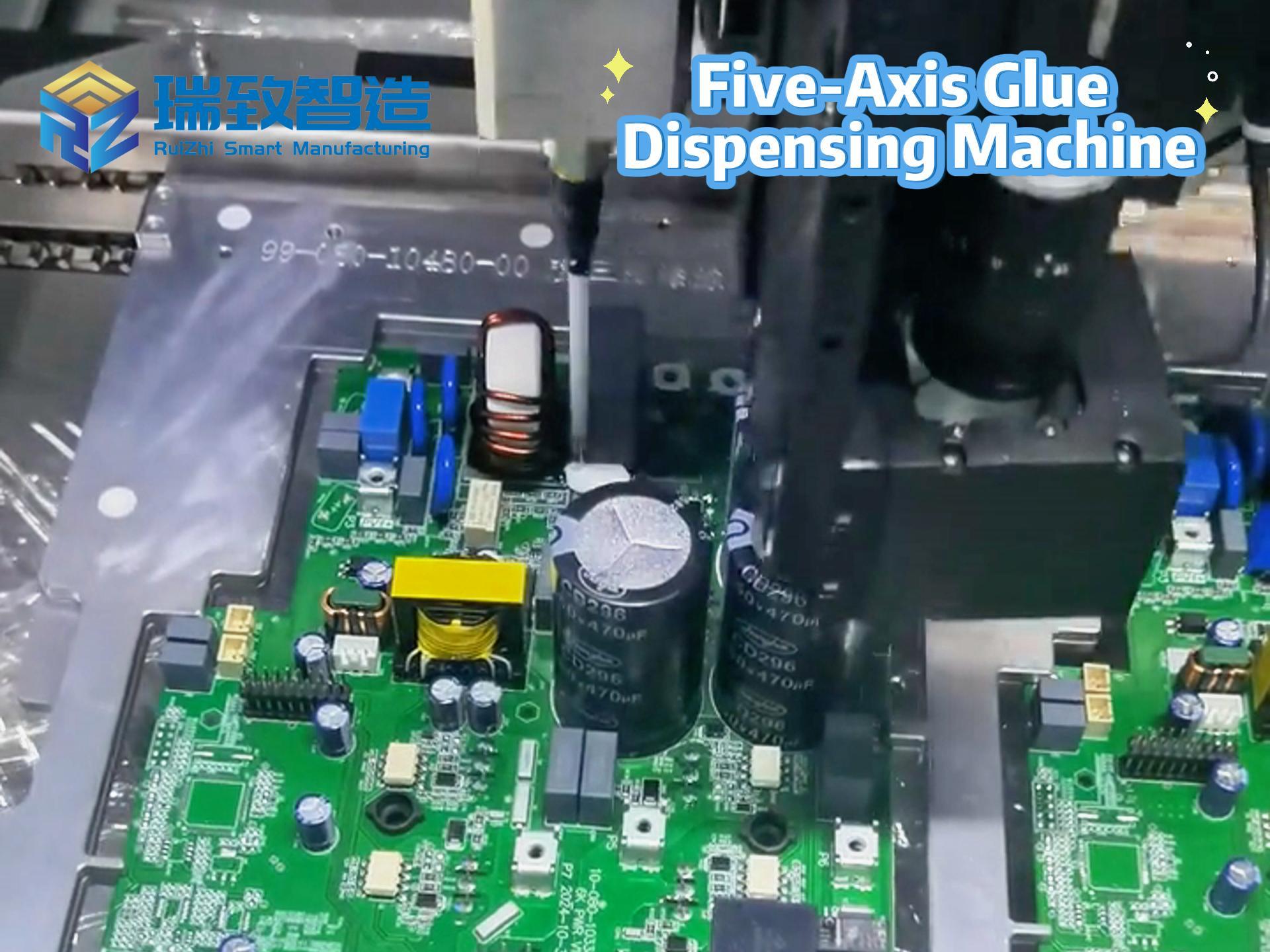

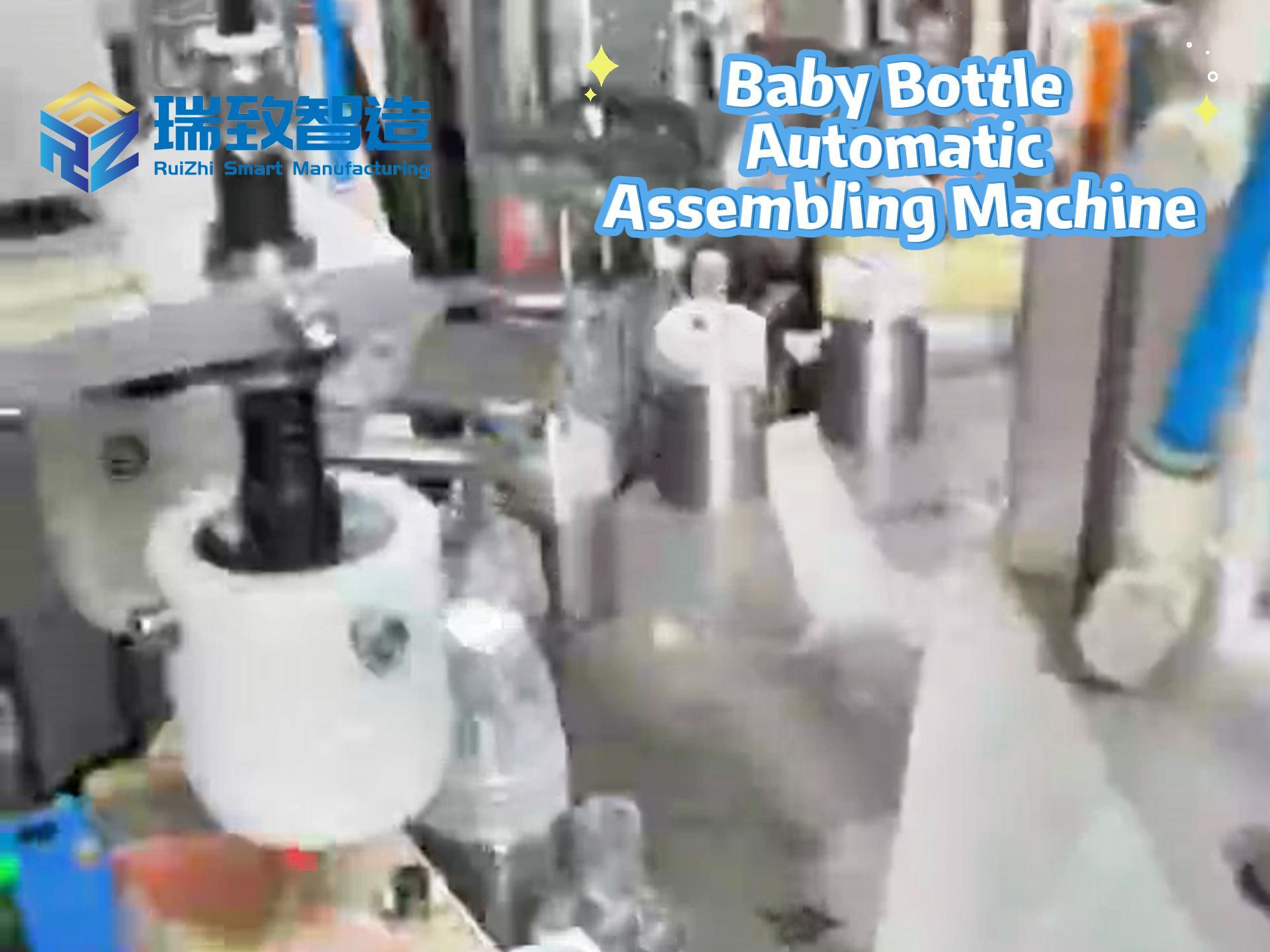

The platform innovatively integrates future frame prediction, strategy learning, and simulation evaluation into a closed-loop architecture centered on video generation, realizing for the first time an end-to-end reasoning process for robots to complete from perception to decision-making and then to execution within the same world model. This ability to accurately model physical interactions has broad application prospects in the field of precision manufacturing — for example, in biological indicator assembly machines, through a similar visual-action closed-loop control logic, sterile and precise docking of microbial carriers and reaction tubes can be achieved. Its sub-millimeter assembly accuracy and the spatiotemporal dynamic modeling concept of the GE platform are jointly promoting the upgrading of automated systems towards the integration of “perception-decision-execution”.

Limitations of Traditional Robot Learning Systems

Traditional robot learning systems generally adopt a phased development model of “data collection — model training — strategy evaluation”. Each link is independent and relies on task-specific tuning, resulting in high development complexity and long iteration cycles.

Technical Foundation for GE Platform’s Breakthrough in Fragmented Architecture

The GE platform breaks through this fragmented architecture bottleneck by building a unified video-generated world model. Based on approximately 3,000 hours of real robot manipulation video data (covering more than 1 million real machine records), the platform establishes a direct mapping from language instructions to visual space, completely retaining the spatiotemporal dynamic information of the robot’s interaction with the environment.

Vision-Centered World Modeling Paradigm and Performance Improvements

The core breakthrough lies in the vision-centered world modeling paradigm. Different from the mainstream VLA (Vision-Language-Action) methods that rely on language abstraction, GE directly models the interaction dynamics between robots and the environment in visual space, achieving accurate capture of physical laws. This paradigm brings significant performance improvements:

Improved cross-platform generalization efficiency: On brand-new robot platforms such as Agilex Cobot Magic, the GE-Act action model can perform tasks with high quality with only 1 hour (about 250 demonstrations) of teleoperation data, which is better than the π0 and GR00T models that require large-scale multi-ontology pre-training;

Breakthrough in long-time sequence task execution: In ultra-10-step continuous tasks such as folding cartons, the success rate of GE-Act is as high as 76% (π0 is 48%, UniVLA/GR00T is 0%). This is mainly attributed to the ability of visual space to explicitly model spatiotemporal evolution and the innovative design of the sparse memory module.

The technical architecture consists of three collaborative components:

GE-Base multi-view video base model: It adopts an autoregressive video generation framework, maintains spatial consistency through three-way perspective input from the head and the wrists of both arms, and combines a sparse memory mechanism to enhance long-time sequence reasoning. The training is divided into two stages: 3-30Hz multi-resolution time sequence adaptation training to improve motion robustness, and 5Hz fixed sampling strategy alignment fine-tuning;

GE-Act parallel flow matching action model: The 160M parameter lightweight architecture converts visual representations into control commands through a cross-attention mechanism, and adopts “slow-fast” asynchronous reasoning (video DiT 5Hz / action model 30Hz), achieving 200-millisecond 54-step real-time response on RTX 4090 GPU;

GE-Sim hierarchical action condition simulator: Through Pose2Image conditions and motion vector encoding, control commands are accurately converted into visual predictions, supporting closed-loop strategy evaluation and data generation, and can complete thousands of strategy rollouts per hour.

EWMBench Evaluation Suite for Quantifying World Model Quality

To quantify the quality of the world model, the team simultaneously launched the EWMBench evaluation suite to evaluate the modeling ability from the dimensions of scene consistency and trajectory accuracy. In the comparison of models such as Kling and OpenSora, GE-Base leads in key indicators and is highly consistent with human judgment. The platform has now opened the project homepage, papers, and code repository, promoting the evolution of embodied intelligence from the “passive execution” to the “imagination-verification-action” paradigm.