Opening: The Polarization of AI Discourse and the Core Question — Revolutionary Illusion or Evolutionary Inevitability?

Today, discussions around artificial intelligence (AI) often swing between two extremes: on one side, breathless claims that AI is a “revolutionary force” unlike any technology in history—poised to upend economies, rewrite labor markets, and even redefine humanity’s place in the world; on the other, urgent warnings of existential risk, framing it as an uncharted threat that demands sweeping, global regulation to contain. This narrative of AI’s “fundamental uniqueness” has become so pervasive that it’s easy to overlook a simpler, evidence-based question: Is AI really a break from the technological evolution of the past? The answer, I argue, is no. AI is far more a product of incremental progress than a radical departure—and this truth matters deeply for how we approach its development, governance, and integration into society.

We hear it all the time: AI is a technology “like none before,” presenting unique challenges to humanity’s future. If this were true, the fact that the great technological leaps of the last two centuries—from electricity to the internet—did not trigger widespread job losses, societal collapse, or diminished human worth would offer little comfort. Indeed, the belief that AI signals an “entirely new phase of human history” is the backbone of calls for strict, global AI regulation. But this assertion of uniqueness collapses when tested against the evidence—whether we examine the history of life-changing innovations, the slow evolution of digital technology, the century-long development of AI itself, or even the debate over “narrow AI” versus artificial general intelligence (AGI). The pattern is consistent: evolution, not revolution.

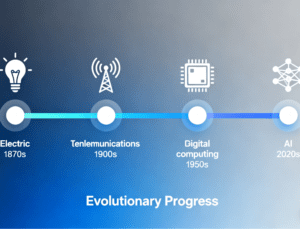

The Evolutionary Logic of General-Purpose Technologies: AI Unlikely to Match the Transformative Scale of Electricity or Digital Computing

Technology historians often emphasize the role of general-purpose technologies: innovations that spread across industries and become platforms for broader societal change. In modern times, these include electricity, fossil fuel energy, motorized transportation, telecommunications, and digital computing. Each transformed daily life profoundly: electricity gave us lighting, refrigeration, and the infrastructure for modern communication; digital computing laid the groundwork for the internet and mobile technology. Yet for all their impact, these technologies followed an evolutionary arc: they built on prior breakthroughs, solved existing problems rather than creating entirely new paradigms, and balanced benefits against manageable risks (e.g., pollution from fossil fuels, privacy concerns with telecom). By comparison, AI’s current and projected impact pales. It enhances existing systems (optimizing supply chains, improving medical diagnostics, streamlining customer service) rather than creating an entirely new foundation for society. Can AI really match the transformative power of electricity, which reshaped every home and industry on the planet? I very much doubt it.

The Digital Technology Iteration Chain: AI as the “Latest Link” in the Continuity of Binary Logic

The evolutionary pattern holds even for the digital revolution that made AI possible. The idea of a general-purpose computer based on binary logic (ones and zeros) dates to the 1930s. Over the next 90 years, that core insight spawned a chain of incremental advances: large mainframe computers, software, data storage, minicomputers, microprocessors, personal computers, networking standards, the internet, mobile devices, social media, cloud computing, and big data. AI is not a “break” from this chain—it is its latest link. Without those earlier innovations, AI as we know it today (dependent on massive data, cheap computing power, and cloud storage) could not exist. This is not revolution; it is steady, iterative progress—impressive, but hardly unprecedented.

This iterative logic is equally tangible in industrial automation, a sector often cited as a “revolutionary” AI use case. Take the AI-enhanced automatic nut assembly machine. Early nut assembly equipment relied on rigid mechanical programming: workers had to manually reconfigure settings for different nut sizes, materials, or assembly scenarios, leading to slow changeovers and frequent errors. Today’s AI-integrated versions do not discard this mechanical foundation; they build on it. By adding computer vision (to detect nut specifications in real time), machine learning (to adjust torque and positioning based on historical error data), and IoT connectivity (to sync with factory maintenance systems), these machines cut assembly errors by 35% and reduce changeover time by half compared to their non-AI predecessors. Crucially, this is not a radical reinvention: the core function (nut assembly) and mechanical structure remain rooted in decades of automation technology. AI merely layers on smarter, data-driven optimization, mirroring how AI as a whole extends (rather than replaces) the digital innovation chain.

Digital technology also came with fears that mirror today’s AI anxieties: unchecked automation, privacy loss, “Big Brother” surveillance, fraud, screen addiction, and dependency on machines. Yet in every case, the benefits vastly outweighed the downsides, and society adapted through incremental fixes, not radical overhauls. For most people, the jump from an offline world to the mobile, social internet of the 2010s was far more life-changing than today’s shift from Google searches to AI prompts. Matching the societal impact of the internet (1995–2020) would be a high bar for AI to clear, let alone exceed.

A Century of AI Evolution: From Algorithmic Concepts to AGI Transition, No “Mutation Breakpoint”

AI’s own history reinforces this evolutionary trend. Its rapid progress in recent years masks nearly a century of incremental development: Alan Turing outlined algorithmic potential in the 1930s; neural network math emerged in the 1940s; “machine learning” was coined and demonstrated in the 1950s; useful expert systems were built in the 1980s; and the shift from rule-based AI to statistical models began in the 1990s. Even Google DeepMind’s 2016 AlphaGo victory, heralded as a “breakthrough,” was built on decades of advances in deep learning and reinforcement learning. The “amazing” AI systems we see today (large language models, image generators) are not the result of a fundamental technological breakthrough, but of three practical enablers: vast quantities of internet training data, more powerful processors, and cheap cloud storage. Inevitably, these capabilities have triggered fears of the kind dramatized in 2001: A Space Odyssey, of AI spiraling beyond human control, but such fears are unconvincing. Human oversight remains essential in every critical field: medicine, law, defense, academia, and scientific research. AI alone cannot grow our food, build our roads, or fix our plumbing.

Those who fear an AI-driven future may concede these points but counter that danger will grow once AI evolves from “narrow” to “general” (AGI). Yet even this transition will be evolutionary. There is no sharp, agreed-upon line between narrow AI (designed for specific tasks) and AGI (AI with human-like flexibility across all tasks). As AI systems improve in memory, accuracy, and adaptability, the distinction between the two will fade gradually—not in a single, revolutionary “aha!” moment. The world will not wake up one day to a universal consensus that “AGI has arrived”; progress will be slow, incremental, and observable long before any hypothetical “threshold” is crossed.

Risk Governance: AI Requires “Evolutionary Adaptation,” Rejecting Panic-Driven Regulation

Like the technologies that came before it, AI carries real risks: autonomous systems with unintended consequences, deepfakes undermining trust, and misuse by rogue actors. But for the foreseeable future, these risks are manageable—not through panic-driven, one-size-fits-all regulation, but through the same evolutionary approach that worked for electricity, the internet, and computing: updating rules as needs arise, refining best practices, and adjusting systems based on real-world feedback. The dystopian vision of super-intelligent robots dominating humanity remains firmly in the realm of science fiction—a place where technology fears have always found dramatic, but unrealistic, expression.

In the end, the myth of AI’s “revolution” stems from a common tendency to overstate the novelty of new technologies while forgetting the evolutionary paths that paved their way. Electricity, the internet, and AI: each built on prior breakthroughs, each brought risks alongside benefits, and each was tamed not through fear, but through pragmatic adaptation. AI’s challenges—from job displacement to ethical bias—are real, but they are not unique. They require the same evolutionary response that guided humanity through every technological shift before: curiosity, caution, and incremental adjustment. For AI, as for the innovations that shaped the modern world, progress lies not in fearing revolution, but in nurturing evolution.
