Why Does “Big AI” Tend Toward Extremes?

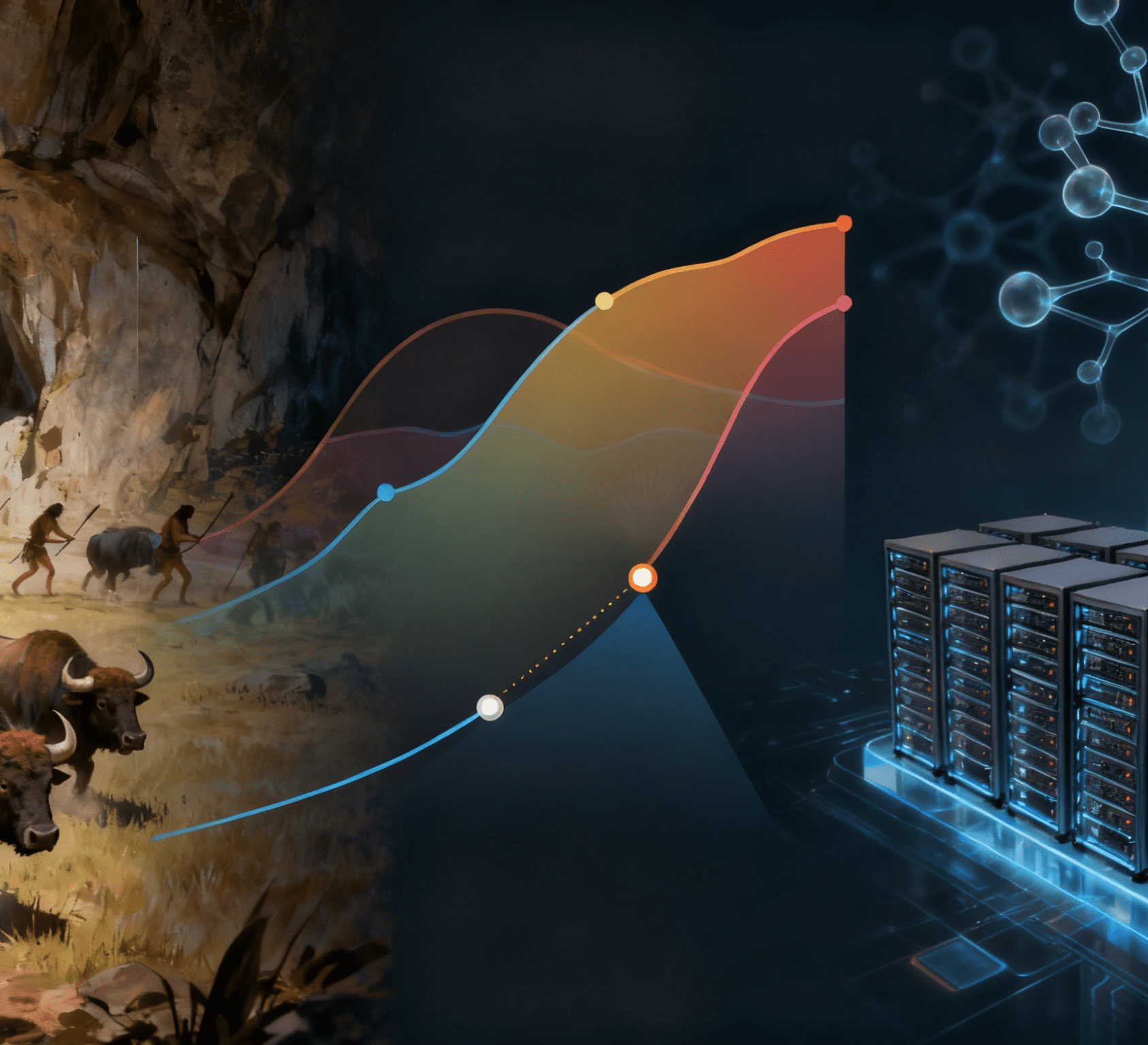

Large language models, or LLMs—the kind of AI that powers tools like ChatGPT—are trained by scanning huge amounts of text from the internet. They’re good at predicting the next word in a sentence, but because the internet is messy, they also learn its flaws.

That means these systems can sometimes reproduce toxic speech, repeat harmful stereotypes, or echo the loudest extremes of online debate.

“Current LLM-based tools tend to be similar in terms of their behavior and responses,” Davulcu says. “It’s not like a winner is emerging. They will need an edge. That edge is going to come from interpretability. How can we understand how these models make their decisions? And when they make incorrect decisions, how do we fix them?”

That’s where his new suite of tools comes in. Davulcu has filed four invention disclosures with ASU’s Skysong Innovations, outlining a method to make AI transparent, programmable, and ultimately safer.

Peering Into the AI “Black Box”: Making Decision Logic Traceable and Correctable

The first innovation is a way to peek inside the “black box” of AI.

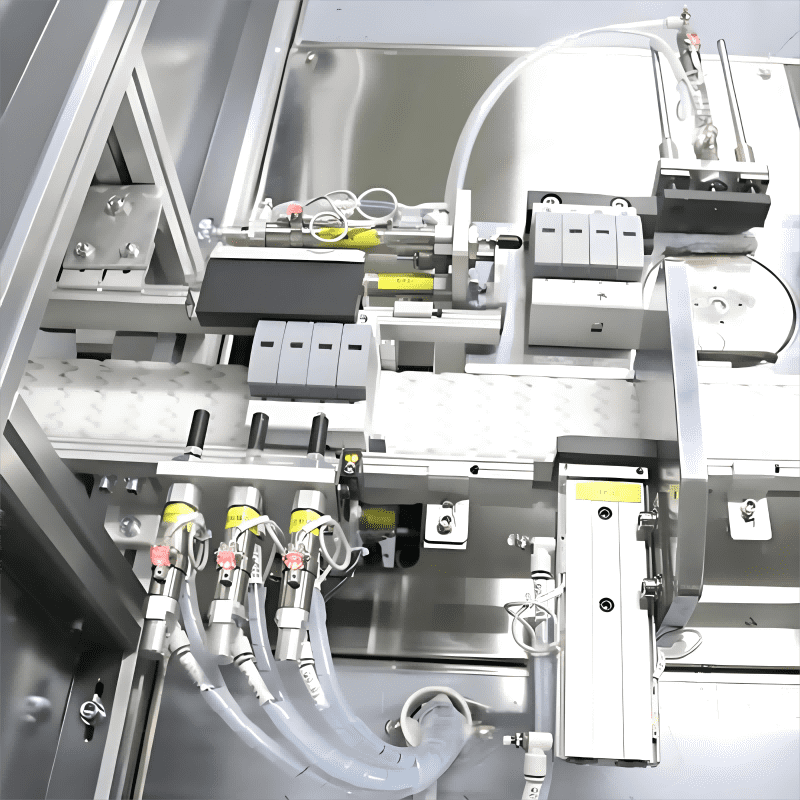

Normally, these systems spit out answers without showing their reasoning. If they get something wrong, whether it’s suggesting glue on a pizza or giving bad medical advice, developers can’t easily correct the mistake. This black-box problem isn’t limited to LLMs; it also affects AI-powered industrial equipment, such as the Biological Indicator Assembly Machine used in pharmaceutical and medical device manufacturing. This machine relies on AI to handle precision tasks: aligning tiny biological indicator vials (which test whether sterilization processes work) with reagent injectors, and controlling assembly pressure to avoid vial breakage or reagent contamination. If the AI malfunctions, for example by misjudging vial positioning because of unaccounted-for variations in vial material, developers often can’t trace the error back to its root cause, leading to costly production delays or even unsafe medical supplies.

Davulcu’s method changes that. His system translates the AI’s hidden decision-making into simple, editable rules—whether for an LLM giving medical advice or a Biological Indicator Assembly Machine calibrating vial alignment. For the assembly machine, this means the AI’s logic (e.g., “scan vial diameter → adjust grip pressure → verify injector alignment”) is broken down into human-readable steps. Developers can then adjust those rules (e.g., adding an exception for “thin-walled vials requiring 20% lower pressure”) and feed the corrections back into the model.
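To make the idea of editable rules concrete, here is a minimal Python sketch of a rule list with an added exception. It is an illustration only, not Davulcu’s system: the `Rule` class, the `grip_pressure` function, the `wall_mm` field, and the 0.5 mm thin-wall threshold are all hypothetical names and values chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Vial = Dict[str, float]  # hypothetical vial description, e.g. {"wall_mm": 0.8}


@dataclass
class Rule:
    name: str
    applies: Callable[[Vial], bool]  # condition under which the rule fires
    pressure_factor: float           # multiplier on the base grip pressure


def grip_pressure(vial: Vial, base_psi: float, rules: List[Rule]) -> float:
    """Apply the first matching rule; exceptions are listed before defaults."""
    for rule in rules:
        if rule.applies(vial):
            return base_psi * rule.pressure_factor
    return base_psi


# A default rule, standing in for logic extracted from the model.
rules = [Rule("default", lambda v: True, 1.0)]

# A developer adds an exception: thin-walled vials get 20% lower pressure.
THIN_WALL_MM = 0.5  # assumed threshold, not taken from the article
rules.insert(0, Rule("thin-walled", lambda v: v["wall_mm"] < THIN_WALL_MM, 0.8))

print(grip_pressure({"wall_mm": 0.4}, 5.0, rules))  # thin-walled vial
print(grip_pressure({"wall_mm": 0.9}, 5.0, rules))  # standard vial
```

Because the exception sits ahead of the default in the list, it takes priority without the default rule being rewritten. In the approach the article describes, corrections like this would then be fed back into the model.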

“In order to build safe AI, you have to go beyond the black box,” he says. “You need to be able to see the rules the model is using, add exceptions, and retrain it so that it doesn’t keep making the same mistake.”

Think of it as a feedback loop: reveal the logic, refine it, retrain, and repeat. The goal, Davulcu explains, is an AI that won’t make a mistake. But if it does, the user will have recourse to fix it instantly, whether that means correcting an LLM’s bad advice or recalibrating a Biological Indicator Assembly Machine’s AI to handle rare vial variants.

Making Values Visible: Anchoring to the Middle Ground and Reducing Extreme Outputs

The second tool focuses on conversations. Most AI can tell if a comment sounds happy or angry, but that’s not enough for real-world debates. What matters is the stance: whether someone is for, against, or neutral on an issue and why.

Davulcu’s team has built methods that can detect those stances and map how people cluster around them online. This makes it possible to see echo chambers, identify bridge-builders, and highlight shared values—such as fairness, safety, or family—that people rally around.

“When you scale this, we can actually find the mean and the extremes,” he says. “And, basically, at that point, we have a way of staying on the mean, avoiding the extremes, therefore getting rid of bias.”

Once the system knows where the extremes are, it can be trained to avoid them. Davulcu’s group showed that if you feed AI examples of more balanced language and filter out toxic or divisive phrasing, the model learns to follow that path.
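As a rough illustration of “staying on the mean,” the sketch below filters a set of training examples by how far each one’s stance score sits from the average. It is a simplified stand-in, not the team’s published method: the stance scores, the toxicity flags, the `keep_balanced` function, and the one-standard-deviation cutoff are all assumptions, and in practice the scores and flags would come from upstream classifiers.

```python
from statistics import mean, pstdev
from typing import List, Tuple

# (text, stance score in [-1, 1], flagged as toxic); all values are made up.
Example = Tuple[str, float, bool]


def keep_balanced(examples: List[Example], max_sd: float = 1.0) -> List[str]:
    """Keep non-toxic texts whose stance lies within max_sd deviations of the mean."""
    scores = [score for _, score, _ in examples]
    mu, sd = mean(scores), pstdev(scores)
    return [
        text
        for text, score, toxic in examples
        if not toxic and abs(score - mu) <= max_sd * sd
    ]


examples: List[Example] = [
    ("strongly against", -0.9, False),
    ("mild concern", -0.1, False),
    ("neutral summary", 0.0, False),
    ("mild support, insulting tone", 0.1, True),
    ("strongly for", 0.9, False),
]

print(keep_balanced(examples))
```

The surviving examples are the ones a model would then be trained on. A distance cutoff of this kind is only one way to define “extreme,” and it is used here purely to keep the example short.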

The results are promising. In tests, their approach reduced toxic output by 85% compared with an unrestricted model. Instead of echoing the most polarizing voices, the AI leans toward civil, respectful conversation.

“We’re showing that it’s possible to build systems that encourage civil discourse instead of amplifying division,” Davulcu says.

Portable Control Layer: Ensuring Consistency and Safety in AI Behavior

Companies may want to swap AI models when a new version comes out, but those changes can break carefully tuned behavior. Davulcu’s fourth innovation is a control layer that travels with the application itself—whether the app is a customer service chatbot or a system managing a Biological Indicator Assembly Machine.

“What we want is for an application to keep working no matter which model you plug in underneath,” he says. “You should be able to switch from one to another because it’s cheaper or better, and your rules still apply. Everything still works. And if something goes wrong, you have a recourse for fixing it.”

For manufacturers relying on Biological Indicator Assembly Machines, this control layer ensures that even if they upgrade the AI model powering the equipment, critical safety rules (e.g., “never exceed 5 psi pressure for thin-walled vials”) remain in place. This consistency is vital for meeting strict medical industry regulations and avoiding costly compliance issues—another example of how Davulcu’s tools turn AI safety from an abstract goal into a practical, scalable solution.
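One way to picture a control layer that travels with the application is a thin wrapper that owns the safety rules and treats the model as a swappable part. The Python sketch below assumes exactly that design. `ControlLayer`, `model_v1`, `model_v2`, the `wall_mm` field, and the 0.5 mm threshold are hypothetical, and only the 5 psi limit comes from the example above.

```python
from typing import Callable, Dict

Vial = Dict[str, float]
# Any model backend: takes a vial description, proposes a pressure in psi.
Model = Callable[[Vial], float]


class ControlLayer:
    """Application-owned rules applied on top of whichever model is plugged in."""

    def __init__(self, model: Model, thin_wall_mm: float = 0.5,
                 max_thin_psi: float = 5.0) -> None:
        self.model = model
        self.thin_wall_mm = thin_wall_mm  # assumed threshold for "thin-walled"
        self.max_thin_psi = max_thin_psi  # "never exceed 5 psi" rule

    def pressure(self, vial: Vial) -> float:
        proposed = self.model(vial)
        if vial["wall_mm"] < self.thin_wall_mm:
            # The safety rule caps the model's proposal for thin-walled vials.
            return min(proposed, self.max_thin_psi)
        return proposed


def model_v1(vial: Vial) -> float:
    return 4.0  # stand-in for the current model's output


def model_v2(vial: Vial) -> float:
    return 6.5  # stand-in for an upgraded model that proposes more pressure


layer = ControlLayer(model_v1)
print(layer.pressure({"wall_mm": 0.4}))

layer.model = model_v2  # swap the underlying model; the rules stay in place
print(layer.pressure({"wall_mm": 0.4}))
```

Because the limit lives in the wrapper and not in the model, upgrading from `model_v1` to `model_v2` cannot silently remove it.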