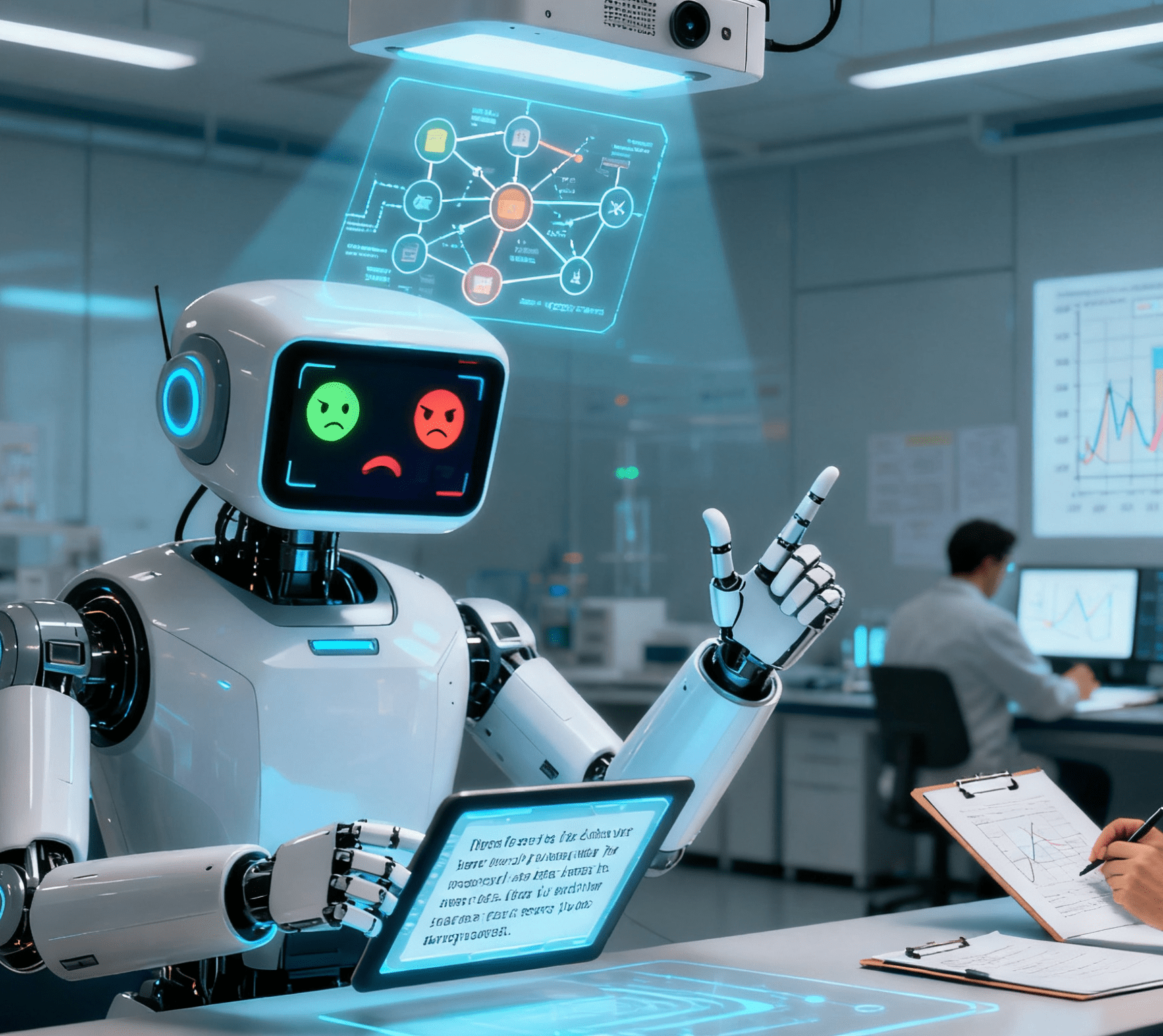

Artificial intelligence inherits its biases from the data it learns from, which makes them an entirely human problem.

Artificial intelligence is reshaping the way we communicate, learn, and make decisions. Many hope it will help us break free from human prejudices and build a world where machines make fair and neutral choices. But this expectation overlooks a harsh reality: artificial intelligence inherits deeply rooted biases from its training data, and antisemitism — a hatred that has accompanied human history — is thus finding new fertile ground in the digital age.

The reality is worrying. Large language models such as ChatGPT and LLaMA are trained on massive amounts of internet data, and that data includes antisemitic content. This is by no means a marginal issue: online archives, social media posts, and even some historical documents all “feed” these models, often without hate speech or false narratives being filtered out.

The Shadow of Antisemitism in Data

From the medieval “blood libel” accusations to modern code words like “globalists” and “banking elites,” antisemitic ideas are deeply embedded in the texts that make up today’s datasets. When artificial intelligence absorbs such content, it may replicate and amplify these biases without any obvious warning.

Companies have tried to curb this AI-fueled hatred with content filters, safety mechanisms, and human feedback that steer models away from harmful outputs. But these measures are piecemeal remedies at best: the problem is not a technical “glitch” but a fundamental consequence of how AI learns.

Worse still, social media algorithms add fuel to the fire. Content that triggers anger, often built on antisemitic tropes, attracts more engagement. Even content opposing antisemitism can be aggressively promoted by the same engagement-driven logic, which can end up intensifying rather than calming hatred.

Today’s antisemitism is becoming increasingly covert. It hides behind euphemisms like “deep state” and “shadow elites,” which AI struggles to identify — these models do not understand history or morality; they only excel at recognizing patterns and repeating them. As a result, stereotypes about “Jews and power” can spread quietly, turning AI into an unwitting amplifier of dangerous myths.

Fears for the Future

The risks ahead are even more alarming. AI-generated deepfakes, fake news, and propaganda can spread antisemitic conspiracy theories at unprecedented speed and with greater persuasiveness, and open-source AI models can easily be modified by anyone, including malicious actors, to mass-produce hate content. We have already seen these tools weaponized, and once elaborate lies spread, they are extremely difficult to refute.

So, how should those of us who worry about the rise of antisemitism respond? There is no panacea. Filtering AI content, fine-tuning models, and formulating ethical guidelines are necessary but far from sufficient. The scale and complexity of AI systems make it nearly impossible to completely eradicate antisemitic biases.

This has never been a purely technical challenge, but a problem that humanity must face head-on. If AI reflects humanity’s darkest prejudices, it is because we have never truly eradicated them.

The digital tools we build carry a legacy of both the good and the bad in us. Antisemitism is not a flaw in AI but a relic of centuries of prejudice, and recognizing this is the first step in combating it. The digital age demands that we remain vigilant, strengthen education, and take proactive action; only then can technology become a force for defending truth and tolerance rather than a driver of hatred and division.

The “puppet” of the digital age has awakened. Machines will never stop it; only we ourselves can.
