In recent years, the impact of outages and IT failures on business operations has become increasingly significant. Such incidents are not merely technical issues; they disrupt business services, erode public trust, and reveal the high dependence of enterprises and society on resilient IT infrastructure.

Artificial Intelligence (AI) is bringing tremendous value to enterprises, ranging from business process automation to intelligent decision-making based on AI agents. However, behind the widespread application of AI lie severe infrastructure challenges. With the rapid growth of data volume and the surge in computing demands, organizations must ensure that their IT infrastructure is robust enough to support these high-intensity workloads. The performance and effectiveness of AI depend heavily on the integrity and availability of data and on the available processing capacity. Moreover, the high level of interconnection among modern enterprises means that a failure in a single system may ripple across the entire industrial chain.

Artificial intelligence, especially automation technology, is helping enterprises make smarter and more autonomous decisions. Nevertheless, AI systems place entirely new demands on infrastructure. As the workloads of big data processing, model training, and inference increase, traditional IT systems often fail to meet the requirements of real-time computing and dynamic workloads.

Modern AI applications not only require large-scale GPU resources for training but also need to handle unpredictable inference traffic. This puts dynamic and intense pressure on compute, storage, and networks, and traditional infrastructure management models struggle to cope with it.
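To make the pressure from unpredictable inference traffic concrete, here is a minimal sizing sketch. It is an illustration under stated assumptions, not a description of any particular autoscaler: the function name `desired_replicas`, the 20% headroom, and the per-replica throughput of 40 requests per second are all hypothetical values chosen for the example.

```python
import math


def desired_replicas(observed_rps, rps_per_replica, headroom=0.2,
                     min_replicas=1, max_replicas=50):
    """Estimate how many inference replicas a given request rate needs.

    All parameters are illustrative assumptions:
    - observed_rps: current requests per second
    - rps_per_replica: throughput one replica can sustain
    - headroom: extra fraction of capacity reserved for sudden spikes
    """
    if rps_per_replica <= 0:
        raise ValueError("rps_per_replica must be positive")
    needed = math.ceil(observed_rps * (1 + headroom) / rps_per_replica)
    # Clamp to the configured bounds so a spike cannot request unlimited hardware.
    return max(min_replicas, min(max_replicas, needed))


# Quiet period, normal load, and a spike that hits the upper bound.
for rps in (0, 90, 950, 5000):
    print(rps, "rps ->", desired_replicas(rps, rps_per_replica=40), "replicas")
```

Even in this simplified form, the required capacity swings from one replica to the configured ceiling as traffic changes, which is the kind of variation that static capacity planning handles poorly.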

Therefore, the core challenge faced by enterprises does not lie in the AI models themselves, but in the infrastructure that supports the operation of these models: data pipelines, computing resource management, real-time monitoring, and observability systems. In fact, the performance of AI is a direct reflection of infrastructure performance.

Modern Data Centers: The Foundation for Supporting AI

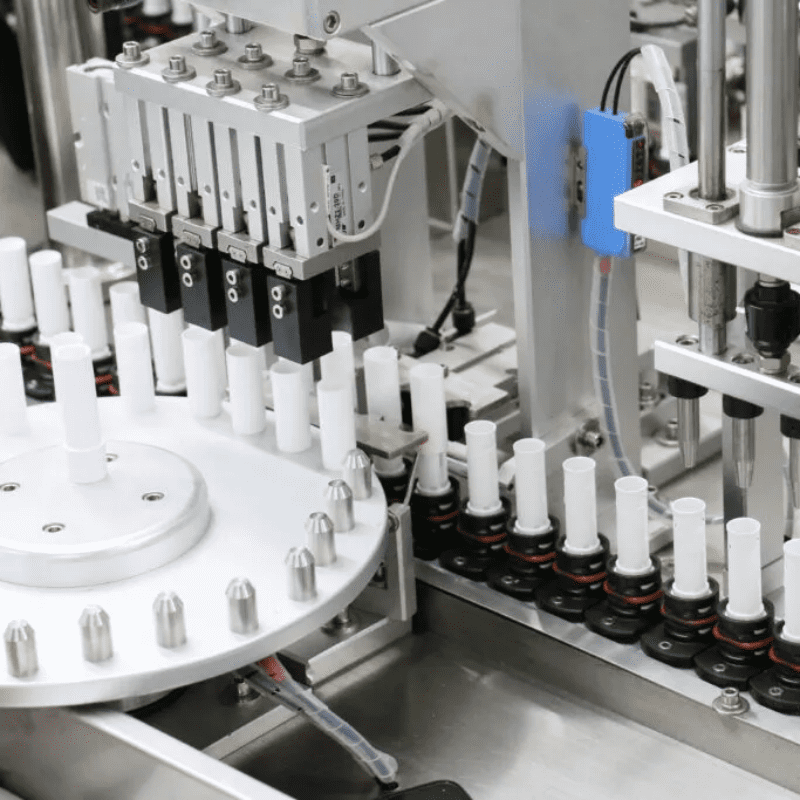

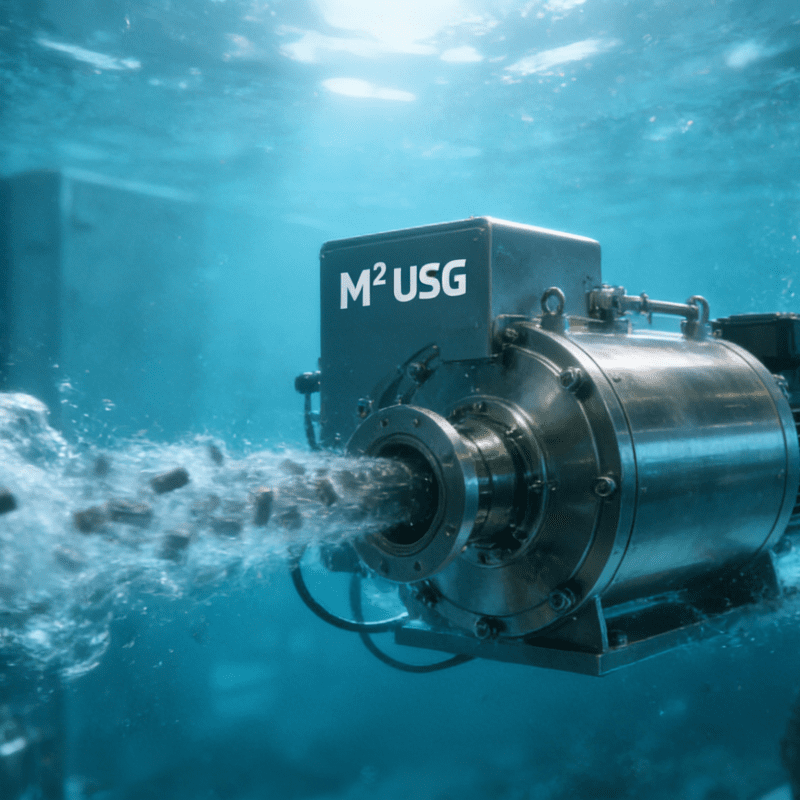

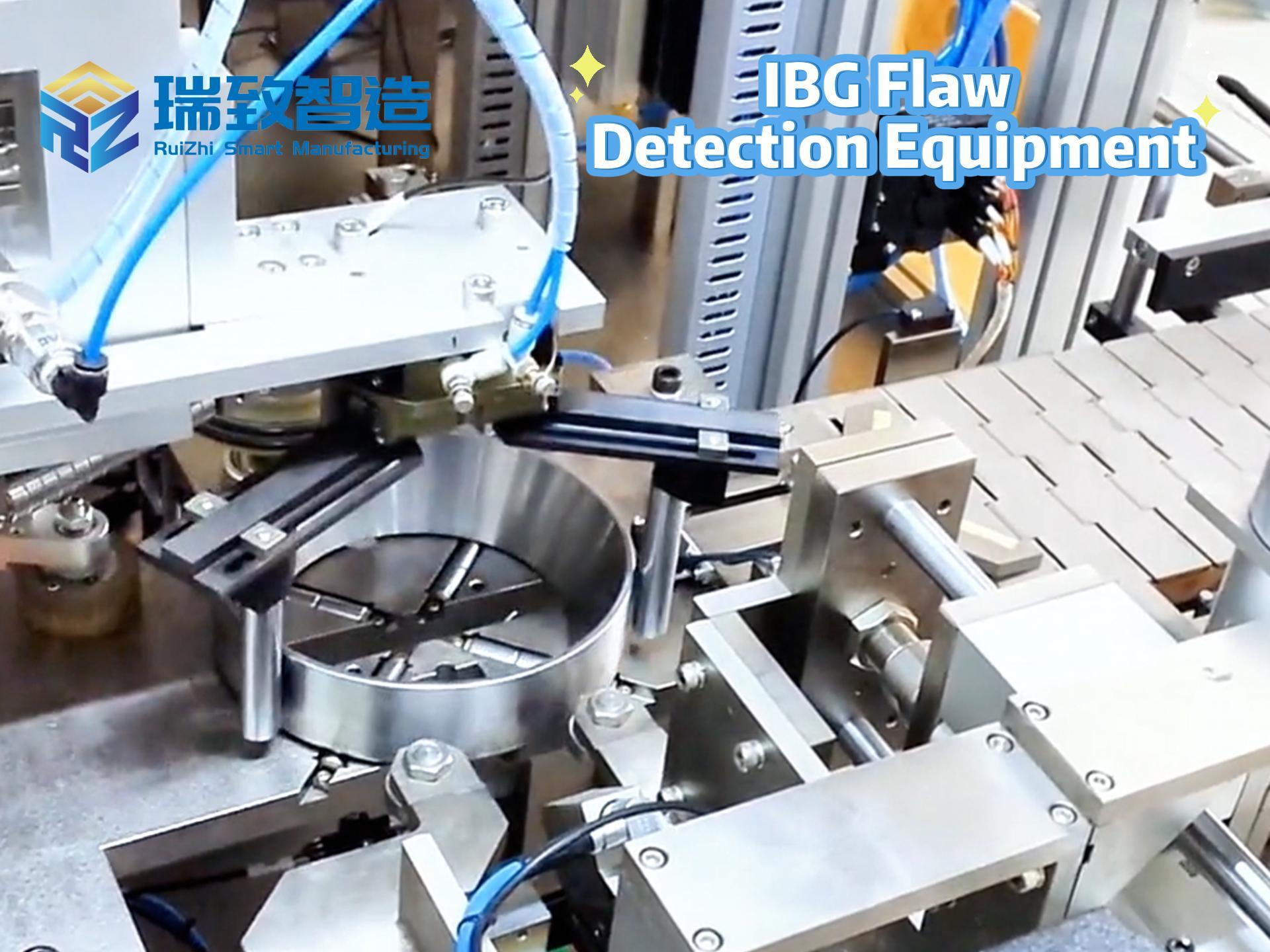

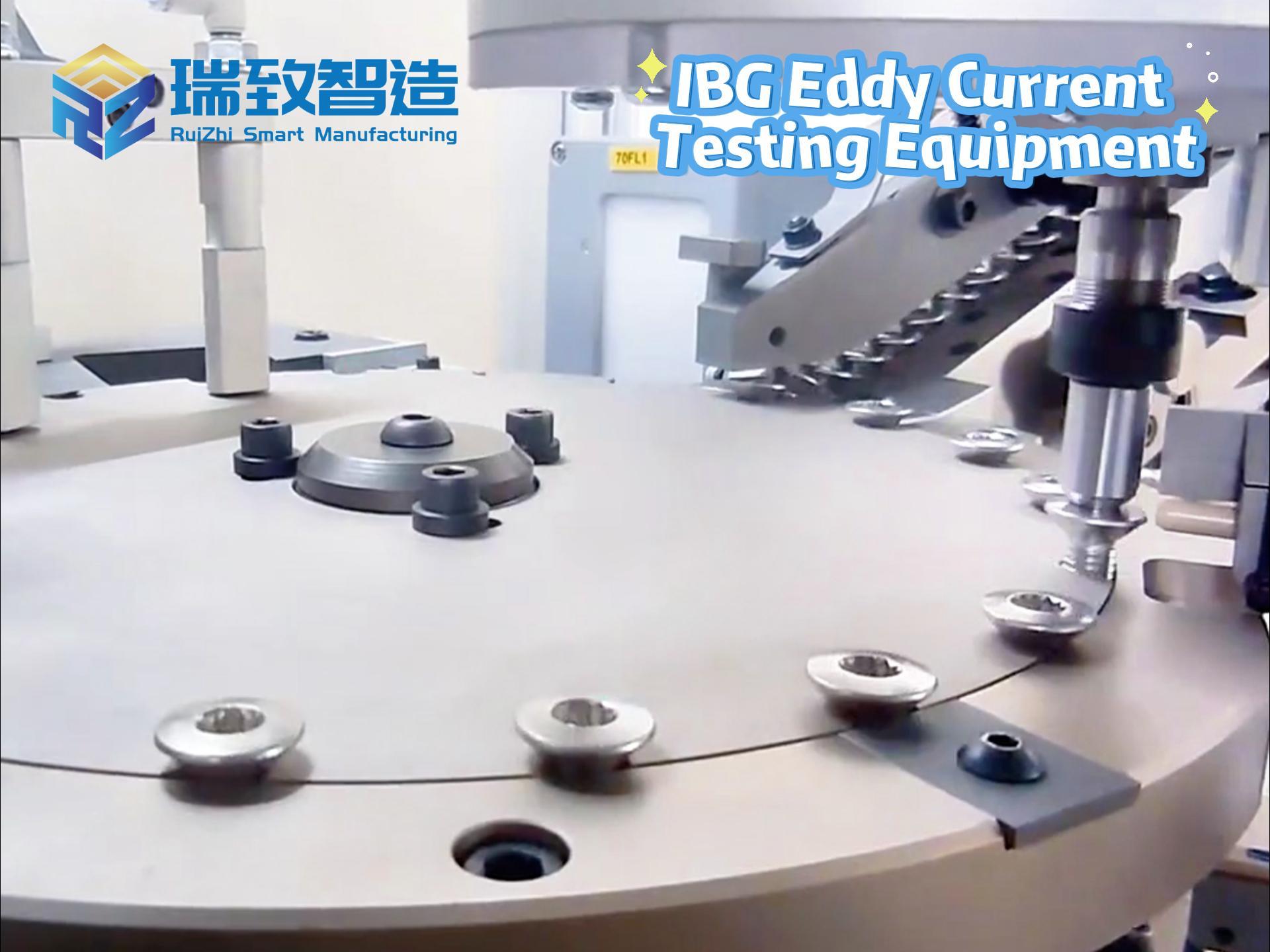

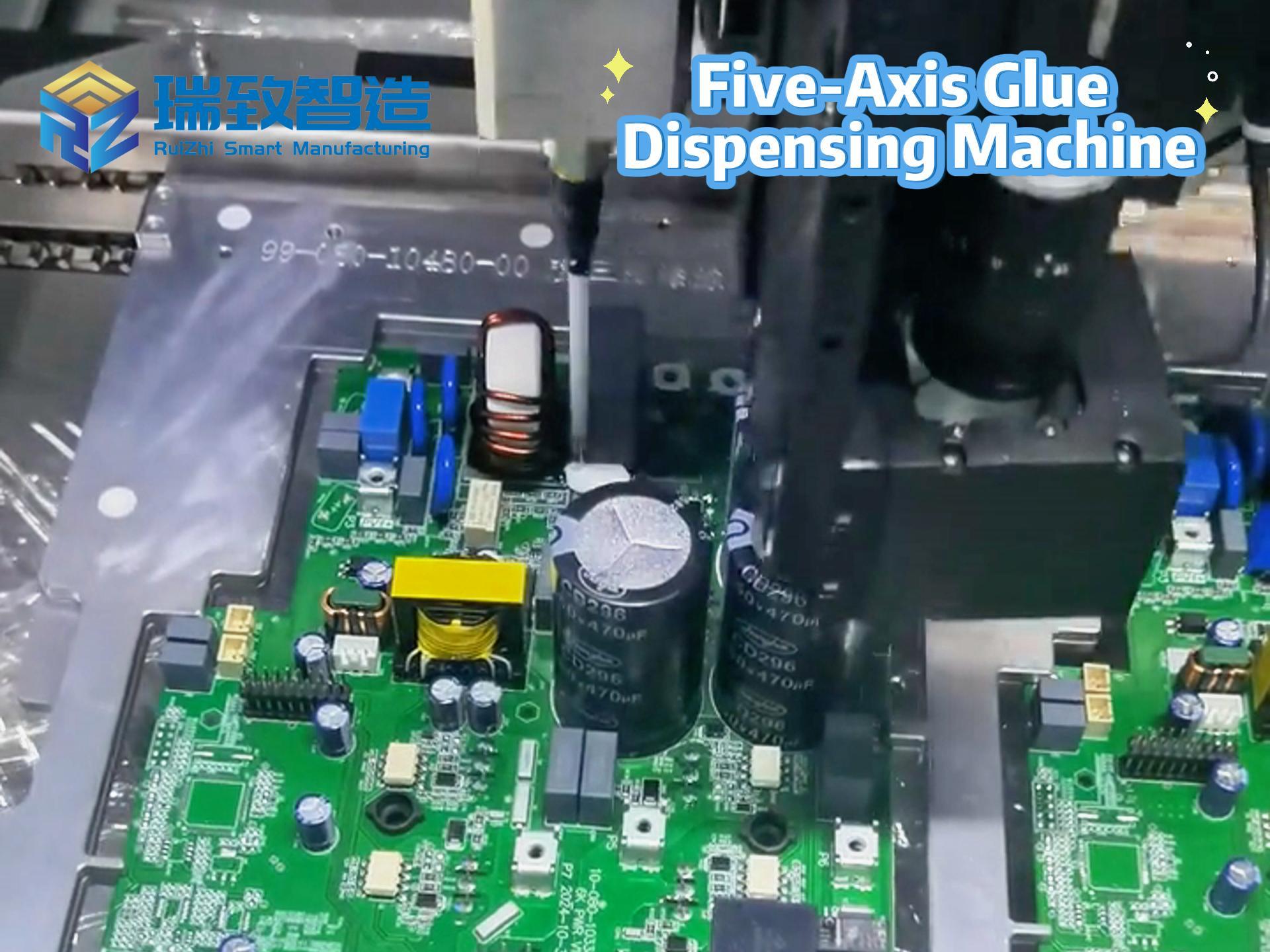

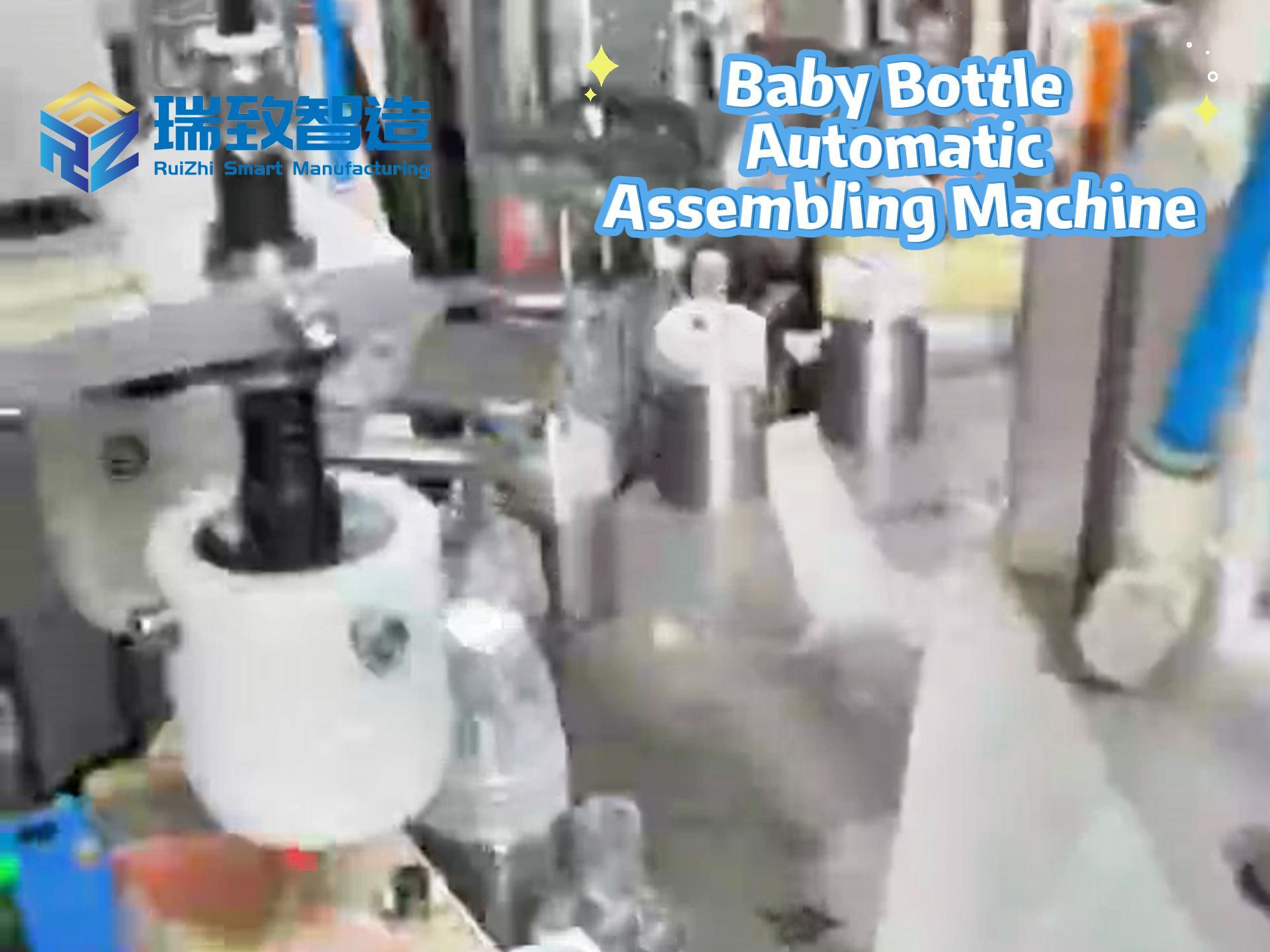

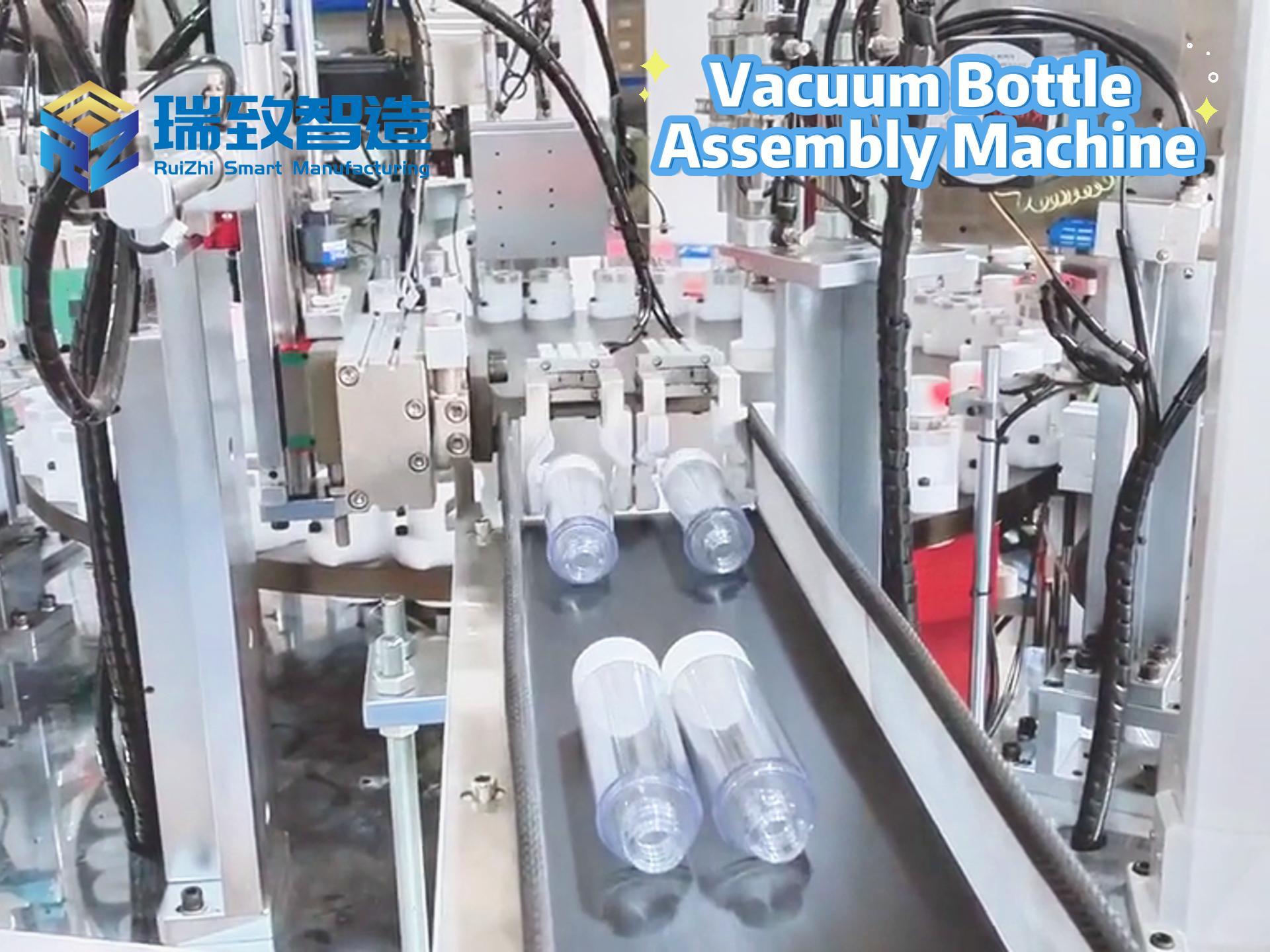

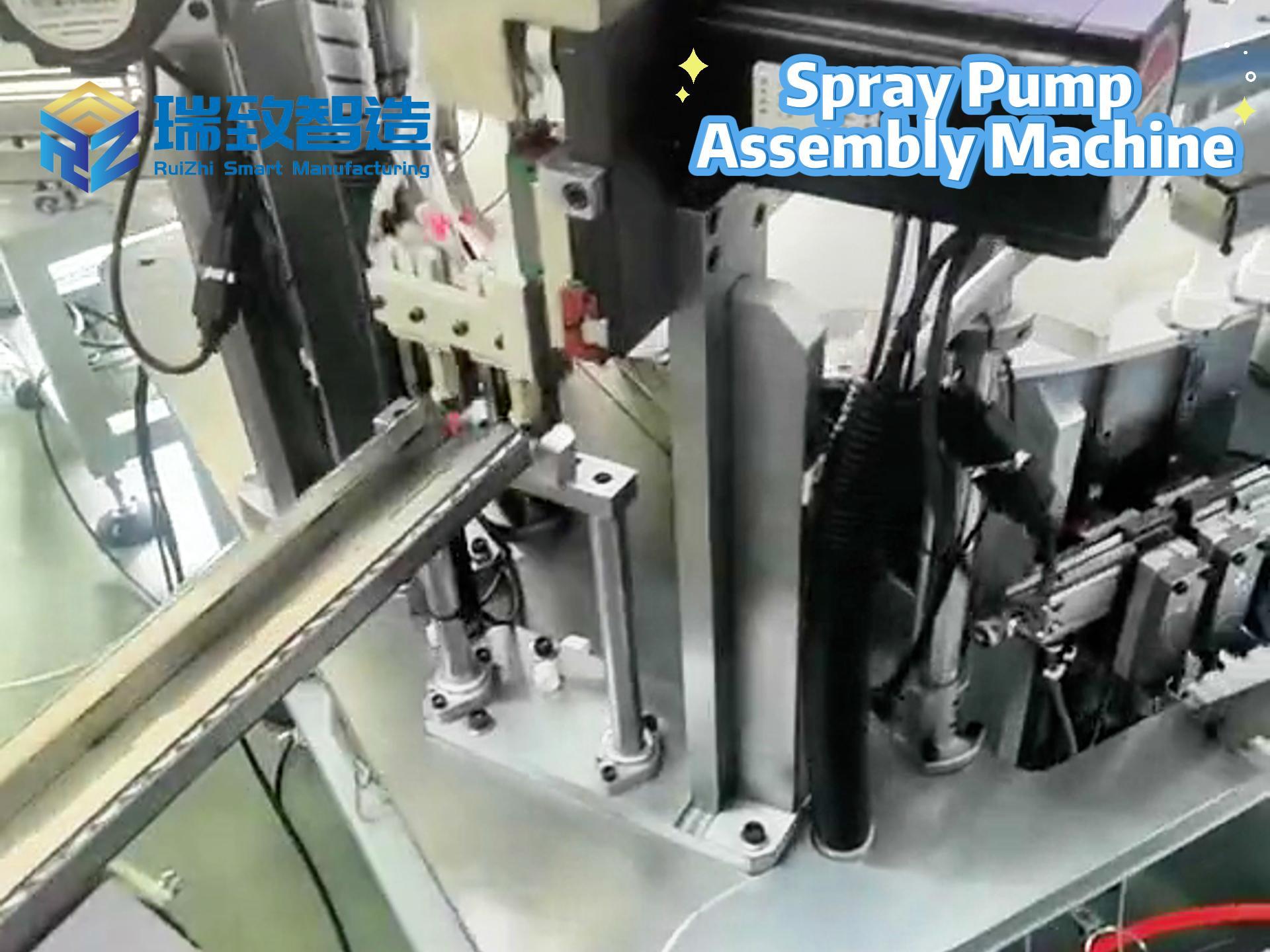

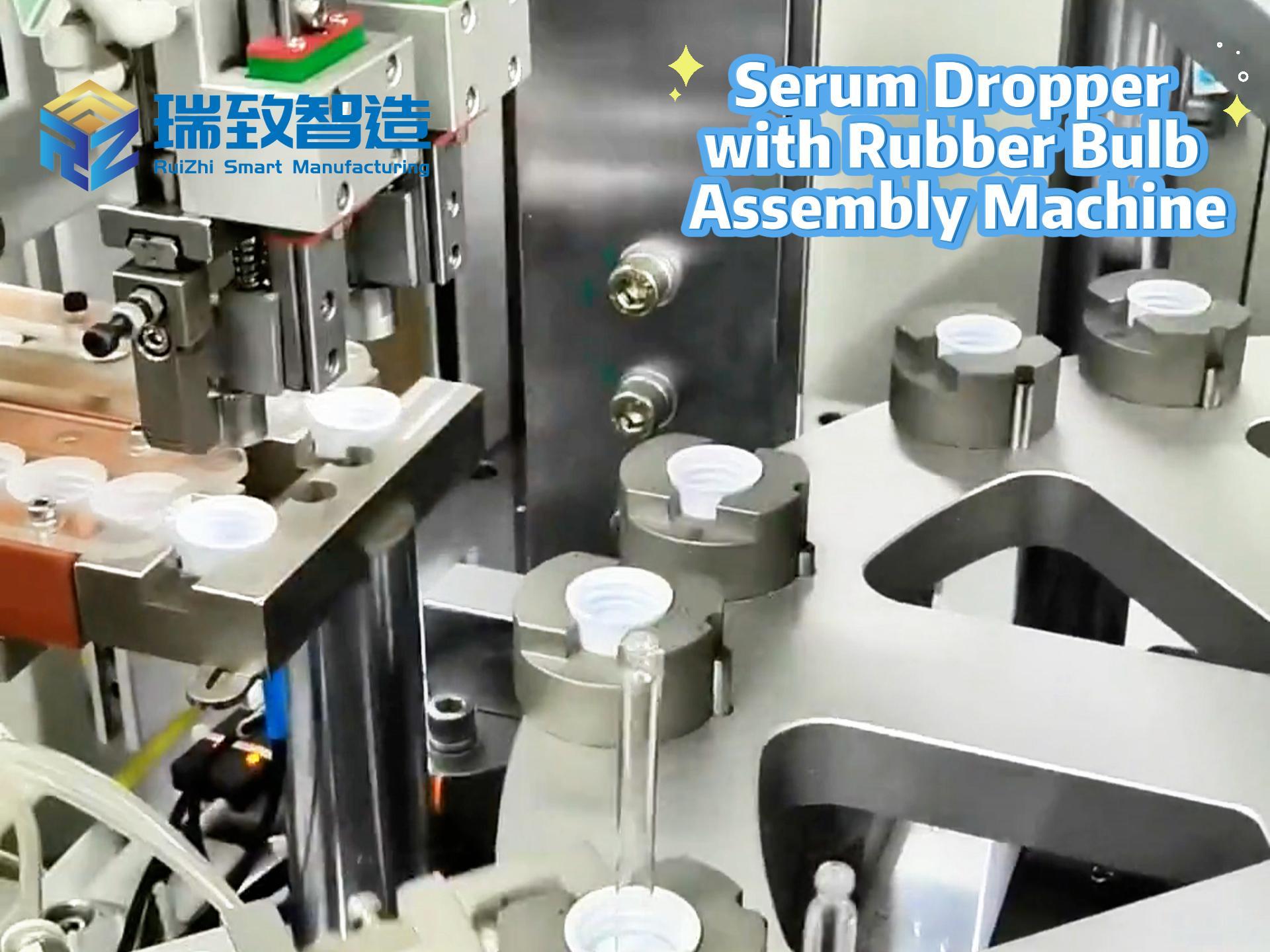

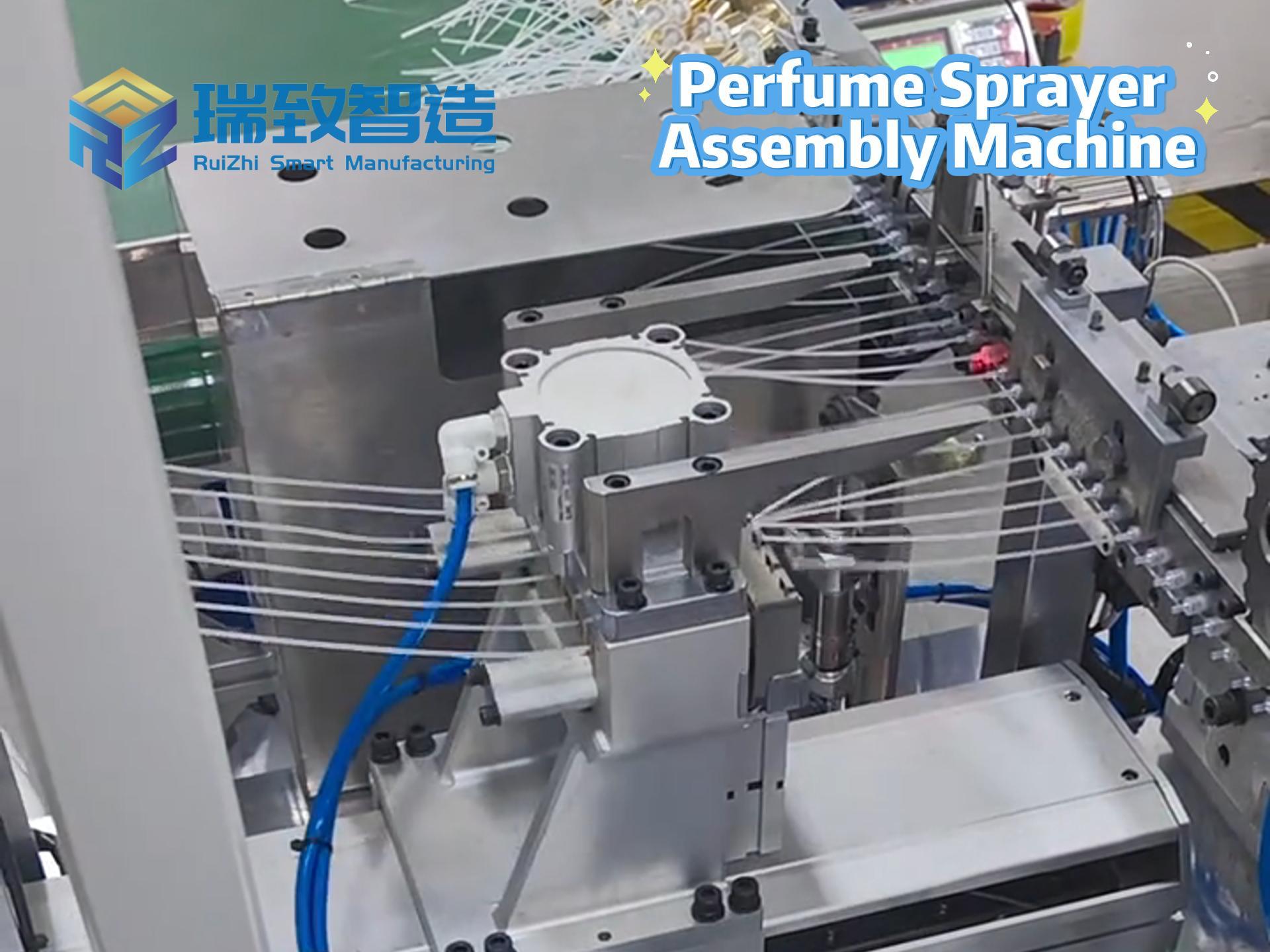

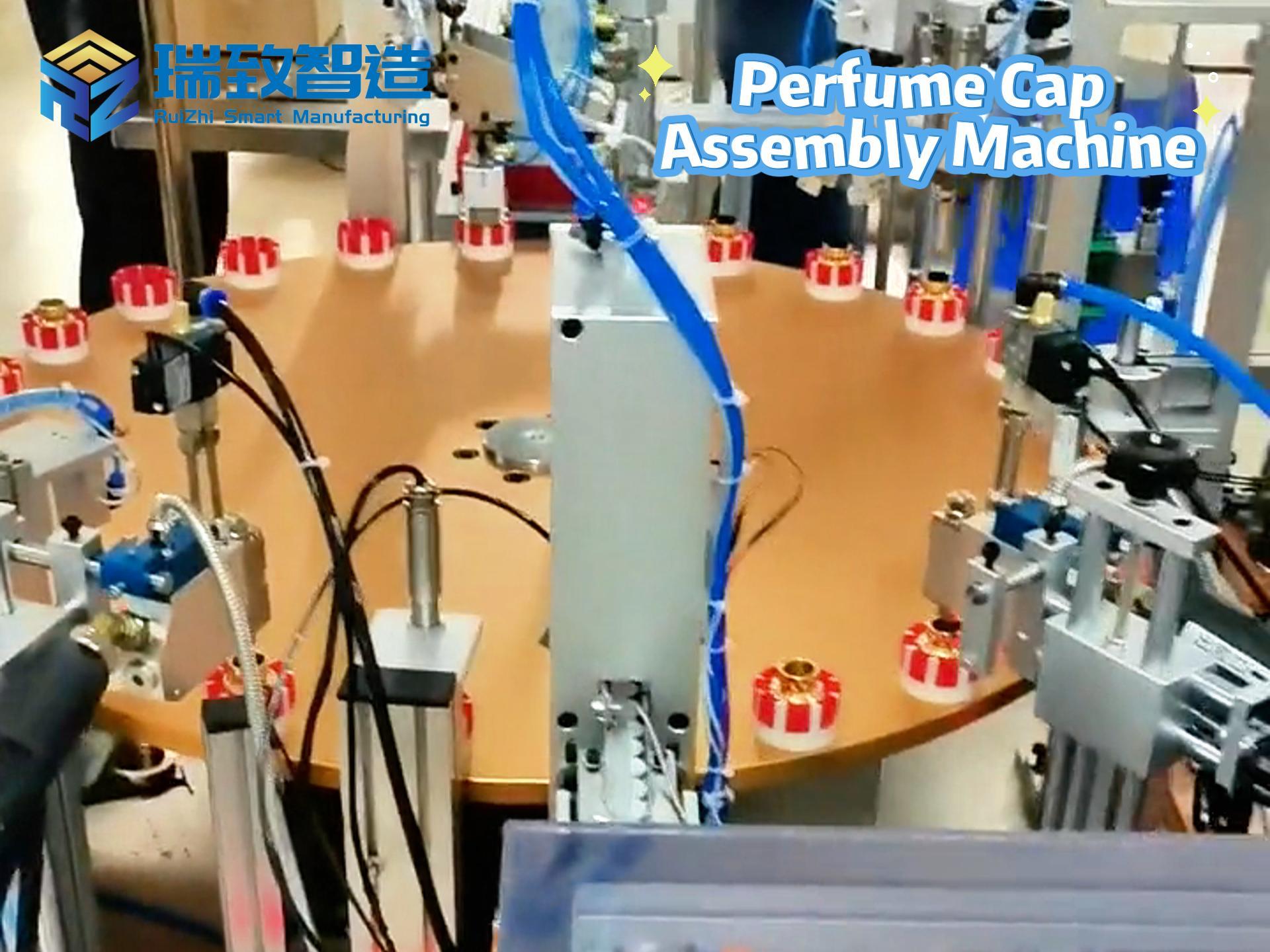

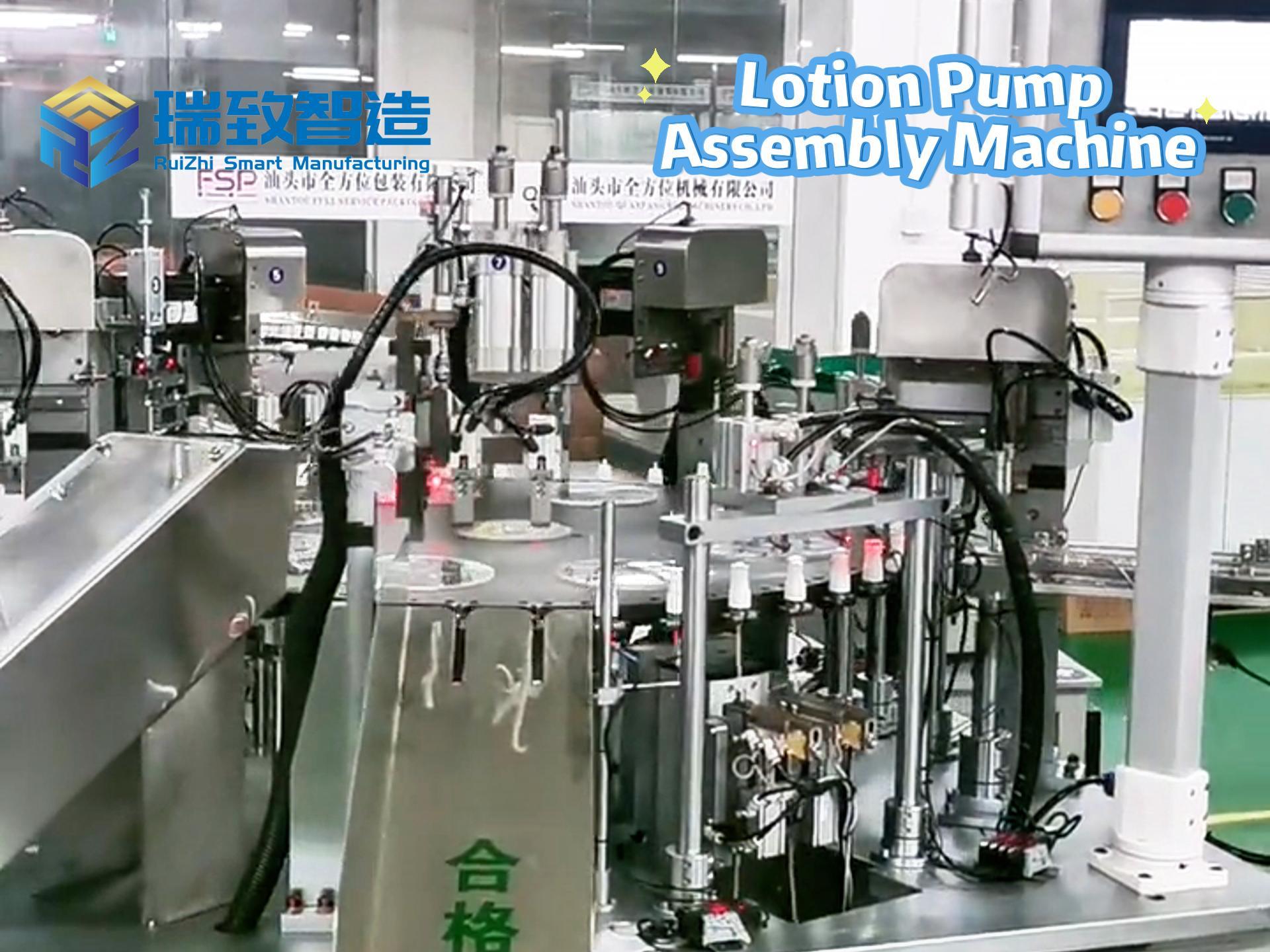

Modern data centers are no longer limited to on-premises servers or cloud computing; instead, they have evolved into complex ecosystems encompassing traditional systems, public clouds, private clouds, and edge environments. Every business scenario, from medical devices in hospital rooms to digital ordering terminals in the catering industry to operational technology systems in manufacturing, adds to the complexity and dependencies of the overall system.

For instance, in manufacturing, the Automatic Quick-Connect Parts Assembly Machine, an intelligent device used in automotive, aerospace, and electronic component production for high-precision, rapid connector assembly, depends on the stability and responsiveness of AI infrastructure. Its AI-driven functions, such as real-time detection of assembly deviations (via computer vision) and dynamic adjustment of torque parameters (to adapt to different part materials), require low-latency inference from edge computing nodes within the data center ecosystem. The machine also generates large volumes of real-time operational data (e.g., alignment accuracy, assembly cycle time, and defect rates) that must be transmitted over high-speed, reliable networks to central AI models for continuous optimization. A temporary infrastructure disruption, such as a bottleneck in the data pipeline or insufficient computing resources for inference, could force the machine to halt, leading to costly production line downtime. This example underscores that AI infrastructure must support not only backend model training but also the real-time, mission-critical needs of frontline intelligent manufacturing equipment.

In a hybrid environment, the complexity of infrastructure operations increases significantly. Without sound infrastructure support, organizations will face limitations in scalability, increased risk of service disruptions, and rising operational costs. The infrastructure supporting AI workloads must not only be stable but also flexible and efficient enough to respond to real-time demands.

Observability: The Key to Modern Infrastructure

In such a complex hybrid environment, observability has become an indispensable tool for enterprise IT management. Observability provides a real-time 360° view of the infrastructure, enabling enterprises to track performance, detect anomalies, and predict potential issues before they lead to business disruptions.

Traditional monitoring tools mainly rely on static thresholds and alerts, while modern observability systems convert telemetry data into actionable insights through intelligent analysis. For example, they can monitor AI-specific metrics, including GPU utilization, model latency, inference drift, and data pipeline bottlenecks, and correlate these metrics with infrastructure events to provide the context needed for debugging and optimization.
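The idea of correlating AI metrics with infrastructure events can be sketched in a few lines. This is a simplified illustration, not how any specific observability product works: the `Sample` and `InfraEvent` types, the rolling z-score rule, the 60-second lookback, and the sample data are all assumptions made for the example.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Sample:
    ts: float      # seconds since the start of the trace
    value: float   # e.g. model latency in milliseconds


@dataclass
class InfraEvent:
    ts: float
    description: str


def find_anomalies(samples, baseline_size=5, z_threshold=3.0):
    """Flag samples that deviate strongly from the preceding baseline window."""
    anomalies = []
    for i in range(baseline_size, len(samples)):
        baseline = [s.value for s in samples[i - baseline_size:i]]
        mu = mean(baseline)
        sigma = max(stdev(baseline), 1e-9)  # guard against a perfectly flat baseline
        if abs(samples[i].value - mu) / sigma > z_threshold:
            anomalies.append(samples[i])
    return anomalies


def correlate(anomalies, events, lookback_s=60.0):
    """Attach infrastructure events that occurred shortly before each anomaly."""
    return {
        a.ts: [e.description for e in events if 0 <= a.ts - e.ts <= lookback_s]
        for a in anomalies
    }


# Hypothetical latency trace (one sample every 10 s) and event log.
latencies_ms = [20, 21, 19, 20, 22, 21, 95, 20]
samples = [Sample(ts=10.0 * i, value=v) for i, v in enumerate(latencies_ms)]
events = [InfraEvent(45.0, "GPU node drained"),
          InfraEvent(300.0, "storage failover")]

anomalies = find_anomalies(samples)
context = correlate(anomalies, events)
print(context)  # {60.0: ['GPU node drained']}
```

Unlike a fixed threshold, the rolling baseline adapts to whatever latency is normal for the workload, and the lookback join supplies the context an operator needs: the latency spike at 60 s is linked to the node drain 15 seconds earlier.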

Through predictive analytics, anomaly detection, and intelligent alerts, observability helps teams shift from reactive to proactive management. It also enhances system resilience, reduces operational costs, and improves visibility into key business metrics such as customer satisfaction, revenue, and service levels.
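As one simple illustration of predictive analytics, the sketch below fits a straight line to recent utilization readings and estimates how long until a resource is exhausted. It assumes roughly linear growth, which real workloads often violate; the function names `fit_line` and `hours_until_full` and the sample readings are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("x values must not all be identical")
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = cov / var_x
    return slope, mean_y - slope * mean_x


def hours_until_full(hours, used_pct, capacity_pct=100.0):
    """Estimate hours remaining after the last reading until capacity is reached.

    Returns None when utilization is flat or falling, since no
    exhaustion time can be projected from the trend.
    """
    slope, intercept = fit_line(hours, used_pct)
    if slope <= 0:
        return None
    t_full = (capacity_pct - intercept) / slope
    return max(0.0, t_full - hours[-1])


# Hypothetical storage utilization readings taken once per hour.
remaining = hours_until_full([0, 1, 2, 3], [50.0, 52.0, 54.0, 56.0])
print(f"Projected hours until full: {remaining:.1f}")
```

A projection like this lets a team raise a warning well before a hard limit is reached, instead of waiting for a threshold alert once the resource is already nearly exhausted.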

The Strategic Role of CIOs

As AI penetrates deeper into enterprise operations, the role of CIOs has transcended that of technical managers; they are becoming core leaders in AI transformation. The reliability of infrastructure is directly related to an enterprise’s business continuity and reputation. A minor configuration error or an undetected bottleneck may trigger a chain reaction, even affecting the entire industry.

Observability also helps CIOs and IT teams allocate resources more effectively, allowing technical personnel to focus on innovation and optimization rather than continuously addressing problems. Through a unified service view, CIOs can assess the impact of infrastructure on business outcomes, guide phased modernization, optimize workload deployment, and achieve a balance among performance, cost, and sustainability.

Conclusion

Artificial intelligence is profoundly changing the way enterprises operate, but its potential can only be realized if the infrastructure is capable of supporting it. Modern data centers are no longer merely places for data storage; they are the starting point of AI performance.

Enterprises must act now to build robust, scalable infrastructure, combined with intelligent observability systems, if they are to stay ahead in an AI-driven competitive landscape. Infrastructure is not just an IT issue; it is a core component of business strategy. Its robustness directly determines whether an enterprise can continue to innovate and remain competitive in a rapidly changing market.