This summer, Russian hackers put a new twist on the barrage of phishing emails sent to Ukrainians.

The hackers embedded an artificial intelligence program in the email attachments. Once installed, the program automatically searched the victim’s computer for sensitive files and sent them back to Moscow.

The Ukrainian government and several cybersecurity companies documented this activity in technical reports in July. It was the first known instance of Russian intelligence being caught using large language models (LLMs) to build malicious code. LLMs power the kind of artificial intelligence chatbot that has become ubiquitous in corporate culture.

These Russian spies are not alone. In recent months, hackers of all stripes (cybercriminals, spies, researchers, and corporate security teams) have begun integrating artificial intelligence tools into their work.

LLMs like ChatGPT are still error-prone. But they have become remarkably good at processing language instructions, translating plain language into computer code, and identifying and summarizing documents.

So far, the technology has not revolutionized hacking by turning complete novices into experts, nor has it allowed would-be cyberterrorists to shut down power grids. But it is making skilled hackers better and faster. Cybersecurity companies and researchers are now using artificial intelligence too, fueling an escalating cat-and-mouse game between offensive hackers, who find and exploit software flaws, and defenders, who try to fix those flaws first.

Heather Adkins, Google’s vice president of security engineering, said: “This is just the beginning, and maybe moving towards the medium term.”

In 2024, Adkins’ team launched a project that uses Google’s LLM, Gemini, to hunt for important software vulnerabilities (bugs) before criminal hackers find them. Earlier this month, Adkins announced that her team had so far found at least 20 important, overlooked bugs in commonly used software and had alerted the companies responsible so they could fix them. The work is ongoing.

She said none of the vulnerabilities were shocking or something only a machine could have found. But the process is faster with artificial intelligence. “I haven’t seen anyone discover anything new,” she said. “It’s just doing things we already know how to do. But this will improve.”

Adam Meyers, senior vice president at the cybersecurity company CrowdStrike, said his company not only uses artificial intelligence to help people who think they have been hacked, but is also seeing growing evidence of AI use by the Chinese, Russian, Iranian, and criminal hackers it tracks.

“The more advanced adversaries are using it to their advantage,” he told NBC News. “We see more and more of this every day.”

This shift is only beginning to catch up with years of hype in the cybersecurity and artificial intelligence industries, particularly since ChatGPT was released to the public in 2022. The tools have not always proved effective, and some cybersecurity researchers have complained about would-be hackers being fooled by fake vulnerability findings generated with AI.

Scammers and social engineers (those who pretend to be others or write convincing phishing emails in hacking operations) have been using LLMs to make themselves more persuasive since at least 2024.

But using artificial intelligence to attack targets directly is only now really taking off, said Will Pearce, CEO of DreadNode, one of a handful of new security companies that specialize in hacking with LLMs.

He said the reason is simple: the technology has finally begun to live up to expectations.

“Currently, both the technology and the models are very good,” he said.

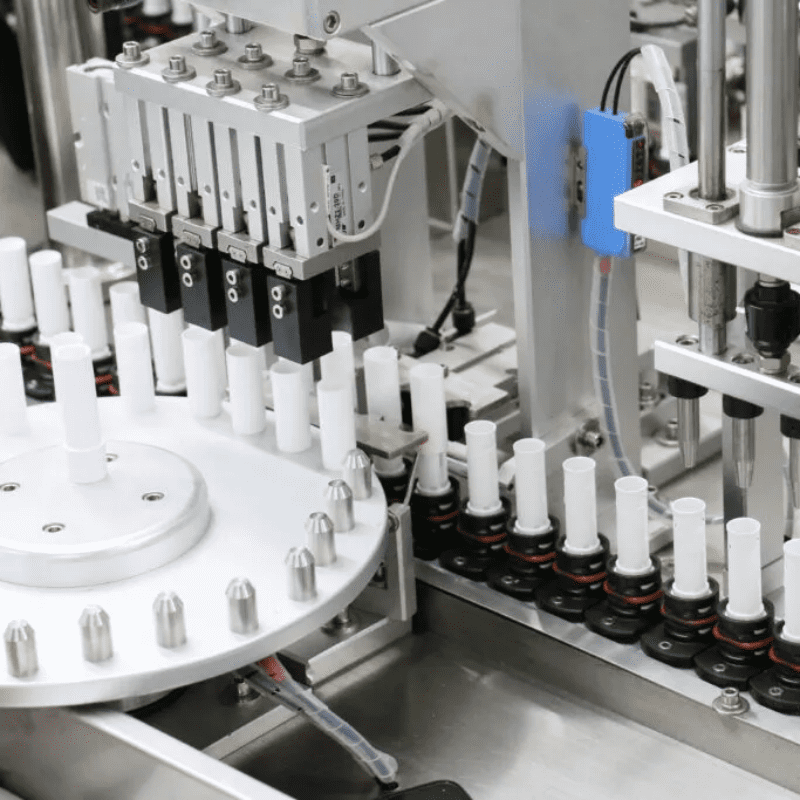

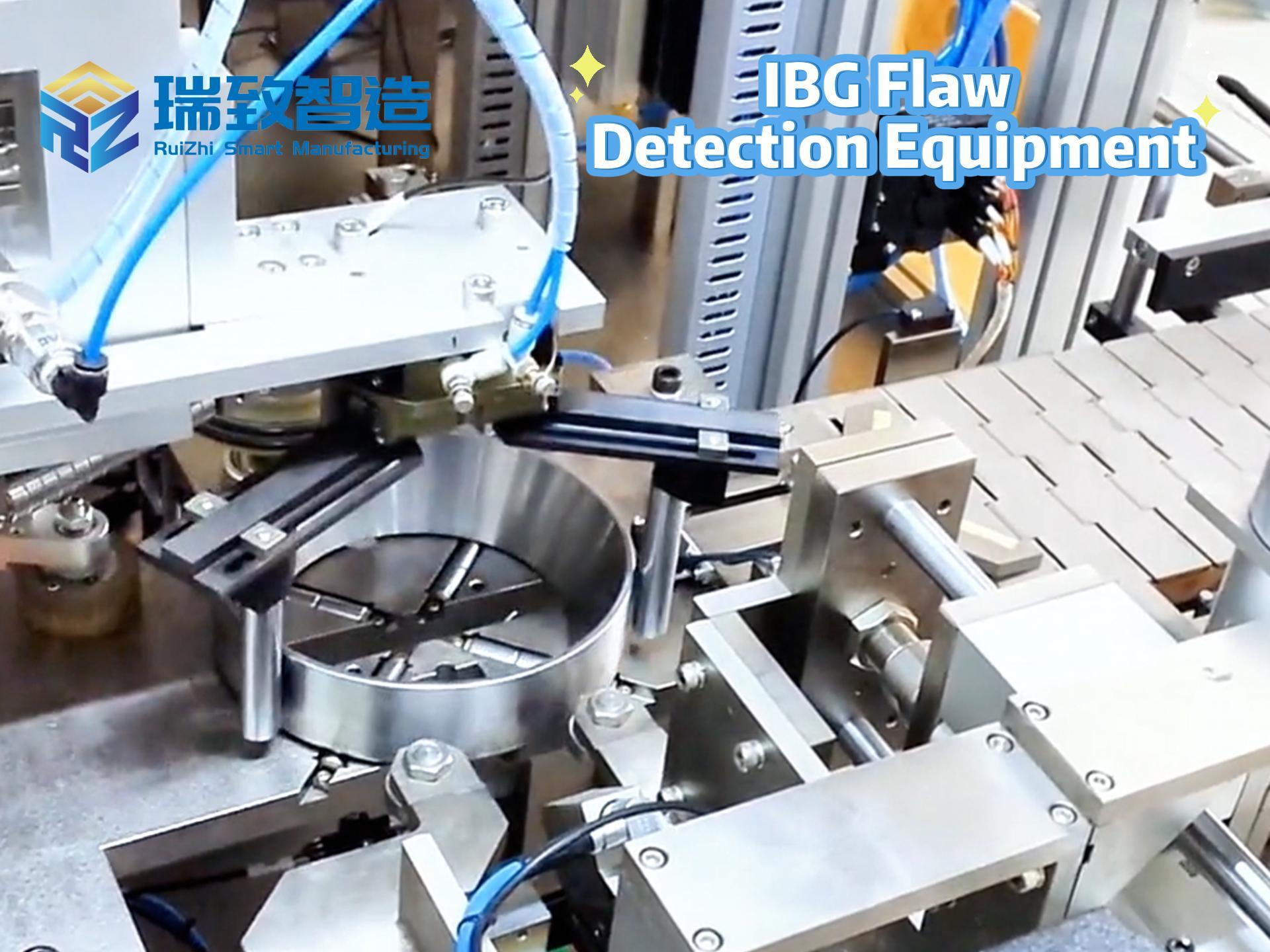

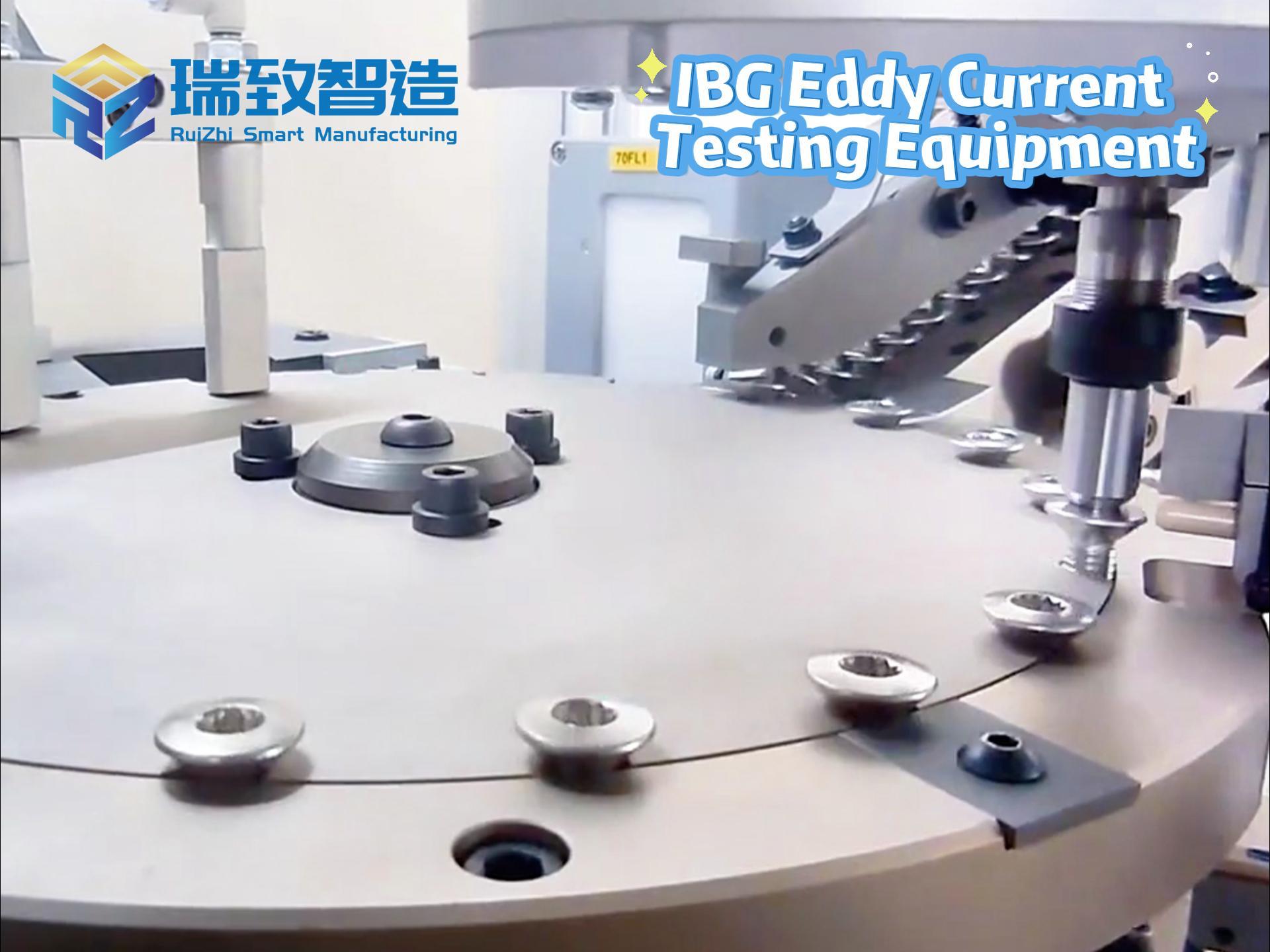

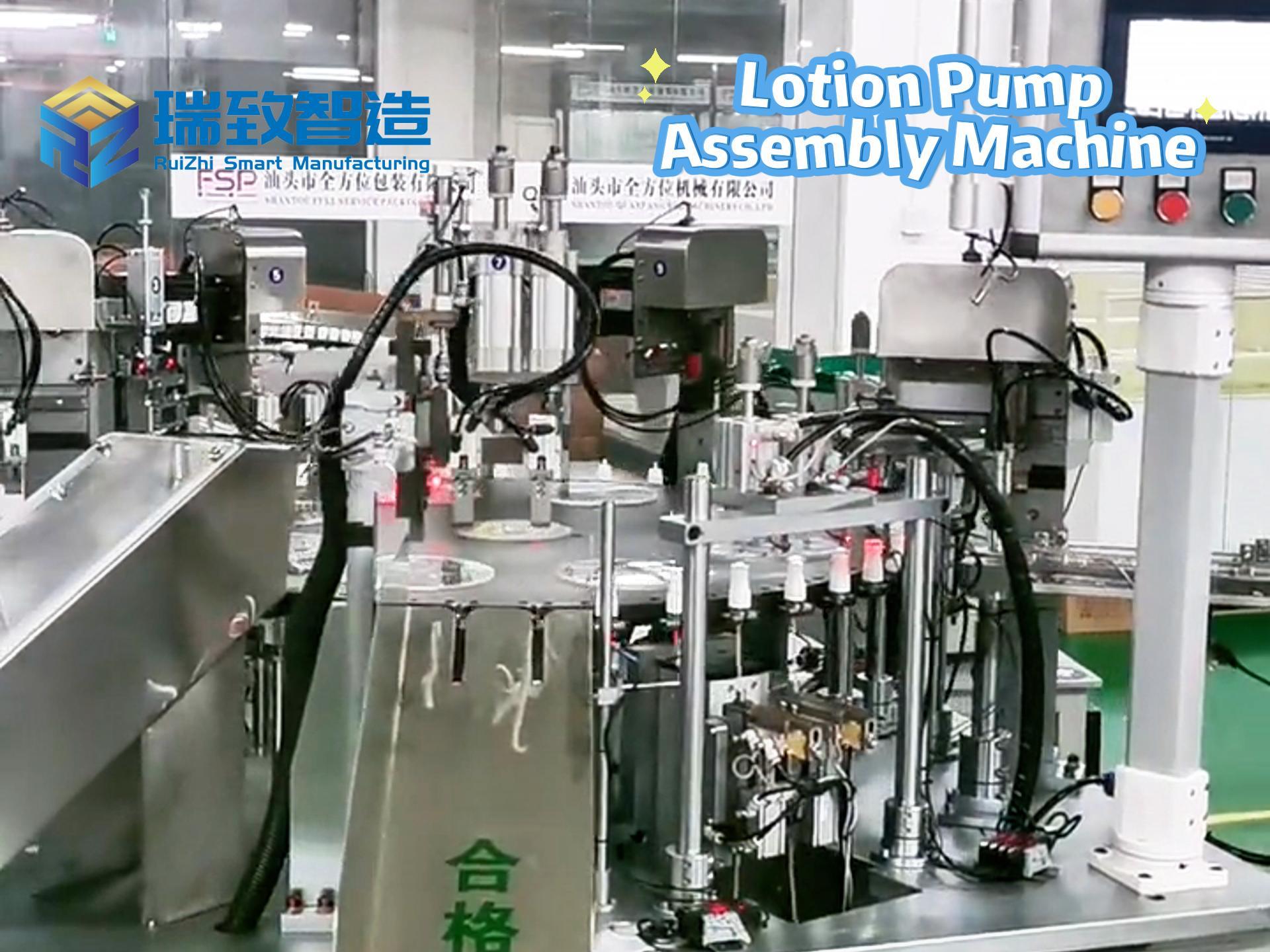

Pearce told NBC News that less than two years ago, automated artificial intelligence hacking tools needed a lot of tweaking to work properly, but they have since become far more capable.

Xbow, another startup focused on hacking with artificial intelligence, made history in June by becoming the first AI company to top HackerOne’s U.S. leaderboard. HackerOne runs a live leaderboard of hackers around the world; since 2016 it has tracked the hackers who find the most important vulnerabilities, giving them bragging rights. Last week, HackerOne added a new category for groups that automate their hacking with AI tools, to distinguish them from individual human researchers. Xbow still holds the top spot.

Hackers and cybersecurity experts have not yet determined whether artificial intelligence will ultimately help attackers or defenders more. But for now, defense seems to have the upper hand.

Alexei Bulazel, senior director for cyber at the White House National Security Council, said during a panel at the Def Con hacking conference in Las Vegas last week that this advantage will hold as long as the United States is home to most of the world’s most advanced technology companies.

“I firmly believe that artificial intelligence is more beneficial to the defensive side than the offensive side,” Bulazel said.

He noted that hackers rarely find highly destructive vulnerabilities in large U.S. technology companies. Criminals typically break into computers by exploiting small, overlooked flaws at smaller companies that lack elite cybersecurity teams. Artificial intelligence, he said, is particularly useful for finding those flaws before criminals do.

“What artificial intelligence is better at is identifying vulnerabilities cheaply and easily, which really democratizes access to vulnerability information,” Bulazel said.

However, that advantage may not last as the technology develops. One reason is that there is not yet a free-to-use automated hacking tool, or penetration tester, that incorporates artificial intelligence. Automated tools of this kind without AI are already widely available online, nominally as programs for testing systems against the techniques criminal hackers use.

Google’s Adkins said that if such a tool incorporating an advanced LLM became freely available, it would likely mean open season on smaller companies’ programs.

“I think there’s also reason to assume that at some point someone will release [such a tool],” she said. “At that point, I think things will get a bit dangerous.”

Meyers of CrowdStrike said the rise of agentic artificial intelligence (tools that can carry out more complex tasks, such as writing and sending emails or executing code) could pose a significant cybersecurity risk.

“Agentic AI is really AI that can act on your behalf, right? That will become the next insider threat, because organizations are deploying these agentic AIs without built-in guardrails to stop someone from abusing them,” he said.

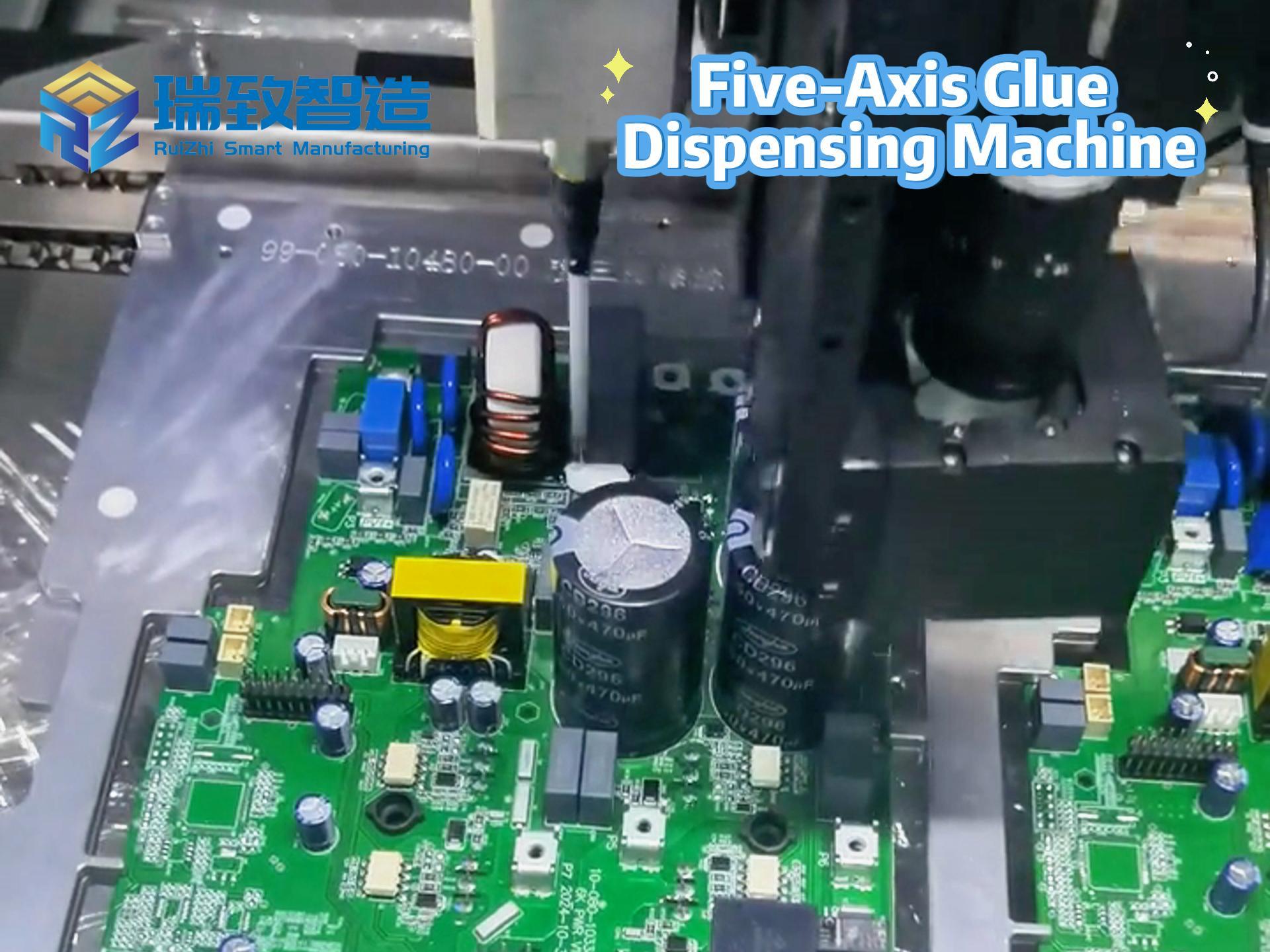

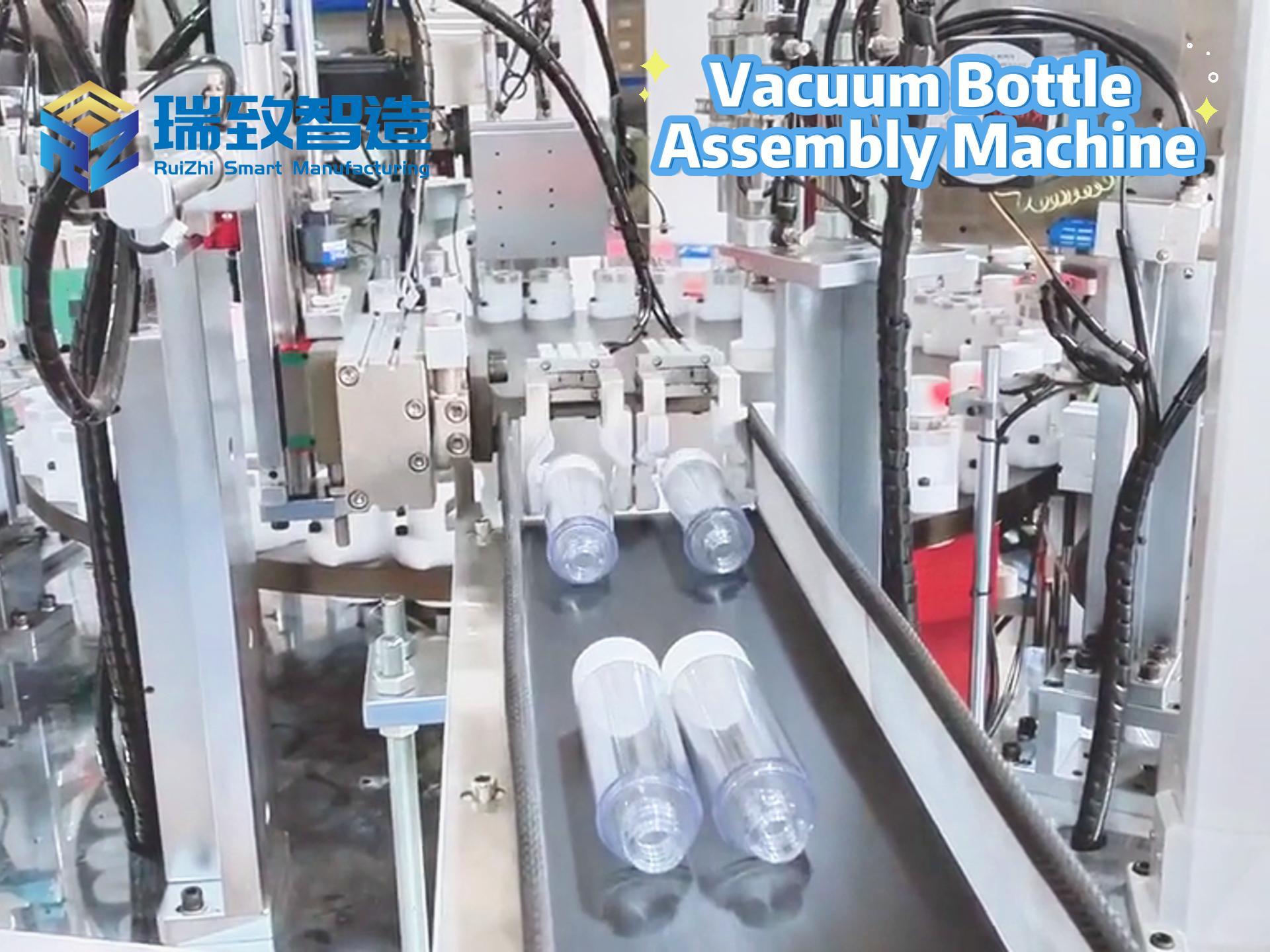

Component assembly machine

Artificial intelligence component assembly machine