Artificial intelligence (AI) is emerging as a key driver of change in data center infrastructure. With the rapid advancement of AI technology and the widespread adoption of generative AI applications, traditional data centers are evolving into AI-ready data centers. AI data centers not only host high-density computing workloads but must also meet higher standards for power supply, cooling, and sustainability. This transformation is reshaping how industries innovate, manage operational efficiency, and address environmental impacts.

AI Redefines Data Center Infrastructure

According to research by McKinsey, by 2030, approximately 70% of data center demand may be related to artificial intelligence. Meanwhile, the global demand for data center capacity is projected to grow at an annual rate of 19% to 22% from 2023 to 2030. This trend directly reflects the growth of AI workloads and their role in driving the development of new data center architectures.
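To make the growth figure concrete, the following minimal sketch compounds the quoted 19% to 22% annual rate over the seven years from 2023 to 2030. It assumes the rate compounds once per year and stays constant, which the cited projection does not state explicitly; the function name is illustrative only.

```python
def capacity_multiplier(annual_growth: float, start_year: int, end_year: int) -> float:
    """Return demand at end_year as a multiple of demand at start_year.

    Assumes constant growth compounded once per year (an assumption,
    not something the cited projection spells out).
    """
    years = end_year - start_year
    return (1.0 + annual_growth) ** years


low = capacity_multiplier(0.19, 2023, 2030)
high = capacity_multiplier(0.22, 2023, 2030)
print(f"Demand in 2030 relative to 2023: {low:.1f}x to {high:.1f}x")
```

Under these assumptions, demand in 2030 would be roughly 3.4 to 4.0 times the 2023 level.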

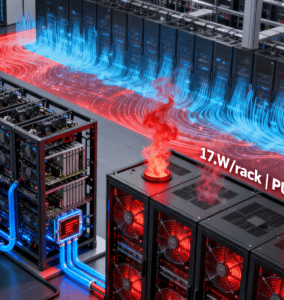

The main impacts of AI workloads on data center infrastructure are a significant increase in power density and higher cooling requirements. For instance, the average rack power density is expected to rise from 17 kW in 2024 to 30 kW in 2027. For training workloads of models like ChatGPT, power consumption per rack can exceed 80 kW, and racks of high-end GPUs may even reach 120 kW. In contrast, AI inference workloads consume less power. These differences impose distinct requirements on data centers in terms of electrical design, thermal management strategies, location selection, and system resilience.
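One way to read these density figures is to ask how many racks a fixed IT load would occupy at each density. The sketch below does that for a hypothetical 1 MW hall. The hall size and the labels are assumptions chosen only for illustration, and 80 kW is used as a reference point even though the text says training racks can exceed it.

```python
import math

# Rack densities quoted in the text, in kW per rack.
RACK_DENSITIES_KW = {
    "average 2024": 17,
    "average 2027 (projected)": 30,
    "AI training": 80,
    "high-end GPU": 120,
}

IT_LOAD_KW = 1000  # hypothetical 1 MW hall, chosen only for illustration


def racks_needed(it_load_kw: float, rack_density_kw: float) -> int:
    """Smallest whole number of racks that can carry the given IT load."""
    if rack_density_kw <= 0:
        raise ValueError("rack density must be positive")
    return math.ceil(it_load_kw / rack_density_kw)


for label, density in RACK_DENSITIES_KW.items():
    count = racks_needed(IT_LOAD_KW, density)
    print(f"{label}: {count} racks at {density} kW each")
```

The same load fits into far fewer racks as density rises, which concentrates both the electrical feed and the heat that must be removed from each rack position.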

Key Characteristics of AI-Ready Data Centers

Scalability

As edge computing and hyperscale AI facilities develop, data centers need to be highly scalable. AI-ready data centers should be able to scale with model sizes or deploy new projects on demand, ensuring that computing resources remain continuously available and that the facility can adapt to emerging technologies.

Energy Efficiency

AI data centers typically consume more energy than traditional data centers, so energy efficiency is a core objective. Operational costs, environmental regulations, and social responsibility are the three key drivers for optimizing energy efficiency. Operators need to focus on improving PUE (Power Usage Effectiveness) and on designing efficient cooling systems to reduce energy consumption and carbon emissions.
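PUE is defined as total facility energy divided by the energy delivered to IT equipment, so a value of 1.0 would mean no overhead at all. A minimal sketch of the calculation follows; the meter readings are hypothetical values, not measurements from any real site.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Fraction of total energy spent on cooling, power conversion, and other non-IT loads."""
    return (total_facility_kwh - it_equipment_kwh) / total_facility_kwh


# Hypothetical meter readings for one billing period.
total_kwh = 1500.0
it_kwh = 1000.0

print(f"PUE: {pue(total_kwh, it_kwh):.2f}")
print(f"Non-IT overhead: {overhead_share(total_kwh, it_kwh):.1%}")
```

Lowering PUE means shrinking the gap between the two readings, which for most facilities comes mainly from the cooling system.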

Efficient Cooling Solutions

The increased computing density of AI workloads leads to a significant rise in the heat generated by servers, making cooling a core issue in AI data center design. The choice of cooling strategy affects not only performance and reliability but also operational costs and sustainability goals. Compared with traditional air cooling, liquid cooling is becoming the mainstream solution for high-density AI workloads.

Application of Liquid Cooling Technology in AI Data Centers

Liquid cooling technology effectively reduces the energy consumption of AI servers through its more efficient heat transfer capability, while supporting higher heat density and reliability. Its main forms include:

Direct-to-Chip Cooling

This technology delivers cooling fluid directly to the chips that generate the most heat, achieving efficient heat dissipation. Suitable for rack densities of 100–175 kW, it can significantly reduce cooling-related energy consumption (by up to approximately 72%) and improve heat capture efficiency (70–75%). Direct-to-chip cooling has been widely used in AI training in hyperscale data centers such as Google’s.
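The heat capture figure implies that part of the rack's heat still has to be removed by air. The sketch below splits a rack's heat load between the liquid loop and the room air, then estimates the coolant flow needed using the standard relation Q = m · c_p · ΔT. It assumes plain water as the coolant and a 10 K temperature rise across the rack; both are illustrative assumptions, and real loops often use other fluids and temperature differences.

```python
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # approximate value for liquid water
WATER_DENSITY_KG_PER_L = 1.0             # approximate, assumed constant


def split_heat_kw(rack_kw: float, capture_fraction: float) -> tuple[float, float]:
    """Return (heat carried by liquid, heat left for air) in kW."""
    if not 0.0 <= capture_fraction <= 1.0:
        raise ValueError("capture_fraction must be between 0 and 1")
    liquid_kw = rack_kw * capture_fraction
    return liquid_kw, rack_kw - liquid_kw


def coolant_flow_l_per_min(heat_kw: float, delta_t_k: float) -> float:
    """Water flow needed to carry heat_kw with a temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_per_s = heat_kw * 1000.0 / (WATER_SPECIFIC_HEAT_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


# 100 kW rack at the lower end of the quoted 70-75% capture range.
liquid_kw, air_kw = split_heat_kw(100.0, 0.70)
flow = coolant_flow_l_per_min(liquid_kw, delta_t_k=10.0)  # 10 K rise is an assumption
print(f"Liquid loop: {liquid_kw:.0f} kW, air: {air_kw:.0f} kW, flow: {flow:.0f} L/min")
```

Even with direct-to-chip cooling, a rack at this density would under these assumptions still reject tens of kilowatts to air, so the air-side system cannot be removed entirely.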

Immersion Cooling

Server components are immersed in non-conductive fluid for efficient heat dissipation. This method is suitable for ultra-high-density racks exceeding 175 kW and can save up to 95% of energy and 90% of water resources, while achieving a PUE as low as about 1.03. Immersion cooling also supports a wide range of temperature control and flexible deployment, giving hyperscale AI data centers strong thermal management.

In addition, liquid cooling technology can be combined with district heating and recycled heat utilization to further enhance the environmental sustainability and ESG (Environmental, Social, and Governance) performance of data centers.
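The density ranges quoted above can be expressed as a simple lookup. The sketch below is only a restatement of those ranges, not a design rule. The text does not say what to use below 100 kW, so that branch is labeled as unspecified, and the function name is hypothetical.

```python
def suggest_cooling(rack_kw: float) -> str:
    """Map a rack density to the liquid cooling form the text associates with it."""
    if rack_kw < 0:
        raise ValueError("rack density cannot be negative")
    if rack_kw > 175:
        return "immersion cooling"
    if rack_kw >= 100:
        return "direct-to-chip cooling"
    # The text gives no range below 100 kW; this label is an assumption.
    return "not specified in the text (air or liquid, depending on design)"


for density in (30, 120, 200):
    print(f"{density} kW rack: {suggest_cooling(density)}")
```

In practice the choice also depends on facility water availability, retrofit constraints, and hardware support, none of which this lookup considers.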

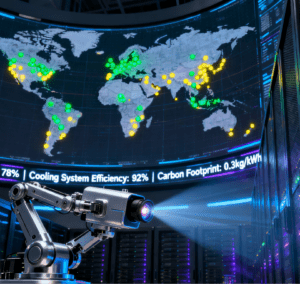

Global Development Trends of AI Data Centers

Sustained Growth of Hyperscale Data Centers

Hyperscale data centers are expected to continue dominating the deployment of AI workloads. It is predicted that approximately 60–65% of AI workloads in Europe and the United States will be hosted on the infrastructure of cloud service providers and hyperscale data centers. The expansion of hyperscale data centers not only provides abundant resources but also serves as a key driver for innovative cooling technologies and energy-efficient hardware.

Notably, the large-scale production of core hardware for AI data centers, such as server racks, liquid cooling system pipelines, and power distribution units, relies heavily on automated assembly equipment to ensure precision and efficiency. The Nut automatic assembly machine stands out at this stage: equipped with AI visual positioning (with a recognition accuracy of up to 0.02 mm) and real-time torque feedback control, it can complete 35–45 nut tightening operations per minute for data center hardware components. Whether fixing the brackets of high-power GPU servers or assembling the connection flanges of liquid cooling pipelines, it keeps torque error within ±2%, avoiding the loosening risks caused by manual assembly errors. Such automated equipment not only shortens the production cycle of data center hardware by 40% but also ensures the structural stability required for long-term operation of high-density AI equipment, supporting the large-scale construction of hyperscale data centers.

Regulatory and Sustainability Frameworks

With the rapid development of AI data centers, global regulatory bodies and industry standards are imposing higher requirements on their design and operation, including:

ASHRAE TC 9.9 Guidelines: Provide thermal management guidelines and best practices for data center equipment.

Uptime Institute Tiers: Classify data center resilience and redundancy into four levels; AI-ready data centers increasingly require Tier III and Tier IV standards.

EU Code of Conduct for Data Centers: Emphasizes energy efficiency and sustainability best practices.

These frameworks provide operators with clear design and operation roadmaps, helping high-density computing infrastructure balance efficiency, availability, and environmental responsibility.

Future Outlook: Innovation and Strategic Cooperation

AI data centers are undergoing profound changes, including expansion into edge computing, the application of intelligent management systems (such as predictive maintenance and intelligent cooling), and continuous innovation in high-efficiency energy-saving technologies. In a rapidly changing market environment, operators need to establish reliable strategic partnerships to ensure that data center design, development, and operation can be continuously optimized, meeting the comprehensive requirements of future AI workloads for high performance, sustainability, and cost efficiency.