Stanford Health AI Week: Bridging Intelligent Automation, Biomedicine, and Human-Centric Care

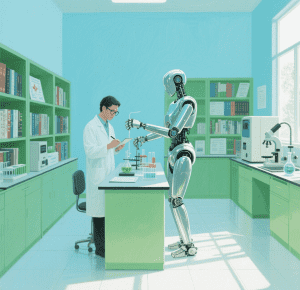

Thousands of experts converged at Stanford Health AI Week (June 2–6) to tackle a pivotal question: How can artificial intelligence and intelligent automation revolutionize health care while preserving human empathy and equity? Hosted by RAISE Health, the event brought together leaders in AI, biomedicine, and policy to explore how intelligent automation, from clinical decision support to AI-driven diagnostic tools, can transform care delivery while addressing ethical, educational, and technological barriers. Amid discussions of cutting-edge models and data-driven innovations, the focus remained clear: AI and automation tools must serve as allies to clinicians, not replacements, and must prioritize equitable outcomes for all patients.

The Core Challenge: Balancing Innovation with Human-Centered Care

Lloyd Minor, MD, Stanford’s dean of medicine, emphasized that advancing AI alone is insufficient. “We must confront roadblocks like misaligned incentives in deploying intelligent automation and fragmented data systems,” he stated. “The goal is to ensure AI accelerates biomedical discovery and enhances human connection in care—a balance critical for ethical progress.”

James Landay, PhD, co-lead of RAISE Health, echoed this, highlighting Stanford’s mission to integrate AI with human values: “As we develop automation equipment and AI tools for clinics, we must ask: Do these technologies empower clinicians and patients, or merely replace them? Our responsibility is to design systems that augment human expertise, not diminish it.”

Key Insights: AI as a Catalyst for Intelligent Automation in Healthcare

Reimagining Education for an AI-Driven Future

Clinicians today use AI in daily life but often hesitate to adopt it in practice. Veena Jones, MD, of Sutter Health, stressed the need for “change management” to build trust: “Leaders must invest in training clinicians to collaborate with intelligent automation, whether through AI literacy workshops or CEO-led summits. If we delay, we risk falling behind in a healthcare landscape increasingly shaped by AI.”

Kimberly Lomis, MD, of the AMA, added a critical perspective: “We must also ‘train AI to work with us.’ Current workflows don’t support learning alongside automation. We need systems that blend clinical decision support with on-the-job education, turning every interaction with AI into a teaching moment.”

Integrating AI into Clinical Practice: From Paperwork to Partnerships

Panelists highlighted the potential of automation to relieve administrative burdens and free clinicians for patient care. Carla Pugh, MD, PhD, noted: “AI can handle the ‘unsexy’ tasks (charting, prior authorizations) so we can focus on complex decision-making. But this requires co-designing AI with clinicians to ensure tools fit real-world workflows.”

Patient advocacy groups emphasized the need for inclusive AI development. Andrea Downing of The Light Collective urged: “Patients must be partners, not just reviewers. When designing intelligent automation for underserved communities, we need to center their priorities from the outset—whether through co-governance boards or community-led data collection.”

AI Agents and Foundation Models: Accelerating Discovery

In pediatrics, AI agents such as Ello Technology’s virtual elephant are helping bridge gaps in care by supporting children’s development at home, carrying automation beyond the clinic and into family life. “Pediatricians can’t be with families 24/7,” said Catalin Voss. “AI agents extend our reach, providing personalized support between visits.”

Foundation models, such as those used for protein design and medical imaging, are revolutionizing biomedical research. Russ Altman, MD, PhD, noted: “These models act as ‘automation equipment’ for scientists, accelerating drug discovery and unlocking insights from complex datasets. But we must ensure their outputs are transparent and bias-free.”

Ethics and Policy: Governing AI for Equity

Sanmi Koyejo, PhD, highlighted a stark imbalance: Only 5% of health AI studies use real patient data, and just 16% assess bias. “We need intelligent automation systems that prioritize fairness from development to deployment—whether through real-time bias detection or diverse training datasets.”

In policy discussions, experts debated “humans in the loop” frameworks for AI-driven insurance and diagnostics. Daniel Ting, MBBS, PhD, offered an analogy: “Using AI is like driving a car: you don’t need to know how it’s built, but you must understand how to use it safely. Clinicians must grasp AI’s limitations and implications to wield it responsibly.”

Closing: A Vision for AI as a Complement to Human Expertise

As the week concluded, speakers rejected the “replacement mindset” and instead advocated for AI as a tool to enhance uniquely human roles. Bryant Lin, MD, a clinician with 20 years of experience, summed it up: “AI may outperform us in diagnostics, but it can’t hold a patient’s hand at end of life. Medicine’s core is human connection—and AI should amplify, not replace, that.”

Nigam Shah, MBBS, PhD, warned against the “Turing Trap”—building AI that merely replicates human tasks. “Instead, let’s focus on intelligent automation that enables new possibilities: AI and clinicians collaborating to solve problems neither could tackle alone. That’s where the true revolution lies.”

Eric Horvitz, MD, PhD, of Microsoft, framed the era as the “Computational Revolution,” in which AI and automation will redefine healthcare over the coming decades. But as Natalie Pageler, MD, emphasized in the pediatrics track, the focus must remain on “lifetimes of potential realized,” ensuring that AI serves the vulnerable, adapts to evolving patient needs, and upholds the sanctity of human care.