Intelligent robots are at a critical point in a new technological leap. They are evolving from pre-programmed automated machines into autonomous intelligent systems capable of independently perceiving, reasoning, and acting in the real world. With the rapid development of digital twins, edge computing, multi-modal perception, and foundation models, robots are acquiring real-time learning and safe decision-making capabilities, propelling Physical Artificial Intelligence (Physical AI) into a new phase of scalable deployment.

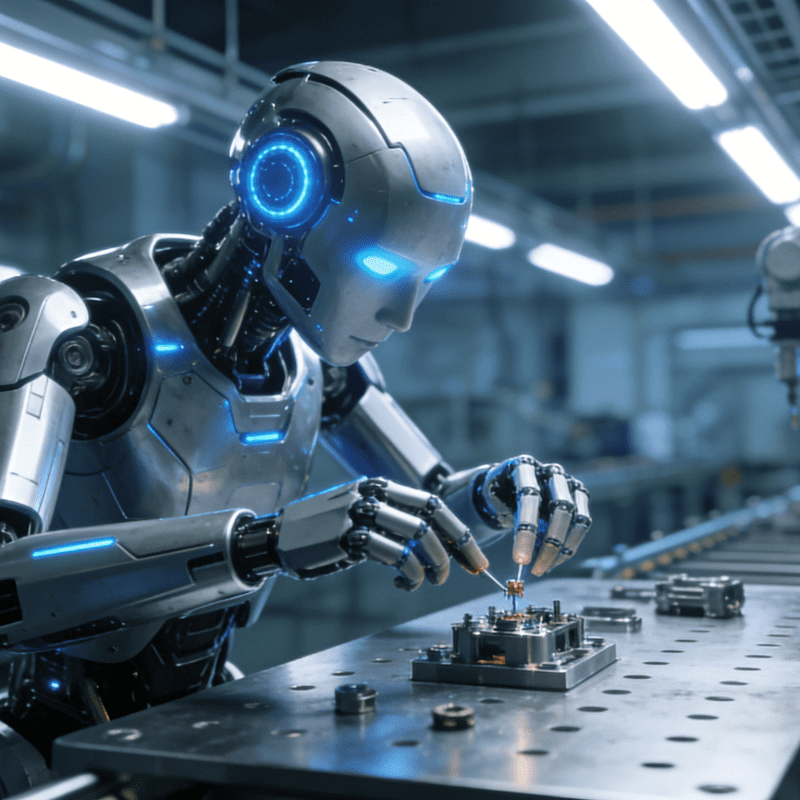

Future robots will not merely serve as tools to replace human labor; instead, they will become intelligent collaborators that work alongside humans, reshaping productivity structures across industries such as manufacturing, logistics, healthcare, and services.

What Is Physical Intelligence in Robotics?

Physical Intelligence refers to a robot’s integrated ability to perceive the environment, construct world models, perform reasoning, and execute actions in the physical world. It consists of three core layers:

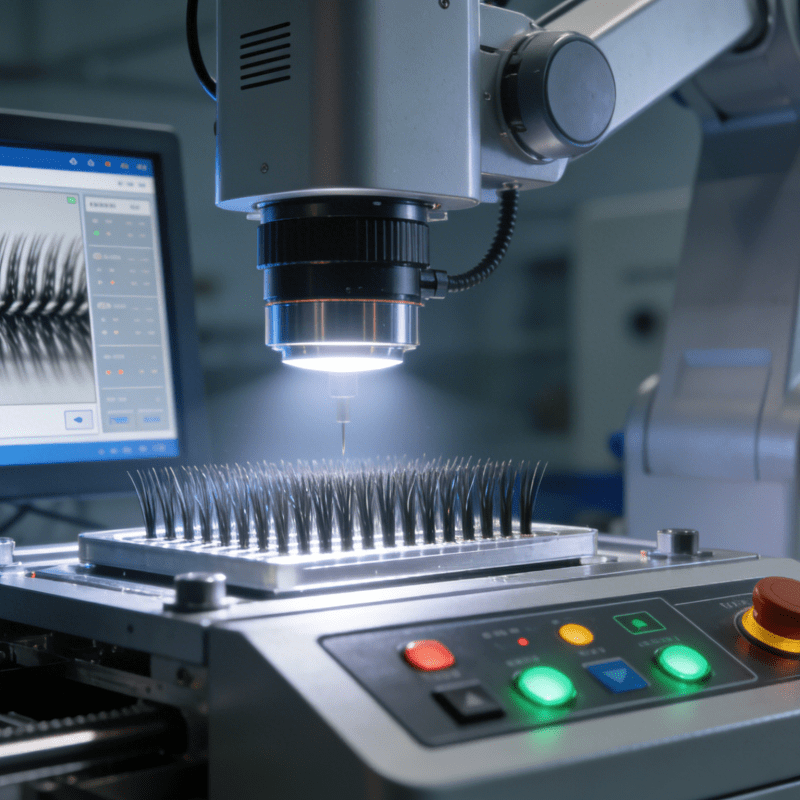

Multi-modal Perception: Continuously capturing dynamic real-world data through visual, tactile, force, and other sensors.

Logical Reasoning and Decision-Making: Formulating sound strategies for uncertain scenarios using language models, world models, and counterfactual prediction.

Embodied Control and Execution: Translating high-level intentions into low-level controls to achieve smooth, stable, and interpretable motion behaviors.
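The three layers can be read as stages of a single control cycle that repeats continuously. The Python sketch below is purely illustrative: the two "sensor" readings, the `WorldModel` class, the workspace limits, and the proportional controller are hypothetical stand-ins chosen for brevity, not components of any particular robot stack.

```python
from dataclasses import dataclass, field


@dataclass
class WorldModel:
    """Hypothetical world model: keeps a running estimate of one object's position."""
    object_pos: float = 0.0
    history: list = field(default_factory=list)

    def update(self, observation: dict) -> None:
        # Fuse two modalities by averaging (a stand-in for real sensor fusion).
        self.object_pos = (observation["vision"] + observation["touch"]) / 2.0
        self.history.append(self.object_pos)


def perceive(true_pos: float) -> dict:
    # Multi-modal perception: two slightly biased readings of the same quantity.
    return {"vision": true_pos + 0.02, "touch": true_pos - 0.02}


def reason(model: WorldModel, workspace: tuple = (-1.0, 1.0)) -> float:
    # Decision-making: aim at the estimated object, but only within reachable space.
    lo, hi = workspace
    return min(max(model.object_pos, lo), hi)


def act(gripper_pos: float, target: float, gain: float = 0.5) -> float:
    # Embodied control: one proportional step toward the target.
    return gripper_pos + gain * (target - gripper_pos)


def run_cycle(true_pos: float, gripper_pos: float = 0.0, steps: int = 20) -> float:
    model = WorldModel()
    for _ in range(steps):
        model.update(perceive(true_pos))        # perceive
        target = reason(model)                  # reason
        gripper_pos = act(gripper_pos, target)  # act
    return gripper_pos
```

Calling `run_cycle(0.5)` drives the gripper to roughly 0.5, while `run_cycle(3.0)` stops near the workspace edge at 1.0, because the reasoning layer refuses an unreachable target.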

Recent research has strengthened robots’ ability to handle unstructured environments, respond to unexpected events, and adjust their behavior based on experience. Nevertheless, fully generalized physical intelligence still faces technical bottlenecks in latency, reliability, task grounding, and safety.

Integration of Foundation Models and Robotics

Multi-modal foundation models are emerging as the core driving force behind next-generation robotic intelligence. Their strength lies in unifying the representations of perception, language, and action, enabling robots to understand goals through language and transfer capabilities across diverse tasks and hardware platforms.

Cross-platform Generalization

For example, Google DeepMind’s RT-X models are trained jointly on the Open X-Embodiment dataset, which pools data from 22 robot embodiments, significantly improving how well motion policies transfer between robots with different morphologies.
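Training one policy on many robots requires their actions to share a common representation. A minimal sketch of one common ingredient: map each robot’s native action ranges to a normalized [-1, 1] space, then discretize every dimension into bins so that a model can predict actions as tokens. The `ActionSpec` class, the bounds, and the 256-bin choice are illustrative assumptions, not RT-X’s exact preprocessing.

```python
from dataclasses import dataclass


@dataclass
class ActionSpec:
    """Per-robot bounds for each action dimension (hypothetical values)."""
    low: list[float]
    high: list[float]


def normalize(action, spec):
    # Map each dimension from [low, high] to [-1, 1] so different robots share one scale.
    return [2.0 * (a - lo) / (hi - lo) - 1.0
            for a, lo, hi in zip(action, spec.low, spec.high)]


def to_tokens(norm_action, num_bins=256):
    # Uniformly discretize [-1, 1] into integer bins, clamping out-of-range values.
    tokens = []
    for x in norm_action:
        x = min(max(x, -1.0), 1.0)
        tokens.append(min(int((x + 1.0) / 2.0 * num_bins), num_bins - 1))
    return tokens


def from_tokens(tokens, spec, num_bins=256):
    # Decode bin centers back into one specific robot's native units.
    out = []
    for t, lo, hi in zip(tokens, spec.low, spec.high):
        x = (t + 0.5) / num_bins * 2.0 - 1.0
        out.append(lo + (x + 1.0) / 2.0 * (hi - lo))
    return out
```

Because tokens live in the shared space, the same predicted token decodes to a small motion on a robot with tight bounds and a proportionally larger one on a robot with wide bounds.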

Multi-modal Reasoning

Covariant’s RFM-1 integrates language, vision, video, and robotic interaction data, giving robots world-prediction, task-decomposition, and real-time self-improvement capabilities. It also lets robots follow instructions given in natural language.

Human-like Generalization Architecture

NVIDIA’s GR00T series adopts a dual-system architecture that pairs a language-based reasoning system with a motion-control system, giving humanoid robots precise motion control and the ability to generalize across scenes, and laying the groundwork for more complex applications.
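A dual-system design is easiest to picture as two loops running at different rates: a slow reasoner that occasionally updates a subgoal, and a fast controller that tracks the latest subgoal on every tick. The sketch below illustrates only that scheduling idea; `SlowReasoner`, `FastController`, the waypoints, gains, and the 10:1 rate ratio are hypothetical and do not reflect GR00T’s actual models or frequencies.

```python
class SlowReasoner:
    """Hypothetical 'reasoning' system: updates the subgoal at a low rate."""

    def __init__(self, waypoints):
        self.waypoints = list(waypoints)
        self.index = 0

    def plan(self, position, tolerance=0.05):
        # Advance to the next waypoint once the current one has been reached.
        last = len(self.waypoints) - 1
        if self.index < last and abs(position - self.waypoints[self.index]) < tolerance:
            self.index += 1
        return self.waypoints[self.index]


class FastController:
    """Hypothetical 'motion' system: tracks the latest subgoal on every tick."""

    def __init__(self, gain=0.2, max_step=0.05):
        self.gain = gain
        self.max_step = max_step

    def step(self, position, subgoal):
        # Proportional control with a per-tick step (velocity) limit.
        delta = self.gain * (subgoal - position)
        delta = max(-self.max_step, min(self.max_step, delta))
        return position + delta


def run(waypoints, ticks=400, plan_every=10):
    reasoner, controller = SlowReasoner(waypoints), FastController()
    position, subgoal = 0.0, None
    for tick in range(ticks):
        if tick % plan_every == 0:  # slow loop: one planning call per `plan_every` ticks
            subgoal = reasoner.plan(position)
        position = controller.step(position, subgoal)  # fast loop: every tick
    return position, reasoner.index
```

The fast loop never waits for the slow one; it simply keeps tracking the most recent subgoal, which is what lets an expensive reasoning model coexist with smooth motion.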

Foundation models are transforming robots from “fixed-task executors” into “general-purpose intelligent agents with reasoning capabilities”—a qualitative leap in Physical AI.

Data Engines and Robotic Simulation: Accelerating Safe Learning

Intelligent robots require massive amounts of embodied data to enhance generalization, but real-world data collection is costly and risky. Consequently, enterprises are leveraging data flywheels and simulated environments to accelerate training.

Data Flywheel

Real-time operational trajectories generated by large-scale robot deployments can be fed back into model training, enabling the system to adapt faster to dynamic environments and improve robustness.
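A toy version of the flywheel: deploy the current policy, log every episode into a bounded buffer, and periodically refit the policy on the buffer. The one-parameter linear policy, the success tolerance, and the assumption that a corrected action can be recovered after each episode (for example from an operator intervention) are all hypothetical simplifications.

```python
import random
from collections import deque


class DataFlywheel:
    """Toy flywheel: deploy -> log -> retrain, with a policy action = w * observation."""

    def __init__(self, capacity=200):
        self.buffer = deque(maxlen=capacity)  # bounded log; oldest episodes are evicted first
        self.w = 0.0

    def deploy(self, env_gain, episodes, rng):
        """Run the current policy, log (observation, corrected action), return success rate."""
        successes = 0
        for _ in range(episodes):
            obs = rng.uniform(0.5, 2.0)
            # Assumption: the correct action becomes known after the episode.
            correct = env_gain * obs
            if abs(self.w * obs - correct) < 0.1:
                successes += 1
            self.buffer.append((obs, correct))
        return successes / episodes

    def retrain(self):
        """Least-squares refit of w on everything currently in the buffer."""
        num = sum(o * a for o, a in self.buffer)
        den = sum(o * o for o, _ in self.buffer)
        if den:
            self.w = num / den
```

When the environment drifts (here, `env_gain` changing), the bounded buffer gradually fills with fresh data and the next retrain follows the new conditions, which is the adaptation effect described above.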

High-fidelity Physical Simulation

NVIDIA’s World Simulator, combined with video foundation models, generates massive amounts of photorealistic interaction data in Omniverse, narrowing the sim-to-real gap and accelerating policy validation while controlling costs.
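The paragraph above does not specify how the sim-to-real gap is narrowed. One widely used, complementary technique is domain randomization: sampling physics and rendering parameters afresh for every simulated episode so that a policy cannot overfit to a single simulated world. The sketch shows only the sampling step; the parameter names and ranges are illustrative assumptions and no NVIDIA API is used.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SimParams:
    friction: float
    object_mass_kg: float
    light_intensity: float
    camera_jitter_deg: float


# Illustrative ranges only; real ranges would be derived from the target hardware.
RANGES = {
    "friction": (0.4, 1.2),
    "object_mass_kg": (0.1, 2.0),
    "light_intensity": (0.3, 1.5),
    "camera_jitter_deg": (-3.0, 3.0),
}


def sample_params(rng: random.Random) -> SimParams:
    # Draw each parameter independently and uniformly from its range.
    return SimParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})


def randomized_episodes(n: int, seed: int = 0) -> list[SimParams]:
    # One fresh parameter set per training episode; the seed makes runs reproducible.
    rng = random.Random(seed)
    return [sample_params(rng) for _ in range(n)]
```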

Safe Data Collection

Systems such as DeepMind’s AutoRT make real-world data collection safer by screening proposed tasks against explicit safety rules, allowing robots to gradually increase their autonomy in uncertain environments.
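The screening idea can be sketched as a filter that every proposed task must pass before a robot attempts it. AutoRT reportedly expresses such rules in natural language to a language model; the hand-written predicates below are a simplified, hypothetical substitute, and the `TaskProposal` fields and thresholds are illustrative.

```python
from dataclasses import dataclass


@dataclass
class TaskProposal:
    description: str
    involves_human: bool
    object_weight_kg: float
    sharp_object: bool


# Hypothetical rules: each returns a reason string when a task violates it, else None.
RULES = [
    lambda t: "interacts with a human" if t.involves_human else None,
    lambda t: "object heavier than 1 kg" if t.object_weight_kg > 1.0 else None,
    lambda t: "involves a sharp object" if t.sharp_object else None,
]


def review(tasks):
    """Split proposed tasks into approved ones and rejected (task, reasons) pairs."""
    approved, rejected = [], []
    for task in tasks:
        reasons = [msg for msg in (rule(task) for rule in RULES) if msg]
        if reasons:
            rejected.append((task, reasons))
        else:
            approved.append(task)
    return approved, rejected
```

Recording the reasons for each rejection, rather than a bare yes/no, makes the safety layer auditable and easier to tune as autonomy is expanded.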

Through the continuous cycle of “deployment—data collection—training—updates”, robots are evolving into sustainable, self-improving intelligent systems.

Language and Vision-driven Robotic Learning

Vision-Language-Action (VLA) models play a pivotal role in intelligent robots, enabling them to:

Understand complex instructions through natural language

Align visual scenes with language-based goals

Dynamically plan multi-step tasks

Self-reflect and rapidly adjust strategies

Take RFM-1 as an example: the model supports in-situ learning, allowing robots to improve within minutes by observing task outcomes. Meanwhile, GR00T N1 couples a reasoning system with a motion system trained on human demonstrations and synthetic data, producing more natural and stable movement in humanoid robots.
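The plan, execute, and adjust pattern behind these capabilities can be sketched as a loop over subtasks with retries. In a real VLA model the decomposition would be generated from images and text; here the `PLANS` table is hard-coded, and "reflection" is reduced to retrying with a different strategy index after an observed failure. All names are hypothetical.

```python
from typing import Callable

# Hypothetical decomposition a VLA model might produce for one instruction.
PLANS = {
    "put the apple in the bowl": ["locate apple", "grasp apple", "move to bowl", "release"],
}


def execute_with_reflection(instruction: str,
                            execute: Callable[[str, int], bool],
                            max_retries: int = 2) -> list[str]:
    """Run each subtask in order; on failure, retry with the next strategy index."""
    log = []
    for subtask in PLANS[instruction]:
        for attempt in range(max_retries + 1):
            ok = execute(subtask, attempt)  # the attempt number selects an alternative strategy
            log.append(f"{subtask} (attempt {attempt}): {'ok' if ok else 'failed'}")
            if ok:
                break
        else:
            # Every strategy failed: stop instead of continuing from an unknown state.
            log.append(f"aborting: could not complete '{subtask}'")
            return log
    return log
```

For instance, with an executor whose first grasp slips, the log shows a failed grasp followed by a successful retry, after which the remaining subtasks proceed normally.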

Evolution of Edge Computing and Perception Systems

Intelligent robots must respond to complex scenarios in milliseconds, placing higher demands on edge computing performance and sensor capabilities.

Notable advancements include:

NVIDIA Jetson Thor Platform: Provides the highly parallel computing needed for real-time reasoning, full-body control, and dexterous manipulation.

Isaac Toolchain: Simplifies the development workflow for taking robots from simulation to real-world deployment.

Enhanced Tactile and Visual Systems: Platforms such as Sanctuary AI’s Phoenix allow robots to maintain stable physical interaction during delicate operations.

These capabilities collectively enhance robots’ predictability and operational performance in complex physical spaces.
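The millisecond response requirement is usually enforced with a fixed control period: if the model’s output arrives too late, it is discarded rather than applied. The sketch below simulates that policy deterministically from a list of measured inference latencies. The 10 ms budget, the hold-then-stop fallback, and the function name are illustrative assumptions, not behavior of Jetson or Isaac software.

```python
def schedule(latencies_ms, commands, budget_ms=10.0, max_stale_ticks=2):
    """Decide which command is sent on each control tick.

    latencies_ms[i] is how long the model took to produce commands[i] on tick i.
    A late result is discarded; the last on-time command is held, and after
    `max_stale_ticks` consecutive misses the robot is commanded to stop (0.0).
    """
    sent, last_good, misses = [], 0.0, 0
    for latency, cmd in zip(latencies_ms, commands):
        if latency <= budget_ms:
            last_good, misses = cmd, 0
            sent.append(cmd)
        else:
            misses += 1
            sent.append(last_good if misses <= max_stale_ticks else 0.0)
    return sent
```

For example, `schedule([5, 12, 15, 20, 4], [0.1, 0.2, 0.3, 0.4, 0.5])` returns `[0.1, 0.1, 0.1, 0.0, 0.5]`: two late results are bridged by holding the last good command, the third forces a stop, and control resumes as soon as inference meets the budget again.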

Practical Deployment and Initial Outcomes of Intelligent Robots

As hardware, models, and data systems mature, intelligent robots are beginning to demonstrate value in real-world production scenarios.

Logistics Sector: Amazon operates a fleet of more than one million robots and uses generative AI models to improve fleet efficiency.

Humanoid Robot Applications: Agility Robotics’ Digit has entered logistics sorting and has secured a commercial partnership with GXO.

Industrial-grade Humanoid Platforms: Boston Dynamics’ all-electric Atlas offers higher payload capacity and a larger motion range, suitable for industrial and manufacturing scenarios.

These cases indicate that intelligent robots are transitioning from laboratory prototypes to scalable applications.

Evolution of Human-Robot Collaboration: From Command Execution to Shared Autonomy

Future human-robot relationships will be built on natural language and multi-modal interaction, making collaboration more transparent and efficient.

Key trends include:

Natural Language Interaction as the Primary Interface

Operators can directly set constraints, goals, or adjust strategies through natural language, eliminating the need for specialized programming.

Shared Autonomy Mechanisms

Systems can consult a human in real time when they encounter uncertain situations, instead of carrying out a possibly erroneous action.
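A common way to implement this is confidence gating: the robot acts on its own when its self-estimated confidence is high, and otherwise describes its intended action and waits for the operator. In the minimal sketch below, the `Decision` class, the 0.8 threshold, and the convention that any reply other than "yes" is treated as a replacement action are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    action: str
    confidence: float  # model's self-estimated probability that the action is correct


def shared_autonomy_step(decision: Decision,
                         ask_human: Callable[[str], str],
                         threshold: float = 0.8) -> tuple[str, bool]:
    """Execute confident decisions directly; otherwise defer to a human.

    Returns the action to execute and whether a human was consulted.
    """
    if decision.confidence >= threshold:
        return decision.action, False
    # Below threshold: explain the proposed action and let the operator confirm or override.
    question = f"I plan to '{decision.action}' (confidence {decision.confidence:.2f}). Proceed?"
    reply = ask_human(question)
    return (decision.action if reply == "yes" else reply), True
```

Including the proposed action and its confidence in the question keeps the exchange transparent, so the operator is reviewing a concrete plan rather than answering an open-ended prompt.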

Transparent Collaboration via Vision-Language Alignment

Building trust through visual annotations, motion explanations, and multi-modal feedback to enhance collaboration quality in open environments.

Technical Challenges and Risk Control

Despite rapid progress, intelligent robots still face several key challenges:

Latency and Insufficient Computing Power: Limiting the real-time reasoning capabilities of on-board models.

Inadequate Task Grounding: Models struggle to fully comprehend the dynamic changes of complex physical environments.

Failure Modes Caused by Distribution Shift: Training data cannot cover all possible scenarios.

Inadequate Safety and Standardization: Requiring more rigorous fault protection and uncertainty assessment systems.
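Distribution shift cannot be eliminated, but it can be monitored at runtime so the robot knows when to slow down or ask for help. One simple, generic approach (not one the article names) is to fit per-feature statistics on training observations and flag inputs that lie far from them. The sketch assumes independent Gaussian features; the class name and the threshold of 3.0 are illustrative.

```python
import math
from statistics import fmean, pstdev


class OODDetector:
    """Flags observations far from the training data (diagonal-Gaussian assumption)."""

    def __init__(self, training_features, threshold=3.0):
        columns = list(zip(*training_features))
        self.means = [fmean(c) for c in columns]
        # A small floor avoids division by zero for constant features.
        self.stds = [max(pstdev(c), 1e-6) for c in columns]
        self.threshold = threshold

    def score(self, features):
        # Root-mean-square z-score: Mahalanobis distance with a diagonal
        # covariance, divided by sqrt(number of features).
        z2 = [((x - m) / s) ** 2 for x, m, s in zip(features, self.means, self.stds)]
        return math.sqrt(sum(z2) / len(z2))

    def is_out_of_distribution(self, features):
        return self.score(features) > self.threshold
```

A flagged observation does not say what went wrong, only that the model is operating outside the data it was trained on, which is exactly when a fault-protection layer should take over.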

Future development will rely on reliable validation, multi-layered safety architectures, and standardized norms to ensure the stability and interpretability of robots in real-world environments.
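A multi-layered safety architecture means that several independent checks sit between the policy and the actuators, and each check can only make a command more conservative. The sketch below chains four such layers on a one-axis command. The `Command` class, the limits, and the choice to halve speed near a person are illustrative assumptions, not values taken from any safety standard.

```python
from dataclasses import dataclass


@dataclass
class Command:
    velocity: float  # m/s, signed
    target_x: float  # m, commanded end-effector position along one axis


def layered_safety_filter(cmd, *, estop=False, human_distance_m=5.0,
                          max_speed=1.0, slow_zone_m=1.0, workspace=(-0.8, 0.8)):
    """Pass a command through independent safety layers.

    Each layer can only make the command more conservative. Returns the filtered
    command and the names of the layers that intervened.
    """
    # Layer 1: an emergency stop overrides everything else.
    if estop:
        return Command(0.0, cmd.target_x), ["estop"]
    triggered = []
    velocity = cmd.velocity
    # Layer 2: hard speed limit.
    if abs(velocity) > max_speed:
        velocity = max(-max_speed, min(max_speed, velocity))
        triggered.append("speed limit")
    # Layer 3: slow down when a person is inside the protective zone.
    if human_distance_m < slow_zone_m:
        velocity *= 0.5
        triggered.append("human proximity")
    # Layer 4: keep the target inside the permitted workspace.
    lo, hi = workspace
    target = min(max(cmd.target_x, lo), hi)
    if target != cmd.target_x:
        triggered.append("workspace")
    return Command(velocity, target), triggered
```

Returning the list of layers that intervened also supports interpretability: every deviation from the policy’s original command can be traced to a specific rule.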

Conclusion: Moving Toward an Era of Scalable Physical Artificial Intelligence

With continuous advancements in multi-modal foundation models, simulation systems, data flywheels, and humanoid hardware, Physical AI is forming a complete technology stack. Future trends will include:

General-purpose skill models becoming standard components of robotic capabilities.

Simulation technology reducing trial-and-error costs and shortening deployment cycles.

Humanoid robots continuously maturing in perception, dexterity, and mobility.

Large-scale deployments feeding into data engines, forming a continuously evolving intelligent ecosystem.

Intelligent robots will become a core production factor across multiple industries, working alongside humans to build a new physical intelligence ecosystem in an increasingly open and complex world.