Artificial intelligence (AI) is spreading across industries at an unprecedented pace. From finance and healthcare to manufacturing, AI has enabled new services and spawned innovative business models. This rapid development is changing how people live and work, and it also poses new challenges for the infrastructure that underpins AI. As AI applications expand, data centers must cope with sharply higher computing demands, mounting pressure on energy consumption, and growing operational complexity.

Traditional data centers are typically optimized for enterprise applications, databases, and virtualized workloads, all of which have relatively stable computing and energy consumption patterns. AI workloads, by contrast, are highly dynamic and unpredictable. Training jobs can surge from idle to peak computing capacity almost instantly, while inference services may run under continuous high load. To meet these demands, data centers must make significant changes to their hardware, power supply, cooling, and management systems.

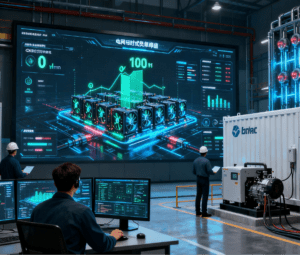

Rapid Growth in Rack Density

AI hardware, particularly GPU clusters, has far higher power and heat dissipation requirements than traditional servers. In the past, a single rack in an enterprise data center typically drew 10 to 15 kilowatts (kW). In AI deployments today, a single rack can draw 40 kW or more, and some experimental training environments exceed 100 kW. This places stricter demands on a data center's power systems. Those systems include uninterruptible power supplies (UPS), power distribution units (PDUs), and other distribution equipment such as contactors, the switching devices that control the flow of electricity to high-power loads.

Contactor assembly machines, the specialized equipment used to manufacture high-reliability contactors for data center power systems, have also evolved to meet these demands. Modern models integrate AI-driven quality inspection (for example, computer vision that detects contact alignment defects) and automated torque control. These features help ensure that the finished contactors can safely handle the frequent current fluctuations caused by high-density AI racks.

Older data centers often cannot support such high-density racks without major upgrades. Organizations scaling up AI applications must therefore plan rack space, redundancy, and zoning carefully to avoid power and heat bottlenecks.
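To make the density shift concrete, the sketch below counts how many racks a fixed IT power budget can support at the densities mentioned above. It is only back-of-the-envelope arithmetic. The 1 MW budget, the 20% headroom reserve, and the function name `racks_supported` are illustrative assumptions, not figures or tools from the article.

```python
def racks_supported(it_budget_kw: float, rack_kw: float, headroom: float = 0.2) -> int:
    """Return how many racks of a given density fit within an IT power budget.

    `headroom` is a hypothetical fraction of the budget held back for
    load peaks and redundancy; it is not a figure from the article.
    """
    if rack_kw <= 0:
        raise ValueError("rack_kw must be positive")
    if not 0.0 <= headroom < 1.0:
        raise ValueError("headroom must be in [0, 1)")
    usable_kw = it_budget_kw * (1.0 - headroom)
    # Whole racks only: floor-divide the usable power by the per-rack draw.
    return int(usable_kw // rack_kw)


if __name__ == "__main__":
    budget_kw = 1000.0  # assumed: 1 MW of IT power available to one data hall
    densities = {
        "enterprise (12.5 kW)": 12.5,  # midpoint of the 10-15 kW range
        "AI (40 kW)": 40.0,
        "experimental (100 kW)": 100.0,
    }
    for label, rack_kw in densities.items():
        print(f"{label}: {racks_supported(budget_kw, rack_kw)} racks")
```

Under these assumptions, the same 1 MW hall goes from 64 enterprise racks to 20 AI racks and 8 experimental racks. In high-density deployments, the power budget tends to limit the rack count well before floor space does.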

Limits and Transformation of Cooling Systems

Traditional air cooling shows clear limitations under high-density AI workloads. Even with hot-aisle containment or optimized airflow management, removing heat quickly enough remains difficult. Liquid cooling is gradually replacing air cooling, especially in high-performance cloud environments and high-density AI data centers. Direct-to-chip liquid cooling can support loads exceeding 50 kW per rack, and immersion cooling can handle densities above 150 kW in certain experimental settings.
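One way to see why air cooling runs out of headroom is the steady-state energy balance Q = ρ · V̇ · c_p · ΔT. This relation gives the volume flow V̇ a coolant needs in order to carry away a heat load Q at a given temperature rise ΔT. The sketch below applies it to a 50 kW rack, the direct-to-chip figure from the text. It uses textbook property values for water and air, and it assumes a 10 K temperature rise across the rack. Treat it as a rough first-order estimate only; real designs also depend on coolant mixture, inlet temperature, and pressure drop.

```python
# Approximate fluid properties near room temperature (textbook values).
WATER_CP = 4186.0   # specific heat of water, J/(kg*K)
WATER_RHO = 1000.0  # density of water, kg/m^3
AIR_CP = 1005.0     # specific heat of air at constant pressure, J/(kg*K)
AIR_RHO = 1.2       # density of air at about 20 degrees C, sea level, kg/m^3


def volumetric_flow(heat_w: float, rho: float, cp: float, delta_t_k: float) -> float:
    """Flow (m^3/s) needed to carry away heat_w with a temperature rise of delta_t_k.

    Rearranges the steady-state energy balance Q = rho * V_dot * cp * dT.
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (rho * cp * delta_t_k)


if __name__ == "__main__":
    rack_heat_w = 50_000.0  # a 50 kW rack, the direct-to-chip figure from the text
    delta_t = 10.0          # assumed temperature rise across the rack, K
    water = volumetric_flow(rack_heat_w, WATER_RHO, WATER_CP, delta_t)
    air = volumetric_flow(rack_heat_w, AIR_RHO, AIR_CP, delta_t)
    print(f"water: {water * 1000:.2f} L/s")
    print(f"air:   {air:.2f} m^3/s")
    print(f"air needs about {air / water:.0f}x the volume flow of water")
```

With these assumptions, water needs roughly 1.2 L/s, while air needs more than 4 m³/s. That is a difference of over three orders of magnitude in volume flow, and it is the physical reason liquid cooling scales to densities that air struggles with.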

The deployment of liquid cooling systems involves not only the design of piping and pump systems but also significant adjustments to maintenance processes, leak prevention measures, and safety protocols. Despite the complexity of implementation, as traditional cooling methods reach their scalability limits, liquid cooling is becoming an inevitable choice for supporting high-density AI computing.

Dynamic Workloads and Infrastructure Response

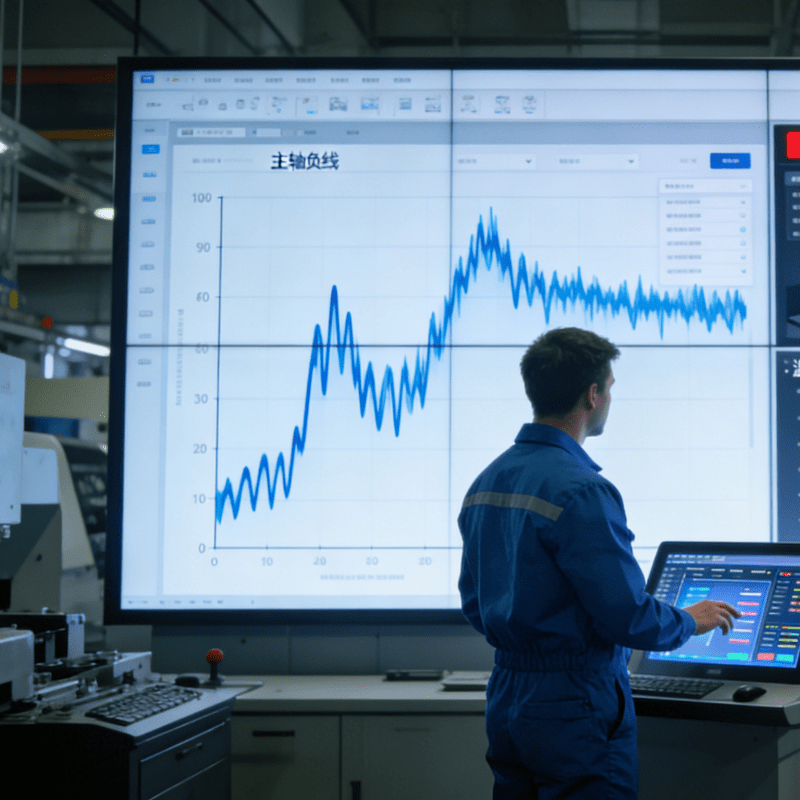

AI workloads are highly volatile. Training jobs may ramp from zero to peak load in seconds, while inference services place continuous pressure on power and cooling systems. These fluctuations impose three requirements:

- Power systems must respond rapidly. They rely on high-quality contactors (produced by advanced contactor assembly machines) that switch power circuits reliably while keeping arcing under control.
- Cooling systems must adjust in real time to avoid both overcooling and lagging behind the load.
- Monitoring sensors and control systems must act on real-time data rather than on average loads.

As a result, software-based power management, predictive analytics, and environmental telemetry technologies are no longer optional features but core requirements for ensuring infrastructure resilience and operational efficiency.
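The point about real-time data versus averages can be illustrated with a small telemetry check. The sketch compares the average of a short window of power readings with its individual peaks. The readings, the 40 kW limit, and the names `summarize_load` and `LoadSummary` are hypothetical. The sketch is not meant to represent any particular monitoring product.

```python
from statistics import mean
from typing import List, NamedTuple, Sequence


class LoadSummary(NamedTuple):
    average_kw: float
    peak_kw: float
    breaches: List[int]  # sample indices where the limit was exceeded


def summarize_load(samples_kw: Sequence[float], limit_kw: float) -> LoadSummary:
    """Compare the average of a telemetry window with its per-sample peaks."""
    if not samples_kw:
        raise ValueError("need at least one sample")
    return LoadSummary(
        average_kw=mean(samples_kw),
        peak_kw=max(samples_kw),
        breaches=[i for i, kw in enumerate(samples_kw) if kw > limit_kw],
    )


if __name__ == "__main__":
    # Hypothetical one-second readings from a rack while a training job starts and stops.
    readings = [5, 6, 5, 38, 45, 44, 8, 6]
    summary = summarize_load(readings, limit_kw=40)
    print(summary)
```

In this example, the window averages under 20 kW, comfortably below the assumed 40 kW limit, yet two individual samples exceed it. A controller that watched only the average would never react to them.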

Complexity of System Commissioning and Validation

Designing infrastructure for AI is only the first step; ensuring that it runs stably under real high-stress conditions is far more complex. Commissioning teams must simulate scenarios that did not previously arise. Examples include sudden surges in computing load, equipment failures under high-temperature stress, and air and liquid cooling systems operating concurrently.

During the design phase, digital twin models are used to test airflow and thermal behavior and to predict potential issues before construction. On-site commissioning also requires closer collaboration across power, mechanical, and IT teams to carry out functional testing and stress validation. One example is verifying that contactors (manufactured on contactor assembly machines) maintain reliable performance during prolonged peak-power operation.

Power Constraints and Construction Challenges

In some regions, such as Europe, difficulties in grid connection have become a significant barrier to data center expansion. Limited power capacity and long approval cycles lead to delays in new construction and expansion projects. Some operators address this issue through on-site energy generation, energy storage systems, and modular phased construction, while also prioritizing locations with abundant power resources.

Power constraints also directly affect cooling. Liquid cooling systems need a continuous, stable power supply. If the pumps stop, heat in high-density racks can build up within seconds, compromising equipment safety and operational efficiency.
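A lumped-capacity estimate, t = m · c_p · ΔT / P, gives a feel for how quickly this happens. It computes how long a given mass of coolant can absorb a heat load P before its temperature rises by ΔT, assuming no heat is rejected at all. The scenario below is hypothetical: servers keep running on UPS while the coolant pumps lose power. The figures are also assumptions, namely a 100 kW rack, 20 kg of water in the rack loop, and a 15 K allowable rise. The estimate is optimistic, because stagnant coolant near the chips heats faster than the loop average.

```python
def seconds_until_limit(heat_w: float, coolant_mass_kg: float,
                        cp_j_per_kg_k: float, allowed_rise_k: float) -> float:
    """Lumped-capacity estimate of how long coolant can absorb heat with no heat rejection.

    Uses t = m * cp * dT / P and assumes the whole coolant mass heats uniformly.
    Local hot spots near the chips heat faster, so treat the result as optimistic.
    """
    if heat_w <= 0:
        raise ValueError("heat_w must be positive")
    return coolant_mass_kg * cp_j_per_kg_k * allowed_rise_k / heat_w


if __name__ == "__main__":
    # Hypothetical case: servers stay up on UPS while the rack's coolant pumps lose power.
    t = seconds_until_limit(heat_w=100_000.0, coolant_mass_kg=20.0,
                            cp_j_per_kg_k=4186.0, allowed_rise_k=15.0)
    print(f"about {t:.1f} s before the coolant rises 15 K")
```

Even under these generous assumptions, the margin is only about 12.6 seconds. This helps explain why pumps and controls in liquid-cooled halls are often given the same power protection as the IT load.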

The Importance of Waste Heat Recovery

AI workloads generate far more heat than traditional workloads. Heat recovery was historically not widely adopted because of its complexity and cost. However, liquid cooling captures heat in concentrated form and at higher temperatures, which makes recovery and reuse more practical. Some new facilities are designed with heat export interfaces, and certain projects are experimenting with feeding waste heat into district heating networks. As environmental standards and energy-efficiency requirements tighten, heat reuse has become a key consideration in data center design. It may also offer an advantage during project approval.
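To gauge the scale of what could be exported, note that nearly all electrical power drawn by IT equipment ends up as heat. Annual recoverable heat can therefore be sketched as average IT load × hours per year × capture fraction. The 1 MW load and the 70% capture fraction below are hypothetical. The function name `annual_recoverable_heat_mwh` is likewise illustrative and not taken from the article.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours


def annual_recoverable_heat_mwh(avg_it_load_kw: float, capture_fraction: float,
                                hours: float = HOURS_PER_YEAR) -> float:
    """Estimate heat (MWh) a liquid-cooled facility could export over a year.

    Nearly all electrical power drawn by IT equipment ends up as heat, so the
    IT energy is used as the heat budget; capture_fraction is the assumed
    share that the liquid loop collects at a usable temperature.
    """
    if not 0.0 <= capture_fraction <= 1.0:
        raise ValueError("capture_fraction must be between 0 and 1")
    # kW * h = kWh; divide by 1000 to convert to MWh.
    return avg_it_load_kw * hours * capture_fraction / 1000.0


if __name__ == "__main__":
    # Hypothetical 1 MW average IT load with 70% of the heat captured by liquid cooling.
    print(f"{annual_recoverable_heat_mwh(1000.0, 0.7):.0f} MWh per year")
```

Under these assumptions, a 1 MW facility would yield roughly 6,100 MWh of heat per year. Whether a district heating network can use that heat directly depends on the supply temperature the network requires.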

Future-Oriented Data Center Infrastructure

AI development has raised new expectations for data center infrastructure: systems must be responsive, scalable, and highly adaptable. Standardization remains important, but flexibility and adjustability matter even more, especially as AI workloads extend from centralized data centers to edge computing environments. This adaptability also extends to the supply chain for supporting components. Contactor assembly machines, for example, will need to produce contactors suited both to current high-density racks and to future, even more power-intensive AI hardware.
