From Satellite Systems to AI: Practical Experience Lays the Foundation for Safety Research

Emmi: Reflect on your background and your work with Earth-observing satellite systems. Do you feel that this work prepared you to work in artificial intelligence?

Duncan: It gave me a very good grounding in practical, applied, and physical problems, which I think AI benefits from.

Lots of people are making what I would call useless things in AI. Not to be blunt about it, but we don’t need more chatbots. We need things that solve real, meaningful, and incredibly hard problems, and working on satellites very much showed me that this is possible. There is so much you can do, so much impact you can have in the physical world, by solving hard problems.

My career trajectory is a little bit interesting. I was at Stanford, dropped out, and needed to pay rent in Palo Alto. I got a job at a startup… that ended up leading to working on automating satellite operations, and now to working on general (AI) decision-making systems.

Space is an interesting field in general. In many ways, it can be incredibly outdated in terms of best practices because it’s so conservative about risk in a lot of places. Change is risk. You don’t know if the new thing is going to work, so why change anything?

When I was at Capella [Space] working on satellite task planning, I still wanted to finish my Ph.D. I convinced them: ‘Hey, we’re trying to automate satellite operations. Wouldn’t it be useful to understand how this problem works rigorously? Let me go back and finish my Ph.D. while working.’ I did that, and a lot of my paper publications from that period are on task planning.

Return to Stanford: The Opportunity to Engage in AI Safety Research

Emmi: Now at Stanford University, you are a research fellow in the Department of Aeronautics and Astronautics and served as the Executive Director of the Stanford Center for AI Safety. What led you to apply to Stanford and to be involved in the Center for AI Safety?

Duncan: As of [April 2025], I’m no longer the executive director. I’m just a research fellow in the summer, and I brought in enough funding to cover my research full time.

After the startup, I worked for Project Kuiper. It was a really interesting time, but it ended up being more management than I cared for. I moved over to AWS briefly to work on a new service team, but then generative AI came along. Essentially, the company’s focus shifted to generative AI, and unless you were working on that, there wasn’t as much interest in your product or service.

So, I quit. I had no idea what I wanted to do next.

I called up my thesis advisor, Mykel Kochenderfer. I figured he’s a smart, interesting person and a professor at Stanford, so he might know what I could do next. He pretty much said, “I can’t help you figure out what to do in life, but I can give you a job [doing research here] until you figure it out.” I jumped at the opportunity.

So I came back to Stanford and joined some ongoing research projects. I found some things I liked and some things I was a little less interested in, and the AI safety work kept being very interesting. In the executive director role, I essentially got to represent the research of different professors and students at the university to companies that were interested in it.

I was like, ‘This is awesome. I actually want to be doing this research, not just representing it.’ So I wrote a bunch of grants and got funding to build new, more effective ways to find failures in AI systems. That’s the research I work on now.

Core Practice: Application and Cross-Domain Extension of Adaptive Stress Testing

Emmi: Are there any experiences or projects you’ve been part of that have had an impact on you? Perhaps in your role as executive director?

Duncan: The work I did through the center that I found really interesting was a project on adaptive stress testing for autonomous vehicles. It was started by NASA research scientist Ritchie Lee, then a Ph.D. student in the lab. It was followed by two graduate students in the lab, Anthony Corso and Harrison Delecki, who further developed the technique. I’m continuing the work Harrison did, which was applying it to finding failure modes in autonomous vehicles.

This technique, adaptive stress testing, is a really interesting approach to finding failures in complex systems. When you have a safety-critical system, the system is already pretty robust for the most part. There’s a lot of engineering work on building safe systems; aviation is one great example of iterative engineering refinement to learn how to build safe, reliable systems.

That being said, you still want to find failures. But because these systems are safe, that’s hard to do. You spend a lot of effort trying to poke and prod them in different ways, especially when they are complex systems. What inputs could you feed a self-driving car? There’s an [enormous] number of possible inputs. So how do you find how they fail? Adaptive stress testing automates that process by using reinforcement learning to train a machine learning model to automatically find specific sets of inputs that cause failures.
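To make the idea concrete, here is a minimal Python sketch of the adaptive-stress-testing objective on a toy problem. Everything in it is a hypothetical stand-in: the simulator (a car braking for a stopped obstacle, disturbed by Gaussian sensor noise), the parameter values, and the reward weights `alpha` and `beta`. The reward follows the shape commonly used in the AST literature: add up the log-likelihood of each disturbance, and if an episode ends without failing, subtract a large penalty scaled by how far it stayed from failure, so that maximizing the reward favors the most likely failure. For brevity, plain Monte Carlo sampling stands in for the reinforcement-learning solver; published AST work uses Monte Carlo tree search or deep RL for that step.

```python
from __future__ import annotations

import math
import random
from dataclasses import dataclass

# Hypothetical toy system under test: a car approaching a stopped obstacle.
# The controller brakes only while its noisy distance sensor reads below BRAKE_AT.
DT = 0.1          # time step [s]
HORIZON = 50      # maximum simulation steps per episode
NOISE_STD = 0.5   # std. dev. of the sensor-noise disturbance [m] (assumed)
BRAKE_AT = 20.0   # braking threshold on the measured distance [m]
DECEL = 6.0       # braking deceleration [m/s^2]


@dataclass
class State:
    distance: float = 40.0  # true distance to the obstacle [m]
    speed: float = 15.0     # vehicle speed [m/s]


def step(state: State, noise: float) -> State:
    """Advance the simulator one step under a given sensor-noise disturbance."""
    measured = state.distance + noise
    decel = DECEL if measured < BRAKE_AT else 0.0
    speed = max(0.0, state.speed - decel * DT)
    return State(distance=state.distance - speed * DT, speed=speed)


def gaussian_logpdf(x: float, std: float) -> float:
    """Log-density of a zero-mean Gaussian disturbance."""
    return -0.5 * (x / std) ** 2 - math.log(std * math.sqrt(2.0 * math.pi))


def ast_reward(noises: list[float], alpha: float = 1e4, beta: float = 1e3) -> tuple[float, bool]:
    """AST-style return for one disturbance sequence.

    Each step adds the log-likelihood of its disturbance. If the episode ends
    without a collision, subtract alpha plus beta times the remaining distance;
    the penalty is large enough that sampled failures outscore non-failures,
    and among failures the likelier ones score higher.
    """
    state, total = State(), 0.0
    for noise in noises:
        total += gaussian_logpdf(noise, NOISE_STD)
        state = step(state, noise)
        if state.distance <= 0.0:   # failure event: collision
            return total, True
        if state.speed <= 0.0:      # stopped short of the obstacle
            break
    return total - alpha - beta * state.distance, False


def search(n_samples: int = 2000, seed: int = 0) -> tuple[float, bool, list[float]]:
    """Plain Monte Carlo stand-in for AST's RL solver: sample disturbance
    sequences and keep the one with the highest AST reward."""
    rng = random.Random(seed)
    best: tuple[float, bool, list[float]] | None = None
    for _ in range(n_samples):
        noises = [rng.gauss(0.0, NOISE_STD) for _ in range(HORIZON)]
        reward, failed = ast_reward(noises)
        if best is None or reward > best[0]:
            best = (reward, failed, noises)
    assert best is not None
    return best
```

Calling `search()` returns the highest-reward `(reward, failed, noises)` triple. When `failed` is `True`, `noises` is a concrete sensor-noise sequence that makes the toy car collide (here, typically a reading just high enough to delay braking by one step). Replacing the random sampler with a learned policy is what is meant to let this kind of search scale to inputs as large as a self-driving car’s.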

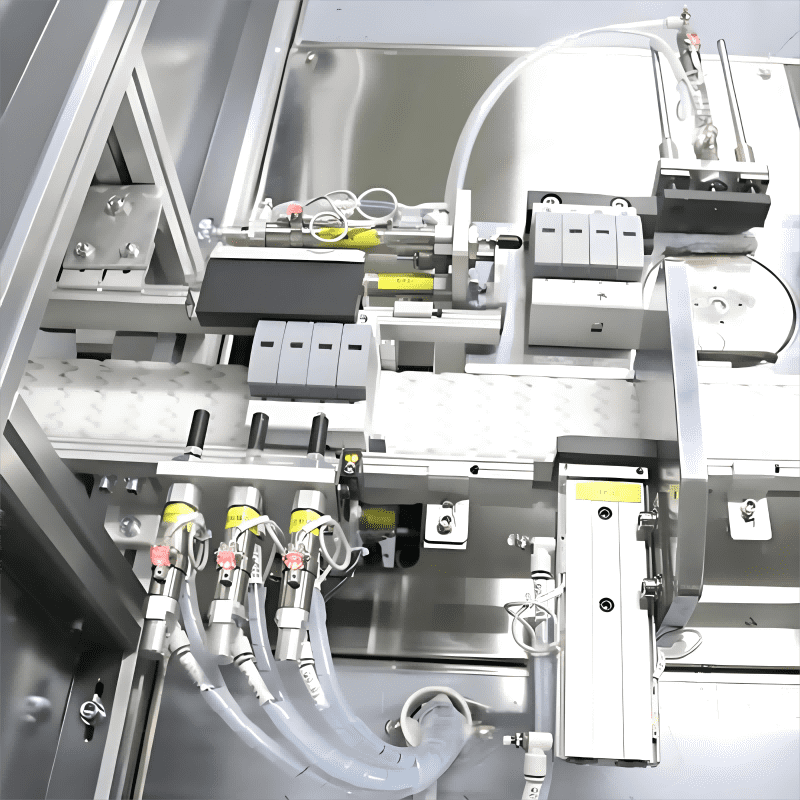

We’re also exploring extending this logic to medical device manufacturing, a space where safety is just as critical as in autonomous driving, for example nebulizer assembly machines. These machines rely on AI to control precision tasks: computer vision verifies the alignment of nebulizer cups and air tubes, while machine learning adjusts assembly torque to prevent leaks (a single leak could render the device ineffective for patients with asthma or COPD). The challenge is that traditional testing rarely simulates edge cases, such as minor variations in component size or temporary sensor glitches. Using adaptive stress testing, we’re training models to mimic these rare scenarios, uncovering AI blind spots that could lead to faulty assemblies. It’s the same core idea as with self-driving cars, but applied to a tool that directly affects patient health: exactly the kind of “real, meaningful problem” AI should solve.
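The same most-likely-failure objective can be sketched for the assembly example. This Python toy is entirely hypothetical: the torque model, the ±3% seal window, and the tolerance and glitch probabilities are invented for illustration and do not describe any real machine. It models the two edge cases named above as disturbances: a Gaussian deviation in component size, and a rare sensor glitch that reports a stale nominal reading. Because this disturbance space has only two dimensions, an exhaustive grid search stands in for AST’s learned search.

```python
from __future__ import annotations

import math
from itertools import product

# Hypothetical single-station model (all numbers invented for illustration):
# a learned controller sets cap torque from a measured part diameter, and the
# seal leaks if that torque is off by more than 3% for the actual part.
NOMINAL_DIAMETER = 22.0  # mm
TOLERANCE_STD = 0.3      # mm, part-to-part size variation (assumed Gaussian)
GLITCH_PROB = 1e-3       # chance the sensor returns a stale nominal reading (assumed)


def controller_torque(measured_mm: float) -> float:
    """Hypothetical controller: torque in N*m proportional to measured diameter."""
    return 0.10 * measured_mm


def seal_ok(actual_mm: float, torque: float) -> bool:
    """The seal holds if torque is within +/-3% of what the actual part needs."""
    needed = 0.10 * actual_mm
    return abs(torque - needed) <= 0.03 * needed


def simulate(dev_mm: float, glitch: bool) -> bool:
    """Return True if the unit leaks under this (size deviation, glitch) disturbance."""
    actual = NOMINAL_DIAMETER + dev_mm
    measured = NOMINAL_DIAMETER if glitch else actual  # a glitch reports a stale nominal value
    return not seal_ok(actual, controller_torque(measured))


def log_likelihood(dev_mm: float, glitch: bool) -> float:
    """Log-probability of a disturbance: Gaussian size deviation plus a rare glitch."""
    size_term = -0.5 * (dev_mm / TOLERANCE_STD) ** 2 - math.log(
        TOLERANCE_STD * math.sqrt(2.0 * math.pi))
    glitch_term = math.log(GLITCH_PROB if glitch else 1.0 - GLITCH_PROB)
    return size_term + glitch_term


def most_likely_failure(step_mm: float = 0.01, max_dev_mm: float = 1.5):
    """Exhaustively grid-search the disturbance space for the likeliest leak.

    Returns (log_likelihood, deviation_mm, glitch), or None if nothing leaks.
    """
    n = round(max_dev_mm / step_mm)
    best = None
    for i, glitch in product(range(-n, n + 1), (False, True)):
        dev = i * step_mm
        if simulate(dev, glitch):
            ll = log_likelihood(dev, glitch)
            if best is None or ll > best[0]:
                best = (ll, dev, glitch)
    return best
```

`most_likely_failure()` returns the most probable leak on the grid. In this toy, leaks occur only when a glitch coincides with a part at least about 0.65 mm undersized or 0.69 mm oversized: a compound edge case that testing under nominal conditions would rarely hit.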

Practical Reflections on AI Safety and Future Plans

Emmi: How have you seen AI safety standards adapt to assess evolving AI concepts and models?

Duncan: I would say I have not seen much adaptation, because there isn’t much regulation right now. The field of regulation is generally pretty fraught. You don’t want to overregulate when you don’t know what you’re regulating, because that stifles innovation, creativity, and possibility, and puts up unnecessary roadblocks. Frankly, it also favors only the large tech companies that have the capital to deal with regulation, so it forces out small players, which have shown a lot of innovation. We’ve seen things like the EU AI Act, which can be heavy-handed in some ways. One thing I do think it gets right is taking an application- or domain-specific approach to regulation, where they say, “These things are high risk; don’t do them.” If you want ChatGPT to write your emails, go [ahead]. The risk is personal embarrassment.

Emmi: You have also talked about building public confidence and emphasized that “safety is constant.” In interviews I’ve watched, associating AI with dystopian fiction and hesitating to use AI are two common reactions. As a researcher specializing in AI safety, what is your response to these kinds of reactions?

Duncan: I think there are much more pressing things to worry about in terms of how people use these systems and how they interact with them. I can feel this myself. I used to write a lot more code, but now it’s really easy to ask [AI] to write a bunch of code that would take me a week to write. But then, am I losing the muscle for really digging into it? That’s something that worries me more.

I worry about much more practical things, like how people will use tools as they exist today and what they do with them. Yes, it is helpful to have someone worry about Skynet [from “The Terminator” film]. But do I think artificial general intelligence (AGI) is going to take over the world? That worry is very far down my list.

Emmi: Looking toward the future, do you have any advice for people who want to work in artificial intelligence?

Duncan: It’s very easy to be intimidated by how much noise and hype there is in the field. It’s much more important to just start building and doing something. Solve an immediate problem in your day that’s in front of you or that you find interesting, and it doesn’t matter if someone has solved it in a better or more interesting way. Even if there’s a better solution out there, it’s much more important to just start doing it. And very rapidly you will find that you are actually the expert.

Emmi: Thank you so much for speaking with me today! Are there any current or upcoming projects you would like to share?

Duncan: The main project we’re working on that I think is really interesting is funded by a grant from the Schmidt Foundation for automated and unsupervised testing of large language models and general AI. So, how do you find failures in these systems that are likely to occur from average user input or interaction?

The entire grant is focused on building tools for researchers, model developers, model users, and companies (really anyone who works with these systems) to help find failures in them. We are working on that for the next two years, so we’ll be very excited to share what we start building.

It’s going to be a bunch of open-source tooling that’s free and accessible to anyone who wants to use it to discover failures in these systems. We would love to have user adoption and people collaborating on it.
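A minimal sketch of the kind of loop such tooling might automate, assuming that “average user input” can be approximated by small, typo-like edits to realistic seed prompts. The `toy_model` stub, the perturbation set, and the failure check are hypothetical stand-ins chosen so the example runs offline; they are not the grant’s tooling or any real model API.

```python
from __future__ import annotations

import random
from typing import Callable


def perturb(prompt: str, rng: random.Random) -> str:
    """Apply one small, user-like edit: delete a character, swap two
    neighbouring characters, or lowercase the whole prompt."""
    kind = rng.choice(["delete", "swap", "lower"])
    if kind == "lower" or len(prompt) < 2:
        return prompt.lower()
    i = rng.randrange(len(prompt) - 1)
    if kind == "delete":
        return prompt[:i] + prompt[i + 1:]
    return prompt[:i] + prompt[i + 1] + prompt[i] + prompt[i + 2:]


def find_failures(model: Callable[[str], str],
                  is_failure: Callable[[str, str], bool],
                  seed_prompts: list[str],
                  n_trials: int = 200,
                  seed: int = 0) -> list[tuple[str, str]]:
    """Randomly perturb seed prompts and collect (prompt, response) failures."""
    rng = random.Random(seed)
    failures = []
    for _ in range(n_trials):
        prompt = perturb(rng.choice(seed_prompts), rng)
        response = model(prompt)
        if is_failure(prompt, response):
            failures.append((prompt, response))
    return failures


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model: a brittle exact-match lookup."""
    return "4" if "2+2" in prompt else "I'm not sure."
```

For example, `find_failures(toy_model, lambda p, r: r != "4", ["What is 2+2?"])` returns the perturbed prompts that broke the brittle stub, such as ones where a deleted or swapped character splits `2+2`. In practice the stub would be replaced by a call to the model under test and the lambda by a task-specific failure check; weighting each edit by how often real users make it would bring this closer to the likelihood-weighted search used in adaptive stress testing.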
