Iceberg and the Future of Data Intelligence: Powering Intelligent Automation in the Digital Age

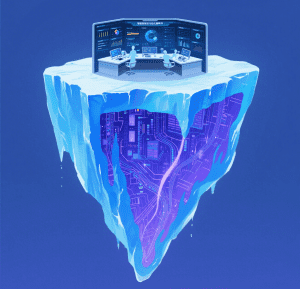

As data becomes the lifeblood of modern enterprises, the role of data platforms is shifting: they are no longer mere reporting tools but engines for intelligent automation that drive real-time decisions, AI-native applications, and autonomous systems. As industrial automation and data-driven workflows converge, data stack architecture must evolve to integrate cleanly with AI agents, machine learning, and automation equipment. At the forefront of this transformation is Apache Iceberg, an open table format that is changing how enterprises put their data to work for a smarter, more automated future.

The New Paradigm: Data Platforms for Intelligent Automation

Gone are the days of static data lakes and batch pipelines. Today’s enterprises demand platforms that can:

- Deliver real-time insights to fuel industrial automation systems

- Support AI-native applications and intelligent agents that learn from dynamic datasets

- Ensure governance and trust in data used across autonomous workflows

- Scale seamlessly alongside evolving automation equipment and AI-driven processes

This shift is giving rise to a new generation of lakehouse architectures: hybrid systems that merge the scalability of data lakes with the transactional rigor of data warehouses. At the core of this movement is Apache Iceberg, which turns cloud object stores into agile, governed layers capable of powering intelligent automation at scale.

Apache Iceberg: The Foundation for Automated Data Intelligence

Apache Iceberg is more than a storage format; it is a catalyst for automation equipment integration and AI readiness. Unlike a plain file format such as Parquet (which Iceberg itself commonly uses for its data files), Iceberg is a table format that adds capabilities aligned with the needs of modern automation:

- Schema Evolution & Row-Level Control: Enables dynamic data modeling for AI pipelines that adapt to changing business rules.

- Time Travel & Metadata Management: Provides auditability for AI/BI workflows, critical for explaining decisions in automated systems.

- Performance at Scale: Snapshot-based metadata and incremental processing support low-latency updates, essential for real-time industrial automation feedback loops.
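
To make the time travel idea in the list above concrete, here is a minimal sketch in plain Python. It is a toy model, not the Iceberg API: `ToyTable`, `Snapshot`, `append`, and `scan` are hypothetical names chosen for illustration, and each snapshot holds a full copy of the rows, whereas real Iceberg snapshots point to manifest and data files. It also assumes commits arrive with non-decreasing timestamps.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Row = Dict[str, object]


@dataclass(frozen=True)
class Snapshot:
    """Immutable view of the table at one commit (toy simplification)."""
    snapshot_id: int
    timestamp_ms: int
    rows: Tuple[Row, ...]


@dataclass
class ToyTable:
    """Hypothetical stand-in for a snapshot-based table; not an Iceberg class."""
    snapshots: List[Snapshot] = field(default_factory=list)

    def append(self, new_rows: List[Row], timestamp_ms: int) -> int:
        """Commit new rows as a new snapshot and return its id."""
        previous = self.snapshots[-1].rows if self.snapshots else ()
        snapshot_id = len(self.snapshots) + 1
        self.snapshots.append(
            Snapshot(snapshot_id, timestamp_ms, previous + tuple(new_rows))
        )
        return snapshot_id

    def scan(self, as_of_ms: Optional[int] = None) -> List[Row]:
        """Read the latest snapshot, or the latest one committed at or before as_of_ms."""
        candidates = [
            s for s in self.snapshots
            if as_of_ms is None or s.timestamp_ms <= as_of_ms
        ]
        return list(candidates[-1].rows) if candidates else []


# Two commits of (made-up) sensor readings.
table = ToyTable()
table.append([{"sensor": "press-1", "temp_c": 71.5}], timestamp_ms=1_000)
table.append([{"sensor": "press-1", "temp_c": 88.0}], timestamp_ms=2_000)

current_rows = table.scan()                   # sees both commits
historical_rows = table.scan(as_of_ms=1_500)  # sees only the first commit
```

Because earlier snapshots are never modified, an auditor can re-read exactly the data an automated decision was based on, which is the property the time travel bullet relies on.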

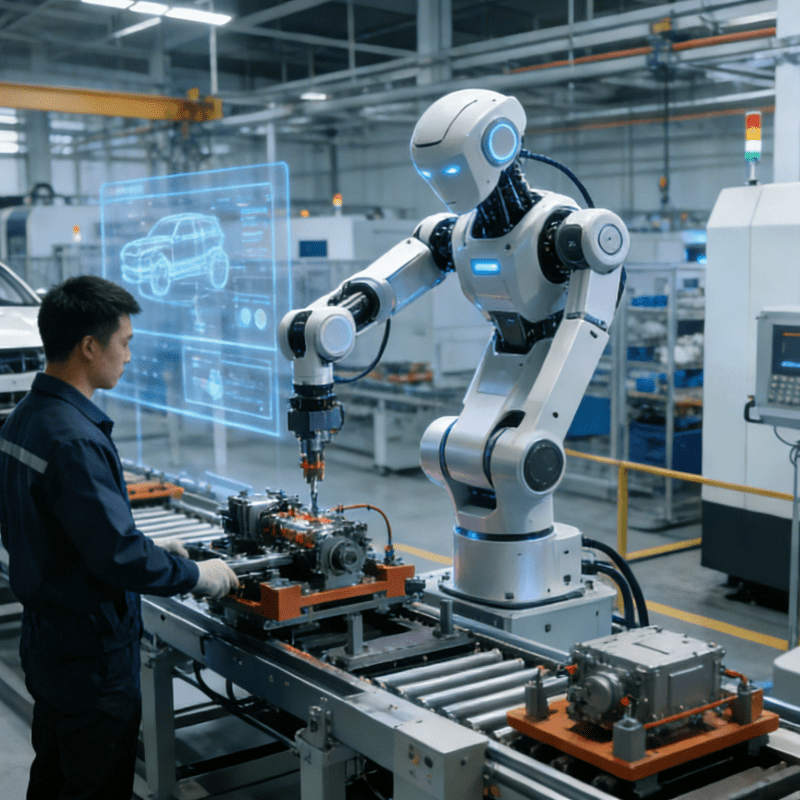

By acting as a vendor-agnostic layer, Iceberg breaks down silos between analytics, machine learning, and intelligent automation tools. For example, it allows data to flow from streaming pipelines (carrying real-time sensor data from automation equipment) to AI models that optimize production lines, a critical loop in smart manufacturing.

Why Iceberg Matters for the Automation-Driven Enterprise

Lakehouse Intelligence for AI-Native Workloads

Iceberg’s unified support for batch and streaming data makes it well suited to enterprises that combine industrial automation with predictive maintenance or quality-control AI. For instance, a factory’s automation equipment can generate real-time data streams that land in Iceberg tables, letting machine learning models predict equipment failures before they occur.
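
The batch-plus-streaming pattern described above can be sketched as follows. This is a toy model under stated assumptions, not Iceberg's API: `ToyAppendLog`, `Reading`, `added_since`, and `flag_overheating` are hypothetical names, the readings are made up, and a fixed temperature threshold stands in for a real predictive maintenance model.

```python
from typing import List, NamedTuple, Tuple


class Reading(NamedTuple):
    machine: str
    temp_c: float


class ToyAppendLog:
    """Append-only table where each commit records the rows it added (toy model)."""

    def __init__(self) -> None:
        self._commits: List[Tuple[int, List[Reading]]] = []

    def commit(self, readings: List[Reading]) -> int:
        """Add a batch of readings as a new snapshot and return its id."""
        snapshot_id = len(self._commits) + 1
        self._commits.append((snapshot_id, list(readings)))
        return snapshot_id

    def full_scan(self) -> List[Reading]:
        """Batch view: every row in the table."""
        return [r for _, rows in self._commits for r in rows]

    def added_since(self, last_seen_snapshot_id: int) -> List[Reading]:
        """Incremental view: only rows committed after the given snapshot."""
        return [
            r
            for snapshot_id, rows in self._commits
            if snapshot_id > last_seen_snapshot_id
            for r in rows
        ]


def flag_overheating(readings: List[Reading], limit_c: float = 85.0) -> List[Reading]:
    """Placeholder for a model: flag readings above an assumed temperature limit."""
    return [r for r in readings if r.temp_c > limit_c]


log = ToyAppendLog()
first = log.commit([Reading("press-1", 70.0), Reading("press-2", 72.5)])
log.commit([Reading("press-1", 91.0)])

all_rows = log.full_scan()        # batch job sees the whole table
new_rows = log.added_since(first) # streaming consumer sees only the new commit
alerts = flag_overheating(new_rows)
```

The design point is that both consumers read the same table: the batch job scans everything, while the streaming consumer remembers the last snapshot it processed and asks only for what was added afterwards.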

Governance for Trusted Automation

In regulated industries, where intelligent automation must comply with strict standards, Iceberg’s metadata tracking and snapshot lineage help make AI-driven decisions auditable. This is vital for sectors like healthcare or finance, where explainability of automated processes is non-negotiable.

Scalability Without Compromise

As enterprises deploy more automation equipment and generate petabytes of data, Iceberg’s optimized storage layout and multi-engine compatibility (Spark, Trino, Snowflake) help keep performance consistent. This scalability is essential for supporting the computational demands of AI agents and real-time analytics in large-scale automation environments.

Architectural Neutrality: A Catalyst for Open Automation Ecosystems

Iceberg’s design prioritizes interoperability for intelligent automation. Unlike formats that grew up closely tied to a single engine (e.g., Delta Lake’s historically Spark-centric tooling), Iceberg’s engine-neutral, snapshot-based approach enables true multi-engine collaboration. This is crucial in hybrid environments where automation equipment from different vendors (e.g., IoT sensors, robotics) must communicate with diverse AI platforms.

For example, a manufacturer using Iceberg can feed data from its automation equipment (powered by AWS IoT) into Trino for real-time analytics and Spark for machine learning, all while maintaining a single source of truth. This flexibility reduces vendor lock-in and accelerates the deployment of cross-platform automation solutions.
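
One way to picture how several engines can share a single source of truth is an atomic pointer swap in the catalog: a writer commits only if the table still points at the metadata version it started from. The sketch below is a simplified illustration of that optimistic-concurrency idea, not Iceberg's actual catalog interface; `ToyCatalog`, `CommitConflict`, and the metadata file names are hypothetical.

```python
import threading
from typing import Optional


class CommitConflict(Exception):
    """Raised when another writer committed first."""


class ToyCatalog:
    """Holds one pointer to the table's current metadata version (toy model)."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._current: Optional[str] = None

    def current_metadata(self) -> Optional[str]:
        with self._lock:
            return self._current

    def commit(self, expected: Optional[str], new: str) -> None:
        """Swap the pointer only if it still equals the version the writer read."""
        with self._lock:
            if self._current != expected:
                raise CommitConflict(
                    f"expected {expected!r}, found {self._current!r}"
                )
            self._current = new


catalog = ToyCatalog()
catalog.commit(None, "v1.metadata.json")  # initial table creation

# Two engines (say, a Spark job and a Trino query) start from the same version.
base_seen_by_spark = catalog.current_metadata()
base_seen_by_trino = catalog.current_metadata()

catalog.commit(base_seen_by_spark, "v2.metadata.json")  # first writer wins

try:
    catalog.commit(base_seen_by_trino, "v3.metadata.json")  # stale base
    conflict_detected = False
except CommitConflict:
    conflict_detected = True
    # The losing writer re-reads the current version and retries on top of it.
    catalog.commit(catalog.current_metadata(), "v3.metadata.json")
```

Because every engine goes through the same compare-and-swap, no writer can silently overwrite another's commit, which is what lets independent tools treat the table as one consistent dataset.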

Closing: Iceberg as the Unifying Force in Automated Intelligence

As industries pivot toward intelligent automation and AI-native operations, Apache Iceberg is emerging as the glue that connects cloud scalability, database reliability, and the flexibility required by dynamic automation workflows. Whether powering predictive maintenance in industrial settings, optimizing supply chains through real-time AI, or enabling autonomous governance in data-driven enterprises, Iceberg helps ensure that automation equipment and AI systems operate on a foundation of trusted, adaptable data.

In the future of data intelligence, where every enterprise is a digital automaton, Iceberg will not just be a tool; it will be the standard that defines how intelligent automation scales, learns, and transforms industries. For organizations ready to embrace the next wave of automation, Iceberg is the key to unlocking a future where data drives innovation without bounds.