Artificial Intelligence (AI) is reshaping human society at an unprecedented pace. It can optimize the allocation of medical resources, support early warnings for climate disasters, and even provide real-time data for peacekeeping operations, making it a “potential tool” for solving global challenges. At the same time, its misuse in the military domain, the information chaos caused by algorithmic manipulation, and the global inequality widened by the technology gap pose hidden threats to international peace and security. As AI’s influence crosses national borders and reaches into the core areas of human life, the question of “how to collectively shape its development” is no longer a task for any single country. It is an era-defining question that only global collaboration can answer.

Against this backdrop, the 80th session of the United Nations General Assembly has become a focal point for global AI governance. The Security Council held a high-level debate on “peace and security,” the General Assembly launched the “Global Dialogue on AI Governance,” and countries, international organizations, and civil society took part broadly. Through these steps, a global collaborative effort aimed at “maximizing benefits and minimizing risks” is gradually taking shape.

The UN’s “Dual-Driver Approach to AI Governance”: From Security Concerns to Global Dialogue

Faced with AI’s dual nature, the UN is advancing global governance through a path of “first focusing on risks, then building consensus”:

The Security Council: Defining the “Peace and Security” Red Line for AI

On September 24, the Security Council held a high-level open debate on AI, placing “AI’s impact on international peace and security” at the core of its agenda. The meeting did not deny AI’s value, but it directly addressed the technology’s potential threats. These include autonomous weapon systems that may weaken human control over the use of force, algorithmic disinformation that may incite conflict, and cyberattacks amplified by AI. Participating countries broadly agreed that these risks must be mitigated through “responsible development and deployment.” Some shared their practices for transparency in military AI applications and stressed the principle of “human-led decision-making.” Others proposed an “AI security early warning mechanism” to keep the abuse of technology from crossing the red lines of peace.

As UN Secretary-General António Guterres stated in his opening remarks: “The question is not whether artificial intelligence will affect international peace and security, but how we shape that impact.” He outlined four priorities:

- ensuring human control over the use of force;
- establishing a coherent global regulatory framework;
- protecting the integrity of information in conflict situations;
- narrowing the AI capability gap between countries.

Together, these priorities set the basic direction for global AI security governance.

The General Assembly: Launching an “Inclusive Global Dialogue” to Amplify the Voices of All 193 Countries

Following the Security Council’s focus on security, the UN General Assembly held a high-level meeting on AI governance on September 25, co-chaired by Costa Rica and Spain. At this meeting, it officially launched the “Global Dialogue on AI Governance.” For the first time, the dialogue gives all 193 UN Member States a seat in discussions on AI governance. It also breaks away from a “government-only” model. Representatives of the scientific community, technology companies, and civil society sat at the same table, contributing perspectives on technical feasibility, business ethics, and the public interest.

The core significance of this dialogue lies in preventing a small number of countries or companies from monopolizing the right to speak on AI governance. As Spanish Prime Minister Pedro Sánchez Pérez-Castejón put it: “Such a powerful technology cannot be monopolized by private interests or authoritarian states.” The General Assembly made it clear that regional consultations and technical seminars will follow. Over time, these are meant to produce governance principles with broad consensus and to build inclusiveness into the foundations of global AI development.

From Resolutions to Mechanisms: The UN’s “Implementation Actions” to Advance AI Governance

In fact, the UN’s exploration of AI governance did not start with this General Assembly. It is a systematic effort that has advanced steadily in recent years, and a series of resolutions and mechanisms has laid the foundation for global governance:

The Global Digital Compact (GDC) and the “Independent International Scientific Panel on AI (IISP-AI)”: Grounding Policies in Science

At the Summit of the Future in September 2024, Member States adopted the Pact for the Future. Its annex, the Global Digital Compact (GDC), set out two key initiatives:

- establishing the “Independent International Scientific Panel on AI (IISP-AI)”;
- launching the “Global Dialogue on AI Governance.”

Both initiatives grew out of recommendations in the 2024 UN report Governing AI for Humanity. Their terms of reference were formally adopted by the UN General Assembly in August 2025.

The core function of IISP-AI is to serve as a “global early warning system” for AI risks. It will draw on the global research community to conduct evidence-based scientific assessments of AI’s opportunities and risks, such as disinformation, autonomous weapons, and algorithmic discrimination. The aim is to ensure that national policymaking “does not deviate from technological reality and does not ignore potential harms.” Contested questions include “whether AI will exacerbate employment inequality” and “whether autonomous weapons comply with international law.” On such questions, the panel will provide objective technical analysis and ethical recommendations to keep policy from swinging to extremes.

Resolution on Military AI: Setting a “Human-Centered Bottom Line” for “Battleground AI”

On December 24, 2024, the UN General Assembly adopted a resolution jointly drafted by the Netherlands and South Korea, focusing specifically on “artificial intelligence in the military field.” The resolution stresses that even in military settings, AI must be used in line with the principles of “responsibility and human-centeredness.” It holds that humans must retain ultimate control over military decisions and that “life-or-death authority” must not be transferred to machines. Portuguese President Marcelo Rebelo de Sousa reaffirmed this position at this session of the General Assembly: “This is a moral, ethical, and legal responsibility that cannot and should not be shirked.”

Supplementary Regional Practices: The “Risk Classification” Experience of the EU’s AI Act

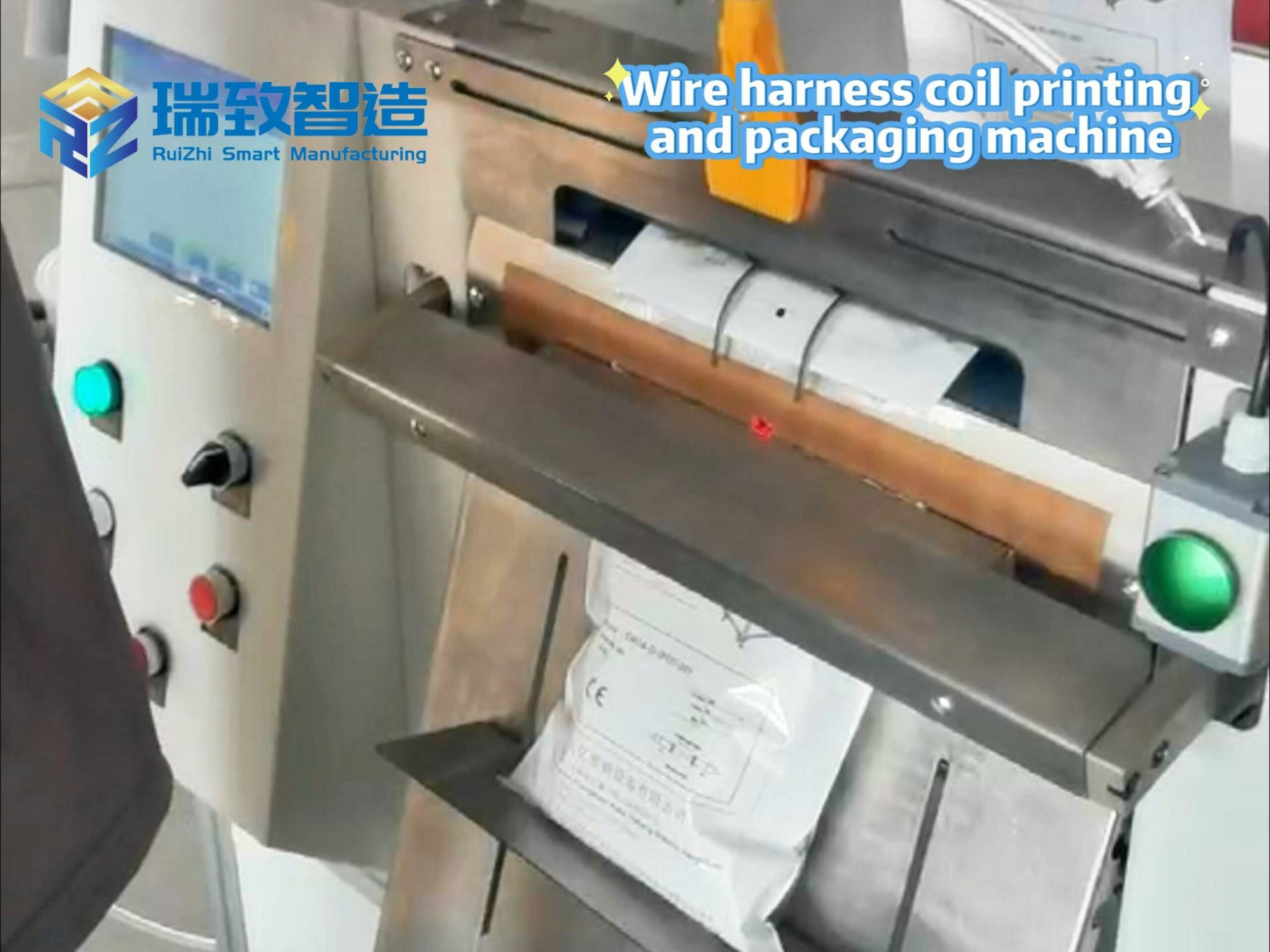

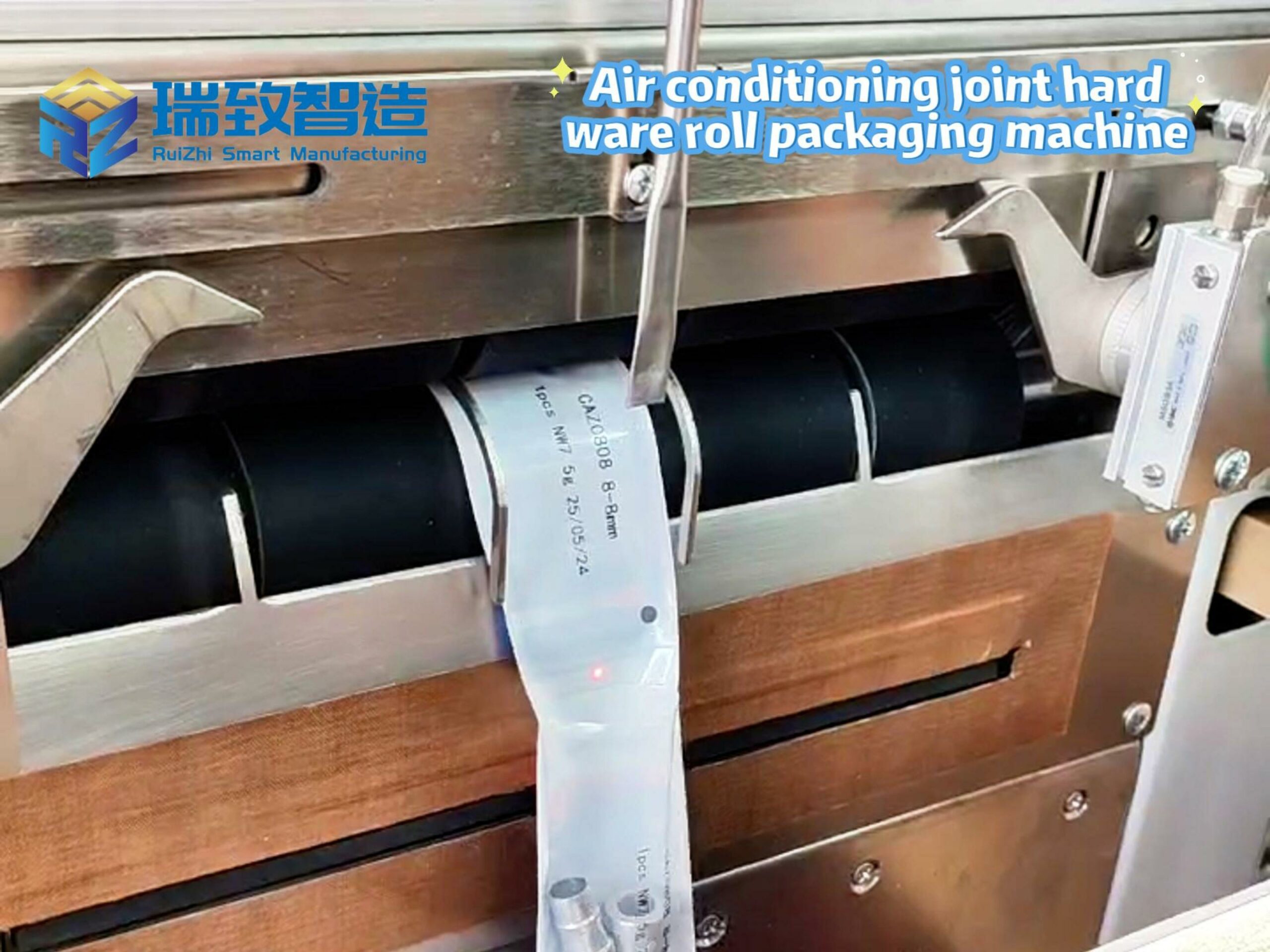

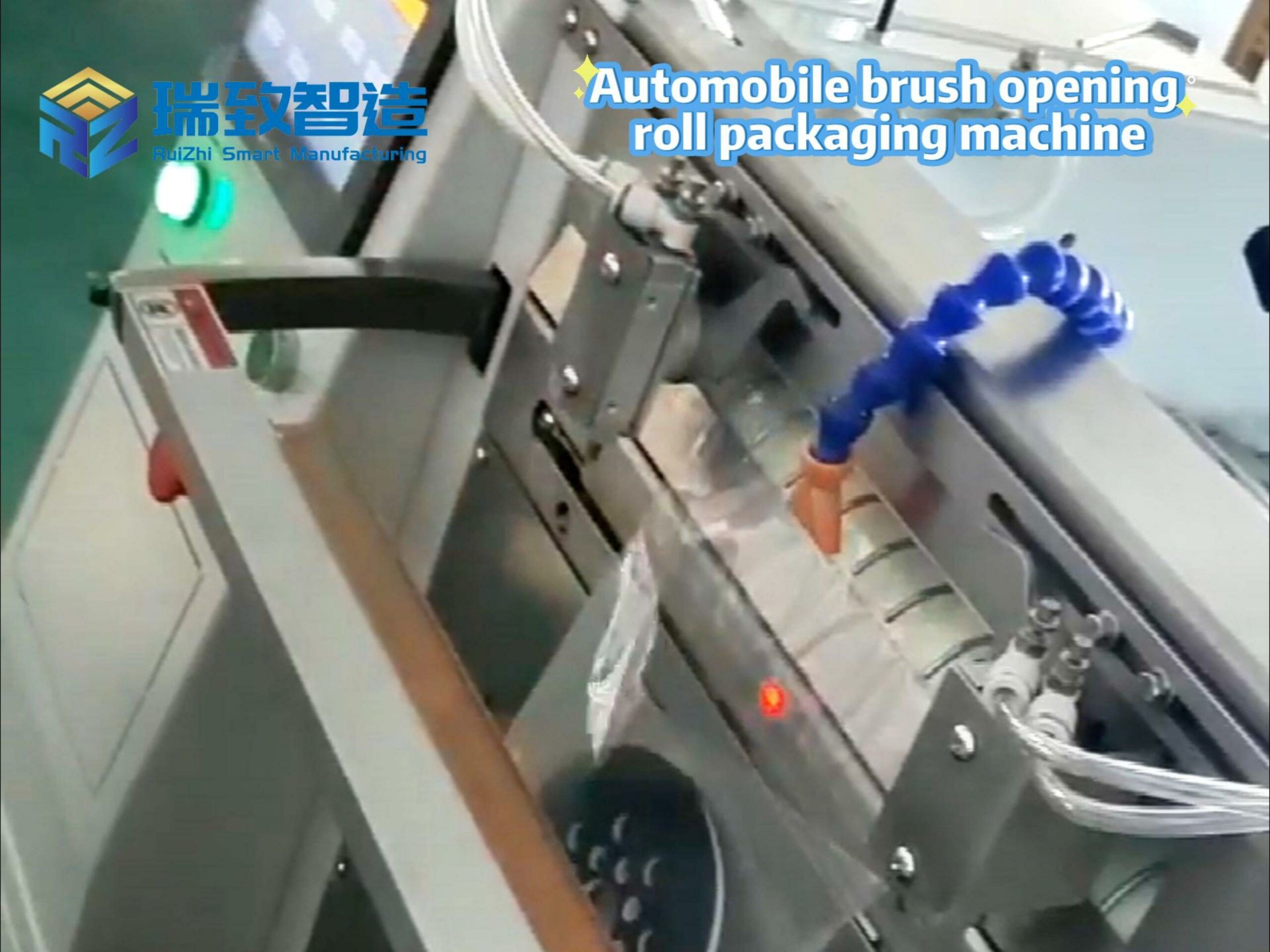

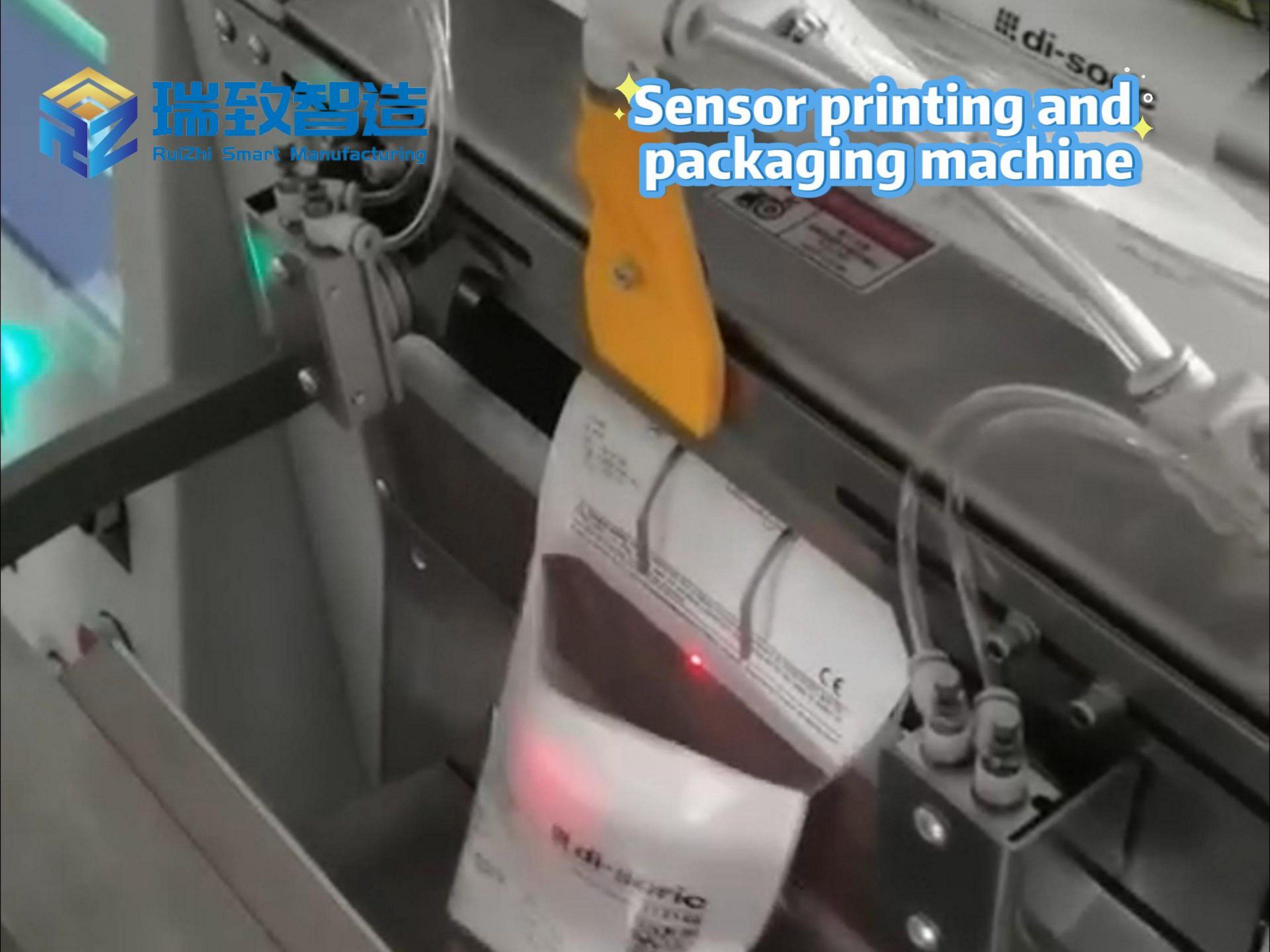

Beyond the global governance framework, regional practices also offer references for the UN. A typical example is the EU’s AI Act, widely described as the world’s first comprehensive AI law. It establishes a risk-based classification system that divides AI applications into four categories:

- “unacceptable risk” (such as social scoring), which is prohibited;
- “high risk” (such as medical diagnosis and autonomous driving);
- “limited risk” (such as chatbots), which carries transparency obligations;
- “minimal risk.”

Each level carries different regulatory obligations. High-risk AI, for instance, must undergo conformity assessment and maintain technical documentation.

This classification has proven practical in guiding industrial AI applications. One example is AI-powered automatic material arranging and packaging equipment, which is widely used in logistics, food processing, and electronics manufacturing. Such equipment is generally treated as a lower-risk application. Using computer vision and adaptive algorithms, it can automatically identify material sizes, shapes, and textures to optimize arrangement and packaging. Compared with manual operation, it reportedly reduces material waste by 15–20% and improves efficiency by 30%. Within the AI Act’s framework, responsible deployment still involves disclosing key algorithm logic, such as how material characteristics are identified. It also involves regular data security audits to prevent leaks of sensitive product information. At this session of the General Assembly, the EU stated that it is willing to share this experience, including regulatory cases involving such industrial AI equipment. It offered this as a “practicable reference sample” for the global regulatory framework.

National Consensus and Challenges: Seeking the “Greatest Common Divisor” Amid Differences

Although there is global consensus on the necessity of AI governance, there are still differences in specific paths—and these differences are precisely the core issues that the “global dialogue” needs to address:

On “Technological Sovereignty” and “Collaborative Regulation”: Some developing countries worry that overly strict global rules may limit their room for AI development, and they call for regulation that also safeguards technological inclusion. Developed countries, on the other hand, emphasize “unified standards” to keep AI risks from spreading across borders. The UN’s proposed “Global Capacity Development Fund” (the third pillar of Guterres’ Global Strategy) responds to this concern. By funding AI infrastructure and talent training in developing countries, it aims to narrow the technology gap and lay the foundation for collaborative regulation.

On “Innovation Speed” and “Security Bottom Line”: Tech companies tend to favor “flexible regulation” that leaves room for rapid iteration. Civil society organizations and some countries, however, put “security first” and call for a “mandatory review mechanism” for high-risk AI applications. The view of Mark Rutte, the former Dutch Prime Minister and current NATO Secretary General, is representative: “The pace of AI development is beyond expectation. We must not stagnate due to fear, nor lose control due to blindness. We must keep up with technological progress through ‘agile governance’.”

On “Energy Sustainability”: General Assembly President Annalena Baerbock specifically pointed out that AI training and data centers consume massive amounts of energy, which may exacerbate the climate crisis. This “overlooked risk” needs to be incorporated into the governance framework—future AI development must be not only “human-centered” but also “earth-centered.”

Conclusion: The AI We Want Is Ultimately “AI That Serves Humanity”

Eighty years ago, the founders of the UN, confronted with the disruptive technology of atomic energy, chose international cooperation to avert disaster and embrace progress. Today, facing AI, this generation shoulders the same mission. As Costa Rican Foreign Minister Arnoldo André Tinoco put it: “This power can both illuminate the future and cast shadows, and our responsibility is to use wisdom to keep it always oriented toward the light.”

The AI we want is not a tool monopolized by a few, nor a hidden danger that triggers conflict. It is a “global public good” that narrows the gap between rich and poor, safeguards peace and security, and supports sustainable development. The “Global Dialogue” launched by the UN provides a platform for this goal. It may not resolve all differences overnight, but it brings 193 countries and diverse stakeholders to the same table to write the future of AI together.

This path will be long. But if we hold to the principle of “putting people first” and remain “open and inclusive,” humanity can avoid the crises AI may bring and make this technology a genuine force for the progress of civilization. After all, technology itself is neither good nor evil; what shapes it is always humanity’s collective choice.