Breakthroughs in artificial intelligence (AI) technology are driving human-computer interaction to evolve from functional assistance toward emotional and personalized engagement. This evolution is reshaping patterns of social interaction, and it has also given rise to a series of new risks and challenges. Because these risks tend to spread in concealed ways, they may infringe upon citizens’ rights and interests, and may even undermine social ethical order and the foundation of public trust. Without effective governance, they would seriously hinder the sound development of AI and harm the public interest. Against this backdrop, the formulation of the Interim Measures for the Administration of Anthropomorphic Interactive Services of Artificial Intelligence (Draft for Comments) (hereinafter referred to as the Measures) helps promote the orderly development of AI anthropomorphic interactive services, guides responsible AI innovation, and provides an important institutional underpinning for building a people-centered AI governance system.

Anchoring the National Strategic Direction and Highlighting the Concept of “Responsible Innovation”

Grounded in national strategies and policy guidelines, the Measures reflects foresight, precision, systematic thinking and coordination in AI governance. First, it upholds the principle of science-based legislation, anticipating the trajectory of technological evolution and reserving institutional space for innovation in AI. Second, it implements categorized and tiered supervision, identifying risks precisely according to the characteristics of anthropomorphic interactive services and adopting differentiated regulatory measures. Third, it builds a whole-chain governance system that coordinates technological innovation, ethical norms and social benefits to ensure the sound and orderly development of AI. Fourth, it fosters diversified co-governance, combining government supervision with industry self-regulation to improve governance efficiency.

Guided by the concept of “responsible innovation”, the Measures clarifies the boundaries of responsibility for AI innovation activities, ensuring that technology always advances on a safe, equitable and sustainable track. Because anthropomorphic interactive services simulate intrinsic human traits to establish quasi-social emotional bonds with users, they may alienate or distort emerging social relationships. The concept of “responsible innovation” embodied in the legislation aims precisely to safeguard the status of human beings as subjects and to uphold the value of genuine interpersonal relationships. The rules built on this concept focus on preventing the abuse of technology from impairing human dignity, social trust and ethical order, and on ensuring that the development of AI always serves the well-being of the people.

Focusing on Prominent Risks and Constructing a Precision Governance System

The Measures precisely identifies and systematically responds to the major risks arising from the two core characteristics of “anthropomorphism” and “emotional interaction”. Treating the blurred boundary between humans and AI as its key governance priority, it builds a multi-dimensional risk prevention and control system.

First, the Measures establishes an identity transparency system and sets a safety red line for anthropomorphism, precisely guarding against risks such as cognitive confusion, erosion of trust, infringement of personality rights and ethical transgression caused by the blurred human-AI boundary. On the one hand, the identity transparency system, centered on the obligation of prominent labeling, prioritizes users’ right to know and right to choose, provides a clear starting point for legal accountability, strips away the cover of anonymity that shields technological abuse, and reduces the risks of cognitive confusion and trust erosion at the source. On the other hand, the Measures defines a political security red line for AI anthropomorphism, guarding against deepfakes and new forms of cyber violence and protecting individuals’ personality rights and interests from infringement. Underlying both, the legislation positions anthropomorphic interactive services as a supplement to human relationships rather than a substitute for them, prohibits the replacement or distortion of genuine interpersonal relationships, and ensures that technological development conforms to mainstream values.

Second, the Measures strengthens the protection of vulnerable groups and establishes an anti-addiction mechanism, addressing the distinct needs of different groups and reflecting a philosophy of humanistic care and substantive equity. Because they are highly emotionally responsive and designed for companionship, anthropomorphic interactive services inherently carry addiction risks. Minors are in a critical period for forming values and developing social skills, while the elderly may face the dual predicament of the digital divide and a lack of emotional companionship; both groups are therefore prone to emotional dependence in anthropomorphic interaction. The legislation specifically translates these concerns into social responsibilities that enterprises must bear, ensuring that the dividends of technological progress reach all groups equitably and safely and realizing substantive fairness and justice.

Third, the Measures emphasizes stringent protection of users’ interaction information, an in-depth, scenario-specific application of the Personal Information Protection Law. Emotion-related personal information can reveal users’ inner world, emotional vulnerabilities and subconscious tendencies, and its improper use may lead to psychological manipulation, discriminatory treatment and emotional extortion. Meanwhile, affective computing technologies may be abused to provide addictive services that lead users to disclose more sensitive information without realizing it. Imposing stricter purpose limitations, secure storage requirements and usage prohibitions on users’ interaction data is therefore an extension of personality rights protection into the digital and intelligent era.

Practicing a Systematic Governance Mindset and Implementing Inclusive and Prudent Supervision

Through whole-chain accountability, flexible regulatory tools and diversified co-governance, the Measures provides a solid guarantee for putting the “responsible innovation” concept into practice and promotes the sound development of the industrial ecosystem.

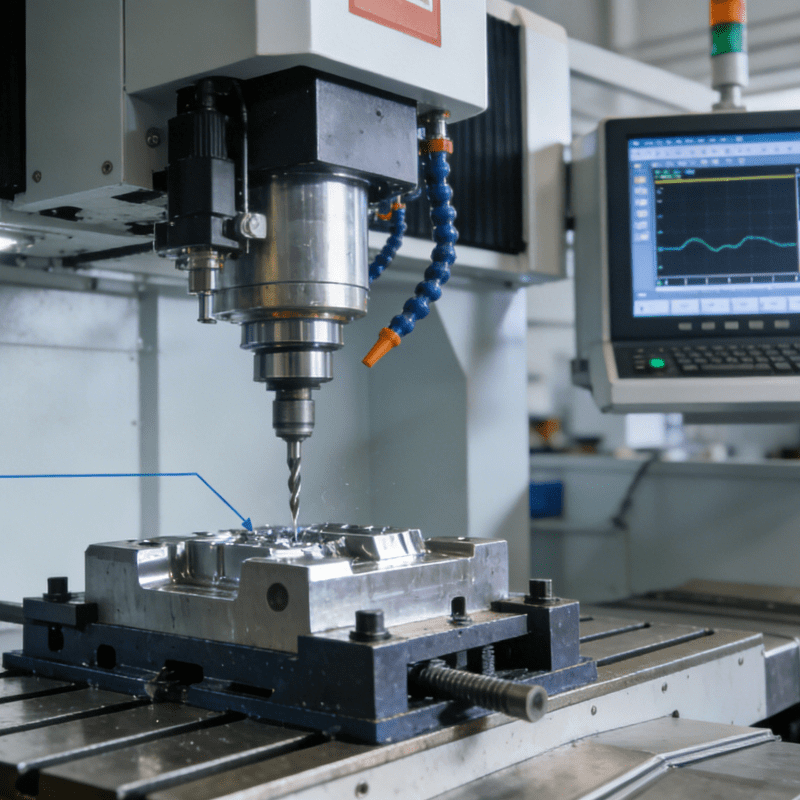

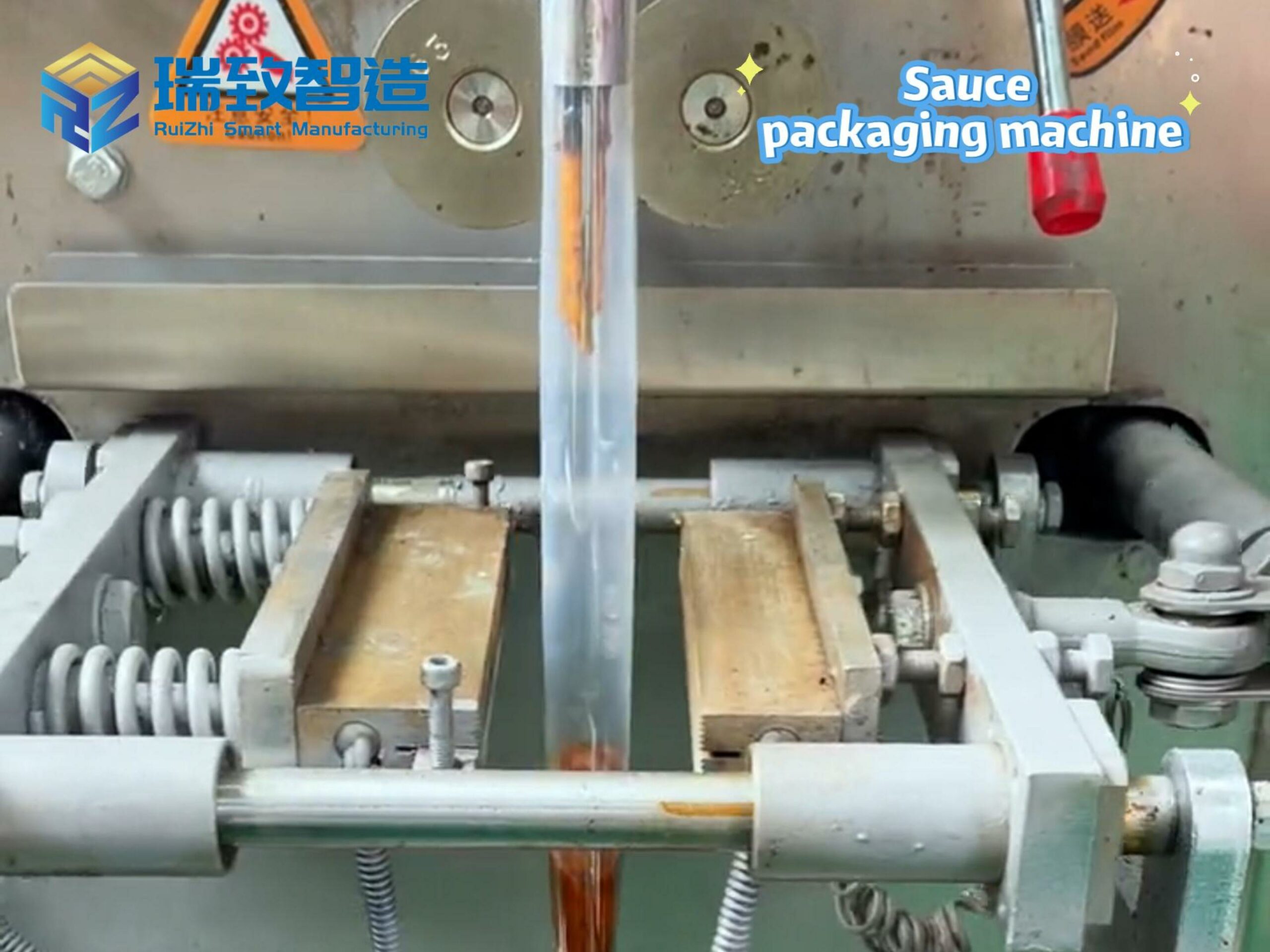

First, the Measures builds responsibility into every key stage of anthropomorphic interactive services to achieve precise risk prevention and control. At the model design stage, consequences such as the replacement of social interaction, psychological manipulation of users and the inducement of addiction and dependence are to be prevented at the source. At the model training stage, training data should reflect mainstream values, the security of synthetic data should be evaluated, and data contamination should be prevented. Before application and deployment, safety assessments are to be conducted in accordance with the law to avoid major security risks. During service operation, a dynamic risk-handling mechanism is to be established so that emerging risks are addressed promptly. This whole-process governance model can effectively avoid the lag inherent in after-the-fact remedial supervision, internalize risk management into enterprises’ core business processes, and maximize governance efficiency.

Second, the Measures emphasizes collaborative co-governance, encouraging industry organizations to formulate standards and strengthen self-regulation, which reflects the legislators’ clear understanding of the complexity of AI governance. The Measures responds to the diverse governance needs of new technologies and applications by integrating the governance resources of government, enterprises, industry organizations, the public and other actors into a joint governance effort. At the same time, it seeks a dynamic balance between risk prevention and encouraging innovation, fostering an agile, multi-stakeholder, collaborative AI governance pattern and facilitating the high-quality development of anthropomorphic interactive services in a standardized and orderly environment.

Third, the Measures actively implements inclusive and prudent supervision and explores flexible regulatory tools such as regulatory sandboxes, providing an institutional environment in which new technologies and applications can undergo safe trial and error. For the first time in a departmental rule, the Measures sets out a regulatory sandbox system, allowing enterprises to carry out innovation pilots and safety tests under the supervision and guidance of regulatory authorities. This safeguards enterprises’ capacity to innovate while enabling risks that arise during pilots to be detected and prevented in a timely manner. This flexible regulatory model suits the rapid iteration and diverse application scenarios of AI technology, creating favorable conditions for new technologies to mature and to be rolled out under safe risk control.