Opening: New Hurdle in Colorado AI Regulation Negotiations – Liability Amendment Sparks Developer Concerns

The protracted negotiations over revising Colorado’s artificial intelligence regulations have hit another snag: a liability amendment proposed on Sunday that developers of AI systems fear could expose them to unfair lawsuits.

Legislative Background: Budget Shortfall and Controversy Over the 2024 AI Act

In addition to seeking to fill a $783 million budget gap during the special session, legislators are discussing how to roll back rules in a 2024 law. Tech industry leaders have said that these rules are unworkable and, if they take effect in February, could lead to a mass exodus of AI developers from Colorado. Two bills currently attempt to address the issue: one from the original law’s sponsor, which would eliminate some disclosure requirements but strengthen protections in other ways; and one from bipartisan sponsors, which seeks to simplify the rules substantially, a move that has raised concerns even among consumer groups.

Bill Progress: Bipartisan Bill Scaled Back, Only Extends Effective Date

Since both bills received approval from the first committee on the opening day of the session on Thursday, they have undergone significant revisions. Sponsors of House Bill 1008 (the bipartisan bill) removed its regulatory reform provisions, leaving only an extension of the 2024 Act’s effective date to October 2026. They stated that this would give legislators the entire 2026 legislative session to discuss necessary reforms.

Adjustments to SB 4: Unrealistic Provisions Removed, Replaced with Streamlined Requirements

Senate Majority Leader Robert Rodriguez (D-Denver), the driving force behind both the 2024 Act and Senate Bill 4 (SB 4), withdrew a requirement from SB 4 on Sunday. The requirement would have obliged deployers to give individuals affected by adverse AI-driven decisions a list of the 20 personal characteristics that most influenced the decision. Facing criticism that the provision was unworkable, Rodriguez replaced it with a rule requiring AI deployers to provide affected individuals, upon request, with an overview of the personal characteristics that most influenced the decision.

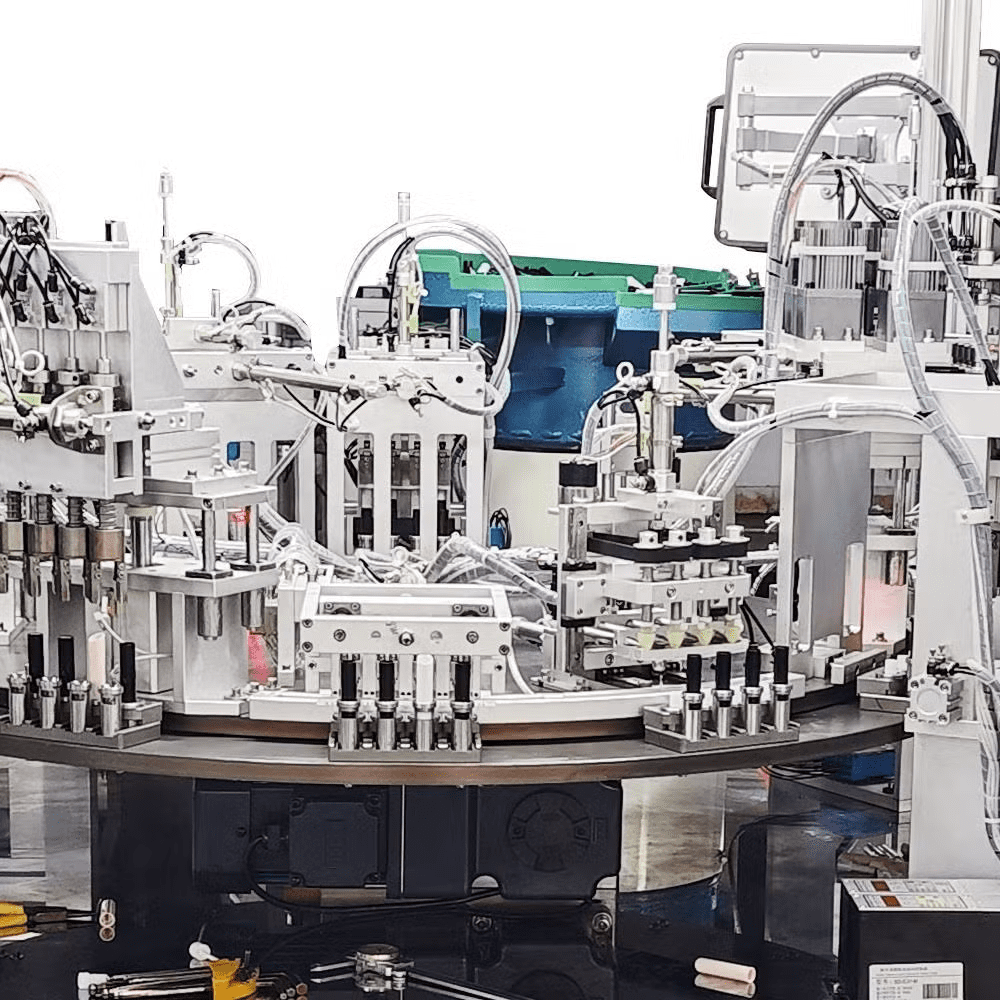

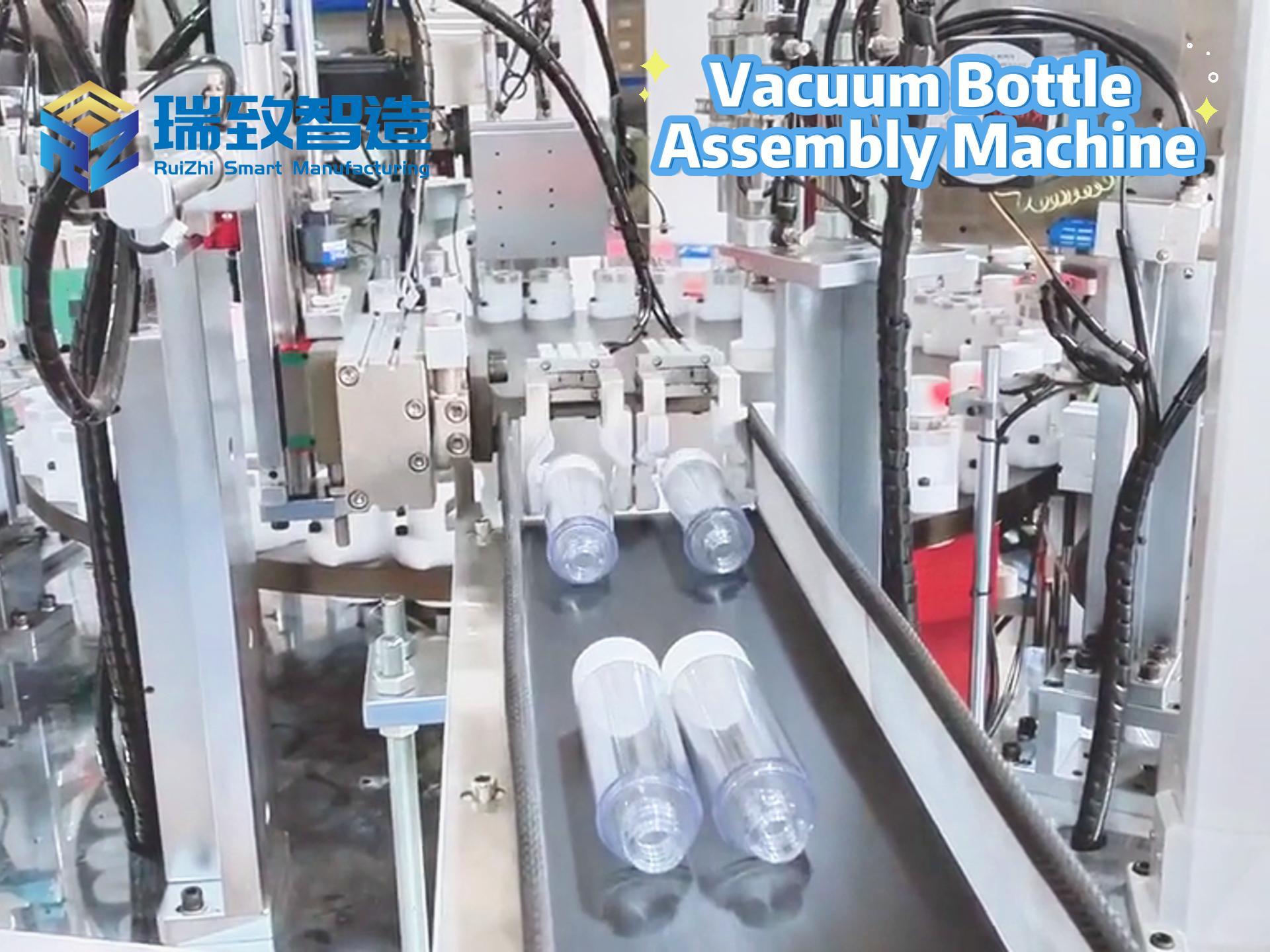

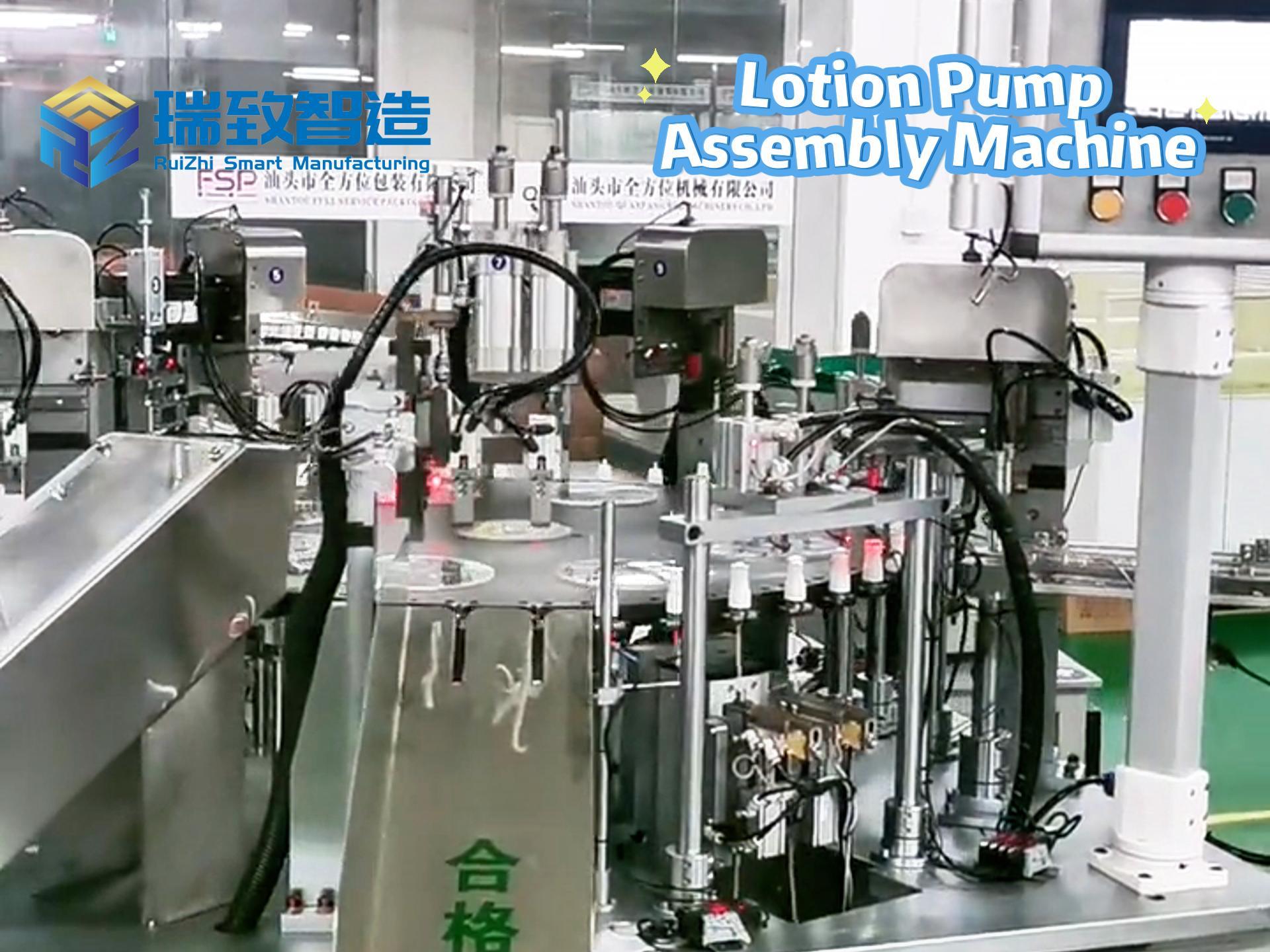

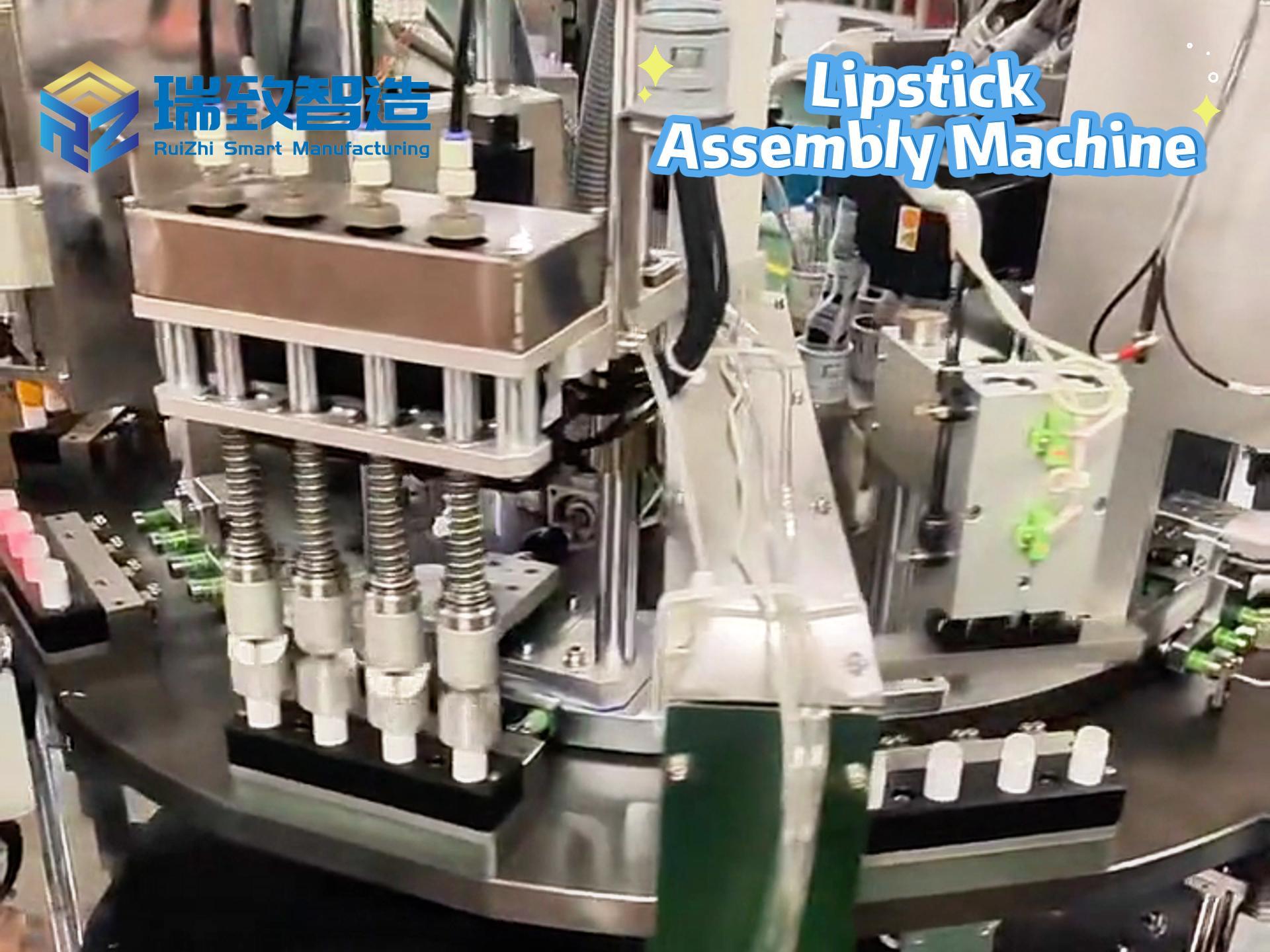

Core Controversy: New Joint Liability Provision Becomes a “Sticky Obstacle” (Including Scenarios Involving Nebulizer Assembly Equipment)

However, during the Senate debate, Rodriguez also added an amendment to the bill, stipulating that if an AI system is found to violate Colorado’s anti-discrimination laws or consumer protection laws, developers and deployers would be held jointly liable. Although he later removed this provision from the bill to continue negotiations with business, consumer, and labor groups, the issue of liability has clearly emerged as the latest obstacle to reaching any consensus.

This liability controversy also extends to AI applications in manufacturing, for example a nebulizer assembly machine in the medical device sector. Suppose the AI visual inspection system fitted to such a machine fails, because of algorithmic bias, to uphold nebulizer sealing performance standards, which could compromise patient medication safety. Under the new liability provisions, a developer that supplied only the AI inspection module and took no part in the overall deployment of the equipment could still be held jointly liable with the manufacturer operating it. Tech companies worry that this “one-size-fits-all” approach to liability would expose developers to legal risks they cannot manage, since they have no control over a downstream manufacturer’s equipment debugging, raw material selection, and other processes. That, in turn, would make them less willing to supply AI technology for medical manufacturing equipment.

Liability Differences Between SB 4’s Original Provisions and New Rules

SB 4 initially included a provision allowing lawsuits against developers and deployers of certain systems. Tech leaders argued that this could hold developers liable even if they bore no responsibility for deployments beyond their control. However, the original provision included several safe harbor clauses, stating that developers would not be held jointly liable if they took all reasonable measures to prevent abuse, had no intent to enable abuse, and could not reasonably foresee such abuse.

The new rules, however, would remove these specific safe harbor clauses and stipulate that developers would be held jointly liable with deployers under the following circumstances:

The deployer of the AI system uses it for its intended or advertised purpose;

The deployer uses the AI system in a manner intended or reasonably foreseeable by the developer; or

The output of the AI system is not substantially altered by data provided by the deployer, nor does it involve the deployer’s independent judgment or discretion.

The provision further specifies that a party found jointly liable under this clause has the right to seek contribution from the other liable party, and that the court shall determine the percentage of fault attributable to the developer and to the deployer.

Tech Industry Concerns: Liability Shifts May Hinder Colorado’s AI Development

Leaders of coalitions representing business interests in the negotiations – including the Colorado Chamber of Commerce, the Colorado Technology Association, and TechNet – have warned that such liability shifts could hinder the development of new or existing AI initiatives in Colorado.

For instance, they argue that it could hold developers liable when companies use AI systems to make hiring decisions, even though the developers made no employment decisions themselves and lack sufficient knowledge of customer data to determine whether a hiring decision violated the Colorado Anti-Discrimination Act. They add that it could encourage deployers to outsource discriminatory decisions to software vendors that are not subject to anti-discrimination laws.

Business Representatives’ Views: Liability Boundaries Must Be Defined by Role

“A major concern we’ve heard from both developers and deployers is that they are perfectly willing to take responsibility for what they can control,” Rachel Beck, Executive Director of the Colorado Chamber Foundation, stated in testimony on Thursday. “Developers build the systems but have no control over customer customization. Deployers customize the systems but do not build the frameworks. We believe additional work is needed to ensure that liability is assessed based on specific roles, so that the liability system can achieve the legislature’s goals.”

Positions of Both Sides: Rodriguez Insists on Joint Liability; Developers Advocate for Liability Differentiation

Rodriguez told the Senate Business, Labor, and Technology Committee on Thursday that he wanted to add joint liability to the law because deployers accused of discrimination may not fully understand the AI systems they have purchased. When introducing the liability amendment on Sunday, he stated that the provision would still let developers avoid liability if they could prove they had done nothing wrong.

During Thursday’s committee hearing, while questioning Adams Price, chairman of the Colorado Technology Association (CTA) board, about liability, Rodriguez expressed concern that people would sue deployers of AI systems over biased decisions that originated in the developers’ systems.

“If the AI you developed is used – without modification – as you intended, and it causes harm, should you be held liable?” Rodriguez asked.

Price replied, “Someone has to be held liable. I don’t think both parties should be… As a developer, I have no relationship with consumers. I also have no relationship with the people who use the system directly. Therefore, I cannot have direct knowledge of the situation. Someone should be held responsible. But in this scenario, I don’t think developers should be held jointly liable.”

Legislative Process: AI Bill Negotiations Delay Special Session

While the legislature has passed and sent several revenue-related bills to Governor Jared Polis, the two AI regulatory bills have progressed much more slowly due to ongoing negotiations among sponsors.

Late on Sunday, during the Senate’s discussion of SB 4, Rodriguez seemed to suggest that he had advanced the bill as far as possible. However, he later withdrew the contentious liability amendment, stating that further negotiations were needed. The bill received initial approval on Sunday and was originally scheduled for a final vote in the Senate on Monday morning, but this has now been delayed once again.

Multilateral Concerns: State Government Also Faces Potential Legal Risks

It is not only business interest groups that have expressed concerns about the impact of the joint liability provision in the bill. Amy Bhikha, Chief Data Officer of the Governor’s Office of Information Technology, told the Senate Committee that the provision could also expose the state government to “significant legal liability” if AI system vendors abuse it.