From Automation to Exclusion: Rethinking AI Efficiency in the Global South

A Civil Service Transformed: The Case of Hong Kong

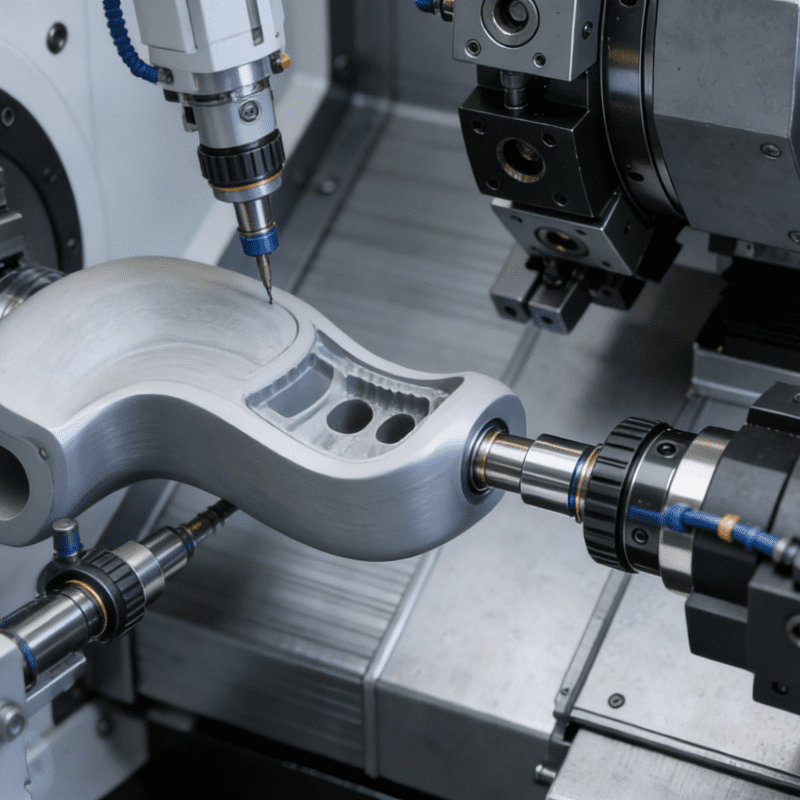

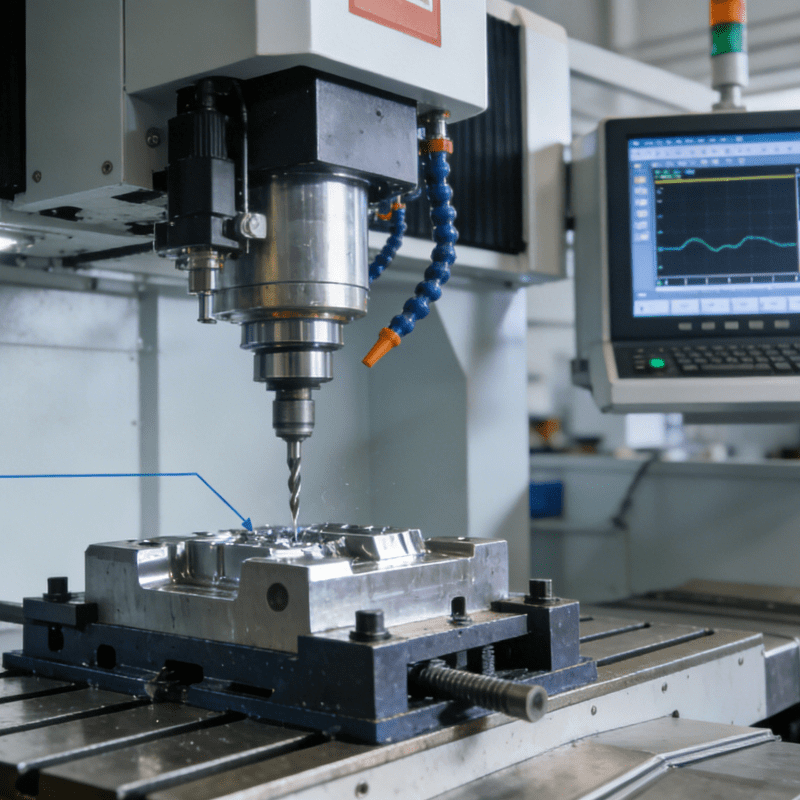

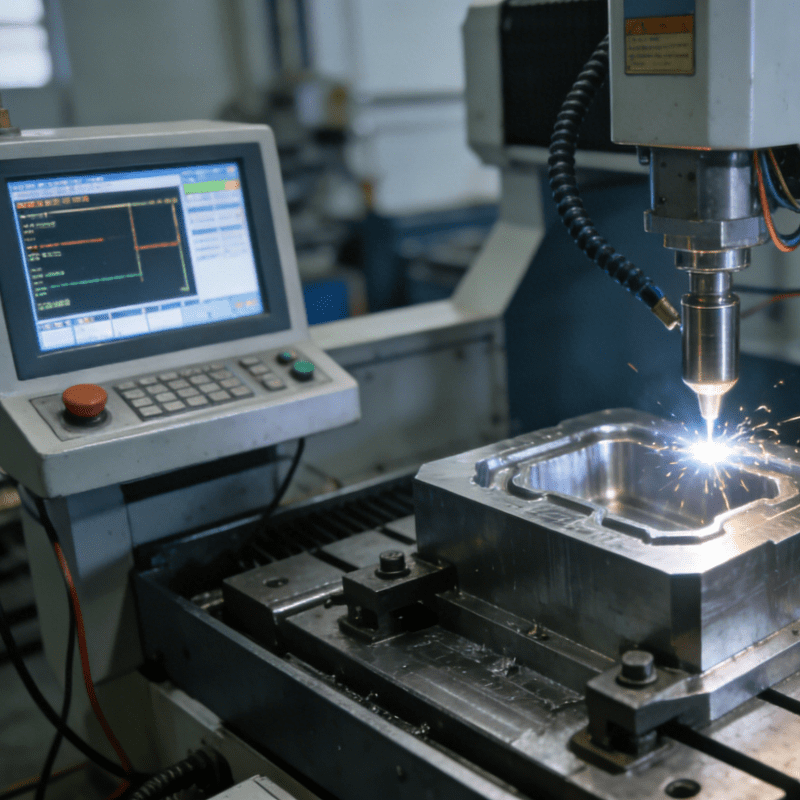

As automation reshapes global labor markets, Hong Kong is at the forefront of a radical experiment in applying AI within its civil service. The city’s push to deploy AI-driven solutions aims to boost government efficiency and ease fiscal pressures, but it also exemplifies a troubling trend: the potential for automated and algorithmic systems to exacerbate inequality, especially in regions of the Global South where digital infrastructure and labor protections lag.

According to a February 2025 report by CNA, Hong Kong plans to make AI a cornerstone of a civil service restructuring that will cut approximately 10,000 positions by 2027, reducing staff by 2% annually. The strategy hinges on using intelligent automation to maintain public service quality while trimming costs. For instance, the Census and Statistics Department now employs AI to automate verification tasks once handled manually. To support this shift, the city has allocated over HK$11 billion (US$1.4 billion) to AI innovation, including funding for R&D institutions and a technology fund targeting future industries. This investment underscores how automation and AI are increasingly viewed as panaceas for governance challenges, yet it raises urgent questions about who bears the brunt of “efficiency.”

A Global Pattern: AI as Evaluator, Not Just Executor

Hong Kong’s approach mirrors a broader global trend: from Indonesia to sub-Saharan Africa, artificial intelligence is not merely automating tasks but evaluating the worth of human workers. In pilot regions across the Global South, civil servants are now scored by AI systems that analyze collaboration metrics, email patterns, and task outputs to “recommend” which roles are redundant. This algorithmic evaluation echoes initiatives in the U.S., where the Department of Energy uses AI to flag procurement anomalies, and South Korea, which has trialed AI to assess public health performance. In many African and Southeast Asian nations, donor-funded projects rely on algorithmic scoring to determine local staff continuity, all under the guise of efficiency.

The Distorted Lens of Efficiency

On the surface, AI’s promise of objective evaluation seems appealing. But the danger lies in the lens itself: AI systems, shaped by their designers’ biases, often fail to account for the nuanced value of roles centered on emotional labor, preventive care, or cultural context, roles disproportionately held by women and marginalized groups. Automated systems may track outputs, but they cannot measure the intention behind a community outreach effort or the discretion needed in diplomatic negotiations. When “efficiency” is defined by what algorithms can quantify, the invisible work that sustains social cohesion becomes expendable.

A Looming Social Risk in the Global South

The risk is particularly acute in developing nations, where AI-driven job displacement can deepen unemployment in economies with scarce alternative opportunities. The digital literacy divide leaves many workers ill-equipped to transition into roles requiring fluency in intelligent automation tools, while uneven digital infrastructure exacerbates inequality. Rwanda offers a glimmer of hope with its policy of mandatory community consultations and AI literacy programs for government automation projects—a model that prioritizes inclusive governance over blind technological adoption.

Governance That Protects Human Dignity

The crux of the issue lies in three missing governance pillars: explainability (so employees understand why they are rated “low value”), human-in-the-loop decision-making (to allow for compassion and context), and public transparency (to ensure independent oversight). Without these, automation and AI become tools of quiet exclusion: workers are not fired, but “scored out” by systems that prioritize efficiency metrics over human context. In the Global South, where resistance to automation is often framed as “anti-progress,” this creates a climate where ethical reflection is sidelined.
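To make the three pillars concrete, here is a minimal Python sketch of how they might appear as hard constraints in an evaluation pipeline. It is an illustration under stated assumptions, not a description of any deployed system: the names `Evaluation`, `AuditLog`, `propose`, and `finalize`, the 0-to-1 score, and the sample role and reviewer are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Evaluation:
    role_id: str
    score: float                    # hypothetical 0-1 score produced by a model
    reasons: list                   # explainability: factors the employee can read
    reviewer: Optional[str] = None  # human-in-the-loop: who made the final call
    decision: Optional[str] = None  # stays None until a person decides


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, event: str, ev: Evaluation) -> None:
        # Append a record that an independent overseer could inspect later.
        self.entries.append({"event": event, "role_id": ev.role_id,
                             "score": ev.score, "reviewer": ev.reviewer,
                             "decision": ev.decision})

    def public_summary(self) -> dict:
        # Transparency without exposing individuals: counts per event type.
        summary = {}
        for entry in self.entries:
            summary[entry["event"]] = summary.get(entry["event"], 0) + 1
        return summary


def propose(role_id, score, reasons, log):
    # Pillar 1: a score with no stated reasons is rejected outright.
    if not reasons:
        raise ValueError("a score without stated reasons is not accepted")
    ev = Evaluation(role_id, score, list(reasons))
    log.record("proposed", ev)
    return ev


def finalize(ev, reviewer, decision, log):
    # Pillar 2: the algorithm only proposes; a named person decides.
    if not reviewer:
        raise ValueError("a named human reviewer is required")
    ev.reviewer, ev.decision = reviewer, decision
    log.record("finalized", ev)  # Pillar 3: every step is logged
    return ev


log = AuditLog()
ev = propose("role-017", 0.31, ["few logged task outputs"], log)
finalize(ev, "panel-A", "retain: outreach work is not captured by the metric", log)
print(log.public_summary())
```

The point of the design is that the score alone can never produce an outcome: it must arrive with reasons, it must pass through a named reviewer who can overrule it, and both steps leave a trace that can be summarized publicly.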

Contextual Governance, Not Imported Frameworks

The solution is not to reject AI, but to ground its deployment in contextual governance. Multidisciplinary teams must co-design systems with embedded ethics officers and built-in auditability. Crucially, automation frameworks must be adapted to local social structures, not imported from the Global North. Most developing nations are AI users, not developers, making them vulnerable to biases in foreign-built systems. Meanwhile, reskilling programs must scale to support workers displaced by automation, ensuring inclusive participation in the digital economy.

What Kind of System Are We Building?

When AI becomes the arbiter of human worth, silence becomes complicity. It is not enough to build efficient systems; we must build systems that recognize why human labor, with its emotional intelligence, cultural nuance, and contextual wisdom, matters. The challenge for the Global South is to harness the potential of automation without sacrificing the dignity of those who power its societies. In the end, the question is not how to make AI work, but how to make AI work for all.