As robotics evolves from “mechanical execution” toward “intelligent interaction,” autonomous robotic systems have moved beyond the “scripted limitations” of traditional automated robots. Their core capability is to operate without continuous human intervention while adapting dynamically to their environment, which has made them a key technology reshaping fields such as industrial manufacturing, healthcare, and logistics. These systems not only complete preset tasks independently but also make their own decisions and adjustments when something unexpected happens (e.g., a blocked path or a change in the environment), turning machines from passive executors into active decision-makers. This article analyzes the essence and value of autonomous robotic systems, covering how they differ from automated robots, their core characteristics, their technical principles, and their industry applications.

Redefining Autonomous Robots: More Than “Automation,” It’s About “Adaptability”

To understand autonomous robots, we first need to clarify their fundamental difference from “automated robots.” While both reduce human involvement, the core distinction lies in their “ability to respond to changes”—a difference that directly defines their application boundaries and value:

Core Definition of Autonomous Robots

An autonomous robotic system is a robot, or a group of cooperating robots, that independently handles task planning, environmental interaction, and fault recovery without continuous human input, relying on three core capabilities: dynamic perception, autonomous decision-making, and closed-loop control. Autonomy here does not mean complete independence from people (humans still set the initial task and calibrate the working range); it means freedom from reliance on continuous human control. Even when the environment changes beyond preset parameters (e.g., a temporary obstacle or a part that deviates from specification), the system analyzes the situation in real time, generates its own solution, and keeps working instead of halting to wait for human instructions.

For example, an autonomous AGV (Automated Guided Vehicle) that moves materials in a factory does more than follow its initially planned route through the workshop. If it encounters temporarily stacked shelves that are not marked on its initial map, it uses LiDAR to capture the obstacle in real time and autonomously re-plans the shortest detour within 1–2 seconds. A traditional automated AGV in the same situation simply triggers a shutdown alarm and cannot resume operation until a person removes the obstacle or reconfigures the route.
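The re-planning step can be illustrated with a minimal sketch. It assumes the workshop map is discretized into a 2D occupancy grid and uses A* search, one common choice for shortest-path planning; the article does not say which planner a particular AGV uses, and the grid, the coordinates, and the `astar` function are hypothetical.

```python
import heapq

def astar(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = blocked).

    Returns the list of cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance never overestimates with unit-cost 4-way moves,
        # so A* still returns a shortest path.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]   # entries are (f = g + h, g, cell)
    came_from = {start: None}
    best_g = {start: 0}
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:      # walk parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > best_g[cell]:
            continue                     # outdated queue entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                if g + 1 < best_g.get(nxt, float("inf")):
                    best_g[nxt] = g + 1
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (g + 1 + h(nxt), g + 1, nxt))
    return None

# Hypothetical 3 x 5 workshop grid: the AGV drives along row 1.
grid = [[0] * 5 for _ in range(3)]
route = astar(grid, (1, 0), (1, 4))     # straight run, 5 cells

# LiDAR reports a shelf that is not on the map: mark its cell blocked and re-plan.
grid[1][2] = 1
detour = astar(grid, (1, 0), (1, 4))    # 7 cells: two extra moves around the shelf
```

Marking the newly detected cell as blocked and re-running the search is the simplest form of re-planning; incremental planners such as D* Lite reuse earlier search results to re-plan faster on large maps.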

Key Differences from Automated Robots

In terms of working logic, automated robots are essentially “tools that precisely execute pre-programmed scripts.” They repeat fixed actions (e.g., welding the same joint at the same angle and force every time on a production line, or grasping parts of the same specification along a fixed trajectory) and cannot handle changes outside the script. A 10mm shift in part placement or slight vibration at the welding station may cause operational failure.

Autonomous robots, however, possess “the ability to actively perceive and adjust.” They use sensors to capture environmental data in real time (e.g., part position deviations, station vibration amplitude), then analyze the causes of deviations via AI algorithms and autonomously adjust action parameters (e.g., correcting grasping angles, compensating for vibration displacement). For instance, an autonomous tightening robot in automotive assembly will automatically fine-tune the robotic arm’s posture to ensure precise screw alignment if it detects a 2mm shift in the screw hole position—no manual re-calibration of the station is needed.

In terms of core objectives, automated robots focus on “improving the efficiency and precision of repetitive tasks” and are suitable for scenarios with stable environments and single tasks (e.g., material unloading on assembly lines, fixed-station inspection). Autonomous robots, by contrast, focus on “expanding the task boundaries of complex scenarios” and can operate reliably in environments with frequent changes and flexible tasks (e.g., mixed-model production lines, outdoor unmanned delivery).

Three Core Characteristics of Autonomous Robots: The Technical Foundation Supporting “Autonomy”

The “autonomous capability” of autonomous robots is not achieved by a single technology but is jointly supported by three progressive core characteristics: “dynamic perception,” “autonomous decision-making,” and “closed-loop control.” Together, these three form a complete closed loop of “perception–decision–execution–feedback,” and none can be omitted:

Dynamic Perception: Equipping Robots with “Eyes and Touch”

Perception is the prerequisite for autonomous decision-making. Autonomous robots use a combination of “internal sensors + external sensors” to monitor their own status and the external environment in real time, ensuring comprehensive and accurate data collection:

Internal sensors: Monitor the robot’s own operating status, including motor speed, battery level, joint temperature, and robotic arm load. For example, if the motor temperature of an autonomous welding robot exceeds a preset threshold (e.g., 80°C), the internal temperature sensor will feed back the data in real time and trigger a speed reduction protection mechanism to prevent equipment damage.

External sensors: Build an “interaction bridge” between the robot and the environment. Core types include:

LiDAR (Light Detection and Ranging): Used for 3D environmental modeling and obstacle detection, with ranging accuracy as fine as ±2cm.

Vision cameras (2D/3D): Used for part recognition and position localization (e.g., identifying material boxes of different specifications).

Ultrasonic sensors: Used for short-range obstacle avoidance, suitable for narrow spaces.

Force sensors: Installed at the end of robotic arms to detect grasping force and avoid damaging fragile parts (e.g., glass products).
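The internal-sensor example above (a motor temperature above 80°C triggering speed reduction) can be sketched as a small guard with hysteresis. The 80°C trip point comes from the text; the 75°C reset point and the 50% derated speed are hypothetical values chosen for illustration, and `ThermalGuard` is not a real library class.

```python
class ThermalGuard:
    """Speed-reduction protection driven by an internal motor-temperature sensor.

    Hysteresis: derate above TRIP_C, restore full speed only below RESET_C,
    so readings hovering near the threshold do not toggle the speed.
    """

    TRIP_C = 80.0         # threshold from the example in the text
    RESET_C = 75.0        # hypothetical reset point (5 degC hysteresis band)
    DERATED_SCALE = 0.5   # hypothetical: run at 50 % speed while hot

    def __init__(self) -> None:
        self.derated = False

    def speed_scale(self, motor_temp_c: float) -> float:
        """Return the speed multiplier to apply for this temperature sample."""
        if not self.derated and motor_temp_c > self.TRIP_C:
            self.derated = True
        elif self.derated and motor_temp_c < self.RESET_C:
            self.derated = False
        return self.DERATED_SCALE if self.derated else 1.0

guard = ThermalGuard()
scales = [guard.speed_scale(t) for t in (70.0, 82.0, 78.0, 74.0)]
# scales == [1.0, 0.5, 0.5, 1.0]: still derated at 78 degC, restored at 74 degC
```

The hysteresis band is the design choice worth noting: with a single threshold, a reading that hovers around 80°C would switch the motor between full and reduced speed on every sample.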

These sensors work together to enable the robot to generate “real-time environmental snapshots.” For example, an autonomous picking robot in a warehouse uses LiDAR to build a 3D map of the warehouse, vision cameras to identify product barcodes on shelves, and force sensors to control grasping force—ensuring precise operation even in dynamic environments (e.g., other robots moving around, slight shifts of products on shelves).
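One way to assemble such a snapshot is sketched below under stated assumptions: each sensor driver pushes timestamped readings, and the decision layer only trusts readings younger than a per-sensor age limit, so a stalled camera shows up as missing data rather than silently stale data. The sensor names, age limits, and the `SnapshotBuilder` class are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional

@dataclass
class Reading:
    value: Any
    stamp_s: float   # time at which the sensor produced the value

class SnapshotBuilder:
    """Keeps the latest reading per sensor and assembles a snapshot of fresh data."""

    def __init__(self, max_age_s: Dict[str, float]) -> None:
        self.max_age_s = max_age_s            # per-sensor freshness limit
        self.latest: Dict[str, Reading] = {}

    def update(self, sensor: str, value: Any, stamp_s: float) -> None:
        self.latest[sensor] = Reading(value, stamp_s)

    def snapshot(self, now_s: float) -> Dict[str, Optional[Any]]:
        """Map every expected sensor to its fresh value, or None if stale or missing."""
        snap: Dict[str, Optional[Any]] = {}
        for sensor, limit in self.max_age_s.items():
            r = self.latest.get(sensor)
            fresh = r is not None and now_s - r.stamp_s <= limit
            snap[sensor] = r.value if fresh else None
        return snap

# Hypothetical limits: force data must be far fresher than map data.
builder = SnapshotBuilder({"lidar": 0.2, "camera": 0.1, "force": 0.01})
builder.update("lidar", "obstacle 1.8 m ahead", stamp_s=1.00)
builder.update("camera", "barcode SKU-123", stamp_s=0.85)   # camera has stalled
builder.update("force", 4.2, stamp_s=0.995)                 # grip force in N
snap = builder.snapshot(now_s=1.00)
```

Returning `None` for stale sensors forces the decision layer to handle missing data explicitly, for example by slowing down until the camera recovers.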

Autonomous Decision-Making: Giving Robots “Judgment Ability”

Decision-making is the “brain” of an autonomous robot. Its core lies in converting massive amounts of sensor-collected data into “executable action commands” through AI algorithms and machine learning models. Key capabilities include task planning, exception handling, and dynamic optimization:

Task planning: Based on initial task objectives (e.g., “transport 10 boxes of materials from Area A to Area B within 2 hours”) and real-time environmental data (e.g., path congestion, material box weight), the robot automatically breaks down task steps (e.g., “first pick up 3 boxes from Shelf A1 → avoid the congested route in Area C → prioritize using pallets with higher load capacity”).

Exception handling: When encountering unforeseen situations, the robot autonomously generates alternative solutions. For example, if an autonomous delivery robot detects a temporary road closure while driving on a street, it will use map data and real-time traffic information to re-plan a detour within 3 seconds, prioritizing routes with fewer sidewalks and traffic lights.

Dynamic optimization: Continuously improve decision-making efficiency through machine learning. For example, an autonomous factory AGV records the time and energy consumption of each path plan, then optimizes subsequent route selections via a reinforcement learning model—gradually reducing the time required for similar tasks by 5%–10%.
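The task-planning example can be sketched as a simple decomposition, assuming the robot carries a fixed number of boxes per trip, each candidate route has a known round-trip time, and congested routes are excluded. The `Route` type, the route names, and the times are hypothetical; a real planner would weigh many more factors (box weight, pallet capacity, other robots' schedules).

```python
from dataclasses import dataclass

@dataclass
class Route:
    name: str
    round_trip_min: float   # time for one A -> B -> A cycle
    congested: bool         # e.g. flagged from real-time traffic data

def plan_transport(boxes, capacity, routes, deadline_min):
    """Split a transport order into trips on the fastest uncongested route.

    Returns (steps, total_minutes, meets_deadline). Every trip is costed as a
    full round trip, which is conservative for the final trip.
    """
    usable = [r for r in routes if not r.congested]
    if not usable:
        raise RuntimeError("no uncongested route; wait or escalate to an operator")
    best = min(usable, key=lambda r: r.round_trip_min)
    steps = []
    remaining = boxes
    while remaining > 0:
        load = min(capacity, remaining)
        steps.append(f"carry {load} box(es) from A to B via {best.name}")
        remaining -= load
    total = len(steps) * best.round_trip_min
    return steps, total, total <= deadline_min

# "Transport 10 boxes from Area A to Area B within 2 hours", 3 boxes per trip.
routes = [Route("Area C", 8.0, congested=True), Route("corridor D", 12.0, congested=False)]
steps, total_min, on_time = plan_transport(10, 3, routes, deadline_min=120)
```

Here the faster route through Area C is skipped because it is congested, and the order becomes four trips (3 + 3 + 3 + 1 boxes) totalling 48 minutes, well inside the deadline.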

This decision-making ability is not achieved by “presetting all scenarios” but through “data-driven real-time analysis.” For instance, an autonomous surgical assistance robot in healthcare can autonomously adjust the movement speed of surgical instruments based on real-time changes in the patient’s blood pressure and in tissue tension (measured by force sensors), avoiding tissue damage caused by excessive operating speed.
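The dynamic-optimization item above mentions a reinforcement learning model that learns from recorded trip times. A full reinforcement-learning formulation does not fit in a short example, so the sketch below swaps in a simpler, related technique: an epsilon-greedy multi-armed bandit, where each candidate route is an arm and the learner tracks the average observed travel time. The route names, travel times, and noise level are hypothetical.

```python
import random

class RouteSelector:
    """Epsilon-greedy bandit: usually take the route with the lowest average
    travel time, occasionally explore another so the estimates stay current."""

    def __init__(self, routes, epsilon=0.1, seed=None):
        self.routes = list(routes)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {r: 0 for r in self.routes}
        self.mean_min = {r: 0.0 for r in self.routes}

    def choose(self):
        for r in self.routes:
            if self.counts[r] == 0:
                return r                              # try every route once first
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.routes)       # explore
        return min(self.routes, key=self.mean_min.__getitem__)   # exploit

    def record(self, route, minutes):
        # Incremental mean: no need to store every past trip.
        self.counts[route] += 1
        self.mean_min[route] += (minutes - self.mean_min[route]) / self.counts[route]

# Hypothetical ground truth the robot does not know: route B is fastest.
true_minutes = {"A": 10.0, "B": 7.0, "C": 12.0}
noise = random.Random(1)
selector = RouteSelector(true_minutes, epsilon=0.1, seed=0)
for _ in range(500):
    route = selector.choose()
    selector.record(route, true_minutes[route] + noise.gauss(0.0, 0.5))
```

Unlike the reinforcement-learning setup in the text, a plain bandit ignores context such as time of day or current congestion; a contextual bandit or a full RL policy would condition the choice on such features.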

Closed-Loop Control: Ensuring “Precision and Safety” in Decision Execution

Control is the “hands and feet” of an autonomous robot. Through a closed-loop control system (a cycle of “execution–feedback–correction”), it ensures that decision commands are executed precisely while strictly adhering to safety rules:

Motion control: Executes decision commands via servo motors and precision transmission systems (e.g., ball screws) while feeding back execution precision in real time. For example, when an autonomous assembly robot executes the command “screw the bolt into the hole,” if the vision camera detects a 0.5mm alignment deviation, the control system will immediately adjust the robotic arm’s posture to correct the deviation before continuing the tightening action.

Safety control: Embeds multi-layer safety rules to ensure the robot does not harm personnel or equipment during autonomous operation. For example, a collaborative autonomous robot (e.g., a robotic arm assisting workers in a workshop) will automatically reduce its movement speed if sensors detect a human approaching within 50cm, and immediately stop if the human comes within 20cm—avoiding collision risks.

Boundary control: Strictly abides by preset work scopes and task boundaries. For example, an autonomous inspection robot in a factory localizes itself using GPS and LiDAR. If it detects that it is about to leave its designated work area (e.g., it is approaching a boundary wall), it immediately triggers a reverse movement command to stay within the area.
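The three control layers above can be sketched as three small functions, under stated assumptions: motion control is a proportional correction loop driven by a vision measurement; safety control maps the nearest person's distance to a speed fraction using the 50cm and 20cm thresholds from the text (the 25% slow-speed level is hypothetical); and boundary control checks whether the position predicted a couple of seconds ahead is still inside a polygonal work area. All names, gains, and coordinates are illustrative. In practice, collaborative-robot safety functions are typically implemented in safety-rated controllers rather than in application code like this.

```python
import math
from typing import Optional

# Motion control: measure, correct, re-measure.
def align(measure_error_mm, move_by_mm, gain=0.8, tol_mm=0.05, max_iters=20):
    """Proportional correction loop; returns how many corrections were needed."""
    for cycle in range(max_iters):
        error = measure_error_mm()
        if abs(error) <= tol_mm:
            return cycle                  # aligned: tightening may continue
        move_by_mm(-gain * error)         # move part of the way toward zero error
    raise RuntimeError("alignment did not converge; stop and raise an alarm")

# Safety control: speed zones around people.
STOP_CM, SLOW_CM = 20.0, 50.0             # distances from the text
SLOW_FRACTION = 0.25                      # hypothetical reduced-speed level

def speed_limit_fraction(human_distance_cm: Optional[float]) -> float:
    """Allowed speed fraction; pass math.inf when no person is detected."""
    if human_distance_cm is None:         # no valid sensor reading: fail safe
        return 0.0
    if human_distance_cm <= STOP_CM:
        return 0.0
    if human_distance_cm <= SLOW_CM:
        return SLOW_FRACTION
    return 1.0

# Boundary control: geofence with look-ahead.
def inside_polygon(x, y, polygon):
    """Ray casting: a point is inside if a ray toward +x crosses an odd number of edges."""
    inside = False
    for (x1, y1), (x2, y2) in zip(polygon, polygon[1:] + polygon[:1]):
        if (y1 > y) != (y2 > y):          # edge straddles the ray's height
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def boundary_command(pos, velocity, work_area, lookahead_s=2.0):
    """Reverse if the position predicted lookahead_s ahead leaves the work area."""
    px = pos[0] + velocity[0] * lookahead_s
    py = pos[1] + velocity[1] * lookahead_s
    return "continue" if inside_polygon(px, py, work_area) else "reverse"

# Simulated bolt 0.5 mm off the hole; the arm realizes only 95 % of each command.
state = {"offset_mm": 0.5}
cycles = align(lambda: state["offset_mm"],
               lambda d: state.update(offset_mm=state["offset_mm"] + 0.95 * d))

area = [(0.0, 0.0), (50.0, 0.0), (50.0, 20.0), (0.0, 20.0)]   # hypothetical 50 m x 20 m
```

Because the loop re-measures after every move, the unmodeled 5% actuator error is absorbed: the offset shrinks from 0.5mm to about 0.12mm and then about 0.03mm, inside tolerance after two corrections.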

This closed-loop control ensures the robot’s actions are “both precise and controllable.” For example, an autonomous press-fitting robot in new energy vehicle battery assembly monitors press-fitting force and displacement in real time (via force-displacement sensors). If the press-fitting force exceeds a preset threshold (e.g., 1000N), it will immediately stop press-fitting, trigger an alarm to avoid damaging battery cells, and record abnormal data for subsequent analysis.
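The press-fitting example can be sketched as a monitor that reads force–displacement samples during the stroke, stops at the first sample above the 1000N threshold from the text, and keeps the recorded curve for later analysis. The sample format, `run_press_fit`, and the sample values are hypothetical; on real equipment the stop command goes to the press controller rather than being a function return.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Optional, Tuple

FORCE_LIMIT_N = 1000.0   # threshold from the example in the text

@dataclass
class PressFitResult:
    ok: bool
    stopped_at_mm: Optional[float] = None
    curve: List[Tuple[float, float]] = field(default_factory=list)

def run_press_fit(samples: Iterable[Tuple[float, float]],
                  force_limit_n: float = FORCE_LIMIT_N) -> PressFitResult:
    """Consume (displacement_mm, force_n) samples; stop on the first over-limit force."""
    curve: List[Tuple[float, float]] = []
    for displacement_mm, force_n in samples:
        curve.append((displacement_mm, force_n))   # keep the curve for later analysis
        if force_n > force_limit_n:
            # This is where a real cell would halt the press and raise an alarm.
            return PressFitResult(ok=False, stopped_at_mm=displacement_mm, curve=curve)
    return PressFitResult(ok=True, curve=curve)

normal = run_press_fit([(0.0, 0.0), (1.0, 300.0), (2.0, 650.0), (3.0, 900.0)])
jammed = run_press_fit([(0.0, 0.0), (1.0, 400.0), (1.5, 1150.0), (2.0, 1300.0)])
```

Because the function consumes samples lazily, passing a generator that reads the sensor means nothing after the stop point is read, and the truncated curve shows exactly where the anomaly began.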

Industry Applications of Autonomous Robots: From “Efficiency Improvement” to “Scenario Expansion”

Autonomous robotic systems are no longer “laboratory technologies”—they have penetrated multiple industries, solving complex problems that traditional automation cannot address and driving transformations from “efficiency optimization” to “scenario expansion”:

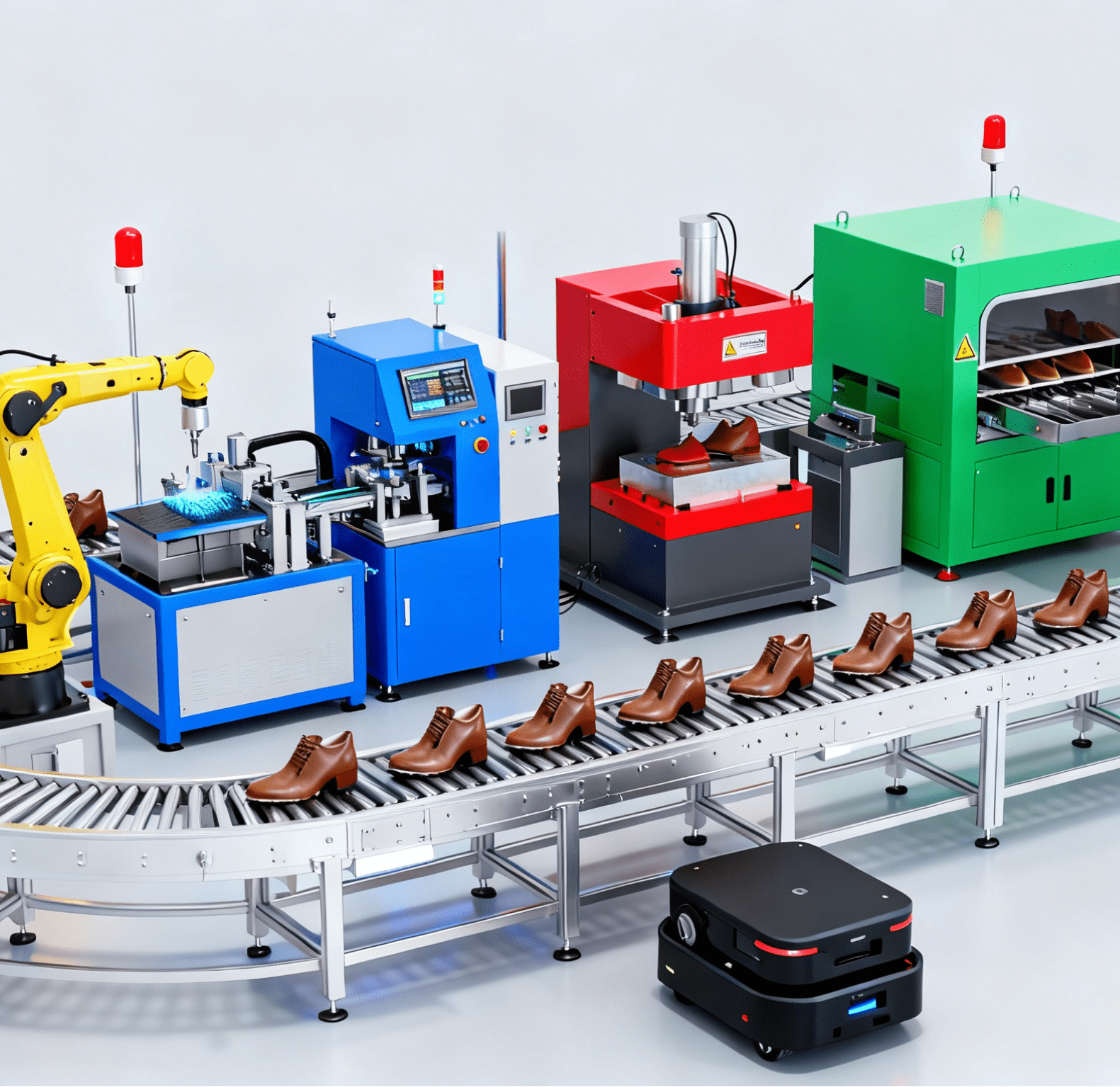

Industrial Sector: Reshaping Flexible Production Models

As manufacturing shifts from “large-batch single-product production” to “small-batch multi-product production,” autonomous robots have become the core of flexible production:

Autonomous AGVs and material management: Replace traditional fixed conveyors to move dynamically in workshops and adjust material delivery sequences in real time based on production progress. For example, autonomous AGVs in an automotive parts factory can independently determine whether to “prioritize delivering engine parts or chassis parts” based on the material consumption rate of the production line—reducing the material shortage rate of the production line by 30%.

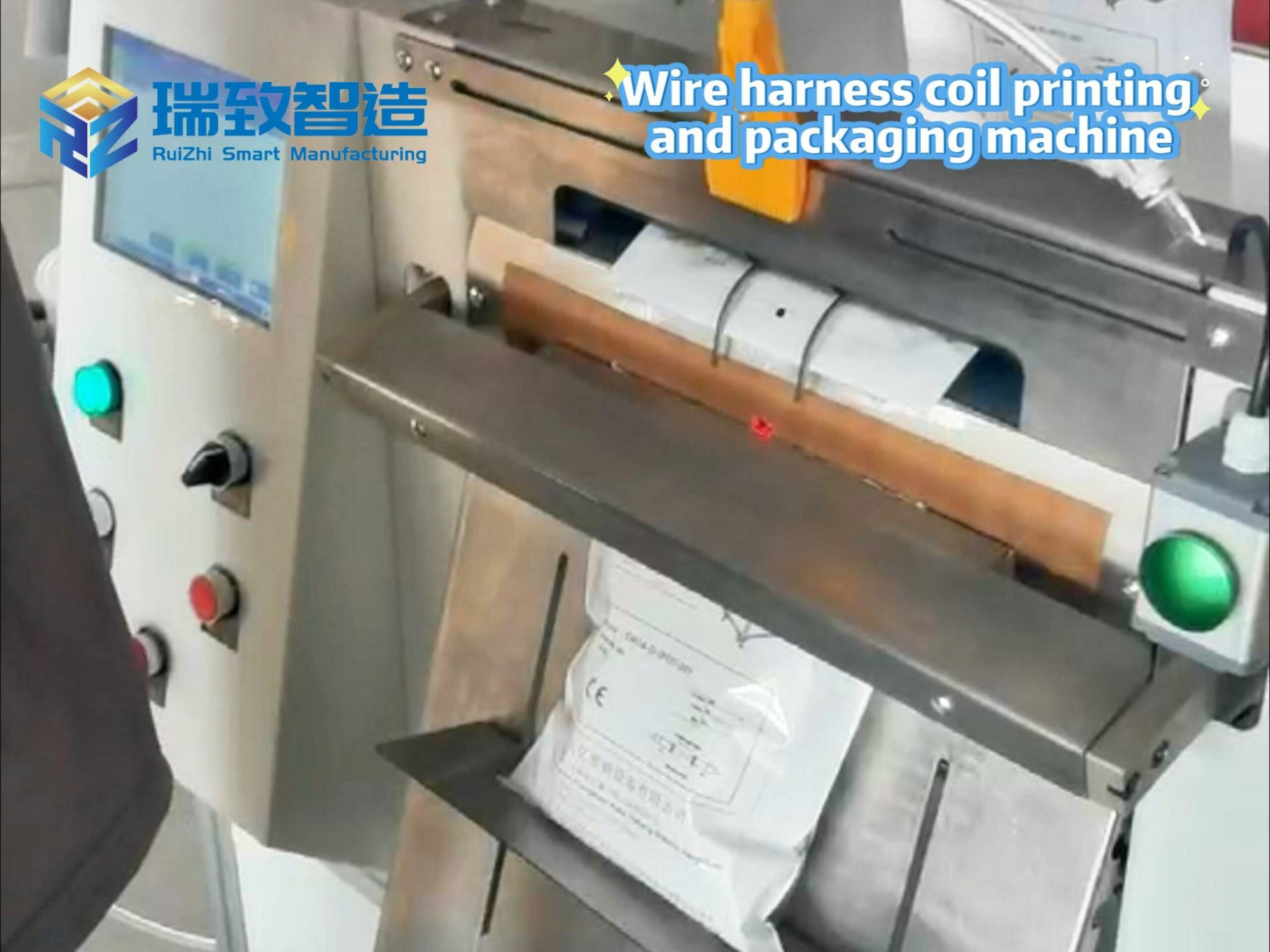

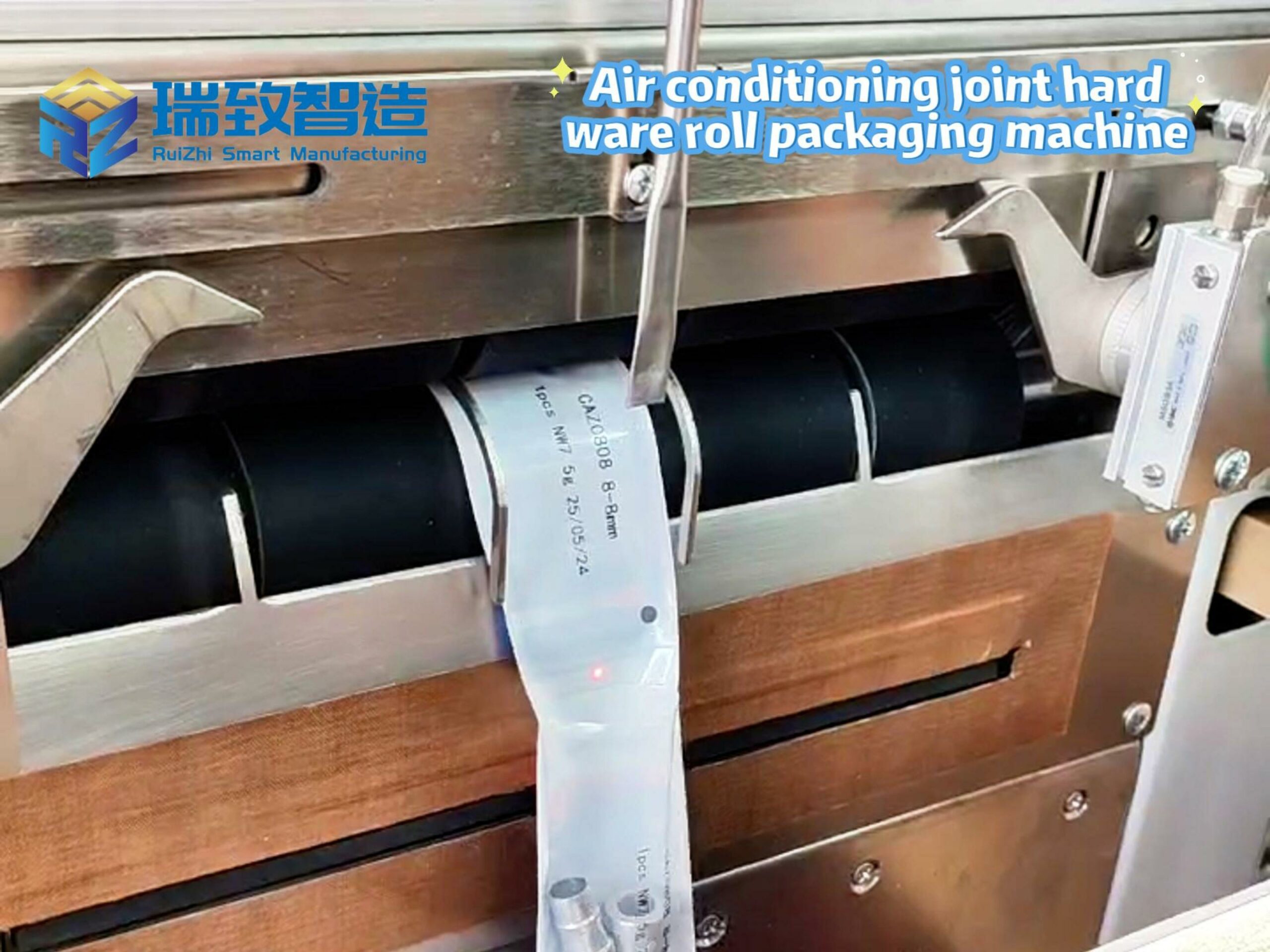

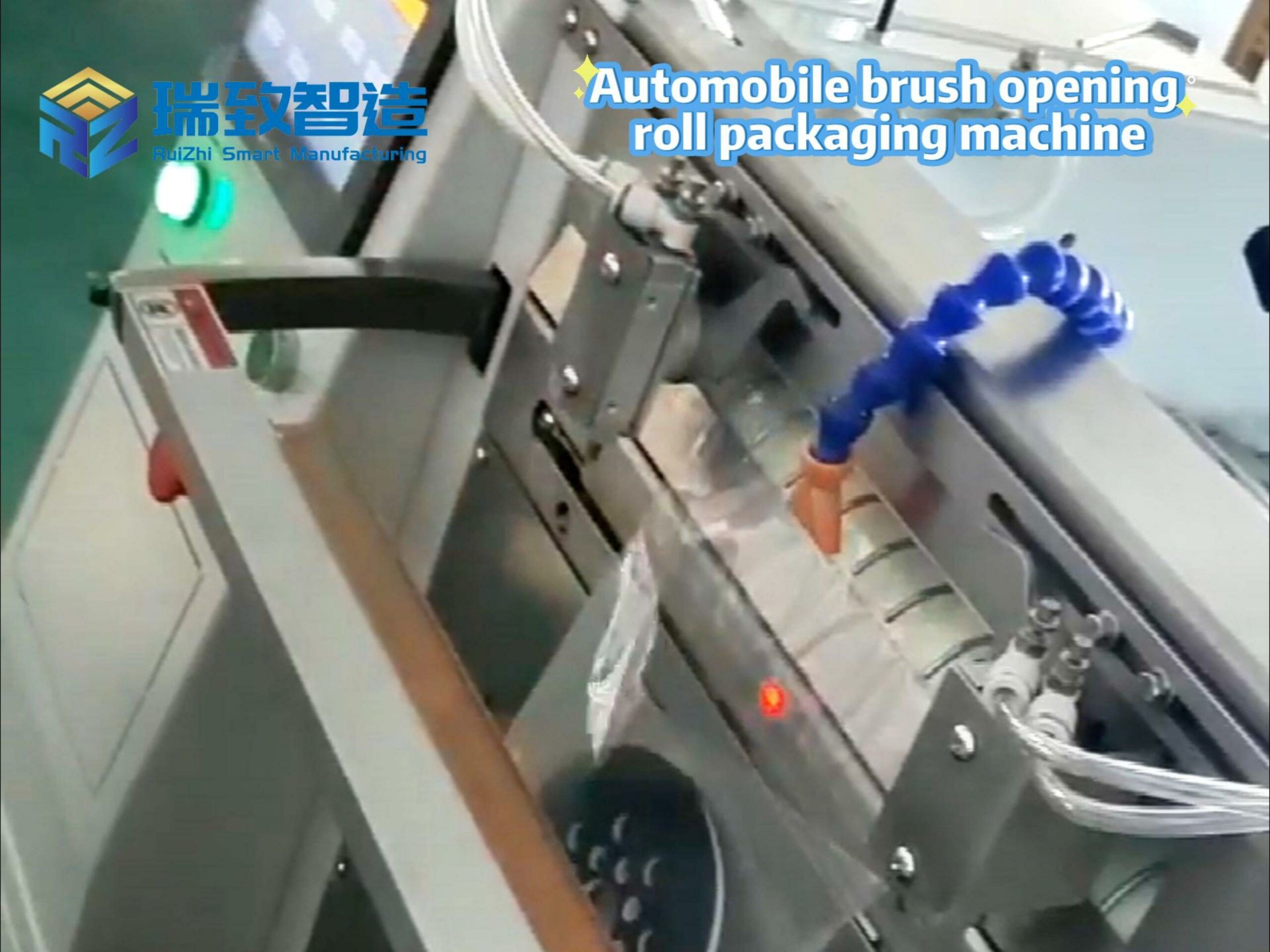

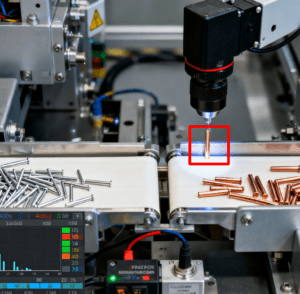

Flexible automatic loading and unloading vibratory feeder: As a key material-supply component in autonomous industrial robotic systems, this feeder removes the rigid limitation of traditional vibratory feeders, where “one type of part requires one set of fixtures.” It integrates 2D vision sensors with adaptive vibration control algorithms. When switching from feeding φ5mm aluminum pins to φ8mm copper pins (common in 3C product assembly), it identifies the part’s size and material by vision, then adjusts the vibration frequency (from 50Hz to 35Hz) and the track baffle position in real time, keeping feeding stable and orderly without manual adjustment. On a laptop keyboard assembly line, for example, the feeder detects abnormal part jams through torque sensors, pauses immediately, adjusts its vibration parameters, and then resumes feeding. Synchronized with the autonomous robotic arm’s picking rhythm, it reduces material-supply interruptions by over 60% and suits the line’s “multi-variety, small-batch” production needs.

Autonomous assembly and inspection: Adapt to the assembly needs of multi-variety parts. For example, an autonomous assembly robot in a consumer electronics factory uses vision cameras to identify different models of mobile phone middle frames and autonomously switches grippers and assembly programs—enabling “one production line to assemble 3–5 models” and shortening the model changeover time from the traditional 2 hours to 5 minutes.

Autonomous equipment inspection: Replace humans in high-risk, high-frequency inspection tasks. For example, an autonomous inspection robot in a chemical plant can move independently in high-temperature, toxic environments (e.g., around reactors), use infrared cameras to detect equipment temperature, and gas sensors to detect leaks. Its inspection efficiency is twice that of humans, and it avoids exposing personnel to hazardous environments.
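The changeover and jam-handling behavior of the flexible vibratory feeder described above can be sketched as a small event-driven controller. Assumptions: the vision system reports material and diameter, a recipe table maps each recognized part to a vibration frequency and baffle position (the 50Hz and 35Hz values come from the text; baffle positions and torque thresholds are hypothetical), and a jam is declared when drive torque exceeds a threshold. The text does not say which parameters are adjusted during a jam, so the sketch only pauses and resumes. `FeederController` and `RECIPES` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class FeedRecipe:
    frequency_hz: float
    baffle_mm: float

# Frequencies follow the example in the text; baffle positions are hypothetical.
RECIPES = {
    ("aluminum", 5.0): FeedRecipe(frequency_hz=50.0, baffle_mm=6.0),
    ("copper", 8.0): FeedRecipe(frequency_hz=35.0, baffle_mm=9.5),
}
JAM_TORQUE_NM = 1.2   # hypothetical: above this, treat the track as jammed
JAM_CLEAR_NM = 0.8    # hypothetical: below this, treat the jam as cleared

class FeederController:
    """Switches feed recipes from vision results and pauses on torque-detected jams."""

    def __init__(self) -> None:
        self.recipe: Optional[FeedRecipe] = None
        self.running = False
        self.jammed = False
        self.events: List[str] = []

    def on_part_recognized(self, material: str, diameter_mm: float) -> None:
        recipe = RECIPES.get((material, diameter_mm))
        if recipe is None:
            # Unknown part: stop instead of feeding with a guessed recipe.
            self.recipe, self.running = None, False
            self.events.append("unknown part: paused")
            return
        if recipe != self.recipe:
            self.recipe = recipe
            self.events.append(
                f"recipe: {recipe.frequency_hz:g} Hz, baffle {recipe.baffle_mm:g} mm")
        self.running = not self.jammed   # never restart into an uncleared jam

    def on_torque_sample(self, torque_nm: float) -> None:
        if self.running and torque_nm > JAM_TORQUE_NM:
            self.running, self.jammed = False, True
            self.events.append("jam: paused")
        elif self.jammed and torque_nm < JAM_CLEAR_NM:
            self.jammed = False
            self.running = self.recipe is not None   # resume only with a valid recipe
            self.events.append("jam cleared")

feeder = FeederController()
feeder.on_part_recognized("aluminum", 5.0)   # 5 mm aluminum pins
feeder.on_torque_sample(1.5)                 # torque spike: jam
feeder.on_torque_sample(0.5)                 # torque back to normal
feeder.on_part_recognized("copper", 8.0)     # changeover to 8 mm copper pins
```

The two torque thresholds form a hysteresis band, so a torque reading hovering near a single limit cannot make the feeder stutter between running and paused.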

Healthcare Sector: Enhancing “Precision and Safety” in Diagnosis and Treatment

The application of autonomous robots in healthcare focuses on using precise control and dynamic adaptation to assist or even partially replace doctors in completing high-difficulty tasks:

Autonomous surgical assistance: For example, an autonomous positioning robot in orthopedic surgery builds a 3D model of the patient’s bones using CT image data and combines it with real-time intraoperative positioning (via an optical tracking system) to autonomously adjust the angle and depth of surgical instruments. This controls the precision error of screw implantation within ±1mm—far better than the ±5mm error of manual operation.

Autonomous ward services: For example, an autonomous delivery robot in a hospital can, based on instructions from the nurse’s station, autonomously transport medicines and consumables from the pharmacy to wards, avoid pedestrians and hospital beds en route, and confirm the identity of the recipient via facial recognition to prevent wrong medicine delivery.

Autonomous rehabilitation assistance: For example, an autonomous rehabilitation robot can collect the patient’s limb movement data via sensors and autonomously adjust training intensity. When a stroke patient undergoes hand rehabilitation training, the robot dynamically adjusts resistance based on the patient’s grasping force—ensuring training effectiveness while avoiding secondary injuries.

Logistics and Public Services Sector: Expanding the Boundaries of “Unmanned Services”

Autonomous robots enable logistics and public services to break free from “human resource and time constraints,” achieving 24/7, full-scenario coverage:

Autonomous warehouse picking: Autonomous picking robots in e-commerce warehouses use visual recognition and AI algorithms to autonomously locate products on shelves and plan optimal picking paths. Their picking efficiency reaches 120 items per hour—three times that of humans—with an error rate of less than 0.1%.

Urban autonomous delivery: For example, autonomous delivery robots in the food delivery industry can travel on urban sidewalks, use LiDAR for obstacle avoidance and vision cameras to recognize traffic lights, realizing unmanned delivery “from merchant to community entrance” and alleviating the “last-mile” delivery pressure during peak hours.

Autonomous agricultural operations: For example, an autonomous plant protection robot can identify crop growth conditions (via vision cameras) and detect soil moisture (via sensors) to autonomously adjust pesticide spray volume and driving routes. This reduces pesticide usage by 20% and avoids the risk of pesticide exposure for manual sprayers.
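Picking-path planning for warehouse robots is at heart a traveling-salesman-style problem, which is expensive to solve exactly. A common lightweight heuristic is nearest-neighbor ordering: always walk to the closest remaining pick location. The sketch below assumes straight-line distances on a flat floor plan (real aisles constrain movement), the SKU names and coordinates are hypothetical, and it is not necessarily the method used by the picking robots described above.

```python
import math

def nearest_neighbor_order(start, locations):
    """Greedy pick order: always go to the closest remaining location.

    locations maps SKU -> (x, y). This is a heuristic; it does not guarantee
    the shortest possible tour.
    """
    remaining = dict(locations)        # copy so the caller's dict is untouched
    here = start
    order = []
    while remaining:
        sku = min(remaining, key=lambda s: math.dist(here, remaining[s]))
        order.append(sku)
        here = remaining.pop(sku)      # walk to that shelf
    return order

# Hypothetical pick list (coordinates in metres on the warehouse floor plan).
picks = {"SKU-17": (10.0, 0.0), "SKU-03": (2.0, 1.0), "SKU-42": (5.0, 5.0)}
order = nearest_neighbor_order((0.0, 0.0), picks)
```

Nearest-neighbor runs in O(n²) time and usually gives a reasonable tour; production systems often refine it with local improvements such as 2-opt or replace it with solvers that account for aisle layouts.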

Conclusion: Autonomous Robots—Evolution from “Tools” to “Collaborative Partners”

The value of autonomous robotic systems lies not only in “replacing humans in repetitive, high-risk tasks” but also in becoming “collaborative partners” of humans through “dynamic adaptation and autonomous decision-making.” In industry, they collaborate with workers to complete multi-variety production; in healthcare, they assist doctors in improving diagnosis and treatment precision; in daily life, they provide more convenient services for people.

With continued improvements in sensor precision and cost (e.g., LiDAR costs decreasing by 30% annually), optimizations in AI algorithms (e.g., decision response times shortened to within 1 second), and advances in safety control technologies, the application scenarios of autonomous robots will expand further: from indoor factories to complex outdoor environments, and from single tasks to multi-task collaboration. In the future, autonomous robots will no longer be “isolated machines” but integral components of intelligent ecosystems, driving deep transformations in social production and everyday life.