The Challenges of Data Centers in the AI Era

With the continued development and large-scale application of artificial intelligence, data centers around the world are facing unprecedented operational pressure. Training AI models and running inference on them require massive computing resources, which directly drive up energy consumption and cooling demand. Data centers currently account for about 1%–2% of global electricity consumption, and Goldman Sachs predicts that this share could rise to 4% by the end of this decade.

This growth stems not only from the explosive demand for AI computing but also from the industry's deepening dependence on high-performance GPU clusters. To fit more computing nodes into limited space, data centers are moving toward higher-density layouts, and the resulting challenges of energy consumption, heat generation, and cooling are becoming increasingly severe.

At the same time, regulators and the public worldwide are paying closer attention to the environmental impact of data centers. Some regions have begun restricting or rejecting new data center projects, citing potential strain on local electricity and water supplies and rising energy costs. Achieving more efficient and sustainable operations while meeting AI computing demand has become a core issue that the data center industry must confront directly.

The Evolution of Computing Platforms: Balancing Performance and Energy Efficiency

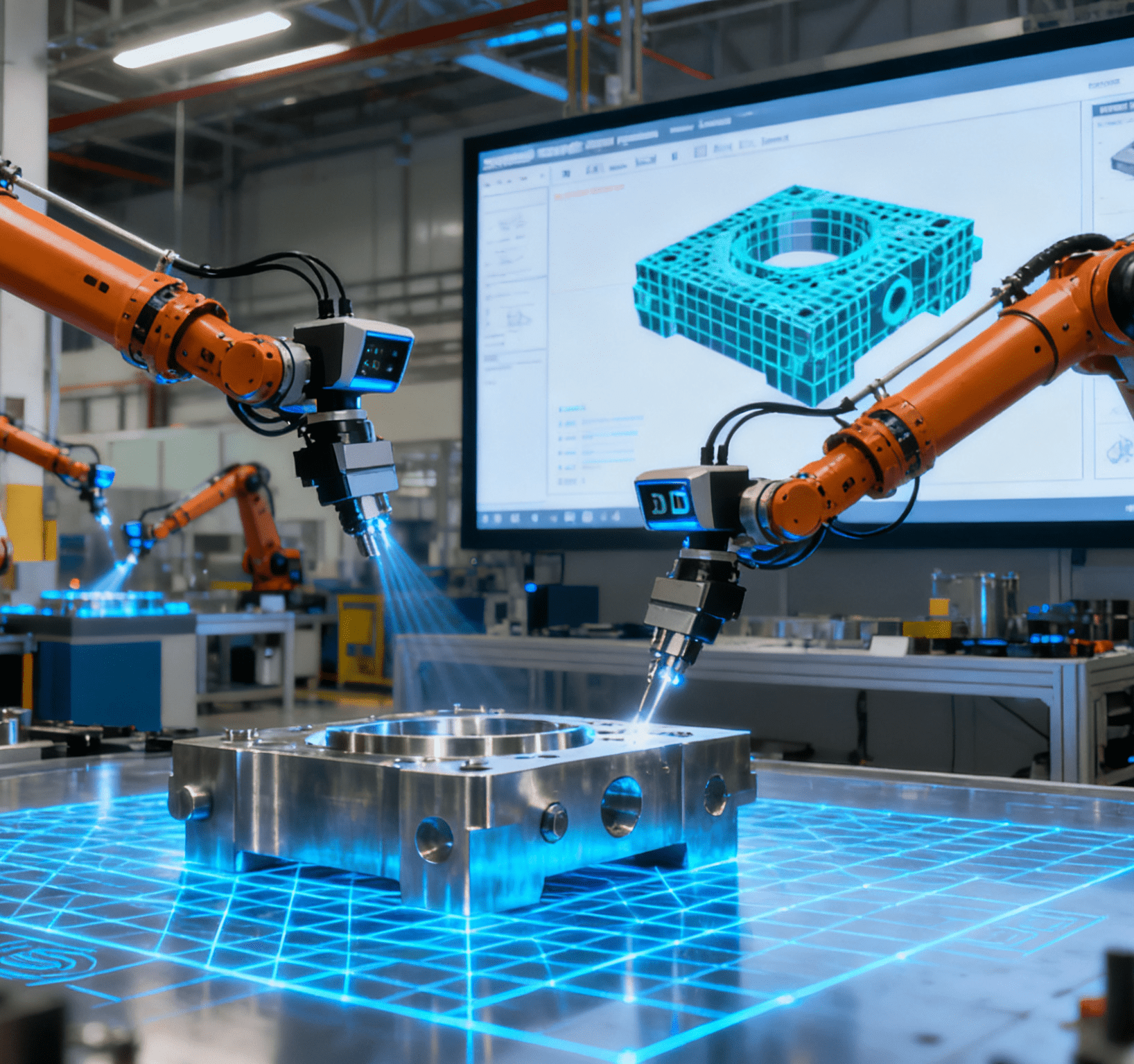

The primary way to improve the overall efficiency and sustainability of data centers is to optimize their computing core. GPUs, the key driver of AI computing, have entered a new stage of development that emphasizes both high performance and energy efficiency. New-generation GPUs such as those based on NVIDIA's Blackwell architecture significantly improve energy efficiency while maintaining highly parallel computing performance, and manufacturers such as AMD are also launching energy-efficient chips optimized for AI workloads.

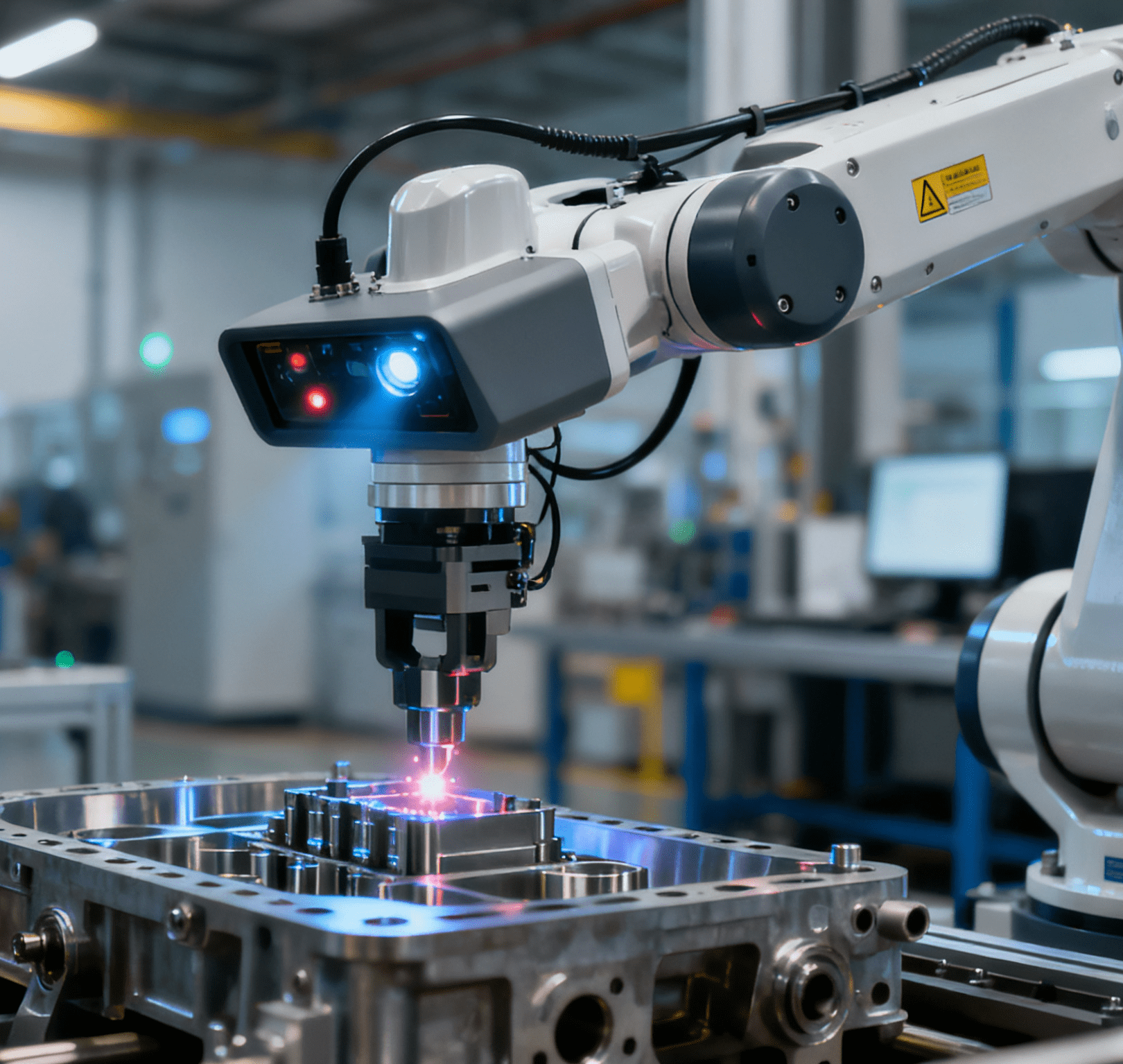

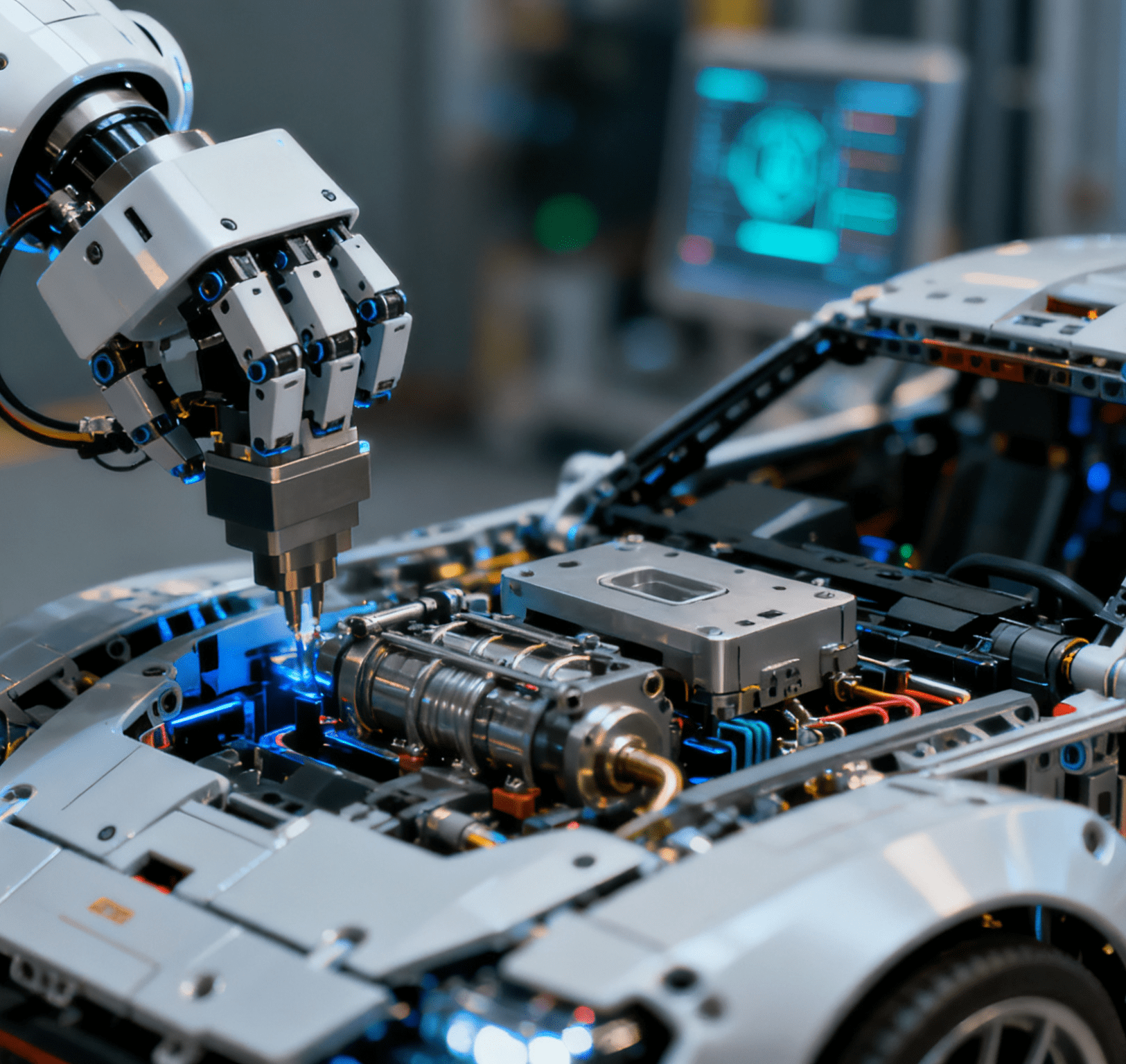

However, better hardware is only the starting point. When evaluating computing platforms, data centers are paying increasing attention to metrics such as performance per watt and embedded carbon. Industry benchmarks such as SPECpower have become important references for measuring server energy efficiency. By incorporating energy-efficiency and sustainability metrics into procurement decisions, enterprises can reduce long-term operating costs and also encourage chip manufacturers to keep strengthening green innovation in future product designs. Such high-efficiency computing platforms can also support precision manufacturing. For instance, the AI computing resources of data centers can optimize the production parameters of biological indicator assembly machines, which require ultra-precise control of the assembly process and strict quality monitoring. Adjusting assembly speed and precision in real time based on production data can raise product yield while reducing the energy consumption of production lines.
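The performance-per-watt idea behind SPECpower can be illustrated with a short calculation. This sketch follows the way SPECpower_ssj2008 summarizes a run into a single overall ssj_ops/watt figure. Throughput is summed over the graduated target-load levels (100% down to 10%). That total is divided by the sum of average power over the same levels plus active idle. The measurement values below are hypothetical placeholders, not results for any real server. The `LoadLevel` type and the function name are illustrative.

```python
from dataclasses import dataclass


@dataclass
class LoadLevel:
    """One measurement interval: target load, throughput, and average power."""
    target_load_pct: int  # 100, 90, ..., 10; 0 marks active idle
    ssj_ops: float        # throughput at this level (0 at active idle)
    avg_watts: float      # average power draw at this level


def overall_ssj_ops_per_watt(levels: list[LoadLevel]) -> float:
    """Sum of throughput over all levels divided by the sum of average power.

    Active idle contributes power but no throughput, so servers that draw a
    lot of power while doing nothing score lower.
    """
    total_ops = sum(level.ssj_ops for level in levels)
    total_watts = sum(level.avg_watts for level in levels)
    if total_watts <= 0:
        raise ValueError("total power must be positive")
    return total_ops / total_watts


# Hypothetical server: throughput scales linearly with load, and power rises
# from 60 W at idle to 300 W at full load (not real benchmark results).
PEAK_OPS = 1_000_000
levels = [
    LoadLevel(pct, PEAK_OPS * pct / 100, 60 + 2.4 * pct)
    for pct in range(100, 0, -10)
]
levels.append(LoadLevel(0, 0.0, 60.0))  # active idle interval

print(f"overall ssj_ops/watt: {overall_ssj_ops_per_watt(levels):,.0f}")
```

Including active idle in the denominator is what makes the metric sensitive to idle power. Two servers with identical peak throughput can score quite differently if one draws much more power at low utilization.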

Cooling Technology Innovations: Addressing the Heat Load of High-Density AI Computing

The cooling system accounts for a major share of the energy consumption of AI data centers. Traditional compression refrigeration systems are reliable but relatively inefficient, and they are associated with significant greenhouse gas emissions. According to the International Energy Agency (IEA), data centers and data transmission networks account for about 1% of global energy-related greenhouse gas emissions.

To handle the high heat density of AI clusters, data centers are accelerating the adoption of more efficient cooling technologies:

– High-temperature operation and free cooling: Reducing energy consumption by using cold outside air;

– Hot/cold aisle containment: Optimizing airflow management to reduce wasted cooling;

– Liquid cooling (direct-to-chip/immersion cooling): Bringing coolant directly to the chip through cold plates, or immersing entire servers in coolant, which significantly improves heat-transfer efficiency.
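The benefit of raising operating temperatures for free cooling can be sketched by counting how many hours per year the outside air is cold enough to cool the facility without running compressors. This sketch assumes a deliberately simple rule: free cooling is available when the outdoor temperature plus a fixed approach temperature (the rise across heat exchangers) is at or below the supply-air setpoint. The synthetic climate, the 4 °C approach, and the function name are all hypothetical. Real economizer control also considers humidity and partial free-cooling modes.

```python
import math


def free_cooling_hours(outdoor_temps_c, supply_setpoint_c, approach_c=4.0):
    """Count hours in which outside air alone can meet the supply-air setpoint.

    Assumes free cooling is possible whenever
    outdoor temperature + approach <= supply setpoint.
    """
    return sum(1 for t in outdoor_temps_c if t + approach_c <= supply_setpoint_c)


# Hypothetical synthetic climate: a yearly sine wave between 0 °C and 30 °C.
HOURS_PER_YEAR = 8760
temps = [
    15 + 15 * math.sin(2 * math.pi * h / HOURS_PER_YEAR)
    for h in range(HOURS_PER_YEAR)
]

# A higher supply setpoint (high-temperature operation) unlocks more free-cooling hours.
for setpoint in (18, 22, 27):
    hours = free_cooling_hours(temps, setpoint)
    print(f"supply setpoint {setpoint} °C -> {hours} free-cooling hours "
          f"({hours / HOURS_PER_YEAR:.0%} of the year)")
```

Under these assumptions, each degree added to the supply setpoint converts more of the year into compressor-free hours. This is why high-temperature operation and free cooling are usually adopted together.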

Especially in AI training scenarios, liquid cooling is becoming the mainstream approach. Compared with air cooling, it supports higher heat densities and reduces the size and energy consumption of cooling equipment, which lowers the overall carbon footprint.
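The reason liquid handles higher heat densities follows from the heat-transport relation Q = ρ · V̇ · c_p · ΔT. For a given heat load Q and coolant temperature rise ΔT, the required volumetric flow V̇ is inversely proportional to the coolant's volumetric heat capacity ρ · c_p. The sketch below compares air and water using approximate room-temperature property values. The 100 kW rack load and 10 K temperature rise are hypothetical.

```python
# Approximate fluid properties near room temperature (textbook values).
AIR_DENSITY = 1.2       # kg/m^3
AIR_CP = 1005.0         # J/(kg*K)
WATER_DENSITY = 997.0   # kg/m^3
WATER_CP = 4186.0       # J/(kg*K)


def required_flow_m3_per_s(heat_w, density, cp, delta_t_k):
    """Volumetric flow needed to carry heat_w with a coolant rise of delta_t_k.

    Rearranged from Q = density * flow * cp * delta_t.
    """
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_w / (density * cp * delta_t_k)


RACK_HEAT_W = 100_000   # hypothetical 100 kW AI rack
DELTA_T_K = 10.0        # hypothetical coolant temperature rise

air_flow = required_flow_m3_per_s(RACK_HEAT_W, AIR_DENSITY, AIR_CP, DELTA_T_K)
water_flow = required_flow_m3_per_s(RACK_HEAT_W, WATER_DENSITY, WATER_CP, DELTA_T_K)

print(f"air:   {air_flow:.2f} m^3/s")
print(f"water: {water_flow * 1000:.2f} L/s")
print(f"air needs ~{air_flow / water_flow:,.0f}x the volumetric flow of water")
```

With these values, air needs several thousand times the volumetric flow of water to remove the same heat. That gap is what lets liquid-cooled racks reach densities that fans and air ducts cannot practically serve.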

The Remodeling of Power Systems: From Energy Storage to Resilience

The power system is the foundation of stable data center operation. An uninterruptible power supply (UPS) provides critical short-term power when the utility grid fluctuates or fails, allowing servers and cooling systems to transition smoothly to standby generators.
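The UPS's job of bridging the gap until standby generators take over can be expressed as a simple energy balance. The battery must carry the critical load for the bridge time, with allowances for inverter losses and a design margin. The sketch below uses hypothetical figures: a 2 MW load, a 60-second bridge time, 95% inverter efficiency, and a 1.25 design margin. Real UPS sizing also accounts for battery aging, temperature, and discharge-rate effects, which this sketch ignores.

```python
def required_battery_energy_kwh(load_kw, bridge_seconds,
                                inverter_efficiency=0.95, design_margin=1.25):
    """Energy the battery must supply to carry load_kw for bridge_seconds.

    DC energy drawn from the battery = AC load energy / inverter efficiency,
    then scaled by a design margin for uncertainty.
    """
    if not 0 < inverter_efficiency <= 1:
        raise ValueError("inverter_efficiency must be in (0, 1]")
    load_energy_kwh = load_kw * bridge_seconds / 3600.0
    return load_energy_kwh / inverter_efficiency * design_margin


# Hypothetical: 2 MW critical load, 60 s until generators pick up the load.
energy = required_battery_energy_kwh(load_kw=2000, bridge_seconds=60)
print(f"battery energy needed: {energy:.1f} kWh")
```

With a bridge time this short, the energy figure is modest compared with the 2 MW the battery must deliver. In practice, the battery string is therefore often sized by its ability to discharge at that power, which is why power density matters for UPS batteries.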

Traditional UPS systems commonly use valve-regulated lead-acid (VRLA) batteries, but this technology is limited in energy density, service life, and environmental impact. In recent years, nickel-zinc (NiZn) batteries have gradually emerged as a preferred option for new-generation data centers because of their higher power density, smaller footprint, and better environmental characteristics.

Compared with VRLA and lithium-ion batteries, nickel-zinc batteries offer the following advantages:

– Higher power density, delivering high power output from a compact footprint (and higher energy density than lead-acid batteries);

– Safer material sourcing and easy recyclability;

– Significantly lower greenhouse gas emissions: approximately 25% of those of lead-acid batteries and 16% of those of lithium-ion batteries;

– A smaller water and energy footprint.

In energy-intensive AI data centers, a smaller and more efficient battery system frees up space for computing resources while also improving overall power-supply reliability and response speed.

Sustainable Strategies for the Future

As AI continues to reshape data center architecture, the energy and efficiency pressures on the industry will intensify further. Enterprises need to re-examine their infrastructure design systematically and pursue a comprehensive upgrade of cooling, power management, and hardware selection.

Future data centers should continue to evolve in the following directions:

– Modular and intelligent management: AI-driven energy scheduling and temperature-control optimization enable real-time load balancing and predictive maintenance;

– Green energy integration: Increasing the share of renewable energy to build a low-carbon energy ecosystem;

– Full-life-cycle carbon management: Tracking and quantifying carbon emissions from equipment manufacturing through decommissioning;

– Resilience and redundancy optimization: Ensuring highly reliable operation even during energy shortages or extreme weather events.
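The energy-scheduling and green-energy directions above can be illustrated with a very simple carbon-aware scheduler. It moves deferrable batch work, such as non-urgent training or batch inference jobs, into the hours with the lowest forecast grid carbon intensity. This is a greedy sketch built on assumed inputs: the hourly forecast, the job list, the one-job-per-hour capacity, and all names are hypothetical. A production scheduler would also handle deadlines, job dependencies, and thermal limits.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    energy_kwh: float  # energy the job consumes during its one-hour run


def schedule_low_carbon(jobs, carbon_forecast_g_per_kwh, slots_per_hour):
    """Greedily assign one-hour jobs to the lowest-carbon hours.

    Hours are filled cleanest-first and the most energy-hungry jobs are placed
    first, so the largest jobs land in the cleanest hours.
    Returns (assignment of job name -> hour index, total grams of CO2).
    """
    hours = sorted(range(len(carbon_forecast_g_per_kwh)),
                   key=lambda h: carbon_forecast_g_per_kwh[h])
    capacity = {h: slots_per_hour for h in hours}
    assignment = {}
    total_g = 0.0
    for job in sorted(jobs, key=lambda j: j.energy_kwh, reverse=True):
        hour = next((h for h in hours if capacity[h] > 0), None)
        if hour is None:
            raise ValueError(f"not enough capacity for job {job.name}")
        capacity[hour] -= 1
        assignment[job.name] = hour
        total_g += job.energy_kwh * carbon_forecast_g_per_kwh[hour]
    return assignment, total_g


# Hypothetical 6-hour forecast of grid carbon intensity (gCO2/kWh).
forecast = [420.0, 380.0, 210.0, 150.0, 180.0, 350.0]
jobs = [Job("train-a", 500.0), Job("train-b", 300.0), Job("batch-c", 120.0)]

assignment, total_g = schedule_low_carbon(jobs, forecast, slots_per_hour=1)
naive_g = sum(job.energy_kwh * forecast[h] for h, job in enumerate(jobs))
print("assignment:", assignment)
print(f"scheduled: {total_g / 1000:.1f} kg CO2, run-immediately: {naive_g / 1000:.1f} kg CO2")
```

In this simplified case, every job runs for one hour and each hour has the same number of slots. Pairing the largest jobs with the cleanest hours then minimizes total emissions. Once deadlines or variable job lengths enter the picture, a real scheduler would need an optimization solver or a learned policy instead.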

Conclusion

Artificial intelligence has created unprecedented demand for computing power and has driven profound changes in data center technology. From efficient GPUs to liquid cooling, and from new energy-storage batteries to intelligent energy management, the industry is moving toward a greener, more efficient, and more sustainable future. Facing the dual pressures of energy consumption and environmental impact, data centers can maintain resilience and competitiveness in the AI era only through continuous innovation in technology and strategy.